Mastering the operational model challenge for distributed AI/ML infrastructure

Jensen Huang’s approach to running NVIDIA as its CEO is deeply rooted in a core belief in continuous innovation and solving problems that conventional computing cannot address.

This philosophy will drive NVIDIA to continue to break new frontiers in AI and deliver innovations that we may not even have heard of yet.

The ultimate AI supercomputer unveiled at NVIDIA GTC

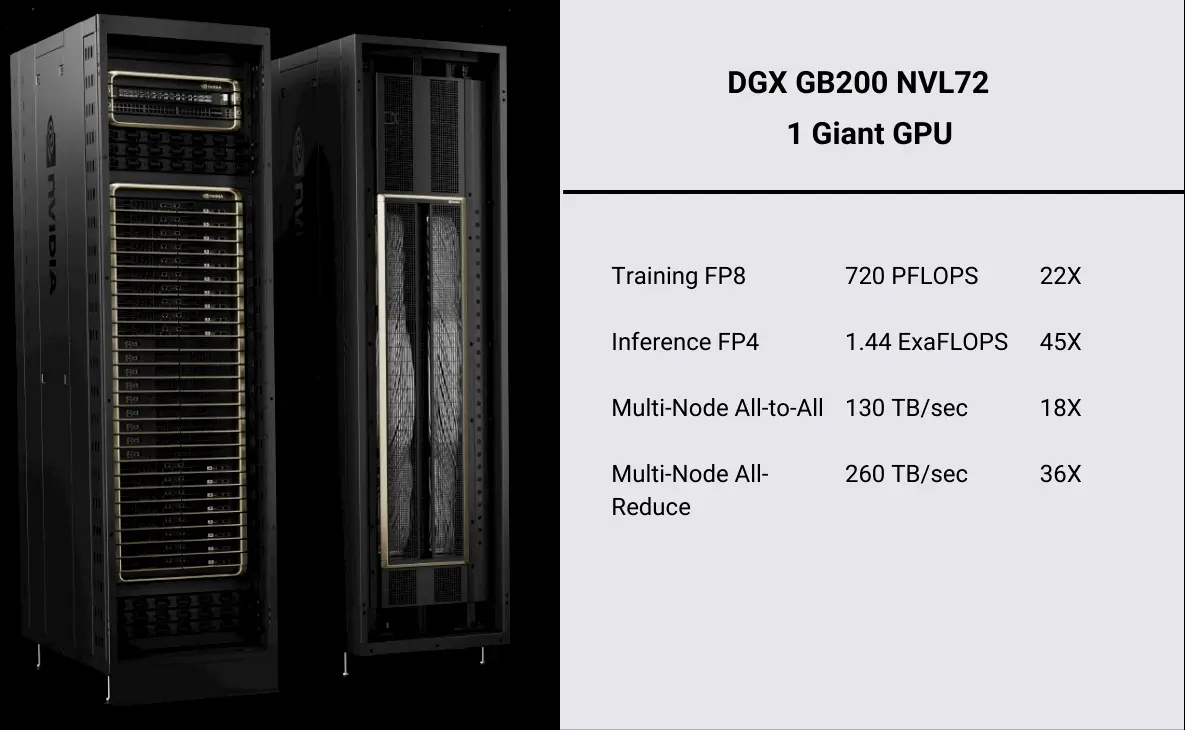

The DGX GB200 NVL72 is probably the fastest AI system in a single rack, boasting 1.4 exaflops of AI performance and 30 TB of high-speed memory, and delivering 30X faster real-time inference for trillion-parameter LLMs.

Its single-rack design houses 72 of NVIDIA’s latest Blackwell GPUs and 36 Grace CPUs, packaged as 36 GB200 Grace Blackwell Superchips, as well as 2 miles of cabling and 900 GB/s network links.

AI/ML infrastructure will be everywhere

Certain industries have unique workload characteristics that make private clouds, edge, or colocation facilities more appropriate than public cloud offerings.

Organizations in fields such as drug discovery, genomics research, autonomous driving, oil and gas exploration, SASE edge security, and telco 5G/6G operations often run specialized workloads. When run in regional data centers, these workloads demand consistent, high-performance computing resources.

Scientific research, AI/ML model training, and data-intensive processing tasks in these fields can often be managed more cost-effectively in private cloud environments or at the edge, closer to where the data is generated.

The operational challenges of AI/ML infrastructure

Data scientists, researchers, and AI/ML teams require flexible, high-performance infrastructure to support the full lifecycle of development – from data experimentation and model training to inferencing at scale. However, managing the underlying compute, storage, and networking resources across a distributed footprint presents immense operational hurdles.

ML and data teams’ requirements

Data scientists and researchers want to focus on modeling and application development rather than dealing with infrastructure complexity. They need on-demand access to scale resources for intensive data processing, model training, and inferencing workloads without operational overhead.

MLOps and DevOps demands

MLOps and DevOps teams, on the other hand, require full control over the on-premises AI/ML infrastructure, along with high availability and fault tolerance. Ensuring reliable model deployment, continuous delivery, and governance is critical but extremely difficult without automation.

Operational consistency challenges

Spanning this AI/ML infrastructure across core data centers, public clouds, and edge locations significantly compounds the operational burden. Maintaining consistency in deployments, monitoring, troubleshooting, and upgrades becomes exponentially more complex in a heterogeneous distributed environment.

Streamlined operations needed

Achieving a cloud operational model for distributed AI/ML infrastructure

To effectively operationalize AI/ML infrastructure across a distributed footprint spanning core data centers, public clouds, and the edge, enterprises require an operational model with the following characteristics:

Kubernetes as a consistent compute fabric

Adopting Kubernetes as the foundational compute fabric provides a consistent abstraction layer with open APIs for integrating storage, networking, and other infrastructure services across the distributed footprint. This establishes a uniform substrate for deploying AI/ML workloads. Kubernetes is a necessary foundation, but not sufficient for a complete operational model.

- Read more about Kubernetes for AI/ML workloads.
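
As a minimal sketch of what a uniform substrate can look like in practice, the Python snippet below builds the same GPU training Pod manifest for several locations. The site names, container image, and the `gpu_pod_manifest` helper are hypothetical and exist only for illustration; `nvidia.com/gpu` is the extended resource name exposed by the NVIDIA device plugin for Kubernetes, which is assumed to be installed on every cluster.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int, site: str) -> dict:
    """Build a Kubernetes Pod manifest that requests `gpus` GPUs.

    Hypothetical helper: the same function is reused for every site, so the
    workload definition stays identical across core, colo, and edge clusters.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"site": site}},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # Assumes the NVIDIA device plugin advertises this resource.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical site names and image, for illustration only.
SITES = ["core-dc", "colo-west", "edge-01"]
IMAGE = "registry.example.com/trainer:1.0"

manifests = {
    site: gpu_pod_manifest(f"train-{site}", IMAGE, gpus=1, site=site)
    for site in SITES
}

if __name__ == "__main__":
    # JSON manifests can be applied with `kubectl apply -f <file>`.
    print(json.dumps(manifests["edge-01"], indent=2))
```

Only the name and the site label differ between locations; the container spec and the resource request are the same everywhere, which is the consistency the compute fabric is meant to provide.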

Closed-loop automation

Public cloud providers have made significant investments in developing highly automated closed-loop systems for the full lifecycle management of their infrastructure and services. Every aspect – from initial provisioning and configuration to continuous monitoring, troubleshooting, and seamless upgrades – is driven through intelligent automation.

Manual operations that rely on people executing scripts and procedures may suffice at a small scale, but quickly become inefficient, inconsistent, and error-prone as the infrastructure footprint expands across distributed data centers, public clouds, and edge locations.

Enterprises cannot realistically employ and coordinate armies of operations personnel for tasks like scaling resources, applying patches, recovering from outages, and managing complex redundancy scenarios.
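
The closed-loop idea can be sketched as a reconciliation step: compare the desired state with what each site reports, and derive corrective actions without a human in the loop. The Python below is an illustrative sketch under stated assumptions, not any vendor's implementation; `ClusterState`, `plan_actions`, `reconcile`, and the site names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class ClusterState:
    """Hypothetical, simplified view of a cluster at one site."""
    version: str
    nodes: int


def plan_actions(desired: ClusterState, observed: ClusterState) -> List[str]:
    """Return the corrective actions that move `observed` toward `desired`."""
    actions: List[str] = []
    if observed.version != desired.version:
        actions.append(f"upgrade {observed.version} -> {desired.version}")
    if observed.nodes < desired.nodes:
        actions.append(f"add {desired.nodes - observed.nodes} node(s)")
    elif observed.nodes > desired.nodes:
        actions.append(f"remove {observed.nodes - desired.nodes} node(s)")
    return actions


def reconcile(desired: ClusterState,
              observed_by_site: Dict[str, ClusterState]) -> Dict[str, List[str]]:
    """One pass of the loop: plan actions for every site that has drifted."""
    plans: Dict[str, List[str]] = {}
    for site, observed in observed_by_site.items():
        actions = plan_actions(desired, observed)
        if actions:
            plans[site] = actions
    return plans


if __name__ == "__main__":
    desired = ClusterState(version="1.29", nodes=3)
    observed = {
        "core-dc": ClusterState(version="1.29", nodes=3),  # already converged
        "edge-01": ClusterState(version="1.28", nodes=2),  # drifted
    }
    for site, actions in reconcile(desired, observed).items():
        print(site, actions)
```

In a real system this pass would run continuously and the planned actions would be executed and re-verified. The sketch stops at planning to keep the comparison logic visible.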

Platform engineering at scale with a cloud management plane

A comprehensive operational and management plane is needed, possessing the following characteristics:

- Reduced maintenance costs by aggregating all distributed infrastructure behind a single management pane.

- Rapid and repeatable remote deployments to hundreds or thousands of distributed cloud locations with consistent template-based configuration and policy control.

- An operational SLA through automated health monitoring, runbook-driven resolution of common problems, and streamlined upgrades.
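
Runbook-driven resolution, as described in the list above, can be pictured as a lookup from a known alert type to an automated remediation, with a support ticket as the fallback for anything unrecognized. The following Python is a hedged sketch; the alert names, remediation functions, and `handle_alert` are hypothetical and do not come from any specific product.

```python
from typing import Callable, Dict


def restart_agent(site: str) -> str:
    """Hypothetical remediation: restart the node agent at a site."""
    return f"{site}: restarted node agent"


def rotate_certs(site: str) -> str:
    """Hypothetical remediation: rotate expiring certificates at a site."""
    return f"{site}: rotated certificates"


# Map of known alert types to automated runbooks (illustrative names).
RUNBOOKS: Dict[str, Callable[[str], str]] = {
    "agent_down": restart_agent,
    "cert_expiring": rotate_certs,
}


def handle_alert(site: str, alert: str) -> str:
    """Run the matching runbook, or escalate to a ticket if none exists."""
    runbook = RUNBOOKS.get(alert)
    if runbook is None:
        return f"{site}: opened ticket for '{alert}'"
    return runbook(site)


if __name__ == "__main__":
    print(handle_alert("edge-01", "agent_down"))
    print(handle_alert("edge-02", "disk_latency"))
```

Keeping the runbooks in one table means a fix added once is available to every location managed through the same plane.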

Continuous operational improvement

The operational model must be able to improve and add new capabilities over time through a virtuous cycle of operational data, analytics, and continuous integration. Ad-hoc scripting approaches, by contrast, rapidly become stale and obsolete.

Enabling collaborative multi-stakeholder operations for AI/ML infrastructure

To enable a collaborative operating model, organizations need an open platform that provides a clear separation of concerns, with visibility, access controls, and automation capabilities tailored to each stakeholder’s needs.

Comprehensive telemetry, observability, and AIOps-driven analytics are critical for stakeholders to efficiently triage and resolve issues through a cohesive support experience. Trusted governance policies that span the hybrid infrastructure are also required for compliance and enabling cross-functional cooperation.

By designing for multi-tenant, multi-cluster operations from the outset, the platform can foster seamless collaboration across this ecosystem of internal and external entities.

Unlocking the potential of distributed AI/ML infrastructure

To truly realize the potential of distributed AI infrastructure, the industry must address the operational model challenge, allowing enterprises, independent software vendors (ISVs), and managed service providers (MSPs) to provide benefits on par with public clouds.

By embracing such an operational model, organizations can harness the power of AI/ML workloads on-premises or in colocation facilities without the complexities and inefficiencies associated with manual management. They can achieve the scalability, reliability, and cost-effectiveness needed to compete in an increasingly AI-driven world.

Let Platform9’s Always-On Assurance™ transform your cloud native management

Platform9’s mature management plane has been refined over 1200+ person-years of engineering using the open source technologies Kubernetes, KubeVirt, and OpenStack. We combine a SaaS management plane with a finely tuned Proactive-Ops methodology to offer:

- Infrastructure management anywhere: cloud, on-premises, edge

- Comprehensive 24/7 remote monitoring

- Automated support ticket generation and alerting

- Proactive troubleshooting and resolution of customer issues

- Guaranteed operational SLAs with uptime monitoring and reporting

- Continuous visibility and control for customers

Platform9 resolves 97.2% of issues before customers even notice a cluster problem. Experience unmatched efficiency, reliability, uptime, and support in your infrastructure modernization journey. Learn more.