Configuring for Production | Platform9 Managed OpenStack

This document describes the steps needed to plan and prepare your Platform9 Managed OpenStack production environment. We recommend following these guidelines once you move past the proof-of-concept (POC) phase and are ready to deploy Platform9 in production.

Whitelisting access to your Platform9 Managed OpenStack

By default, your Platform9 account is accessible over the public internet. You can restrict access so that the account is reachable only from IP address ranges that belong to your organization. To enable this additional filtering, contact Platform9 support and provide the IP address ranges from which access should be allowed.

Compute and Memory

Compute hosts should meet at least the following requirements to operate Platform9.

- CPU: AMD Opteron Valencia / Intel Xeon Westmere (or newer)

- Memory: 16GB RAM

- Network: (2) 1Gbps bonded NICs (LACP)

- Boot disk: 20GB

- File system: Ext3, Ext4

Note: Disks used for virtual machine storage are supported with the following file systems: Ext3, Ext4, GFS, GFS2, NFS, XFS, GlusterFS, or CephFS. The space required for virtual machine storage varies widely between organizations depending on the size of the VMs created, over-provisioning ratios, and actual disk utilization per VM, so Platform9 makes no recommendation on this value.

Resource over-provisioning

OpenStack places virtual machines on available hosts so that each VM gets a fair share of available resources. Resource overcommitment is a technique by which virtual machines share the same physical CPU and memory, and OpenStack placement uses it to reduce cost. It is important to account for this behavior when planning the compute infrastructure that backs your OpenStack deployment.

Default overcommit ratios

- CPU: 16 virtual CPUs per CPU core (16:1)

- Memory: 150% of available memory (1.5:1)

Size your hosts according to the number of VMs you plan to run on Platform9 and the level of resource overcommitment you are comfortable with. For example, if you plan to deploy 50 VMs, each with 1 vCPU and 2GB of memory, and want 5x CPU overcommitment but no memory overcommitment, the hosts' combined resources should total 10 CPU cores (50 vCPUs / 5) and 100GB of memory (50 x 2GB).
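
The sizing arithmetic above can be sketched as a small helper. This is only an illustration: the function name `required_capacity` and its parameters are hypothetical and not part of any Platform9 or OpenStack API, and the defaults simply mirror the default overcommit ratios listed earlier.

```python
import math


def required_capacity(vm_count, vcpus_per_vm, mem_gb_per_vm,
                      cpu_overcommit=16.0, mem_overcommit=1.5):
    """Return (physical cores, physical memory in GB) needed across all hosts.

    Hypothetical helper for planning only; defaults mirror the documented
    default overcommit ratios (16:1 CPU, 1.5:1 memory).
    """
    total_vcpus = vm_count * vcpus_per_vm
    total_mem_gb = vm_count * mem_gb_per_vm
    # Round up: a fraction of a core or a GB still has to be provisioned.
    cores = math.ceil(total_vcpus / cpu_overcommit)
    mem_gb = math.ceil(total_mem_gb / mem_overcommit)
    return cores, mem_gb


# The example from the text: 50 VMs, 1 vCPU and 2GB each,
# 5x CPU overcommitment and no memory overcommitment.
cores, mem_gb = required_capacity(50, 1, 2, cpu_overcommit=5.0, mem_overcommit=1.0)
print(cores, mem_gb)  # 10 100
```

The result is a total across all hosts; how it is split between individual hosts is a separate decision.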

If you plan to use multi-vCPU VMs, each host should have at least as many cores as the largest number of vCPUs assigned to any single virtual machine. For example, in a setup with 6-core hosts, running 24-vCPU instances is not supported.
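
As a rough sketch of this rule, the check below flags instance sizes that are too large for a given host. The flavor names and the `unsupported_flavors` helper are made up for illustration and do not correspond to any OpenStack API.

```python
def unsupported_flavors(flavors, host_cores):
    """Return names of flavors whose vCPU count exceeds the cores of one host.

    `flavors` maps a (hypothetical) flavor name to its vCPU count.
    """
    return [name for name, vcpus in flavors.items() if vcpus > host_cores]


# Illustrative flavor sizes checked against a 6-core host.
flavors = {"small": 2, "medium": 6, "xlarge": 24}
print(unsupported_flavors(flavors, host_cores=6))  # ['xlarge']
```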

Memory oversubscription

When oversubscribing memory, it is important to ensure that the Linux host has enough swap space allocated that it never runs out of swap. For example, consider a server with 48GB of RAM. With the default overcommitment policy, OpenStack can provision virtual machines up to 1.5 times the memory size: 72GB total. In addition, let's assume 4GB of memory is needed for the Linux OS to run properly. In this case, the amount of swap space needed is (72 - 48) + 4 = 28GB.
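
The same calculation can be written as a short function. This is a sketch under the assumptions stated in the example above; the name `swap_needed_gb` and the 4GB OS reservation default are illustrative, not values prescribed by Platform9.

```python
def swap_needed_gb(ram_gb, mem_overcommit=1.5, os_reserved_gb=4):
    """Estimate swap (GB) so overcommitted VM memory plus the OS fits on the host.

    swap = (memory OpenStack may provision - physical RAM) + memory reserved for the OS
    """
    provisionable_gb = ram_gb * mem_overcommit
    return (provisionable_gb - ram_gb) + os_reserved_gb


# The example from the text: 48GB host, default 1.5:1 ratio, 4GB for the OS.
print(swap_needed_gb(48))  # 28.0
```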

For an in-depth discussion of these concepts, refer to Resource Overcommitment Best Practices.

Networking

OpenStack Networking (Neutron) enables Software Defined Networking (SDN) within OpenStack. It lets an administrator define complex virtual network topologies using VLAN or overlay networking (GRE/VXLAN), as well as isolated L3 domains within the OpenStack cloud.

A typical Neutron-enabled environment requires at least one “Network node” which serves as the egress point for north-south traffic transiting the cloud. The network node also provides layer 3 routing between tenant networks created in OpenStack.

Recommended hardware specifications

The minimum hardware requirements for the network node are the same as those recommended for compute nodes. Additionally, the network node should have 1 CPU core per Gbps of L3-routed tenant traffic.

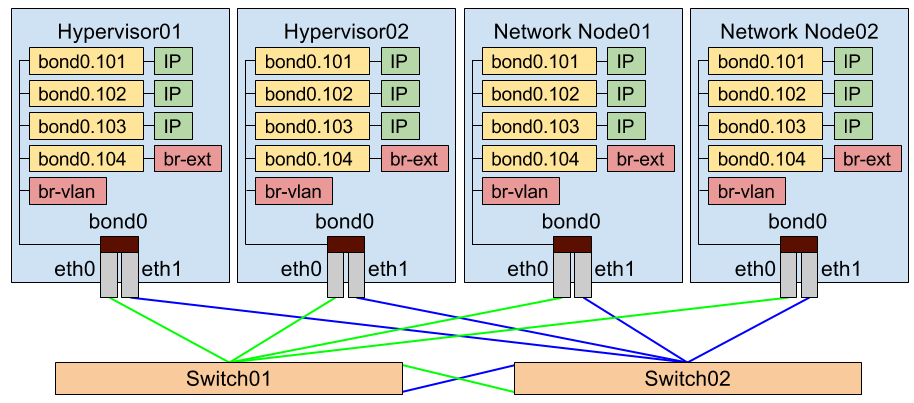

Example reference network configuration

Pictured is an example configuration in which both network and compute nodes use bonded NICs connected to two redundant, virtually clustered upstream switches.

Additional resources

The enhanced functionality in Neutron networking introduces greater complexity. Please refer to the following articles to learn more about Neutron networking, and how to configure it within your environment.

Block Storage (Cinder)

Block storage is available in OpenStack via the Cinder service, which supports many backend storage arrays. To confirm that your array is supported, check the Cinder Compatibility Matrix.

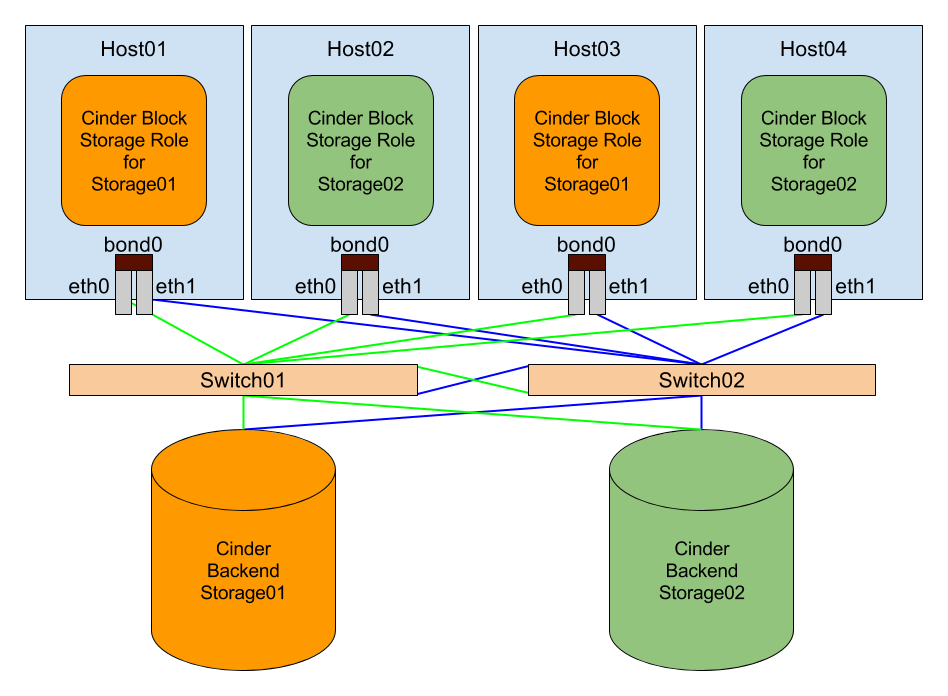

Example reference Cinder configuration

Pictured is an example configuration in which both Cinder Block Storage Roles and Cinder Backends use bonded NICs connected to two redundant, virtually clustered upstream switches. With multiple Cinder Block Storage Roles for each Cinder Backend, and multiple Cinder Backends, the configuration has no single point of failure.
