In this updated blog post we’ll compare Kubernetes (versions 1.5.0 and later) with Docker Swarm, also referred to as Docker Engine running in Swarm mode (versions 1.12.0 and later).

Overview of Kubernetes

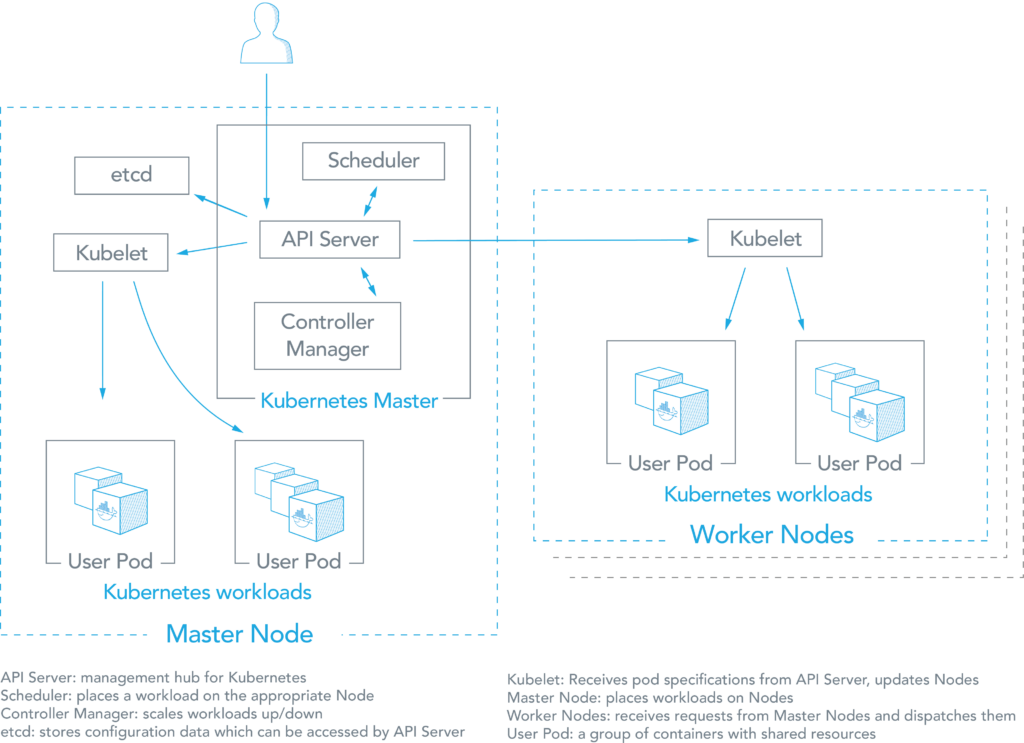

According to the Kubernetes website, “Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.” Kubernetes was built by Google based on its experience running containers in production with internal cluster management systems, notably Borg and, later, Omega. The architecture for Kubernetes, which draws on this experience, is shown in the figure above.

As the figure shows, a Kubernetes cluster has a number of components. The master node places container workloads in user pods on worker nodes or on itself. The other components include:

- etcd: This component stores configuration data, which the Kubernetes master’s API Server can access through a simple HTTP/JSON API.

- API Server: This component is the management hub for the Kubernetes master node. It facilitates communication between the various components, thereby maintaining cluster health.

- Controller Manager: This component ensures that the cluster’s current state matches the desired state, for example by scaling workloads up and down.

- Scheduler: This component places the workload on the appropriate node – in this case all workloads will be placed locally on your host.

- Kubelet: This component receives pod specifications from the API Server and manages the pods running on its host.
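The Controller Manager's job of closing the gap between desired and current state can be pictured as a small reconcile step. The sketch below is a toy model in Python, not Kubernetes code: the `WorkloadState` class, the `reconcile` function, and the action strings are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class WorkloadState:
    """Toy record (hypothetical): replicas wanted vs. replicas running."""
    desired: int
    running: int


def reconcile(state: WorkloadState) -> str:
    """Return the action a controller would take to close the gap."""
    gap = state.desired - state.running
    if gap > 0:
        return f"start {gap} pod(s)"
    if gap < 0:
        return f"stop {-gap} pod(s)"
    return "no change"


print(reconcile(WorkloadState(desired=3, running=1)))  # start 2 pod(s)
```

A real controller repeats this comparison continuously, which is why a declarative desired state is enough to drive scaling up and down.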

The following list provides some other common terms associated with Kubernetes:

- Pods: Kubernetes deploys and schedules containers in groups called pods. Containers in a pod run on the same node and share resources such as filesystems, kernel namespaces, and an IP address.

- Deployments: These building blocks can be used to create and manage a group of pods. Deployments can be used with a service tier for scaling horizontally or ensuring availability.

- Services: Services are endpoints that can be addressed by name and can be connected to pods using label selectors. The service will automatically round-robin requests between pods. Kubernetes will set up a DNS server for the cluster that watches for new services and allows them to be addressed by name. Services are the “external face” of your container workloads.

- Labels: Labels are key-value pairs attached to objects and can be used to search and update multiple objects as a single set.
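To make the relationship between labels, selectors, and services more concrete, here is a minimal Python sketch of how a service might pick its pods by label and then rotate requests across them. It is a toy model under stated assumptions: the pod names, labels, and IP addresses are invented, and `select` is a hypothetical helper, not part of any Kubernetes API.

```python
from itertools import cycle

# Hypothetical pods; names, labels, and IPs are made up for illustration.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}, "ip": "10.0.0.1"},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}, "ip": "10.0.0.2"},
    {"name": "db-1", "labels": {"app": "db"}, "ip": "10.0.0.3"},
]


def select(pods, selector):
    """Return pods whose labels contain every key-value pair in the selector."""
    return [p for p in pods if selector.items() <= p["labels"].items()]


backends = select(pods, {"app": "web"})
next_backend = cycle(backends)  # round-robin iterator over the matching pods

for _ in range(3):
    print(next(next_backend)["ip"])
```

With two matching pods, the third request wraps around to the first pod again, which is the round-robin behavior described for services above.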

Overview of Docker Swarm

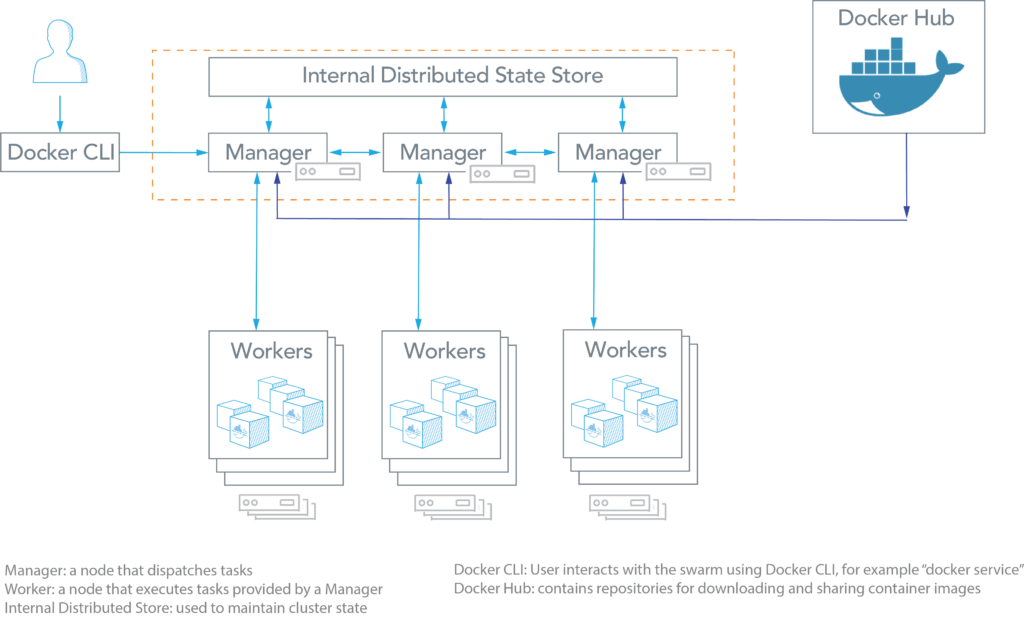

Docker Engine v1.12.0 and later allows developers to deploy containers in Swarm mode. A Swarm cluster consists of Docker Engine deployed on multiple nodes. Manager nodes perform orchestration and cluster management. Worker nodes receive and execute tasks from the manager nodes.

A service, which can be specified declaratively, consists of tasks that can be run on Swarm nodes. Services can be replicated to run on multiple nodes. In the replicated services model, ingress load balancing and internal DNS can be used to provide highly available service endpoints. (Source: Docker Docs: Swarm mode)

As can be seen from the figure above, the Docker Swarm architecture consists of managers and workers. The user can declaratively specify the desired state of various services to run in the Swarm cluster using YAML files. Here are some common terms associated with Docker Swarm:

- Node: A node is an instance of Docker Engine participating in a Swarm. Nodes can be distributed on-premises or in public clouds.

- Swarm: A cluster of nodes (or Docker Engines). In Swarm mode, you orchestrate services instead of running individual container commands.

- Manager Nodes: These nodes receive service definitions from the user, and dispatch work to worker nodes. Manager nodes can also perform the duties of worker nodes.

- Worker Nodes: These nodes receive and run tasks dispatched by manager nodes.

- Service: A service specifies the container image and the number of replicas. Here is an example command that creates a service whose tasks will be scheduled on two available nodes:

# docker service create --replicas 2 --name mynginx nginx

- Task: A task is an atomic unit of a service scheduled on a worker node. In the example above, two tasks would be scheduled by a manager node on two worker nodes (assuming they are not scheduled on the manager itself). The two tasks will run independently of each other.
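The way a manager turns a replica count into tasks on nodes can be sketched as follows. This is a simplified Python model that assumes a spread-style placement (each task goes to the node with the fewest tasks); the `place_tasks` function and the node names are hypothetical, and the real Swarm scheduler also weighs constraints and resources that are ignored here.

```python
def place_tasks(service: str, replicas: int, nodes: list) -> dict:
    """Assign each task to the node that currently holds the fewest tasks."""
    placement = {node: [] for node in nodes}
    for i in range(1, replicas + 1):
        # min() returns the first node on ties, so placement is deterministic.
        target = min(nodes, key=lambda n: len(placement[n]))
        placement[target].append(f"{service}.{i}")
    return placement


print(place_tasks("mynginx", 2, ["worker-1", "worker-2"]))
```

With two replicas and two workers, each worker ends up with exactly one task, matching the example in the text.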

The next section compares the features of Kubernetes with those of Docker Swarm, along with the strengths and weaknesses of choosing one container orchestration solution over the other.

Kubernetes vs Docker Swarm: Technical Capabilities

| Capability | Kubernetes | Docker Swarm |
|---|---|---|
| Application Definition | | |
| High Availability | | |
| Auto-scaling for the Application | | |
| Health Checks | | |
| Storage | Two storage APIs are available. The first provides abstractions for individual storage backends (e.g. NFS, AWS EBS, Ceph, Flocker). The second provides an abstraction for a storage resource request (e.g. 8 GB), which can be fulfilled with different storage backends. Modifying the storage resource used by the Docker daemon on a cluster node requires temporarily removing the node from the cluster. Kubernetes offers several types of persistent volumes with block or file support. Examples include iSCSI, NFS, FC, Amazon Web Services, Google Cloud Platform, and Microsoft Azure. The emptyDir volume is non-persistent and can be used to read and write files within a container. | Shared filesystems, including NFS, iSCSI, and Fibre Channel, can be configured on nodes. Plugin options include Azure, Google Cloud Platform, NetApp, Dell EMC, and others. |
| Networking | The networking model requires two CIDRs: one from which pods get an IP address, and another for services. | By default, nodes in the Swarm cluster encrypt overlay control and management traffic between themselves. Users can also choose to encrypt container data traffic when they create an overlay network. |
| Service Discovery | Kubelet adds a set of environment variables when a pod is run. Kubelet supports simple {SVCNAME}_SERVICE_HOST and {SVCNAME}_SERVICE_PORT variables, as well as Docker-links-compatible variables. A DNS server is available as an add-on. For each Kubernetes Service, the DNS server creates a set of DNS records. If DNS is enabled across the entire cluster, pods can use Service names that resolve automatically. | The Swarm manager node assigns each service a unique DNS name and load balances running containers. Requests to services are load balanced to the individual containers via the DNS server embedded in the Swarm. Docker Swarm comes with multiple discovery backends. |
| Performance and scalability | | |
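As a rough illustration of the environment-variable style of service discovery mentioned above, the Python sketch below derives the host and port variable names from a service name by upper-casing it and turning dashes into underscores. The `service_env_vars` helper, the service name, and the address are assumptions made for the example; the kubelet sets these variables itself, so application code would only read them.

```python
def service_env_vars(service_name: str, cluster_ip: str, port: int) -> dict:
    """Build the host/port variables a pod would see for one service."""
    # Upper-case the name and replace dashes so it is a valid variable prefix.
    prefix = service_name.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": cluster_ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


print(service_env_vars("redis-master", "10.0.0.11", 6379))
```

Because these variables are injected when a pod starts, a pod only sees services that existed before it was created, which is one reason DNS-based discovery is often preferred.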

Synopsis

Pros of Kubernetes

Common Features

Takeaways

Kubernetes has been deployed more widely than Docker Swarm, and is validated by Google. The popularity of Kubernetes is evident in the chart, which compares Kubernetes with Swarm on five metrics: news articles and scholarly publications over the last year, GitHub stars and commits, and web searches on Google.

Kubernetes leads on all metrics: when compared with Docker Swarm, Kubernetes has over 80% of the mindshare for news articles, GitHub popularity, and web searches. However, there is general consensus that Kubernetes is also more complex to deploy and manage. The Kubernetes community has tried to mitigate this drawback by offering a variety of deployment options, including Minikube and kubeadm. Platform9’s Managed Kubernetes product also fills this gap by letting organizations focus on deploying microservices on Kubernetes, instead of managing and upgrading a highly available Kubernetes deployment. Further details on these and other deployment models for Kubernetes can be found in The Ultimate Guide to Deploy Kubernetes.

In follow-up blog posts, we’ll compare Kubernetes with Amazon ECS and Mesos.