This post has been updated by Kubernetes vs Mesos + Marathon.

—

In a previous blog we discussed why you may need a container orchestration tool. Continuing the series, in this blog post we’ll give an overview of and compare Kubernetes vs Mesos.

Kubernetes vs Mesos

Overview of Kubernetes

According to the Kubernetes website – “Kubernetes is an open-source system for automating deployment, scaling, and management of containerized applications.” Kubernetes was built by Google based on their experience running containers in production over the last decade. See below for a Kubernetes architecture diagram and the following explanation.

The major components in a Kubernetes cluster are:

- Pods – Kubernetes deploys and schedules containers in groups called pods. A pod will typically include 1 to 5 containers that collaborate to provide a service.

- Flat Networking Space – The default network model in Kubernetes is flat and permits all pods to talk to each other. Containers in the same pod share an IP and can communicate using ports on the localhost address.

- Labels – Labels are key-value pairs attached to objects and can be used to search and update multiple objects as a single set.

- Services – Services are endpoints that can be addressed by name and can be connected to pods using label selectors. The service will automatically round-robin requests between the pods. Kubernetes will set up a DNS server for the cluster that watches for new services and allows them to be addressed by name.

- Replication Controllers – Replication controllers are the way to instantiate pods in Kubernetes. They control and monitor the number of running pods for a service, improving fault tolerance.

Overview of Mesos (+Marathon)

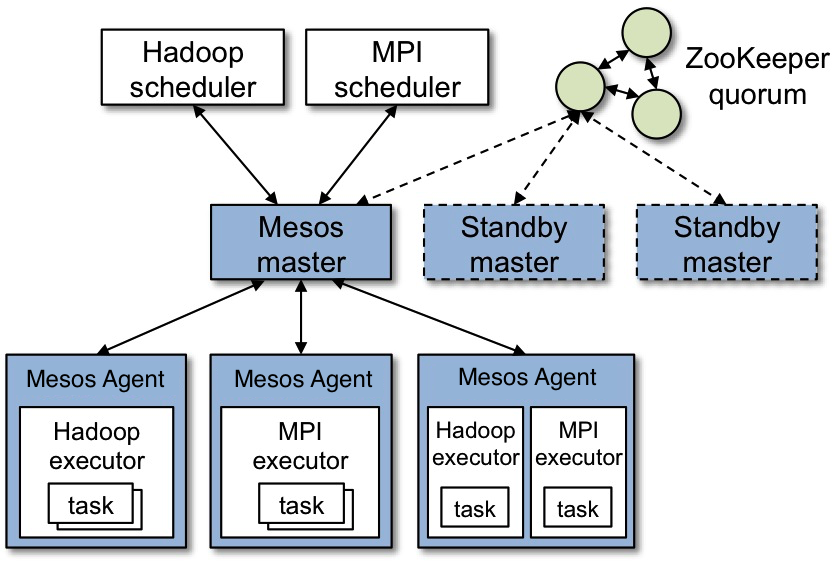

Apache Mesos is an open-source cluster manager designed to scale to very large clusters, from hundreds to thousands of hosts. Mesos supports diverse kinds of workloads such as Hadoop tasks, cloud native applications etc. The architecture of Mesos is designed around high-availability and resilience.

Credit: http://mesos.apache.org/documentation/latest/architecture/

The major components in a Mesos cluster are:

- Mesos Agent Nodes – Responsible for actually running tasks. All agents submit a list of their available resources to the master.

- Mesos Master – The master is responsible for sending tasks to the agents. It maintains a list of available resources and makes “offers” of them to frameworks e.g. Hadoop. The master decides how many resources to offer based on an allocation strategy. There will typically be stand-by master instances to take over in case of a failure.

- ZooKeeper – Used in elections and for looking up address of current master. Multiple instances of ZooKeeper are run to ensure availability and handle failures.

- Frameworks – Frameworks co-ordinate with the master to schedule tasks onto agent nodes. Frameworks are composed of two parts-

- the executor process runs on the agents and takes care of running the tasks and

- the scheduler registers with the master and selects which resources to use based on offers from the master.

There may be multiple frameworks running on a Mesos cluster for different kinds of task. Users wishing to submit jobs interact with frameworks rather than directly with Mesos.

In the figure above, a Mesos cluster is running alongside the Marathon, framework as the scheduler. The Marathon scheduler uses ZooKeeper to locate the current Mesos master which it will submit tasks to. Both the Marathon scheduler and the Mesos master have stand-bys ready to start work should the current master become unavailable.

Marathon, created by Mesosphere, is designed to start, monitor and scale long-running applications, including cloud native apps. Clients interact with Marathon through a REST API. Other features include support for health checks and an event stream that can be used to integrate with load-balancers or for analyzing metrics.

Comparison Between Kubernetes and Mesos(+Marathon)

[table id=11 /]

Mesos can work with multiple frameworks and Kubernetes is one of them. To give you more choice, if it meets your use case, it is also possible to run Kubernetes on Mesos.

In a follow up blog, we’ll compare Kubernetes with Docker Swarm.