Overview

In this post you’ll learn:

- CI/CD platforms for Kubernetes

- How to Install Jenkins on Kubernetes

- Scaling CI/CD Jenkins Pipelines with Kubernetes

- Best Practices to use Kubernetes for CI/CD at scale

Kubernetes has a vast list of use cases in the cloud-native world. It can be utilized to deploy application containers in the cloud, schedule batch jobs, handle workloads and perform gradual rollouts. Kubernetes handles those operations using efficient orchestrating algorithms that perform well at scale.

Additionally, one of the main use cases of Kubernetes is to run Continuous Integration or Continuous Delivery (CI/CD) pipelines. That is, we deploy a unique instance of a CI/CD container that will monitor a code version control system, so whenever we push to that repository, the container will run pipeline steps. The end goal is to achieve a ‘true or false’ status. True, if the commit passes the various tests in the Integration phase; false, if it does not.
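To make the pass/fail idea concrete, here is a minimal Python sketch of a pipeline that runs its steps in order and reports a single boolean. It is an illustration only, not how any particular CI server is implemented: `run_pipeline` and the step names are hypothetical, and real steps would call out to build and test commands.

```python
from typing import Callable, List, Tuple

# A step is a (name, callable) pair; the callable returns True when it passes.
Step = Tuple[str, Callable[[], bool]]


def run_pipeline(steps: List[Step]) -> bool:
    """Run steps in order and stop at the first failure."""
    for name, step in steps:
        try:
            passed = step()
        except Exception as exc:  # a crashing step counts as a failure
            print(f"{name}: error ({exc})")
            return False
        print(f"{name}: {'passed' if passed else 'failed'}")
        if not passed:
            return False
    return True


# Hypothetical steps standing in for real build and test commands.
good_commit = [("build", lambda: True), ("unit-tests", lambda: True)]
bad_commit = [
    ("build", lambda: True),
    ("unit-tests", lambda: False),
    ("lint", lambda: True),  # never reached: the pipeline stops at unit-tests
]
```

Calling `run_pipeline(good_commit)` yields the "true" status described above, while `run_pipeline(bad_commit)` stops at the failing step and yields "false".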

In addition to the CI pipeline described above, another pipeline can then pick up the process, once the CI tests have passed, to handle the CD part of the release process. In this stage, the pipeline will attempt to roll out the Application Container to production.

The key thing to understand is that those operations run on-demand or are automatically triggered by various actions (a code check-in, a test trigger, a result of a previous step in the workflow, etc.). This is why we need to have a mechanism to spin up individual nodes to run those pipeline steps and retire them when they are unneeded. This way of managing immutable infrastructure helps us preserve resources and reduce costs.

The key mechanism here is, of course, Kubernetes, which – with its declarative structure and customizability – allows you to efficiently schedule jobs, nodes and pods for any kind of scenario.

This article has three parts. In the first part, we will explore the most popular CI/CD platforms that currently work on Kubernetes.

Next, we will look at two use cases. In the first case, we will install Jenkins on Kubernetes in simple steps and see how we can configure it so we can use the popular open source tools to run our CI pipelines on Kubernetes. In the second use case, we will take this Jenkins deployment to the next level. Here we provide tips and recommendations for scaling up CI/CD pipelines in Kubernetes.

Finally, we discuss the most reasonable ways and practices to run Kubernetes for CI/CD at scale.

The goal of this article is to provide you with a thorough understanding of the efficiency of Kubernetes in handling those workloads.

Let’s get started.

CI/CD platforms for Kubernetes

Kubernetes is an ideal platform for running CI/CD platforms, as it has plenty of features that make it easy to do so. How many CI/CD platforms can run on Kubernetes at the moment, you ask? Well, as long as they can be packaged in a container, Kubernetes can run them. A few options work more closely with Kubernetes and are worth mentioning:

- Jenkins: Jenkins is the most popular and the most stable CI/CD platform. It’s used by thousands of enterprises around the world due to its vast ecosystem and extensibility. If you plan to use it with Kubernetes, it’s recommended to install the official plugin.

- JenkinsX: JenkinsX is a version of Jenkins suited specifically for the Cloud Native world. It operates more harmoniously with Kubernetes and offers better integration features like GitOps, Automated CI/CD and Preview Environments.

- Spinnaker: Spinnaker is a CD platform for scalable multi-cloud deployments, with backing from Netflix. To install it, we can use the relevant Helm Chart.

- Drone: This is a versatile, cloud-native CD platform with many features. It can be run in Kubernetes using the associated Runner.

- GoCD: Another CI/CD platform from Thoughtworks that offers a variety of workflows and features suited for cloud native deployments. It can be run in Kubernetes as a Helm Chart.

Additionally, cloud services such as CircleCI and Travis work closely with Kubernetes and provide CI/CD pipelines, which is helpful if you don’t plan to host a CI/CD platform yourself.

Let’s see how we can get our hands dirty installing Jenkins on a Kubernetes Cluster.

How to Install Jenkins on Kubernetes


Note that installing JenkinsX on a public Kubernetes provider is a tricky business. I tried both GKE and AKS Kubernetes options without much success. I would say that currently, JenkinsX is WIP when it comes to deploying it to a working cluster, as there are lots of issues to solve.

First we need to install Helm, which is the package manager for Kubernetes:

$ curl https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 > get_helm.sh

$ chmod 700 get_helm.sh

$ ./get_helm.sh -v v2.15.0

Also, we need to install Tiller, for Helm to run properly:

$ kubectl -n kube-system create serviceaccount tiller

serviceaccount/tiller created


$ kubectl create clusterrolebinding tiller --clusterrole cluster-admin --serviceaccount=kube-system:tiller

clusterrolebinding.rbac.authorization.k8s.io/tiller created


$ helm init --service-account tiller

$HELM_HOME has been configured at /Users/itspare/.helm.

Once we’ve done those steps, we need to run the inspect command to check the configuration values of the deployment:

$ helm inspect values stable/jenkins > values.yml

Carefully inspect the configuration values and make changes if required.

Then install the chart:

$ helm install stable/jenkins --tls \
    --name jenkins \
    --namespace jenkins

The installation process will spit out some instructions for what to do next:

NOTES:

- Get your ‘admin’ user password by running:

printf $(kubectl get secret --namespace default my-jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode);echo

- Get the Jenkins URL to visit by running these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace default -l "app.kubernetes.io/component=jenkins-master" -l "app.kubernetes.io/instance=my-jenkins" -o jsonpath="{.items[0].metadata.name}")
echo http://127.0.0.1:8080
kubectl --namespace default port-forward $POD_NAME 8080:8080

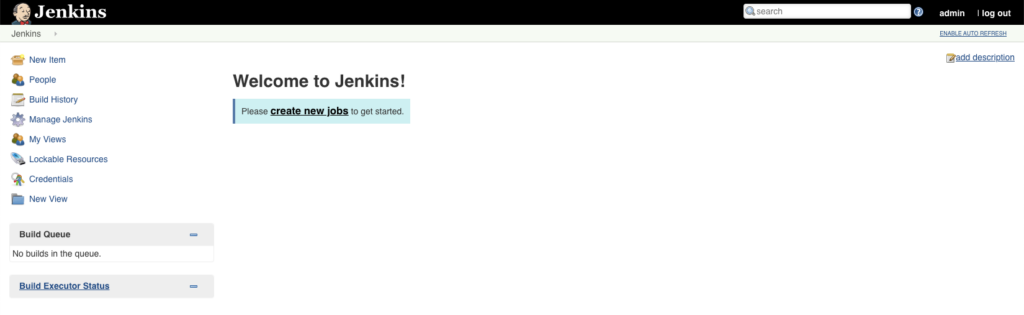

Follow those steps and they will start the proxy server at http://127.0.0.1:8080.

Navigate there and enter your username and password. Voila! Your own Jenkins server in 5 minutes:

Bear in mind that there are still many configuration options left to apply, so you should visit the chart documentation page for more information. By default, this server comes installed with the most basic plugins, such as Git and Kubernetes-Jenkins, and we can install more on demand.

Overall, I would say that the experience of installing Jenkins with Helm was effortless; but I wouldn’t say that for JenkinsX, which was … well, painful.

Scaling CI/CD Jenkins Pipelines with Kubernetes

Now that we have a rough understanding of how CI/CD platforms run on Kubernetes, let’s see an example use case of a highly scalable Jenkins deployment in Kubernetes. This setup is in use (with small modifications) at a customer to handle CI/CD in their infrastructure, so it’s more opinionated than the general case. Let’s start:

Consider using an opinionated Jenkins distro

While the official Jenkins image is a good choice for starting out, it needs more configuring than we may want. Some users opt for a more opinionated distro – for example using my-bloody-jenkins, which offers quite a long list of pre-installed plugins and configuration options. Out of the available plugins, we use the saml plugin, SonarQubeRunner, Maven and Gradle.

It can be installed via a Helm Chart using the following commands:

$ helm repo add odavid https://odavid.github.io/k8s-helm-charts

$ helm install odavid/my-bloody-jenkins

However, we chose to deploy a custom image instead, using the following Dockerfile:

FROM odavid/my-bloody-jenkins:2.190.2-161

USER jenkins

COPY plugins.txt /usr/share/jenkins/ref/

RUN /usr/local/bin/install-plugins.sh < /usr/share/jenkins/ref/plugins.txt

USER root

Where the plugins.txt file is a list of additional plugins we want to pre-install into the image:

build-monitor-plugin

xcode-plugin

rich-text-publisher-plugin

jacoco

scoverage

dependency-check-jenkins-plugin

greenballs

shiningpanda

pyenv-pipeline

s3

pipeline-aws

appcenter

multiple-scms

testng-plugin

Then we use this generic Jenkinsfile to build the master whenever the Dockerfile changes:

#!/usr/bin/env groovy

node('generic') {
    try {
        def dockerTag, jenkins_master

        stage('Checkout') {
            checkout([
                $class: 'GitSCM',
                branches: scm.branches,
                doGenerateSubmoduleConfigurations: scm.doGenerateSubmoduleConfigurations,
                extensions: [[$class: 'CloneOption', noTags: false, shallow: false, depth: 0, reference: '']],
                userRemoteConfigs: scm.userRemoteConfigs,
            ])
            def version = sh(returnStdout: true, script: "git describe --tags `git rev-list --tags --max-count=1`").trim()
            def tag = sh(returnStdout: true, script: "git rev-parse --short HEAD").trim()
            dockerTag = version + "-" + tag
            println("Tag: " + tag + " Version: " + version)
        }

        stage('Build Master') {
            jenkins_master = docker.build("jenkins-master", "--network=host .")
        }

        stage('Push images') {
            docker.withRegistry("https://$env.DOCKER_REGISTRY", 'ecr:eu-west-2:jenkins-aws-credentials') {
                jenkins_master.push("${dockerTag}")
            }
        }

        if(env.BRANCH_NAME == 'master') {
            stage('Push Latest images') {
                docker.withRegistry("https://$env.DOCKER_REGISTRY", 'ecr:eu-west-2:jenkins-aws-credentials') {
                    jenkins_master.push("latest")
                }
            }

            stage('Deploy to K8s cluster') {
                withKubeConfig([credentialsId: 'dev-tools-eks-jenkins-secret',
                                serverUrl: env.TOOLS_EKS_URL]) {
                    sh "kubectl set image statefulset jenkins jenkins=$env.DOCKER_REGISTRY/jenkins-master:${dockerTag}"
                }
            }
        }

        currentBuild.result = 'SUCCESS'
    } catch(e) {
        currentBuild.result = 'FAILURE'
        throw e
    }
}
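The branching logic of this Jenkinsfile can be summarized in a few lines of Python. This is only a sketch for reasoning about the flow: `docker_tag` and `planned_actions` are hypothetical helper names, the version and commit values in the usage note are made up, and the real work is still done by the Jenkins Docker and kubectl steps.

```python
from typing import List


def docker_tag(version: str, short_sha: str) -> str:
    """Mirror the Jenkinsfile: dockerTag = version + "-" + tag."""
    return f"{version}-{short_sha}"


def planned_actions(branch: str, version: str, short_sha: str) -> List[str]:
    """List what the pipeline does after a successful image build."""
    tag = docker_tag(version, short_sha)
    # Every branch pushes an image tagged with version and commit.
    actions = [f"push jenkins-master:{tag}"]
    if branch == "master":
        # Only master also moves "latest" and rolls out to the cluster.
        actions.append("push jenkins-master:latest")
        actions.append(f"deploy jenkins-master:{tag}")
    return actions
```

For instance, `planned_actions("feature/x", "1.4.0", "abc1234")` returns a single push, while the same call with `"master"` adds the `latest` push and the deployment.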

We use a dedicated cluster composed of a few large and medium instances in AWS for Jenkins jobs, which brings us to the next section.

Use of Dedicated Jenkins Slaves and labels

For scaling some of our Jenkins slaves we use Pod Templates and assign labels to specific agents, so in our Jenkinsfiles we can reference them for jobs. For example, we have some agents that need to build Android applications. So we reference the following label:

pipeline {

agent { label "android" }

…

And that will use the specific pod template for Android. We use this Dockerfile, for example:

FROM dkr.ecr.eu-west-2.amazonaws.com/jenkins-jnlp-slave:latest

RUN apt-get update && apt-get install -y -f --no-install-recommends xmlstarlet

ARG GULP_VERSION=4.0.0
ARG CORDOVA_VERSION=8.0.0

# SDK version and build-tools version should be different
ENV SDK_VERSION 25.2.3
ENV BUILD_TOOLS_VERSION 26.0.2
ENV SDK_CHECKSUM 1b35bcb94e9a686dff6460c8bca903aa0281c6696001067f34ec00093145b560
ENV ANDROID_HOME /opt/android-sdk
ENV SDK_UPDATE tools,platform-tools,build-tools-25.0.2,android-25,android-24,android-23,android-22,android-21,sys-img-armeabi-v7a-android-26,sys-img-x86-android-23
ENV LD_LIBRARY_PATH ${ANDROID_HOME}/tools/lib64/qt:${ANDROID_HOME}/tools/lib/libQt5:$LD_LIBRARY_PATH/
ENV PATH ${PATH}:${ANDROID_HOME}/tools:${ANDROID_HOME}/platform-tools

RUN curl -SLO "https://dl.google.com/android/repository/tools_r${SDK_VERSION}-linux.zip" \
    && echo "${SDK_CHECKSUM} tools_r${SDK_VERSION}-linux.zip" | sha256sum -c - \
    && mkdir -p "${ANDROID_HOME}" \
    && unzip -qq "tools_r${SDK_VERSION}-linux.zip" -d "${ANDROID_HOME}" \
    && rm -Rf "tools_r${SDK_VERSION}-linux.zip" \
    && echo y | ${ANDROID_HOME}/tools/android update sdk --filter ${SDK_UPDATE} --all --no-ui --force \
    && mkdir -p ${ANDROID_HOME}/tools/keymaps \
    && touch ${ANDROID_HOME}/tools/keymaps/en-us \
    && yes | ${ANDROID_HOME}/tools/bin/sdkmanager --update

RUN chmod -R 777 ${ANDROID_HOME} && chown -R jenkins:jenkins ${ANDROID_HOME}

We also use a Jenkinsfile that works similarly to the previous one for building the master. Whenever we make changes to the Dockerfile, the agent will rebuild the image. This gives us enormous flexibility with our CI/CD infrastructure.

Use of Autoscaling

Although we have assigned a specific number of nodes for deployments, we can do even more by enabling cluster autoscaling. This means that in cases of increased workloads and spikes, we can spin up extra nodes to handle the jobs. With a fixed number of nodes, we can only handle a fixed number of concurrent jobs. We can roughly estimate that number from the minimum resources assigned to each slave, typically 500m CPU and 256MB memory; a concurrency limit set higher than that might not be respected at all.
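As a rough illustration of that estimate, the following Python sketch computes how many agent pods fit on a fixed pool of nodes. The function names and the node sizes in the usage note are assumptions made for the example; the per-agent requests are the figures quoted above, treated here as 500 millicores and 256 MiB. Real clusters also reserve resources for system pods, so actual capacity will be somewhat lower.

```python
def agents_per_node(node_cpu_m: int, node_mem_mib: int,
                    agent_cpu_m: int = 500, agent_mem_mib: int = 256) -> int:
    """Agents that fit on one node; the scarcer resource sets the limit."""
    return min(node_cpu_m // agent_cpu_m, node_mem_mib // agent_mem_mib)


def cluster_capacity(nodes: int, node_cpu_m: int, node_mem_mib: int) -> int:
    """Upper bound on concurrent agent pods for identical nodes."""
    return nodes * agents_per_node(node_cpu_m, node_mem_mib)
```

With three hypothetical nodes offering 4000m CPU and 16384 MiB each, CPU is the bottleneck: eight agents per node, so `cluster_capacity(3, 4000, 16384)` gives 24 concurrent jobs.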

Spikes can happen, for example, when you have a major release cut and need to deploy lots of microservices. A storm of jobs then piles up in the pipeline, causing significant delays.

In such cases we can increase the number of nodes for that phase. For example, we can add extra VM instances in the pool, and at the end of the process we remove them.

We can do this from the command line by configuring either the Vertical or the Cluster autoscaling option. However, this approach requires careful planning and configuration, as sometimes the following will happen:

- An increased number of jobs reaches a plateau.

- Autoscaler adds new nodes, but takes 10 minutes to deploy and assign.

- Old jobs will have finished by now and new jobs fill the gap, lowering the need for new nodes.

- The new nodes are available, but remain stale and underutilized for at least X amount of minutes defined by the --scale-down-unneeded-time flag.

- The same effect happens multiple times per day.
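The scenario in this list can be put into numbers with a small back-of-the-envelope model. The Python sketch below is a simplification under stated assumptions: `extra_node_usage` is a hypothetical helper, the spike is assumed to start when the autoscaler reacts, the 10-minute provisioning delay comes from the list, and the 10-minute default for the scale-down window is an assumption you should replace with your own `--scale-down-unneeded-time` value.

```python
def extra_node_usage(spike_minutes: float,
                     provision_minutes: float = 10.0,
                     scale_down_unneeded_minutes: float = 10.0) -> dict:
    """Estimate how much of an autoscaled node's lifetime does useful work."""
    # The node only helps for the part of the spike that outlasts provisioning.
    useful = max(0.0, spike_minutes - provision_minutes)
    # After the spike the node sits idle until the scale-down window expires.
    idle = scale_down_unneeded_minutes
    total = useful + idle
    utilization = useful / total if total else 0.0
    return {"useful": useful, "idle": idle, "utilization": utilization}
```

An 8-minute spike ends before the node is ready, so the node is pure overhead (`utilization` of 0.0), whereas a 40-minute spike keeps it busy for 30 of its 40 minutes. Short, frequent spikes are therefore the case where manual scaling or tuned autoscaler settings pay off.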

In that case, it’s better if we either configure the autoscaler based on our specific needs or just increase the nodes for that day and revert when the process is finished. This all has to do with finding the optimal way to utilize all resources and minimize costs.

In either case, we have a scalable and simple-to-use Jenkins cluster in place. For each job, a pod gets created to run a specific pipeline and gets destroyed after it is done.

Best Practices to use Kubernetes for CI/CD at scale

Now that we have learned which CI/CD platforms exist for Kubernetes and how to install one on your cluster, we will discuss some of the ways to operate them at scale.

To begin with, the choice of Kubernetes Distribution is one of the most critical factors that we need to take into account. Finding the most suitable one needs to be an informed, technical decision, as it’s not trivial to find good compatibility between them. One example of a solid production-grade Kubernetes distribution is our Managed Kubernetes solution from Platform9, as it offers first-class solutions that cater to different needs like DevOps automation and hybrid clouds. Furthermore, it is 100% upstream open source and certified by CNCF, while being delivered as a SaaS service, so you do not need to worry about day-2 operations of your Kubernetes environment once you’ve created your infrastructure (either for CI/CD on your dev/test environments, or in Production).

Second, the choice of a Docker registry and package management is equally important. We are looking for secure and reliable image management that can be retrieved on-demand, as quickly as possible. Using Helm as a package manager is a sound choice as it’s the best way to discover, share, and use software built for Kubernetes.

Third, using modern integration flows such as GitOps and ChatOps offers significant benefits in terms of ease of use and predictability. Using Git as a single source of truth allows us to run “operations by pull requests,” which simplifies control of the infrastructure and application deployments. Using team chat tools such as Slack or Mattermost to trigger automated tasks for CI/CD pipelines helps us eliminate repetitive work and simplifies integration.
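The “single source of truth” idea boils down to comparing what Git declares with what is running and acting on the difference. Here is a minimal Python sketch of that comparison; it is not the algorithm of any specific GitOps tool, and `reconcile`, the service names, and the image tags are all hypothetical.

```python
from typing import Dict, List


def reconcile(desired: Dict[str, str], actual: Dict[str, str]) -> List[str]:
    """Return the changes needed to make the cluster match Git.

    Both arguments map a workload name to its container image.
    """
    changes = []
    for name, image in sorted(desired.items()):
        if name not in actual:
            changes.append(f"create {name} ({image})")
        elif actual[name] != image:
            changes.append(f"update {name}: {actual[name]} -> {image}")
    # Anything running that Git no longer declares gets removed.
    for name in sorted(actual.keys() - desired.keys()):
        changes.append(f"delete {name}")
    return changes


# Hypothetical state: Git declares two services, the cluster runs two others.
desired_state = {"api": "api:1.2", "web": "web:2.0"}
actual_state = {"api": "api:1.1", "worker": "worker:0.9"}
```

Merging a pull request changes `desired_state`, and the next reconciliation produces the list of operations, which is why reviews and rollbacks become ordinary Git workflows.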

Overall, if we want to go more low-level, we could customize or develop our own Kubernetes Operator that works more closely with the Kubernetes API. The benefits of using a custom operator are numerous, as operators aim to build a greater automation experience.

As a final word, we could say that Kubernetes and CI/CD platforms form a great match, and running CI/CD on Kubernetes is the recommended approach. If you are just entering the Kubernetes ecosystem, then the first task you could try to integrate is a CI/CD pipeline. This is a good way to learn more about its inner workings. The key is to allow room for experimentation, which can make it easier to change in the future.