The secret to the success of any DevOps initiative is a stable CI/CD pipeline. CI (Continuous Integration) involves automatically taking newly merged code and then building, testing, and depositing that code in an artifact repository. CD (Continuous Deployment) involves taking that freshly deposited artifact and deploying it into a production environment.

In this tutorial, we’re going to build the infrastructure for a CI/CD pipeline in our Kubernetes environment. A successful merge to the master branch in a GitHub project will trigger a Jenkins 2 pipeline, which can build, test and deploy an updated project into our environment.

In addition to GitHub and Jenkins, we’ll be making use of a private JFrog repository to store the image for our pipeline, and we’ll be deploying everything on a cluster managed by Platform9’s Managed Kubernetes (PMK) service.

Anatomy of the Pipeline

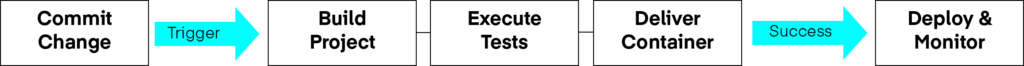

CI/CD pipelines can be as simple or as complex as you choose to make them. The objective is an automated process that takes an updated codebase, builds it, runs a battery of tests, and then delivers the project to the target environment. If the pipeline succeeds, the project is deployed into the environment and monitored.

Fig. 1 A Simple CI/CD Pipeline

The testing phase should include the execution of unit tests, integration tests, and performance tests. This phase can also include static code analysis, security scans, and any other tools that your organization uses to validate and secure applications.
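A few lines of Bash can sketch the gating idea behind these phases: no later step runs unless every earlier one passes. This is only an illustration. The stage names and their bodies are hypothetical placeholders, and in our setup Jenkins handles this sequencing itself, based on the stages declared in the Jenkinsfile.

```shell
#!/usr/bin/env bash
# Illustrative fail-fast stage runner. The stage functions are hypothetical
# placeholders; replace their bodies with real build/test/deploy commands.

# Run each named stage in order and stop at the first one that fails, so a
# failed test never leads to packaging or deployment.
run_pipeline() {
  local stage
  for stage in "$@"; do
    echo "==> stage: ${stage}"
    if ! "${stage}"; then
      echo "!! stage '${stage}' failed; aborting pipeline" >&2
      return 1
    fi
  done
  echo "==> pipeline succeeded"
}

# Hypothetical placeholder stages.
build()           { echo "compiling..."; }
unit_tests()      { echo "running unit tests..."; }
static_analysis() { echo "running static analysis..."; }
package()         { echo "building and pushing the container image..."; }
deploy()          { echo "rolling out to the cluster..."; }
```

For example, `run_pipeline build unit_tests static_analysis package deploy` runs the five placeholders in order. If any of them returned a non-zero status, the later ones would be skipped.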

Following the testing phase, the application is packaged and delivered to the environment. In our example, we’ll use this phase to build the Docker image, tag it, and push it to our JFrog Container Registry. Your setup may differ depending on how you package and store your applications.

Finally, when the pipeline completes successfully, we’ll automatically deploy the artifact into our environment and monitor it. Several deployment strategies are available; Canary, Blue/Green, and Rolling deployments are common options.
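As an example of the Rolling option, here is a minimal sketch of a deploy step for an application that runs as a Kubernetes Deployment. It uses the standard `kubectl set image`, `kubectl rollout status`, and `kubectl rollout undo` commands. The deployment name, container name, image, and 120-second timeout are assumptions for illustration. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch of a Rolling Deployment step for an app running as a Kubernetes
# Deployment. Names, image, and timeout are illustrative assumptions.

# Print the command when DRY_RUN=1; otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# rolling_update DEPLOYMENT CONTAINER IMAGE
rolling_update() {
  local deployment="$1" container="$2" image="$3"
  # Point the container at the new image; Kubernetes then replaces pods
  # gradually according to the Deployment's rolling-update settings.
  run kubectl set image "deployment/${deployment}" "${container}=${image}"
  # Wait for the rollout to finish; if it fails or times out, roll back.
  if ! run kubectl rollout status "deployment/${deployment}" --timeout=120s; then
    run kubectl rollout undo "deployment/${deployment}"
    return 1
  fi
}
```

For example, `DRY_RUN=1 rolling_update web-app web registry.example.com/web:1.2.3` prints the two kubectl commands without touching the cluster.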

Getting Jenkins Into The Container Registry

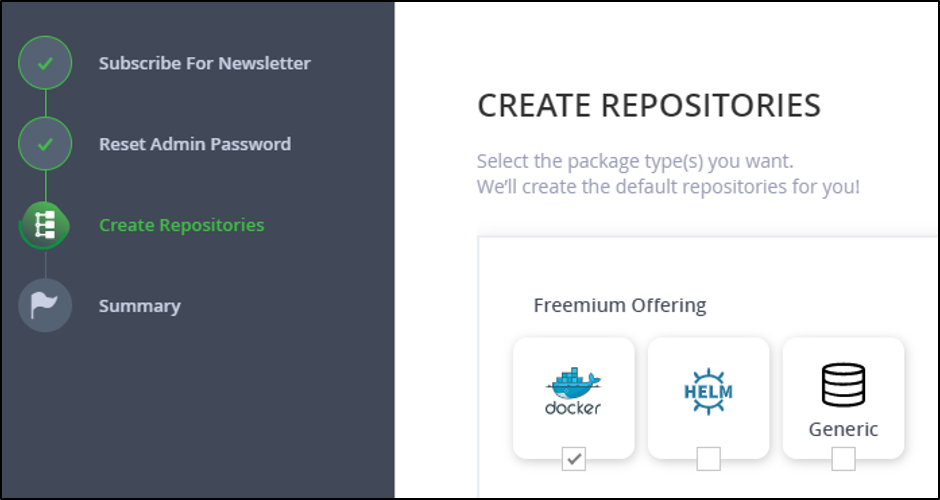

We’ll be using the JFrog Container Registry to store our Jenkins image and all of the artifacts we create with our pipeline. I signed up for the free trial of the JFrog Container Registry. The process was intuitive, and I created a Docker repository to store my artifacts.

Fig 2. Creating a Docker Repository

Now that we have a container registry, we need to create and configure a Jenkins image, and then push it into our registry. By doing this, we’ll have an image that is configured for our environment and ready to go as soon as we deploy it.

You’ll need Docker installed for this next step, along with the address and account credentials for your JFrog registry. We’ll start from the latest LTS Jenkins image and configure it with a standard set of plugins and an admin user. Below are the three files involved: the Dockerfile, a text file listing the plugins to install (plugins.txt), and a Groovy script (userCreate.groovy) that automatically creates the first user account.

Fig 3. Dockerfile for a Custom Jenkins Container:

```dockerfile
FROM jenkins/jenkins:lts

# Credentials for the first admin user; read by userCreate.groovy at startup
ENV JENKINS_USER=admin \
    JENKINS_PASSWORD=password

COPY userCreate.groovy /usr/share/jenkins/ref/init.groovy.d/

# Install Jenkins plugins. install-plugins.sh has been removed from current
# jenkins/jenkins images; jenkins-plugin-cli is its replacement.
COPY plugins.txt /usr/share/jenkins/plugins.txt
RUN jenkins-plugin-cli --plugin-file /usr/share/jenkins/plugins.txt && \
    mkdir -p /usr/share/jenkins/ref/ && \
    echo lts > /usr/share/jenkins/ref/jenkins.install.UpgradeWizard.state && \
    echo lts > /usr/share/jenkins/ref/jenkins.install.InstallUtil.lastExecVersion
```

Fig 4. Text file of Jenkins Plugins to Install – plugins.txt:

```text
apache-httpcomponents-client-4-api
authentication-tokens
bouncycastle-api
branch-api
build-timeout
command-launcher
credentials
credentials-binding
display-url-api
docker-commons
docker-workflow
durable-task
favorite
ghprb
git
git-changelog
git-client
git-server
github
github-api
github-branch-source
github-issues
github-oauth
github-organization-folder
github-pr-coverage-status
github-pullrequest
gradle
gravatar
handlebars
icon-shim
javadoc
jquery-detached
jsch
junit
kerberos-sso
kubectl
ldap
mailer
mapdb-api
metrics
momentjs
oauth-credentials
oic-auth
pipeline-build-step
pipeline-github-lib
pipeline-githubnotify-step
pipeline-graph-analysis
pipeline-input-step
pipeline-milestone-step
pipeline-model-api
pipeline-model-declarative-agent
pipeline-model-definition
pipeline-model-extensions
pipeline-rest-api
pipeline-stage-step
pipeline-stage-tags-metadata
pipeline-stage-view
pipeline-utility-steps
plain-credentials
pubsub-light
rebuild
resource-disposer
scm-api
script-security
sse-gateway
ssh-agent
ssh-credentials
ssh-slaves
structs
timestamper
token-macro
url-auth-sso
variant
workflow-aggregator
workflow-api
workflow-basic-steps
workflow-cps
workflow-cps-global-lib
workflow-durable-task-step
workflow-job
workflow-multibranch
workflow-scm-step
workflow-step-api
workflow-support
ws-cleanup
```

Fig 5. Groovy Script to Configure User Account – userCreate.groovy:

```groovy
#!groovy

import jenkins.model.*
import hudson.security.*
import jenkins.security.s2m.AdminWhitelistRule

def instance = Jenkins.getInstance()
def env = System.getenv()

// Credentials come from the ENV lines in the Dockerfile
def user = env['JENKINS_USER']
def pass = env['JENKINS_PASSWORD']

// Groovy truth: null and empty strings are both false
if (!user || !pass) {
    println "Jenkins user variables not set (JENKINS_USER and JENKINS_PASSWORD)."
} else {
    println "Creating user " + user + "..."

    // Use Jenkins' own user database, with self sign-up disabled (false)
    def hudsonRealm = new HudsonPrivateSecurityRealm(false)
    hudsonRealm.createAccount(user, pass)
    instance.setSecurityRealm(hudsonRealm)

    // Logged-in users get full control of the instance
    def strategy = new FullControlOnceLoggedInAuthorizationStrategy()
    instance.setAuthorizationStrategy(strategy)
    instance.save()

    // Turn the kill switch off so agent-to-controller security stays enabled
    Jenkins.instance.getInjector().getInstance(AdminWhitelistRule.class).setMasterKillSwitch(false)

    println "User " + user + " was created"
}
```

We’ll build the Docker image from the folder containing these three files and tag it jenkins:lts with the following command.

```shell
$ docker build -t jenkins:lts .
```

The build will take a minute or two. Once we have our image, we’ll push it to our private JFrog repository. The first step is to log in to the JFrog repository with Docker, using the following command. Replace <repository_name> with the name of your repository. Note the -docker suffix, which addresses the Docker registry. After you enter the command, Docker will prompt for your JFrog username and password.

```shell
$ docker login <repository_name>-docker.jfrog.io
```

Once we’ve authenticated to our repository, we can tag the image with the name of our repository and push it up. Again, replace <repository_name> with the name of your repository.

```shell
$ docker tag jenkins:lts <repository_name>-docker.jfrog.io/jenkins:lts
$ docker push <repository_name>-docker.jfrog.io/jenkins:lts
```

Once the image has uploaded successfully, you’re ready to move on to the next step.
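If you rebuild the image often, you can wrap the four commands above in a small Bash script. This is a convenience sketch, not part of the original setup. `build_and_push` and `registry_image` are hypothetical helper names, and the image name and tag default to the `jenkins:lts` used above. Run it from the folder containing the Dockerfile; `DRY_RUN=1` prints the docker commands instead of executing them.

```shell
#!/usr/bin/env bash
# Sketch: build the custom Jenkins image and push it to a JFrog Docker
# registry in one step. Helper names are hypothetical.

# Print the command when DRY_RUN=1; otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# registry_image REPO IMAGE TAG -> fully qualified JFrog image reference
registry_image() {
  printf '%s-docker.jfrog.io/%s:%s\n' "$1" "$2" "$3"
}

# build_and_push REPO [IMAGE] [TAG]  (IMAGE defaults to jenkins, TAG to lts)
build_and_push() {
  local repo="$1" image="${2:-jenkins}" tag="${3:-lts}" remote
  remote="$(registry_image "$repo" "$image" "$tag")"
  run docker build -t "${image}:${tag}" .
  run docker login "${repo}-docker.jfrog.io"   # prompts for JFrog credentials
  run docker tag "${image}:${tag}" "$remote"
  run docker push "$remote"
}
```

For example, `build_and_push <repository_name>` runs the same build, login, tag, and push sequence shown above.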

Setting Up The Environment

For this example, I provisioned a pair of virtual machines in the cloud. I’m using Google Cloud, but you could use machines from Azure, AWS, or even a local workstation. You’ll want the instances to be publicly accessible if you’re going to trigger a build from GitHub.

If you’re new to Platform9 and need a hand setting up virtual machines or creating a new cluster, the tutorials listed below are invaluable.

- Create a Single Node Cluster on VirtualBox VM on Mac OS

- Getting Started with PMKFT on a Windows Machine

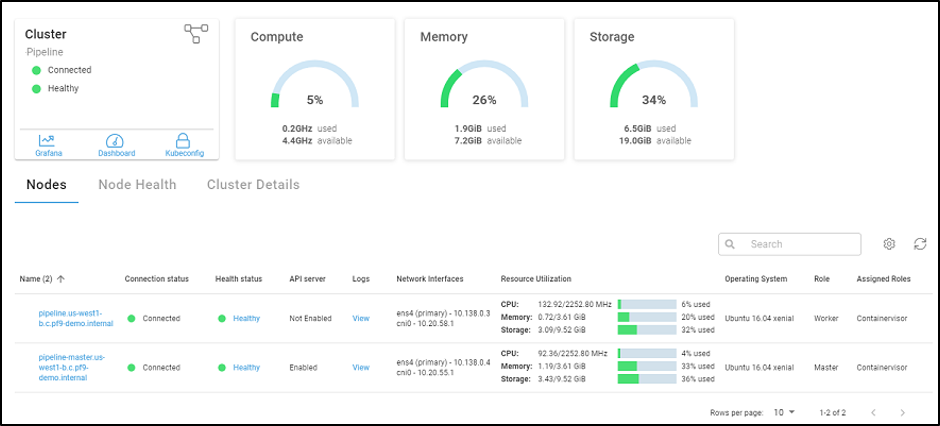

I created a cluster named “Pipeline” and added my new nodes to this cluster: one node as a master and the other as a worker.

Fig 6. The Pipeline Cluster – Platform9 Dashboard

Once your cluster is running, click the Kubeconfig icon to download the config for kubectl. Select the Token option, and then download the config. Mine was named Pipeline.yaml. You’ll need this file both to connect to the Kubernetes Dashboard through Platform9 and to run kubectl on the cluster.

On the cluster itself, set the KUBECONFIG environment variable to point at this file. I downloaded it to my user’s Downloads folder and set the variable as shown below:

```shell
$ export KUBECONFIG=~/Downloads/Pipeline.yaml
```

Next, we need to add the connection information for our JFrog registry. We’ll store it as a Kubernetes Secret called regcred. The easiest way to set this up is directly on the master instance. You’ll need the address of the registry, your username, and your password. Replace the placeholder text in the following command with your information.

```shell
$ kubectl create secret docker-registry regcred \
    --docker-server=https://<repository_name>-docker.jfrog.io \
    --docker-username=XXXXXX \
    --docker-password=XXXXXXXX
```
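Before deploying, you may want to confirm that the Secret actually refers to the registry your image lives in. The sketch below decodes the Secret's `.dockerconfigjson` data using the jsonpath and `base64 --decode` approach from the Kubernetes documentation. It then runs a plain substring check for the registry host, which is crude but catches a mistyped server. The helper names are hypothetical, and the sketch assumes the Secret is in the default namespace.

```shell
#!/usr/bin/env bash
# Sketch: check that the regcred Secret holds credentials for the registry
# host an image will be pulled from. Assumes KUBECONFIG is set.

# Print the registry host part of an image reference (text before first "/").
registry_host() {
  printf '%s\n' "${1%%/*}"
}

# Read a decoded .dockerconfigjson on stdin; succeed if HOST appears in it
# (fixed-string match, so the dots in the hostname are literal).
config_mentions_host() {
  grep -qF -- "$1"
}

# check_regcred IMAGE
check_regcred() {
  local host
  host="$(registry_host "$1")"
  kubectl get secret regcred --output="jsonpath={.data.\.dockerconfigjson}" \
    | base64 --decode \
    | config_mentions_host "$host"
}
```

For example, `check_regcred <repository_name>-docker.jfrog.io/jenkins:lts && echo ok` should print `ok` if the Secret was created with the matching server.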

We’re now ready to deploy our Jenkins pod to our cluster. From the Pods, Deployments, Services tab in my Platform9 dashboard, I selected the Deployments section, and clicked on + Add Deployment. I selected my cluster (Pipeline), the default namespace, and then used the text below as my Resource YAML. You’ll need to update <repository_name> with your repository.

```yaml
# apps/v1 replaces extensions/v1beta1, which Kubernetes 1.16+ no longer
# serves for Deployments
apiVersion: apps/v1
kind: Deployment
metadata:
  name: jenkins-deployment
spec:
  replicas: 1
  # apps/v1 requires a selector that matches the pod template's labels
  selector:
    matchLabels:
      app: jenkins
  template:
    metadata:
      labels:
        app: jenkins
    spec:
      containers:
        - name: jenkins
          image: <repository_name>-docker.jfrog.io/jenkins:lts
          ports:
            - containerPort: 8080
      # Credentials for pulling from the private JFrog registry
      imagePullSecrets:
        - name: regcred
```

Once the pod is deployed, we need to expose it to the outside world. From the Pods, Deployments, Services tab in my Platform9 dashboard, I selected the Services section and clicked + Add Service. As before, I picked my cluster (Pipeline) and the default namespace, and then used the text below as my Resource YAML.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: jenkins-service
spec:
  selector:
    app: jenkins
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
  type: NodePort
```

Once Jenkins is deployed and exposed as a service, you can connect to the Kubernetes dashboard for the cluster to find the port that gives access to the server. You’ll need the Kubeconfig for this step as well.

Select jenkins-service in the Services section of the Kubernetes dashboard and look for the connection information. It includes the cluster’s internal IP address and the exposed port number. The port is assigned from the NodePort range, 30000 to 32767. Mine is shown below. If you’re using cloud-based instances, you may need to open this port in the firewall before you can connect. If this were more than a POC, we’d also set up a load balancer and configure a ‘prettier’ routing solution.

Fig 7. Connection information for the Jenkins Service

I can now connect to the Jenkins service by combining the public IP address of my master node with the port number (30315).
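If you prefer the command line to the dashboard, you can sketch the same steps with kubectl. This sketch assumes:

- KUBECONFIG is set as shown earlier.
- You saved the two manifests above as `jenkins-deployment.yaml` and `jenkins-service.yaml` (hypothetical file names).

The node's public IP is an input you supply, and the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch of a kubectl alternative to the dashboard steps. File names and
# helper names are hypothetical; NODE_IP is supplied by you.

# Succeed if $1 is a port in Kubernetes' default NodePort range.
is_nodeport() {
  [[ "$1" =~ ^[1-9][0-9]*$ ]] && (( $1 >= 30000 && $1 <= 32767 ))
}

# Print the browser URL for Jenkins given a node IP and port.
jenkins_url() {
  printf 'http://%s:%s/\n' "$1" "$2"
}

# deploy_jenkins NODE_IP: apply both manifests, wait for the pod, print URL.
deploy_jenkins() {
  local node_ip="$1" port
  kubectl apply -f jenkins-deployment.yaml -f jenkins-service.yaml
  kubectl rollout status deployment/jenkins-deployment --timeout=300s
  # Kubernetes assigns the NodePort; read it back from the Service.
  port="$(kubectl get service jenkins-service \
    --output=jsonpath='{.spec.ports[0].nodePort}')"
  if ! is_nodeport "$port"; then
    echo "unexpected NodePort: '${port}'" >&2
    return 1
  fi
  jenkins_url "$node_ip" "$port"
}
```

Running `deploy_jenkins <public IP>` should print a URL of the same form as the one used above. On cloud instances, the firewall rule for that port is still required.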

Triggering a Build

Navigate to the Jenkins instance in your browser to verify that it is accessible, and to begin setting up the pipeline. Log in with the credentials you specified in the Dockerfile (admin:password) and then click on the link to create new jobs. Enter a name for your project and then select Multibranch Pipeline as the type. Click OK to continue.

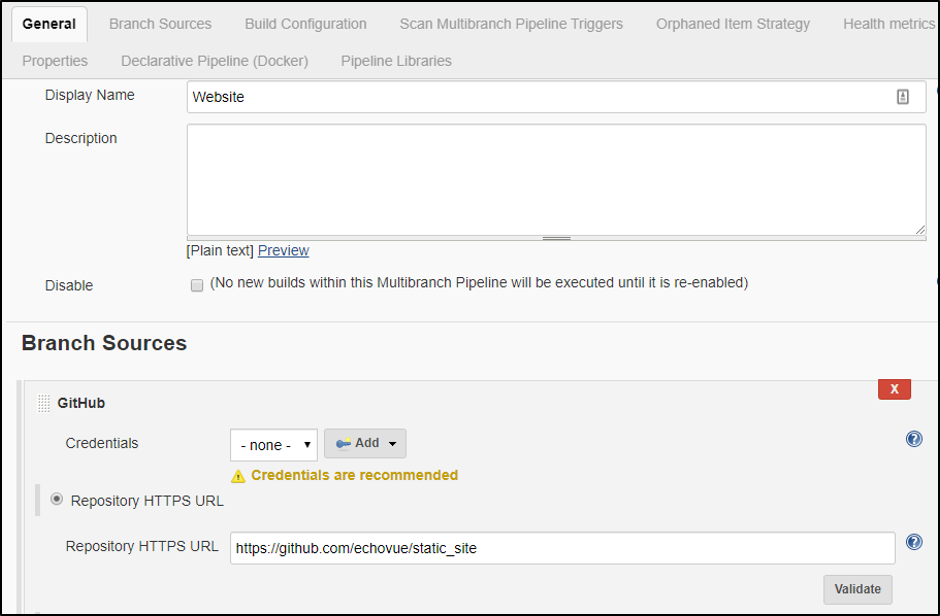

Under the General tab, add a display name, and then under Branch Sources, click on Add source. I’ll be using a simple website project I set up in GitHub, so I selected GitHub and then added the URL for my repository. If you want to check it out, you can view it here.

Fig 8. Configuring the Project in Jenkins

Finally, make sure the Build Configuration mode is set to by Jenkinsfile. Then, under Scan Multibranch Pipeline Triggers, check the Periodically if not otherwise run option. I set mine to a 30-minute interval. Clicking Save at the bottom of the page saves the project and starts scanning your repository for changes.

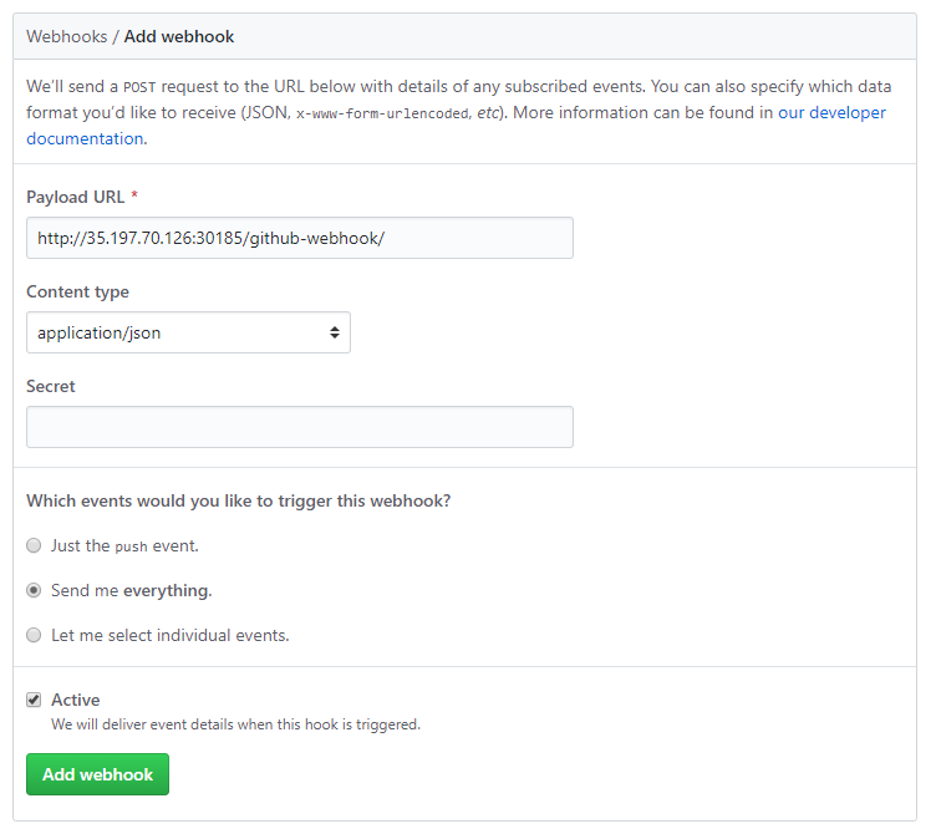

The next step is to create a webhook that will trigger a Jenkins build whenever our project is changed. From your project in GitHub, click on Settings, then Webhooks, and then click the Add webhook button.

The payload URL is the IP address and port combination from the previous section, with /github-webhook/ appended (for example, http://<public IP>:<port>/github-webhook/). I set the Content type to application/json and chose the Send me everything option.

Fig 9. Adding the Webhook to the GitHub Project
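You can also create the webhook through the GitHub REST API (`POST /repos/{owner}/{repo}/hooks`) instead of the web UI. In this sketch, the `"*"` event list corresponds to Send me everything, and `content_type: json` corresponds to application/json. You supply the owner, the repository, and `GITHUB_TOKEN`, which must be a token allowed to manage the repository's webhooks. The helper names are hypothetical, and `DRY_RUN=1` prints the JSON payload instead of sending it.

```shell
#!/usr/bin/env bash
# Sketch: create the Jenkins webhook via the GitHub REST API.
# OWNER, REPO and GITHUB_TOKEN are assumptions you supply.

# webhook_url JENKINS_BASE -> Jenkins' GitHub webhook endpoint
webhook_url() {
  printf '%s/github-webhook/\n' "${1%/}"   # tolerate a trailing slash
}

# webhook_payload URL -> JSON body: all events ("*"), JSON content type
webhook_payload() {
  printf '{"name":"web","active":true,"events":["*"],"config":{"url":"%s","content_type":"json"}}\n' "$1"
}

# create_webhook OWNER REPO JENKINS_BASE
create_webhook() {
  local owner="$1" repo="$2" jenkins_base="$3" payload
  payload="$(webhook_payload "$(webhook_url "$jenkins_base")")"
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$payload"
    return 0
  fi
  curl -fsS -X POST \
    -H "Accept: application/vnd.github+json" \
    -H "Authorization: Bearer ${GITHUB_TOKEN:?set GITHUB_TOKEN}" \
    "https://api.github.com/repos/${owner}/${repo}/hooks" \
    -d "$payload"
}
```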

Managing the Pipeline with the Jenkinsfile

Each project defines its own build pipeline. By default, the configuration lives in a file named Jenkinsfile at the root of the project. The file specifies how Jenkins should construct the pipeline and details each step required to build, test, deploy, and monitor the application.

One of the best resources to use when building a new pipeline is the Jenkins website itself. Getting started with pipelines explains each of the steps and describes how to build a pipeline that meets your needs.
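Jenkins can also check a Declarative Jenkinsfile for syntax errors before you push it, using the linter endpoint described in the Pipeline development tools documentation. The sketch below assumes `JENKINS_URL`, `JENKINS_USER`, and `JENKINS_API_TOKEN` are exported. Authenticating with an API token rather than the password avoids having to request a CSRF crumb first. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: validate a Declarative Jenkinsfile against the Jenkins controller.
# JENKINS_URL, JENKINS_USER and JENKINS_API_TOKEN are assumed to be exported.

# Print the linter URL for a Jenkins base URL (trailing slash optional).
lint_endpoint() {
  printf '%s/pipeline-model-converter/validate\n' "${1%/}"
}

# lint_jenkinsfile [FILE]  (defaults to ./Jenkinsfile)
lint_jenkinsfile() {
  local file="${1:-Jenkinsfile}"
  if [ ! -f "$file" ]; then
    echo "no such file: ${file}" >&2
    return 1
  fi
  # "<file" sends the file's contents as the form field value
  curl -fsS -X POST \
    --user "${JENKINS_USER:?}:${JENKINS_API_TOKEN:?}" \
    -F "jenkinsfile=<${file}" \
    "$(lint_endpoint "${JENKINS_URL:?}")"
}
```

Running `lint_jenkinsfile` from the project root prints the linter's response.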

Learning More

If you’ve worked through this tutorial, you should now have a cluster that is managed through Platform9’s Managed Kubernetes. You should also have a private Docker repository with JFrog that provides a secure place to store your customized Jenkins image, and other images for your cluster. Finally, you have a Jenkins instance that serves as the backbone for your CI/CD process.

To learn more about each of these products, I’d recommend the resources below: