What is Benchmarking?

Benchmarking is the process of measuring the performance of a software system. It supports capacity analysis and helps determine the best tool for a specific use case. OpenStack clouds in particular need benchmarking because of their multi-service architecture. Benchmarking an OpenStack cloud can help answer questions such as how efficiently a given set of servers performs.

Need for Benchmarking-as-a-Service (BaaS)

OpenStack benchmarking tools are difficult to set up: the user has to dig into documentation and modify configuration files just to get up and running. Capacity analysis and performance measurement services should instead be extremely easy to use and should not require users to read dozens of manuals. The simpler the benchmarking procedure, the easier it is for cloud operators to compare performance metrics and scale effectively. To address this need for simpler OpenStack benchmarking, we developed a distributed benchmarking framework.

The service, which is used through a web UI, makes it extremely easy to benchmark cloud deployments. It builds on OpenStack Rally to create a benchmark-as-a-service (BaaS) system. Multiple users can use the system in parallel to benchmark multiple OpenStack deployments.

Instead of reinventing the wheel, we decided to build upon an existing benchmarking tool. We containerized Rally and used it in our service to benchmark cloud deployments. To deploy the service, we chose Kubernetes, specifically Platform9 Managed Kubernetes. Kubernetes design principles enforce a distributed, containerized application paradigm, which aligns nicely with our objectives.

This post describes a summer internship project focused on BaaS. Platform9 submitted a talk about building this service, which has been selected for the OpenStack Summit 2017 in Sydney.

Rally – OpenStack’s Benchmarking Tool

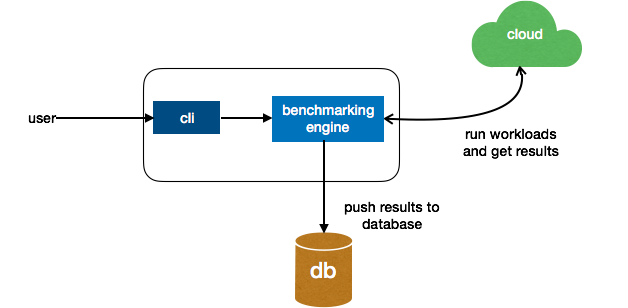

Let’s look at how OpenStack Rally works at a high level before we turn to containerizing its services.

Rally is a benchmarking CLI tool for OpenStack. You can attach cloud deployments to Rally and then run benchmarking tasks against them. Rally has two main components: the CLI and the benchmarking engine. The user submits benchmarking tasks via the CLI, which delegates them to the benchmarking engine to run. Rally then benchmarks the cloud deployment and stores the results in a database. A benchmarking task is a JSON file detailing which benchmarks you want to run against your cloud.

Benchmarking-as-a-Service and Containerized BaaS

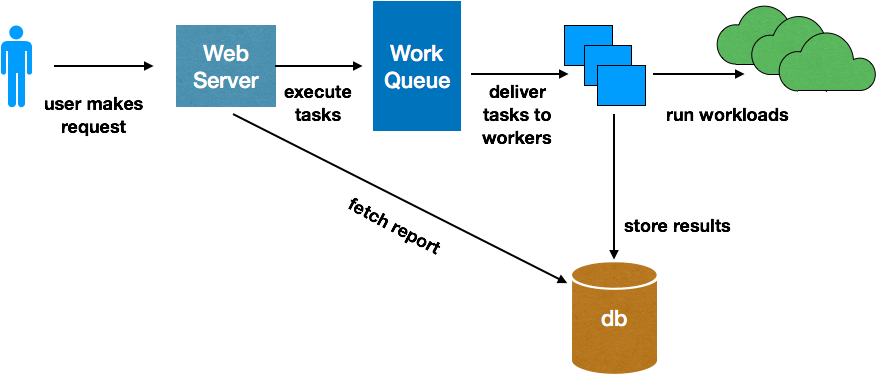

In the high-level design, a web server fronts all user requests. A work queue accepts jobs and pushes them to workers, which run the actual workloads on an OpenStack deployment and store the results in the database. Once a task is done, the web server fetches the benchmarking report from the database.

The task processing unit uses a publisher and subscriber model where the back-end (web server) acts as the publisher and the workers act as the subscribers.
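The publisher and worker roles described above can be sketched with Python's standard library. This is only an in-process stand-in, assuming a single shared queue with competing consumers; the real service uses a message broker between separate containers. The function name `run_jobs`, the job tuple layout, and the sample job names are hypothetical.

```python
import queue
import threading


def run_jobs(jobs, num_workers=2):
    """Publish jobs to a shared queue and let worker threads consume them."""
    work_queue = queue.Queue()
    results = {}  # stand-in for the results database
    lock = threading.Lock()

    def worker():
        while True:
            job = work_queue.get()
            if job is None:  # sentinel: no more work for this worker
                work_queue.task_done()
                break
            job_id, deployment, task_name = job
            # A real worker would run the benchmark here; this sketch
            # only records a placeholder result.
            with lock:
                results[job_id] = f"ran {task_name} on {deployment}"
            work_queue.task_done()

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for job in jobs:  # the back-end acts as the publisher
        work_queue.put(job)
    for _ in threads:  # one sentinel per worker so every thread exits
        work_queue.put(None)
    work_queue.join()
    for t in threads:
        t.join()
    return results


out = run_jobs([(1, "cloud-a", "create-users"), (2, "cloud-b", "boot-servers")])
```

Each job is picked up by exactly one worker, which is what lets the service add workers to handle more users or deployments in parallel.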

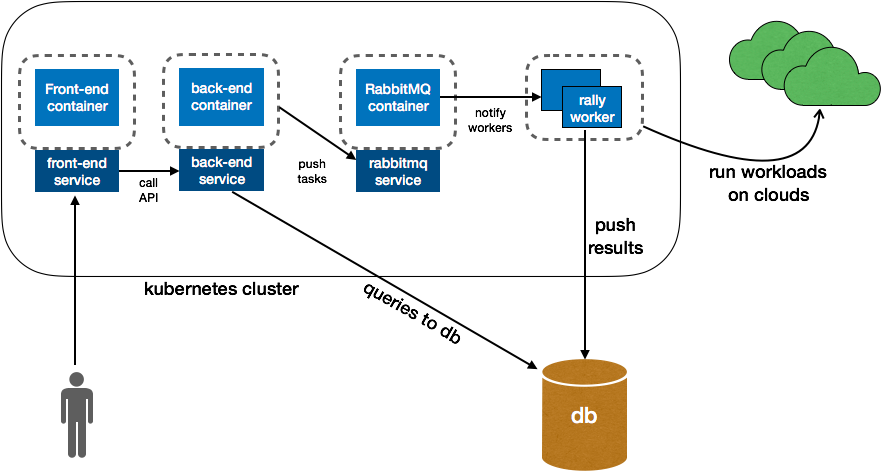

We containerized every component of the service and deployed it as a Kubernetes App. This lets the service scale and makes it distributed in nature. Here is the Kubernetes App architecture.

The web server is split into front-end and back-end components. We use RabbitMQ as the task broker for the message queue. The workers are containers that have Rally installed.

Every component is enclosed in a pod and exposes a service for communication with other components. The front-end-service also acts as a reverse proxy, fronting all the user requests and making appropriate API calls.

When the user gives the service a task to run on a particular cloud deployment, the back-end server pushes this task to the task broker as a job. The task broker then delegates this job to a worker which executes it and returns.
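As a rough illustration of the last step, a worker could turn a job into a Rally CLI invocation along these lines. This is a sketch, not the service's actual worker code: the helper name `build_rally_command` and the task file path are hypothetical, and it assumes Rally's `rally task start` command with its `--task` and `--deployment` options.

```python
import shlex


def build_rally_command(task_file, deployment):
    """Return the argv list for starting a Rally task against a deployment."""
    return [
        "rally", "task", "start",
        "--task", task_file,          # path to the JSON task file (hypothetical)
        "--deployment", deployment,   # name of a deployment registered in Rally
    ]


cmd = build_rally_command("/tmp/task.json", "neutron-cinder-du")
print(shlex.join(cmd))
```

Inside a worker container, the list could then be passed to `subprocess.run(cmd, check=True)`; building the command as a list avoids shell quoting problems with user-supplied deployment names.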

Implementation Details

The front-end container is an nginx server that also acts as a reverse proxy to the back-end. The back-end container is a Python Flask app that exposes a set of APIs. We use Celery, a Python task queue framework, in conjunction with RabbitMQ as the task broker. The database is a MySQL database that runs outside the Kubernetes cluster. All configuration is handled using Kubernetes Secrets.
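One common way for a container to consume Kubernetes Secrets is through environment variables. The sketch below assumes that approach; the post does not say whether the service uses environment variables or mounted files, and every variable name here (`BROKER_URL`, `DB_HOST`, and so on) is hypothetical.

```python
import os


def load_config(env=None):
    """Build a settings dict from environment variables (hypothetical names)."""
    env = os.environ if env is None else env
    required = ["BROKER_URL", "DB_HOST", "DB_USER", "DB_PASSWORD"]
    missing = [key for key in required if key not in env]
    if missing:
        # Fail fast so a misconfigured pod does not start half-working.
        raise KeyError("missing settings: " + ", ".join(missing))
    return {
        "broker_url": env["BROKER_URL"],
        "db_host": env["DB_HOST"],
        "db_port": int(env.get("DB_PORT", "3306")),  # MySQL's default port
        "db_user": env["DB_USER"],
        "db_password": env["DB_PASSWORD"],
    }


# Example with an explicit mapping instead of the real process environment.
config = load_config({
    "BROKER_URL": "amqp://guest:guest@rabbitmq:5672//",
    "DB_HOST": "mysql.example.internal",
    "DB_USER": "baas",
    "DB_PASSWORD": "change-me",
})
```

Keeping credentials out of the container image this way means the same image can be pointed at a different broker or database without a rebuild.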

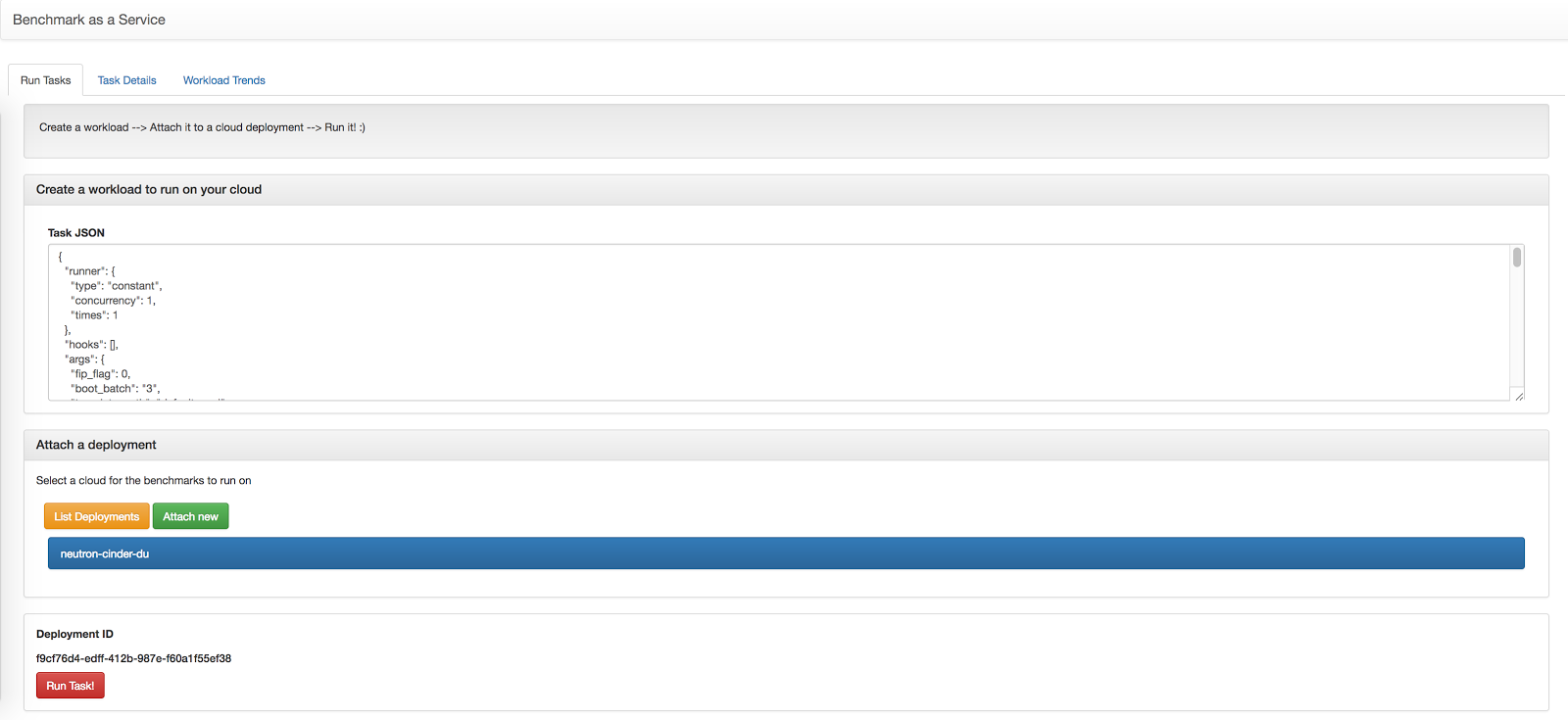

The following screenshot shows a benchmark, or workload, specified in JSON, to be run on a cloud named “neutron-cinder-du.” In this particular case, the task that was run creates a number of Keystone users.

The JSON file for this test is shown below:

    {
        "runner": {
            "type": "constant",
            "concurrency": 1,
            "times": 2
        },
        "hooks": [],
        "args": {},
        "sla": {},
        "context": {}
    }
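The JSON above is the body of a single workload: the `constant` runner executes the scenario `times` times with `concurrency` iterations in flight at once, so here it runs twice, one after the other. In a standalone Rally task file, such a body is typically listed under a scenario name. The sketch below shows that wrapping; the scenario name `KeystoneBasic.create_user` is an assumption based on the description of the task, since the post does not name the scenario, and `wrap_task` is a hypothetical helper.

```python
import json

# The workload body exactly as shown in the post.
scenario_body = {
    "runner": {"type": "constant", "concurrency": 1, "times": 2},
    "hooks": [],
    "args": {},
    "sla": {},
    "context": {},
}


def wrap_task(scenario_name, body):
    """Wrap one workload body under a scenario name, as a task file would."""
    return {scenario_name: [body]}


# Assumed scenario name; the original post does not state it.
task = wrap_task("KeystoneBasic.create_user", scenario_body)
task_text = json.dumps(task, indent=2)
print(task_text)
```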

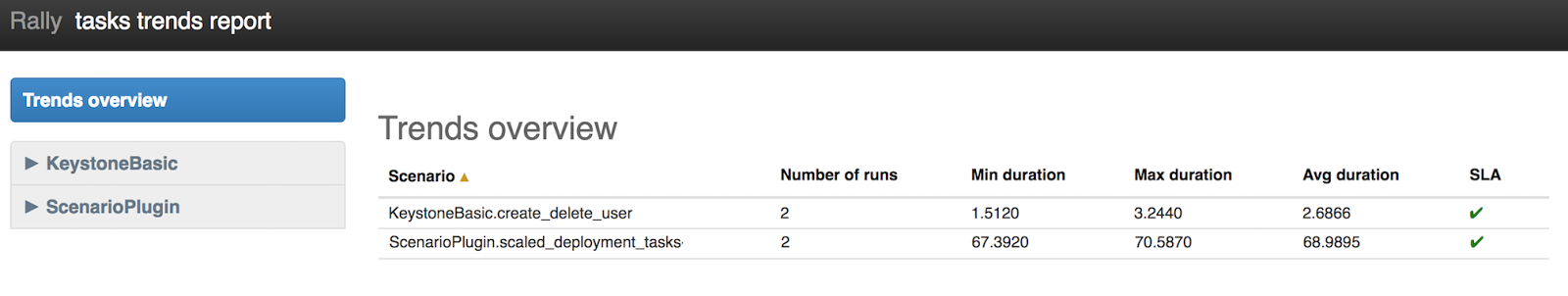

After the task runs, the results become available on the “Trends Overview” page. As shown below, this task has been run twice, with an average duration of approximately 2.66 seconds.

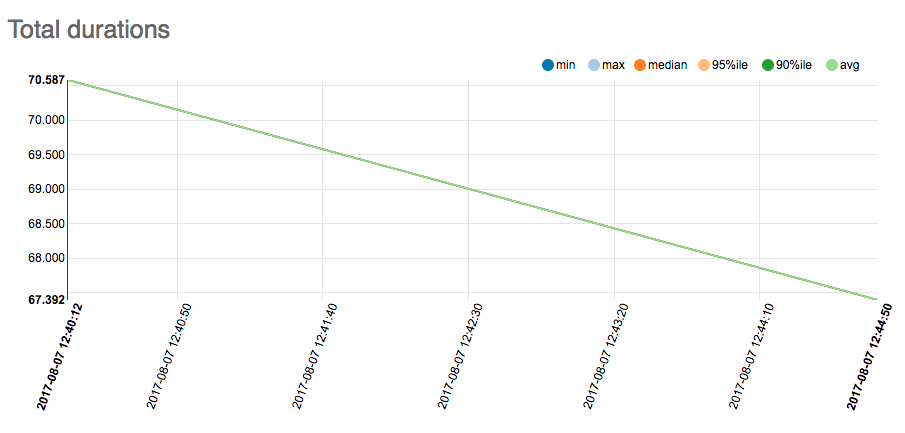

As shown above, there is a second task called “Scaled Deployment.” This task simultaneously creates a number of OpenStack Nova instances with attached Cinder volumes. On this cloud, it has taken an average of 70.587 seconds on the test run at 12:40:12 and 67.392 seconds on the test run at 12:44:50. Both tests were run on 2017-08-07.

The graph above charts trend lines across multiple test runs and captures the minimum, maximum, median, 90th percentile, 95th percentile, and overall average for each benchmark.
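To make those summary statistics concrete, here is a small sketch of how they could be computed from a list of per-iteration durations. It assumes linear interpolation between neighbouring samples for the percentiles, which may differ from the method Rally itself uses, and the sample durations are made-up illustration values, not measurements from this project.

```python
import statistics


def percentile(sorted_values, pct):
    """Percentile of an already sorted list, using linear interpolation."""
    if not sorted_values:
        raise ValueError("need at least one value")
    rank = (len(sorted_values) - 1) * pct / 100.0
    lower = int(rank)
    upper = min(lower + 1, len(sorted_values) - 1)
    fraction = rank - lower
    return sorted_values[lower] + (sorted_values[upper] - sorted_values[lower]) * fraction


def summarize(durations):
    """Return the statistics charted for a benchmark run."""
    ordered = sorted(durations)
    return {
        "min": ordered[0],
        "max": ordered[-1],
        "median": statistics.median(ordered),
        "p90": percentile(ordered, 90),
        "p95": percentile(ordered, 95),
        "avg": statistics.mean(ordered),
    }


# Made-up durations in seconds, for illustration only.
summary = summarize([1.0, 2.0, 3.0, 4.0, 5.0])
```

Tracking the 90th and 95th percentiles alongside the average helps surface slow outlier iterations that an average alone would hide.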

Future work

The service is currently in a fledgling state, but we’re looking at ways to use the infrastructure for repeatable scale testing. There is also an opportunity to make this a cross-cloud benchmarking service. As mentioned previously, this project used Platform9 Managed Kubernetes to deploy a containerized version of OpenStack’s Rally project.

—

This project was carried out jointly by Kaustubh Gondhalekar (Software Engineering Intern) and Kaustubh Phatak (Software Engineer) at Platform9 during Summer 2017.