Prometheus Installed Using Helm Does Not Fire PersistentVolumeClaim Full Alerts

Problem

Alerts are not fired in Prometheus when PersistentVolumeClaims fill up or exceed the usage thresholds set for the alerts.

Environment

- Platform9 Managed Kubernetes - v5.5 and Higher

Cause

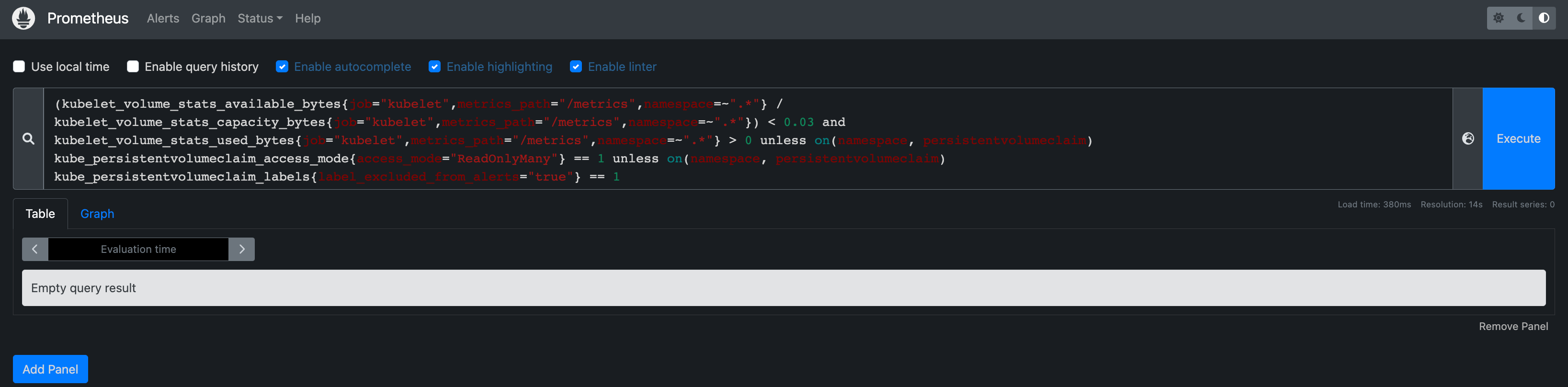

The query used in the upstream Prometheus rule to trigger the KubePersistentVolumeFillingUp alert returns an empty query result.

    (
      kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}
        /
      kubelet_volume_stats_capacity_bytes{job="kubelet",namespace=~".*"}
    ) < 0.15
    and
    kubelet_volume_stats_used_bytes{job="kubelet",namespace=~".*"} > 0
    and
    predict_linear(kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}[6h], 4 * 24 * 3600) > 0
    unless on(namespace, persistentvolumeclaim)
    kube_persistentvolumeclaim_access_mode{access_mode="ReadOnlyMany"} == 1
    unless on(namespace, persistentvolumeclaim)
    kube_persistentvolumeclaim_labels{label_excluded_from_alerts="true"} == 1

Rules used in the prometheusrule Kubernetes object:

    - alert: KubePersistentVolumeInodesFillingUp
      annotations:
        description: The PersistentVolume claimed by {{ $labels.persistentvolumeclaim }} in Namespace {{ $labels.namespace }} only has {{ $value | humanizePercentage }} free inodes.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/kubernetes/kubepersistentvolumeinodesfillingup
        summary: PersistentVolumeInodes are filling up.
      expr: |-
        (
          kubelet_volume_stats_inodes_free{job="kubelet", namespace=~".*", metrics_path="/metrics"}
            /
          kubelet_volume_stats_inodes{job="kubelet", namespace=~".*", metrics_path="/metrics"}
        ) < 0.03
        and
        kubelet_volume_stats_inodes_used{job="kubelet", namespace=~".*", metrics_path="/metrics"} > 0
        unless on(namespace, persistentvolumeclaim)
        kube_persistentvolumeclaim_access_mode{ access_mode="ReadOnlyMany"} == 1
        unless on(namespace, persistentvolumeclaim)
        kube_persistentvolumeclaim_labels{label_excluded_from_alerts="true"} == 1
      for: 1m
      labels:
        severity: critical

Resolution

This issue is resolved in the PMK-5.9 release. It is tracked in the internal Jira as PMK-5701.

Workaround

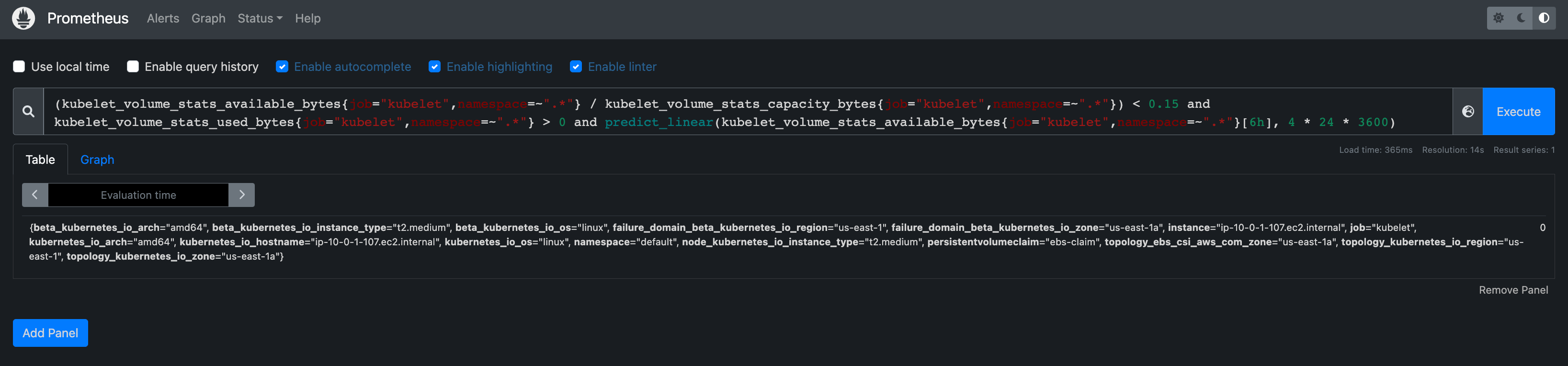

Modify the query in the prometheusrule Kubernetes object so that it is compatible with the Platform9 Managed Kubernetes environment, as shown below.

    (
      kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}
        /
      kubelet_volume_stats_capacity_bytes{job="kubelet",namespace=~".*"}
    ) < 0.15
    and
    kubelet_volume_stats_used_bytes{job="kubelet",namespace=~".*"} > 0
    and
    predict_linear(kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}[6h], 4 * 24 * 3600)
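One way to confirm that the modified query returns data is to send it to the Prometheus HTTP API instant-query endpoint (/api/v1/query) and count the series that come back. The script below is a minimal sketch, not part of the original procedure. PROM_URL is an assumption (for example, a local port-forward to the Prometheus service), and the series count is a rough text-based heuristic rather than a real JSON parse.

```shell
#!/usr/bin/env bash
# Sketch: run the workaround expression against the Prometheus HTTP API and
# count the series it returns. PROM_URL is an assumption and must point at a
# reachable Prometheus endpoint; it is not defined anywhere in this article.

# The workaround expression from this article, on a single line.
EXPR='(kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"} / kubelet_volume_stats_capacity_bytes{job="kubelet",namespace=~".*"}) < 0.15 and kubelet_volume_stats_used_bytes{job="kubelet",namespace=~".*"} > 0 and predict_linear(kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}[6h], 4 * 24 * 3600)'

# Count result series in an /api/v1/query response read from stdin.
# Heuristic: each series in an instant-vector result carries one "metric" object.
count_series() {
  { grep -o '"metric":' || true; } | wc -l | tr -d ' '
}

# Send one expression to the instant-query endpoint, URL-encoding it.
run_query() {
  curl -sS -G "${PROM_URL}/api/v1/query" --data-urlencode "query=$1"
}

# Only contact Prometheus when PROM_URL is set, for example:
#   PROM_URL=http://localhost:9090 bash check-pvc-query.sh
if [ -n "${PROM_URL:-}" ]; then
  echo "series returned: $(run_query "$EXPR" | count_series)"
fi
```

A count of 0 means the expression still yields an empty result. The same run_query function can be called with each clause of the expression on its own to see which part returns no series.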

Once the query returns the expected result, edit the existing Prometheus rule to use the working query with the kubectl command:

    # kubectl -n <namespace> edit prometheusrule
    --
    - alert: KubePersistentVolumeFillingUp
      annotations:
        description: Based on recent sampling, the PersistentVolume claimed by {{ $labels.persistentvolumeclaim }} in Namespace {{ $labels.namespace }} is expected to fill up within four days. Currently {{ $value | humanizePercentage }} is available.
        runbook_url: https://runbooks.prometheus-operator.dev/runbooks/kubernetes/kubepersistentvolumefillingup
        summary: PersistentVolume is filling up.
      expr: |-
        (
          kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}
            /
          kubelet_volume_stats_capacity_bytes{job="kubelet",namespace=~".*"}
        ) < 0.15
        and
        kubelet_volume_stats_used_bytes{job="kubelet",namespace=~".*"} > 0
        and
        predict_linear(kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}[6h], 4 * 24 * 3600)
      for: 1m
      labels:
        severity: warning

Once the rule is edited, Prometheus should automatically detect the change and fire the alerts if PVCs have exceeded the space limits.

Additional Information

The suggested workaround will not persist across upgrades unless the same change is also made in the Helm chart.
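As an illustration of carrying the change in the Helm chart, the sketch below writes a values override file and prints a matching upgrade command. It assumes the kube-prometheus-stack chart, whose values include defaultRules.disabled and additionalPrometheusRulesMap. If your installation uses a different chart, these keys may not exist and the chart's own values reference should be checked. The file name, the group name pvc-filling-up-override, and the release, chart, and namespace placeholders are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only. Assumes the kube-prometheus-stack chart: the keys
# defaultRules.disabled and additionalPrometheusRulesMap come from that chart
# and may not apply to the chart used in your environment.

# Hypothetical file name for the override values.
VALUES_FILE="${VALUES_FILE:-pvc-rule-override.yaml}"

# Disable the bundled alert and add a replacement that uses the workaround query.
cat > "$VALUES_FILE" <<'EOF'
defaultRules:
  disabled:
    KubePersistentVolumeFillingUp: true
additionalPrometheusRulesMap:
  pvc-filling-up-override:
    groups:
      - name: pvc-filling-up-override
        rules:
          - alert: KubePersistentVolumeFillingUp
            annotations:
              summary: PersistentVolume is filling up.
            expr: |-
              (
                kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}
                  /
                kubelet_volume_stats_capacity_bytes{job="kubelet",namespace=~".*"}
              ) < 0.15
              and
              kubelet_volume_stats_used_bytes{job="kubelet",namespace=~".*"} > 0
              and
              predict_linear(kubelet_volume_stats_available_bytes{job="kubelet",namespace=~".*"}[6h], 4 * 24 * 3600)
            for: 1m
            labels:
              severity: warning
EOF

# Print the upgrade command rather than running it; <release>, <chart> and
# <namespace> are placeholders to replace with your own values.
echo "helm upgrade <release> <chart> -n <namespace> --reuse-values -f $VALUES_FILE"
```

Keeping the override in a values file means the same file can be passed again on later upgrades, so the modified rule is reapplied instead of being replaced by the chart default.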