Imagine you are a developer who is a fresh college graduate. You have landed a dream job at an amazing company that values talent and merit. You are getting a chance to work on the latest technologies like Kubernetes, Cloud and Edge stack and develop solutions for end users.

You take a few months to settle in, get familiar with the workflow and start some tasks on internal clusters. If your company is good, this process will be a breeze, because they have documented everything from the get-go so that developers don’t spend unnecessary time on things unrelated to their task. In my case, it was a managed private and public cloud company that promises 99.98% uptime – so you can imagine how high the stakes were.

A few months later, one of our customers requested a feature to import external clusters into our management plane. Thanks to some brilliant and meticulous engineers, whom I have the pleasure of working with and asking for guidance, this was delivered within a release and we added support for EKS, AKS and GCP. The idea was to just add these as cloud providers and then import external clusters. Sounds easy, right? Here’s where you’re wrong.

Behind the scenes, what AWS/EKS does will surprise you. They ensure that people developing and working with these clusters have the hardest time possible and spend their time on unrelated activities. This blog is just the gist of what I faced for the better part of a month while working on a simple deployment for an external cluster.

For starters, the process of creating a cluster using EKS is pretty *ahem* straightforward. You provide the version and configuration, jump from one page to another creating security groups, access, roles and god knows what else, and as an added bonus keep discovering permissions that your user does not have. You dance around requesting DevOps to grant you these, and then somehow you get a cluster up and running. Granted, the policies are managed by our DevOps engineers, but remember that they are managing 10 teams at the same time, and granting blanket access to everyone would be a disaster. But congrats, you’ve scaled the first peak:

Mind you, this is just for a simple cluster that I want to test a ninety-line YAML on. There is no feature where you can just deploy a simple one-click cluster for testing purposes. Why do I need a PhD in cloud technologies to do this?

Now comes the fun part. Usually, AWS users have an access key ID and a secret access key, and the secret is displayed only once, when you create the access key. It’s a good thing if you stashed it somewhere carefully, but me, I’m just a simple man trying to make my way in the universe (Boba Fett). So I took a different path, which didn’t take this into account. More on this later.

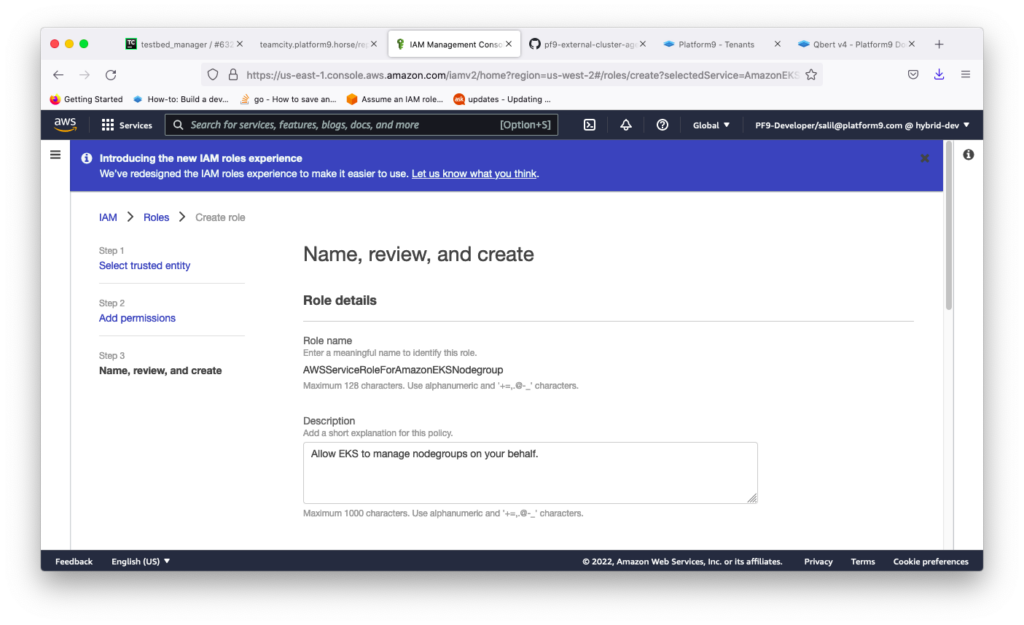

So now you have a cluster, and you rub your hands at the prospect of finally starting on your task. Here comes the next rock: creating a node group. Great, another feather in your cap for needing something not related to your task. Now you need to create a node role for this to *erm* work. For that you need a new policy.

When you try to create an AWS EKSNodeGroup policy for yourself, you’ll be greeted with this wonderful screen:

So now my cluster is ready, and I want to use kubectl to deploy on it. Before that, I have to download the awscli and set its configuration. Here I enter a different access key from the one I used to create the cluster, and all hell breaks loose. It will not allow you to access your own cluster because you used a different key. Silly me didn’t open a bank locker and safely store this precious gold key that was created in under a second.
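For anyone about to fall into the same hole, here is a minimal sketch of the sanity check I wish I had run first. It assumes the AWS CLI is installed and configured, and it uses `aws sts get-caller-identity` to print who the CLI thinks you are. The `creator_arn` value is something you have to supply yourself (I am assuming you know which user or role created the cluster), and the helper names and the ARN in the usage note are hypothetical.

```python
import json
import subprocess


def current_caller_arn(profile=None):
    """Ask the AWS CLI which identity it is currently using.

    Assumes the `aws` binary is on PATH and has credentials configured.
    """
    cmd = ["aws", "sts", "get-caller-identity", "--output", "json"]
    if profile:
        cmd += ["--profile", profile]
    result = subprocess.run(cmd, check=True, capture_output=True, text=True)
    return json.loads(result.stdout)["Arn"]


def is_cluster_creator(creator_arn, caller_arn):
    """Naive check: does the active identity match the cluster creator?

    This is a plain string comparison, so an assumed-role session ARN will
    not match the underlying role ARN.
    """
    return creator_arn.strip() == caller_arn.strip()
```

Calling `is_cluster_creator("arn:aws:iam::111122223333:user/boba", current_caller_arn())` before touching kubectl would at least tell you up front that you are holding the wrong key. The account ID and user name there are placeholders, not real values.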

The merry developers at AWS have released awscliv2, but they are so proud of their invention that the user doesn’t know it exists. Instead, you try the older CLI, stumble into errors and only later find this divine prophecy called awscliv2, with which you can create/update your cluster kubeconfig properly. Wow, thanks for this feature. I’m eternally grateful that you decided to create this, but would you mind removing the older version or back-porting the change?
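The kubeconfig step itself boils down to one command, `aws eks update-kubeconfig`. Below is a small sketch that only builds that command so you can eyeball it before running it. The cluster name, region and profile are placeholders I made up, and the helper name is hypothetical.

```python
import subprocess


def update_kubeconfig_command(cluster_name, region, profile=None):
    """Build the `aws eks update-kubeconfig` call as an argv list."""
    cmd = [
        "aws", "eks", "update-kubeconfig",
        "--name", cluster_name,
        "--region", region,
    ]
    if profile:
        # Use the same profile (and therefore the same key) that created the cluster.
        cmd += ["--profile", profile]
    return cmd


def update_kubeconfig(cluster_name, region, profile=None):
    """Run the command; raises CalledProcessError if the CLI reports a failure."""
    subprocess.run(update_kubeconfig_command(cluster_name, region, profile), check=True)
```

For example, `update_kubeconfig_command("my-test-cluster", "us-east-1")` returns the list for `aws eks update-kubeconfig --name my-test-cluster --region us-east-1`, which you can then hand to `update_kubeconfig` once you trust it.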

Speaking of hiding things, during this ‘Dora The Explorer’ adventure I also found out that there’s a tool called eksctl which deploys a cluster for you using your AWS credentials. For all intents and purposes, it works. Mind mentioning that on the AWS EKS page? Hello, it’s your own tool, right?
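For the curious, this is roughly what the eksctl route looks like, wrapped in Python so the pieces are easy to see. Treat it as a sketch under assumptions: eksctl must be installed, the cluster name, region and instance type are placeholders I picked, and the helper names are hypothetical. I only use the basic `--name`, `--region`, `--nodes` and `--node-type` flags here.

```python
def eksctl_create_command(name, region, nodes=1, node_type="t3.medium"):
    """Build an `eksctl create cluster` call for a small throwaway cluster."""
    return [
        "eksctl", "create", "cluster",
        "--name", name,
        "--region", region,
        "--nodes", str(nodes),
        "--node-type", node_type,
    ]


def eksctl_delete_command(name, region):
    """Build the matching `eksctl delete cluster` call for cleanup."""
    return ["eksctl", "delete", "cluster", "--name", name, "--region", region]
```

Passing either list to `subprocess.run(..., check=True)` runs it. Keeping the delete command next to the create command is deliberate: a test cluster you forget to tear down keeps billing you.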

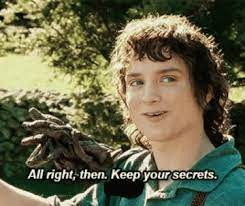

Sorry for the pun, but I’m a LOTR fan who is currently reading the books and has watched the movies 20 times instead of studying. But not bad, huh? I’m writing this blog here and you’re reading it.

So now I have to delete the cluster, redeploy it again (*pfft* very easy) and ensure that I have the same access key and secret stored in my AWS config with armed guards. All of this, hilariously, is because I simply want to check whether my ninety-line YAML works correctly. (I might have put tabs instead of spaces. Don’t judge me, the linters are unhelpful.)
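Since YAML does not allow tabs for indentation, the tabs-versus-spaces part at least can be checked without a cluster at all. Here is a tiny sketch that reports which lines start with a tab somewhere in their indentation. The function name and the sample manifest are made up for illustration.

```python
def find_tab_indented_lines(text):
    """Return 1-based line numbers whose leading whitespace contains a tab."""
    bad_lines = []
    for number, line in enumerate(text.splitlines(), start=1):
        # Leading whitespace is whatever lstrip would remove from the front.
        indent = line[: len(line) - len(line.lstrip(" \t"))]
        if "\t" in indent:
            bad_lines.append(number)
    return bad_lines


sample = "kind: Deployment\nspec:\n\treplicas: 1\n"
print(find_tab_indented_lines(sample))  # prints [3]
```

It will not tell you whether the manifest is valid, only where the tabs are hiding, which would have saved me at least one redeploy.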

After you sort all of this out, if you switch your cluster API access from public to private, you’ll sometimes be unable to access your cluster again, even after allowing inbound SSH access. I understand it needs to be protected, but my test cluster with spot instances is not Ali Baba’s cave full of treasure. If someone wants to do that, here’s my PayPal account … wait, that’s illegal!

After all of this, kudos if you got the cluster up. Here’s a cached cookie. But by that time you could have written and published a book, your manager has probably fired you (fingers crossed that didn’t happen) and, of course, you have mostly forgotten what you were working on. Time to get back to my imaginary world where this is simpler.

My name is Boba Fett, and I am still no closer: