Last June, my co-founder Madhura Maskasky announced the beginning of our journey towards delivering an enterprise-class container management product: Platform9 Managed Kubernetes. We started a private beta program involving existing and new customers. Our engineering team dove deep into the guts of all things Kubernetes, the good and the bad. We learned the hard way how the many elements of the Kubernetes stack could break, and how to recognize and fix the issues. We observed how workloads behave on Kubernetes and started measuring and tuning their performance. Most importantly, we listened carefully to our customers, which helped us shape and prioritize the feature set. For example, we learned that many customers had mixed or hybrid use cases that involve deploying Kubernetes to both on-premises hardware and the cloud, and that require a unified platform to manage the clusters and let them interoperate.

Today, I am happy to announce the general availability of Platform9 Managed Kubernetes (PMK)! With this post, I’d like to discuss the original motivation for our journey, the problems that we felt were worth solving, and how we built PMK to help solve these problems.

Why Containers?

It is clear that containers rely on technologies that have existed in Linux for many years, so why did the Docker tool bring such a mini-revolution to the IT, DevOps and application development industries? I think that Docker’s success started with the revolutionary way it allows a developer to package an application with all of its dependencies into a lightweight image that can be deployed almost anywhere. Next, operations teams started taking advantage of the portability, deployment speed, and versioning capabilities of those images, and modified their processes to optimize for containers. Then, application architects and forward-thinking CIOs realized that containers are the perfect building blocks for re-architecting applications with modern design patterns that take advantage of cloud-like APIs and infrastructures.

Those patterns typically call for breaking monolithic applications into smaller single purpose components which can then be independently scaled and made highly available. As more and more applications became architected this way, a new issue arose: how to automate the deployment and lifecycle management of all of these small components? This led to the need for container orchestration frameworks.

Why Kubernetes?

Kubernetes is the leading container orchestration framework because it was originally built by people with decades of experience managing containerized applications at a very large scale. A quick glance at the most important constructs in Kubernetes makes it clear that it provides the right building blocks to simplify deployment, discoverability, scale, high availability, and serviceability of containerized applications:

- Pods enable multiple tightly coupled containers to work together, while still allowing their code to be maintained independently

- Deployments provide a declarative construct for specifying horizontally scaling service tiers built out of pods

- Services direct network traffic to groups of pods in a flexible, load-balanced way. They also form the basis for dynamic service discovery.

- Labels are ubiquitous and allow relationships between resources to be created and modified dynamically.

These capabilities, combined with the unmatched open source community backing the project, made Kubernetes a no-brainer choice as the base technology for PMK.
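To make the role of labels a little more concrete, here is a minimal sketch of how a Service-style selector picks out a group of pods. It is a plain Python illustration of equality-based label matching (every key/value pair in the selector must be present on the pod), not code from Kubernetes or PMK. The pod names, label keys, and the `matches` and `select_pods` helpers are assumptions made up for this example.

```python
# Illustrative only: equality-based label selection, modeled with plain dicts.
# Pod names, labels, and helper names are hypothetical.

def matches(selector, labels):
    """Return True if every key/value pair in the selector appears in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(selector, pods):
    """Return the names of the pods whose labels satisfy the selector."""
    return [pod["name"] for pod in pods if matches(selector, pod["labels"])]


PODS = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "db", "tier": "backend"}},
]

# A service that targets the web tier would route traffic to these pods.
print(select_pods({"app": "web"}, PODS))       # ['web-1', 'web-2']
print(select_pods({"tier": "backend"}, PODS))  # ['db-1']
```

Because the relationship is expressed through labels rather than fixed pod names, relabeling a pod or adding a new one with the same labels changes the group without touching the selector.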

Kubernetes Challenges

Kubernetes is undoubtedly powerful, but customer conversations and our own experience have also revealed a number of challenges that can hinder its adoption.

- Properly configuring Kubernetes is difficult and complex

A Kubernetes deployment is a large, complex aggregation of dozens (if not hundreds) of services, components, optional add-ons, and plugins, which can be configured in innumerable ways. The distributed (broad) and layered (deep stack) nature of Kubernetes makes it susceptible to misconfiguration and suboptimal performance. It also makes it fragile, in the sense that an unexpected change to any component (such as a version upgrade of the underlying container engine, e.g. Docker) can break the entire stack.

- It isn’t easy to manage clusters across different clouds / data-centers

Many customers wish to build not just one Kubernetes cluster on a single infrastructure platform, but rather multiple clusters on different platforms (e.g., multi-cloud or hybrid on-premises + cloud) that can be aggregated and managed in a unified way to enable use cases such as high availability and dynamic capacity expansion. No single tool currently exists that can provide this capability.

- Ongoing monitoring and troubleshooting is challenging

The majority of existing Kubernetes “management” tools focus on initial deployment. We learned that the greatest challenges come afterwards. Due to the complex and distributed nature of Kubernetes, we’ve found through months of testing that things can and do break. As mentioned earlier, a minor change to a sub-component can have grave consequences for the overall stack. Network glitches or low-disk scenarios can also cause failures. The worst part is that the failure symptoms are often far from the root cause, and a time-consuming troubleshooting process is typically required to identify the true origin of a failure.

- Implementing high availability well is complex

Designing Kubernetes to be highly available across node and zone failures is a bit of an art. There are online guides that users can read, and even some tools that attempt to provide HA configurations. However, we’ve learned that cluster HA goes beyond initial configuration: it’s an ongoing critical task. For example, it is not too difficult to configure an etcd cluster (the database used by Kubernetes to persist state) to survive the loss of a single node. However, after this incident, the etcd cluster is operating at reduced capacity and may not survive another loss; bringing it back to its original level of resilience takes additional work.

- Keeping Kubernetes up-to-date is easier said than done

Kubernetes, the underlying container engine, and many dependent open source components evolve at a ferocious rate, with multiple releases per year. Bug fixes are usually made in new releases, which can themselves introduce new instabilities due to new features. Keeping Kubernetes up-to-date while maintaining up-time for critical applications is a daunting challenge. Few existing Kubernetes management tools provide robust cluster upgrades. Those that do tend to focus on upgrading the Kubernetes control plane, which is relatively easy. Upgrading the data plane, where kubelet, Docker, storage/network plugins and, most importantly, customer workloads run, and doing it with no application downtime, remains an unsolved problem.

Platform9 Managed Kubernetes

Looking long and hard at those challenges, we concluded that a managed approach to Kubernetes is the best way to overcome them. We built Platform9 Managed Kubernetes to hide as much of the complexity of operating a Kubernetes cloud as possible, while maintaining the agility and application high availability customers expect from an enterprise-grade container orchestration platform.

Incredibly easy to use

PMK allows you to deploy multiple stable, highly available Kubernetes clusters on the hardware platform and operating system of your choice. You can have, for example, several clusters running on Linux servers in your private data center, combined with some in AWS, and manage everything with a common user database and single pane of glass UI.

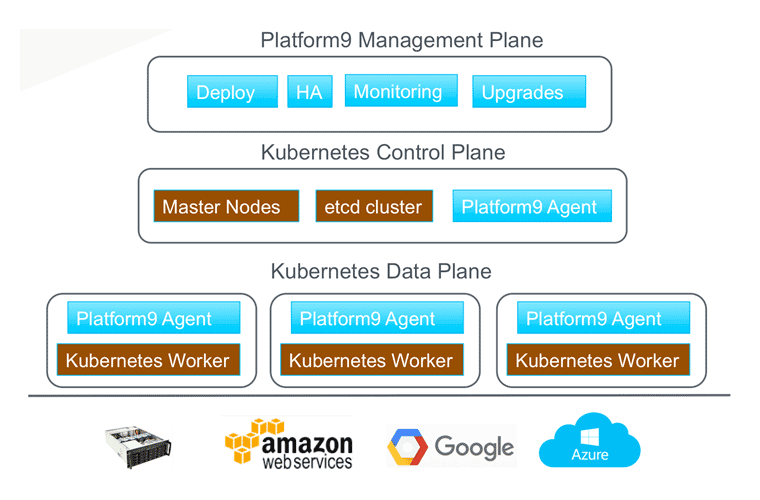

The following diagram shows the general architecture of PMK. Each Kubernetes deployment comprises a control and data plane running on the chosen virtual or physical hardware. Each layer contains a Platform9 agent responsible for all lifecycle management tasks and also health monitoring. The agents are controlled by the Management Plane, which holds all of the intelligence needed to monitor and manage Kubernetes components. Since the management plane runs entirely as a SaaS application, signing up for an account and deploying your first cluster is quick and easy.

Curated, tested, rock-solid

An entire Kubernetes stack deployed by PMK is a strongly versioned set of carefully curated components that are thoroughly tested together. We ensure that the container engine, Kubernetes services, etcd database, add-ons and plugins are all compatible with each other and perform optimally on the supported operating systems before declaring a stack release-ready. This single-stack approach carries over to how we approach upgrades, as described below.

Highly available

Two notable features ensure the high availability of your workloads: (1) wherever possible, PMK spreads the Kubernetes control plane across availability zones to ensure that your clusters will continue to operate after the unplanned loss of either a single node or an entire zone; (2) a set of PMK monitoring probes and scripts restores the etcd cluster to full capacity after the loss of a member.
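The reason restoring etcd to full capacity matters comes down to simple majority arithmetic. The sketch below is not PMK code; it only illustrates the general rule that an etcd cluster needs a majority of its configured members to be healthy. The function names are made up for this example.

```python
# Illustrative only: majority (quorum) arithmetic for an etcd-style cluster.
# Function names are hypothetical.

def quorum(cluster_size):
    """Smallest number of healthy members that still forms a majority."""
    return cluster_size // 2 + 1


def failures_tolerated(cluster_size):
    """Members a fully healthy cluster can lose while keeping quorum."""
    return cluster_size - quorum(cluster_size)


def remaining_tolerance(cluster_size, healthy_members):
    """Further losses a degraded cluster can absorb before losing quorum."""
    return max(healthy_members - quorum(cluster_size), 0)


print(quorum(3))                   # 2
print(failures_tolerated(3))       # 1
print(remaining_tolerance(3, 2))   # 0: one more loss breaks quorum
print(remaining_tolerance(3, 3))   # 1: back to full resilience
```

A three-member cluster that has lost one member keeps running, but it has no margin left until the lost member is replaced.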

The same care is taken with planned upgrades. A bug fix or feature enhancement in the Docker engine, kubelet (the per-node agent of Kubernetes), or a network/storage plug-in will typically require an upgrade to the Kubernetes data plane where workloads (pods) run. In general, the customer has control over the maintenance windows in which upgrades are scheduled. During an upgrade, to eliminate or minimize downtime, PMK orchestrates a rolling update of worker nodes. Nodes are taken down for maintenance one at a time, and each time PMK communicates with the Kubernetes API server to shut down affected pods in an orderly way, enabling Kubernetes to immediately reschedule them on other nodes. Applications that take advantage of multiple pods for availability will suffer no downtime as a result.
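The effect of a one-node-at-a-time upgrade can be illustrated with a toy simulation. This is a sketch under stated assumptions, not PMK's actual orchestration logic: it assumes pods are evicted one at a time, that each evicted pod is rescheduled on the least-loaded other node, and that an upgraded node returns to service before the next one is drained. The function name, node names, and pod names are hypothetical.

```python
# Illustrative only: a toy model of a rolling node upgrade.
# Assumes one-pod-at-a-time eviction and least-loaded rescheduling.
from collections import Counter


def simulate_rolling_upgrade(nodes, pods):
    """Drain nodes one at a time; pods maps pod name -> (app, node).

    Returns the lowest number of running pods seen for each app.
    """
    placement = dict(pods)
    lowest = Counter(app for app, _ in placement.values())
    for node in nodes:
        others = [n for n in nodes if n != node]
        if not others:
            raise ValueError("need at least two nodes to reschedule pods")
        for name in [p for p, (_, n) in placement.items() if n == node]:
            app = placement[name][0]
            # While this pod is down, count the app's other running pods.
            running = sum(
                1 for p, (a, _) in placement.items() if a == app and p != name
            )
            lowest[app] = min(lowest[app], running)
            # Reschedule the evicted pod on the least-loaded other node.
            load = Counter(n for _, n in placement.values())
            target = min(others, key=lambda n: load[n])
            placement[name] = (app, target)
    return dict(lowest)


NODES = ["node-1", "node-2", "node-3"]
PODS = {
    "web-1": ("web", "node-1"),
    "web-2": ("web", "node-2"),
    "db-1": ("db", "node-3"),
}

print(simulate_rolling_upgrade(NODES, PODS))  # {'web': 1, 'db': 0}
```

In this model the two-replica `web` application always has at least one pod running, while the single-replica `db` application briefly drops to zero, which is why running multiple pods per application matters for upgrade availability.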

Monitoring and support: you’re in good hands

Last but not least, we designed PMK with the expectation that errors can and will happen at some point throughout the stack. The Platform9 agents that run on all Kubernetes nodes (worker and master) continually send health information to Whistle, a central monitoring and alerting system we’ve been building and perfecting over several years. Whistle aggregates data and runs a growing number of algorithms designed to detect, and in many cases predict, failures in real time. Issues that can be corrected automatically are resolved immediately, while others trigger notifications to the 24/7 Platform9 support team. An issue that needs customer cooperation is handled through our ticketing system.

Conclusion

Platform9 Managed Kubernetes aims to eliminate complexity from operating Kubernetes clusters, so that you can focus on architecting and running great applications that matter to your business. We are truly excited to make this product generally available. To learn more, we highly recommend that you:

- Read the eBook comparing Kubernetes with other container orchestration frameworks

- Learn about Fission: a new, serverless framework that allows you to consume Kubernetes by simply provisioning REST functions written in your favorite programming language