One of the more interesting developments that we are seeing within our customer base and among the users we speak with regularly is the growing desire to understand OpenStack hypervisor support and leverage OpenStack as a “manager of managers” for different types of technologies such as server virtualization and containers. This lines up with the messaging that the OpenStack Foundation has been putting forth regarding the emergence of OpenStack as an Integration Engine. At Platform9, we see the potential for leveraging OpenStack to not only integrate new technologies but to integrate legacy with next-generation, brownfield with greenfield, hypervisors with containers, etc.

In particular, we see growing interest in using OpenStack to automate the management of new and existing VMware vSphere infrastructures. In some cases, users are looking to quickly “upscale” their current vSphere environment to an elastic, self-service infrastructure; in other cases, users are looking for a tool to help bridge their legacy infrastructures with their new cloud-native infrastructures. In many cases, Platform9 customers choose us because they want to accomplish these goals without investing heavily in professional services or bearing the burden of managing a complex platform.

In this respect, the choice Platform9 made to use OpenStack as the underlying technology for our Cloud Management-as-a-Service was a no-brainer. VMware and others in the ecosystem have put a great deal of effort into contributing code that makes vSphere a first-class hypervisor option in OpenStack. In addition to gaining the benefits of a solution that has been embraced by the OpenStack ecosystem, Platform9 Managed OpenStack customers also have access to the OpenStack APIs, which are the industry standard for private clouds.

However, there is still confusion about how vSphere integrates with OpenStack, particularly in terms of what is gained and what is lost. This blog post provides a look under the hood at how vSphere integrates with OpenStack compute and the implications for architecting and operating an OpenStack ESXi cloud, both with vSphere as the single hypervisor and in a mixed-mode environment. The integration works exactly the same way regardless of the OpenStack distribution being used to manage vSphere; this is true for Platform9, Red Hat Enterprise Linux OpenStack Platform (RHELOSP), Mirantis OpenStack, and even VMware’s vSphere Integrated OpenStack (VIO). In future posts I will discuss additional capabilities Platform9 has added, which we plan to contribute to the OpenStack project.

Nova Compute

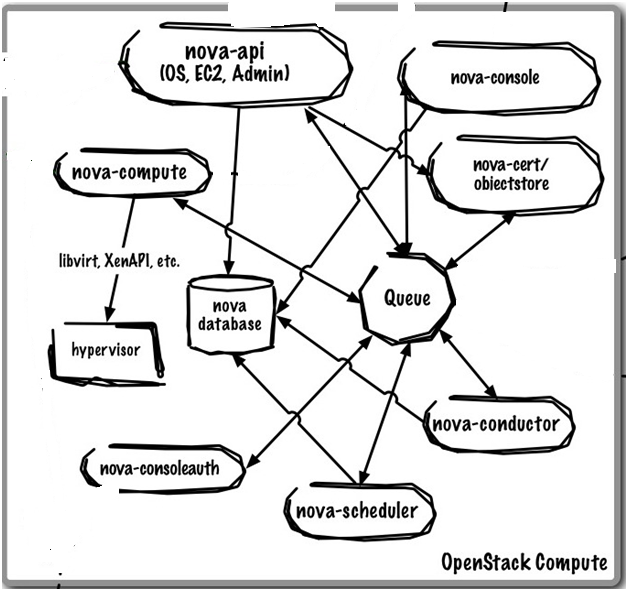

The place to focus is the Nova compute project, since it is the OpenStack component that orchestrates the creation and deletion of compute/VM instances. To better understand how Nova performs this orchestration, you can read the Compute section of the “OpenStack Cloud Administrator Guide.” Like other OpenStack components, Nova has a modular architecture whose services can be co-resident on a single host or, more commonly, distributed across multiple hosts.

You can learn more about the various services and components that make up Nova compute in the Compute Service section of the OpenStack Install Guides. Similar information can be found in the installation guides available for each supported Linux operating system.

Early on, many people mistakenly made direct comparisons between Nova and vSphere, which is inaccurate since Nova actually sits at a layer above the hypervisor. OpenStack in general, and Nova in particular, is most analogous to vCloud Director (vCD) and vRealize Automation, not to ESXi, vCenter, or vSphere. In fact, it is very important to remember that Nova itself does NOT come with a hypervisor; instead, it orchestrates multiple supported hypervisors via APIs and drivers. The list of supported hypervisors includes KVM, vSphere, Xen, and others; a detailed list can be found in the OpenStack Hypervisor Support Matrix.

Nova manages its supported hypervisors through APIs and native management tools. For example, Hyper-V is managed directly by Nova, while KVM and vSphere are managed via the virtualization management tools libvirt and vCenter, respectively.

Integrating VMware vSphere with OpenStack Nova

Prior to the Juno release of OpenStack, VMware supported a VMwareESXDriver, which enabled Nova to communicate directly with an ESXi host via the vSphere SDK without requiring a vCenter Server. This driver had limited functionality; it was deprecated in the Icehouse release and removed completely as of Juno. The limitations of the VMwareESXDriver included the following:

- Since vCenter is not involved, using the VMwareESXDriver meant that advanced capabilities such as vMotion, High Availability, and Distributed Resource Scheduler (DRS) were not available.

- The VMwareESXDriver supported only one ESXi host per Nova compute service, which effectively meant that each ESXi host required its own Linux host running a Nova compute service instance.

The removal of this limited driver is the reason vendors such as Platform9, and even VMware, do not support standalone ESXi hosts (without vCenter) in their OpenStack solutions.

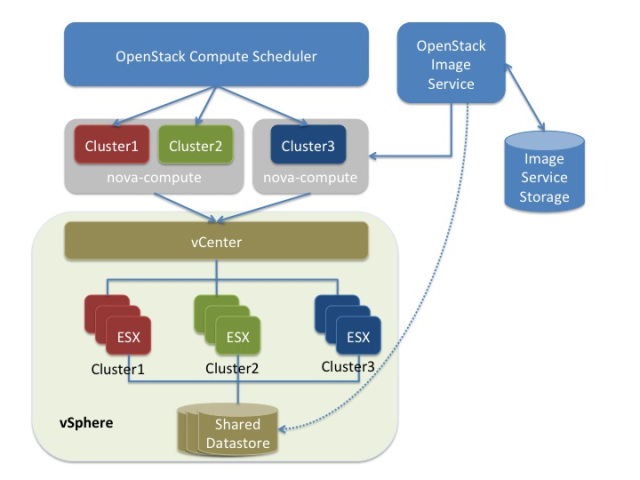

OpenStack Nova compute now manages vSphere 4.1 and higher through a compute driver provided by VMware, the VMwareVCDriver. This driver enables Nova to communicate with a VMware vCenter Server managing one or more clusters of ESXi hosts. The nova-compute service runs on a Linux server (an OpenStack compute node) that acts as a proxy: it uses the VMwareVCDriver to translate Nova API calls into vCenter API calls and relays them to the vCenter Server for processing.

Hence, when a Nova API call is made to create a VM, the nova-scheduler first decides which compute node should host it. If that compute node is the proxy for a vSphere cluster, nova-compute hands the request off to the vCenter Server, which then decides where in the cluster the VM will be created. This requires that vSphere Distributed Resource Scheduler (DRS) be enabled on any cluster that will be used with OpenStack. With vCenter in the picture, advanced capabilities such as vMotion, High Availability, and DRS are now supported.

However, since vCenter abstracts the ESXi hosts from the nova-compute service running on the “proxy” compute node, the nova-scheduler views each cluster as a single compute/hypervisor node whose resources are the aggregate of all ESXi hosts in that cluster. This can cause issues with how VMs are scheduled and distributed across a multi-cluster vSphere or mixed-mode OpenStack environment.

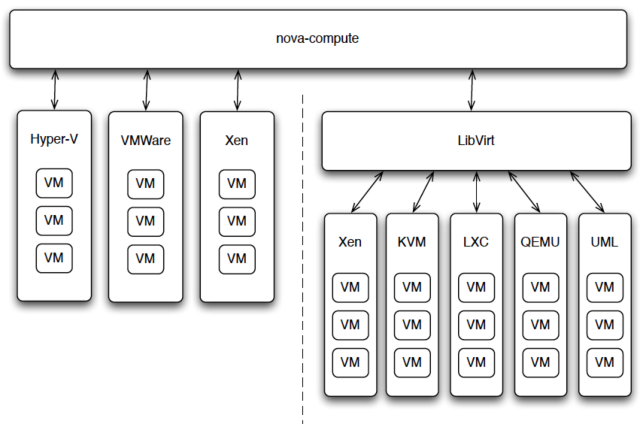

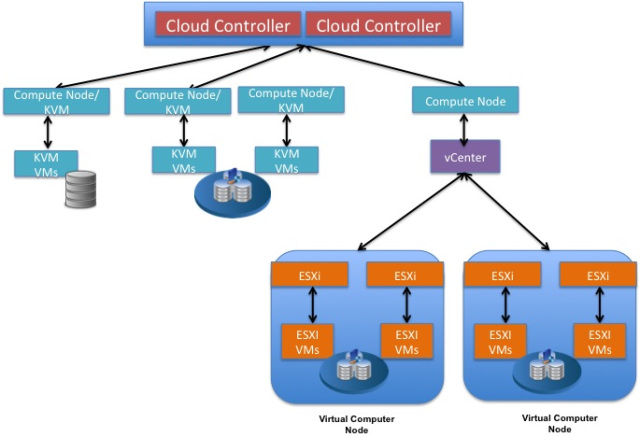

Pulling back a bit, the diagrams above show how vSphere integrates architecturally with OpenStack alongside a KVM environment.

Also, in terms of architecture, one should note the following:

- Unlike Linux kernel-based hypervisors such as KVM, vSphere on OpenStack requires a separate vCenter Server host, and the VM instances hosted in an ESXi cluster run on ESXi hosts distinct from the Nova compute node. In contrast, VM instances running on KVM can be hosted directly on a Nova compute node. This is because the Nova binaries cannot run directly on a vCenter Server. Therefore, a Linux server running Nova compute services must be provisioned for each vCenter instance.

- Although a single OpenStack installation can support multiple hypervisors, each compute node can support only one hypervisor. So any mixed-mode OpenStack cloud requires at least one compute node for each hypervisor type.

- To utilize the advanced vSphere capabilities mentioned above, each cluster must be able to access datastores on shared storage.

For more information on how to configure the VMwareVCDriver, I recommend reading the VMware vSphere section of the OpenStack Configuration Reference guide.
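As a rough illustration of what that guide covers, here is a minimal sketch that renders a nova.conf fragment for the proxy compute node using Python's standard configparser. It assumes the option names as I understand them for the VMware driver (`compute_driver = vmwareapi.VMwareVCDriver` in `[DEFAULT]`, plus `host_ip`, `host_username`, `host_password`, and `cluster_name` in `[vmware]`); verify them against the Configuration Reference for your OpenStack release. The function name and all values passed to it are hypothetical placeholders.

```python
import configparser
import io


def render_nova_conf(vcenter_ip: str, username: str, password: str, cluster: str) -> str:
    """Hypothetical helper: build a minimal nova.conf fragment for a vCenter proxy node."""
    # interpolation=None so passwords containing '%' are written verbatim
    conf = configparser.ConfigParser(interpolation=None)
    # Tell nova-compute to drive vCenter rather than a local hypervisor
    conf["DEFAULT"] = {"compute_driver": "vmwareapi.VMwareVCDriver"}
    # Connection details for the vCenter Server and the DRS-enabled cluster it manages
    conf["vmware"] = {
        "host_ip": vcenter_ip,
        "host_username": username,
        "host_password": password,
        "cluster_name": cluster,
    }
    buf = io.StringIO()
    conf.write(buf)
    return buf.getvalue()


# Placeholder values only
print(render_nova_conf("192.0.2.10", "administrator@vsphere.local", "s3cret%pw", "cluster-1"))
```

In practice you would write this fragment by hand or through your configuration-management tool; the point is that the proxy node only needs to know how to reach vCenter and which cluster to manage.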

vSphere and Resource Scheduling with Nova Compute

At this point, I want to review an important component of Nova compute, resource scheduling (a.k.a. nova-scheduler), and how it interacts with Distributed Resource Scheduler (DRS) in vSphere. If you are not familiar with how scheduling works in Nova compute, I recommend reading the Scheduling section of the Configuration Reference guide and an earlier post on the subject from my personal blog. The rest of this post assumes an understanding of Nova scheduling.

As mentioned earlier, since vCenter abstracts the ESXi hosts from the nova-compute service, the nova-scheduler views each vSphere cluster as a single compute node/hypervisor host with resources amounting to the aggregate resources of all ESXi hosts in a given cluster. This has two effects:

- While the VMwareVCDriver interacts with the nova-scheduler to determine which cluster will host a new VM, the nova-scheduler plays NO part in where VMs are placed within the chosen cluster. Once the nova-scheduler chooses the appropriate vSphere cluster, nova-compute simply makes an API call to vCenter and hands off the request to launch a VM. vCenter then selects which ESXi host in that cluster will host the VM, using DRS and based on Dynamic Entitlements and Resource Allocation settings. Any automatic load balancing or power management performed by DRS is allowed, but Nova compute is not aware of it.

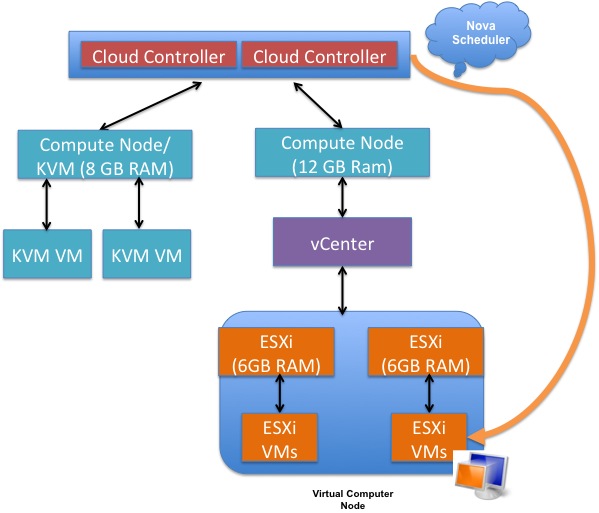

- In the mixed-mode example shown above, the KVM compute node has 8 GB of available RAM, while the nova-scheduler sees the “vSphere compute node” as having 12 GB of RAM. Again, the nova-scheduler does not take into account the actual available RAM on each individual ESXi host; it only considers the aggregate RAM available across all ESXi hosts in a given cluster. Note that the same effect occurs in a vSphere-only environment if you have more than one vSphere cluster.
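The two-level hand-off described above can be sketched as a toy model: Nova filters and picks a "node" using only each cluster's aggregate free RAM, then vCenter picks an ESXi host inside that cluster. This is not Nova's or vCenter's code; the inventory, function names, and the rule used for vCenter (a crude stand-in for DRS that picks the fitting host with the most free RAM) are all hypothetical, and it uses the vSphere-only, two-cluster case mentioned in the note above.

```python
from typing import Dict, Optional, Tuple

# Hypothetical inventory: vSphere cluster ("compute node" to Nova) -> {ESXi host: free RAM in MB}
Inventory = Dict[str, Dict[str, int]]


def nova_select_node(inventory: Inventory, ram_mb: int) -> Optional[str]:
    """Level 1: the nova-scheduler sees only each cluster's aggregate free RAM."""
    totals = {node: sum(hosts.values()) for node, hosts in inventory.items()}
    eligible = {node: free for node, free in totals.items() if free >= ram_mb}
    return max(eligible, key=eligible.get) if eligible else None


def vcenter_select_host(hosts: Dict[str, int], ram_mb: int) -> Optional[str]:
    """Level 2: vCenter picks an ESXi host. Crude stand-in for DRS:
    the host with the most free RAM that can fit the VM."""
    fitting = {host: free for host, free in hosts.items() if free >= ram_mb}
    return max(fitting, key=fitting.get) if fitting else None


def launch(inventory: Inventory, ram_mb: int) -> Tuple[Optional[str], Optional[str]]:
    node = nova_select_node(inventory, ram_mb)
    if node is None:
        return None, None  # no cluster passes Nova's aggregate check
    # Nova hands off to vCenter; it records `node`, never the ESXi host.
    return node, vcenter_select_host(inventory[node], ram_mb)


inventory = {
    "vsphere-cluster-1": {"esxi-a": 6144, "esxi-b": 6144},  # Nova sees 12 GB
    "vsphere-cluster-2": {"esxi-c": 8192},                  # Nova sees 8 GB
}
print(launch(inventory, 4096))  # ('vsphere-cluster-1', 'esxi-a')
print(launch(inventory, 7168))  # ('vsphere-cluster-1', None)
```

The second call shows the blind spot: Nova prefers cluster-1 because its aggregate looks larger, yet no single host there can fit a 7 GB VM, while esxi-c in cluster-2 could have.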

As I also mentioned earlier, the latter effect can cause issues with how VMs are scheduled and distributed across a mixed-mode OpenStack environment. Let’s look at two use cases where the nova-scheduler may be impeded when it attempts to schedule resources; we’ll focus on RAM, using the environment shown above, and assume the default RAM allocation ratio of 1.5 (that is, 50% overcommitment of physical RAM).

- A user requests a VM with 10 GB of RAM. The nova-scheduler applies the Filtering algorithm and adds the vSphere compute node to the eligible host list, even though neither ESXi host in the cluster has sufficient RAM, as defined by the RamFilter. If the vSphere compute node is chosen to launch the new instance, vCenter will then determine that there aren’t enough resources and will respond to the request based on DRS-defined rules. In some cases, that means the VM create request will fail.

- A user requests a VM with 4 GB of RAM. The nova-scheduler applies the Filtering algorithm and correctly adds all three compute nodes to the eligible host list. The scheduler then applies the Cost and Weights algorithm and favors the vSphere compute node, which it believes has more available RAM. This creates an unbalanced system in which the hypervisor/compute node with the lower amount of available RAM may be incorrectly assigned the lower cost and favored for launching new VMs. Note that the same effect occurs in a vSphere-only environment if you have more than one vSphere cluster.
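Both use cases can be reproduced with a small model of the filter and weigh steps, simplified to one KVM node and one vSphere node. The filter arithmetic is modeled on how I understand Nova's RamFilter to apply `ram_allocation_ratio` (limit = total × ratio, minus RAM already used), and the weigh step mimics a RAM weigher with a positive multiplier (prefer the most free RAM); weight normalization is skipped because it does not change the ranking when only one weigher is used. `HostState`, the function names, and the split of the 12 GB cluster into two 6 GB ESXi hosts are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List

RAM_ALLOCATION_RATIO = 1.5  # 50% RAM overcommit, as assumed in the text


@dataclass
class HostState:
    name: str
    total_ram_mb: int
    free_ram_mb: int


def ram_filter_passes(host: HostState, requested_mb: int,
                      ratio: float = RAM_ALLOCATION_RATIO) -> bool:
    """Filtering step, modeled on RamFilter-style arithmetic."""
    limit_mb = host.total_ram_mb * ratio            # overcommitted ceiling
    used_mb = host.total_ram_mb - host.free_ram_mb  # RAM already claimed
    return limit_mb - used_mb >= requested_mb


def pick_by_free_ram(hosts: List[HostState]) -> str:
    """Weighing step: prefer the host reporting the most free RAM (spreading)."""
    return max(hosts, key=lambda h: h.free_ram_mb).name


# What the nova-scheduler sees
kvm = HostState("kvm-node", 8192, 8192)
vsphere = HostState("vsphere-cluster", 12288, 12288)  # aggregate of two ESXi hosts (assumed 6 GB each)
# What actually sits behind vCenter
esxi_hosts = [HostState("esxi-a", 6144, 6144), HostState("esxi-b", 6144, 6144)]

# Use case 1: 10 GB request. The vSphere "node" passes, but no real ESXi host does.
print([h.name for h in (kvm, vsphere) if ram_filter_passes(h, 10240)])  # ['kvm-node', 'vsphere-cluster']
print(any(ram_filter_passes(h, 10240) for h in esxi_hosts))             # False

# Use case 2: 4 GB request. Nova favors the cluster, though the KVM node has more RAM than any ESXi host.
eligible = [h for h in (kvm, vsphere) if ram_filter_passes(h, 4096)]
print(pick_by_free_ram(eligible))             # vsphere-cluster
print(pick_by_free_ram([kvm] + esxi_hosts))   # kvm-node
```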

This means that a vSphere with OpenStack deployment must be carefully monitored if used with multiple vSphere clusters or in a mixed-mode environment with multiple hypervisors. It is one of the reasons Platform9 is advising customers to do either with care. We are also looking to implement code that will make resource scheduling more intelligent.

High Availability

As many readers of this post will know, High Availability (HA) is one of the most important features of vSphere, providing resiliency at the compute layer by automatically restarting VMs on a surviving ESXi host when the original host fails. HA is such a critical feature, especially for applications that lack application-level resiliency and instead assume a bulletproof infrastructure, that many enterprises consider it non-negotiable when evaluating a move to another hypervisor such as KVM. So it often comes as a surprise when customers hear that OpenStack cannot natively auto-restart VMs on another compute node when the original node fails.

In lieu of vSphere HA, OpenStack uses a feature called “Instance Evacuation” in the event of a compute node failure (keep in mind that outside of vSphere, a Nova Compute node also functions as the hypervisor and hypervisor management node). Instance Evacuation is a manual process that essentially leverages cold migration to make instances available again on a new compute node. You can read more about Instance Evacuation in the OpenStack Cloud Administrator guide.
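To make the "manual" part concrete, here is a hypothetical model of an operator-driven evacuation (in a real cloud the operator triggers it, for example with the `nova evacuate` command). It captures two behaviors as I understand them: Nova refuses to evacuate from a compute node whose service is still up, and an instance on shared storage keeps its existing disk, while one on local storage is rebuilt from its original image. The `Instance` class, the `evacuate` function, and the host names are invented for illustration; this is not the Nova API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    name: str
    host: str
    on_shared_storage: bool


def evacuate(instances: List[Instance], failed_host: str, target_host: str,
             failed_host_is_down: bool) -> List[str]:
    """Hypothetical model of an operator-run evacuation of one failed compute node."""
    if not failed_host_is_down:
        # Evacuation is only allowed once the source compute service is down
        raise RuntimeError(f"{failed_host} is still up; evacuation refused")
    log = []
    for inst in instances:
        if inst.host != failed_host:
            continue  # instances on healthy nodes are untouched
        inst.host = target_host
        disk = "existing disk reused" if inst.on_shared_storage else "rebuilt from original image"
        log.append(f"{inst.name} -> {target_host} ({disk})")
    return log


instances = [
    Instance("web-1", "kvm-1", True),
    Instance("db-1", "kvm-1", False),
    Instance("app-1", "kvm-2", True),
]
for line in evacuate(instances, "kvm-1", "kvm-3", failed_host_is_down=True):
    print(line)
```

Nothing in this flow happens until someone notices the failure and runs it, which is the key difference from vSphere HA.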

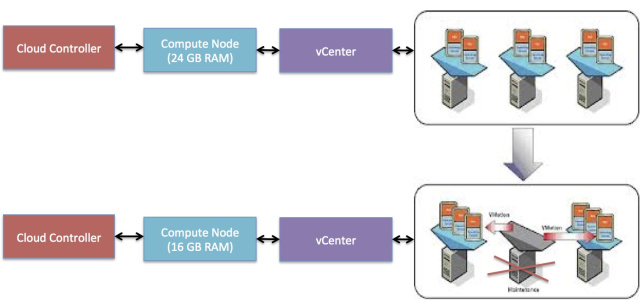

As mentioned earlier, vCenter essentially proxies the ESXi cluster under its management and abstracts all the member ESXi hosts from the Nova compute node. When an ESXi host fails, vSphere HA kicks in and restarts the VMs that were running on the failed server on the surviving servers, and DRS takes care of balancing those VMs appropriately across the survivors. Nova compute is unaware that an ESXi host has failed or that any VMs have moved within the cluster; vCenter, however, reports the reduced available resources in the cluster, and Nova takes that into account in its scheduling the next time a request to launch an instance is made.
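The following toy model (not vSphere's actual HA or DRS logic) illustrates that division of labor: when an ESXi host fails, its VMs are restarted on the surviving host with the most free RAM, and the only thing Nova observes afterwards is the cluster's smaller aggregate free RAM. Host names, sizes, and the placement rule are assumptions.

```python
from typing import Dict, Tuple

Hosts = Dict[str, int]            # ESXi host -> total RAM (MB)
Vms = Dict[str, Tuple[str, int]]  # VM name -> (ESXi host, RAM in MB)


def free_ram(hosts: Hosts, vms: Vms) -> Dict[str, int]:
    """Free RAM per host, given which VMs are running where."""
    free = dict(hosts)
    for host, ram in vms.values():
        if host in free:
            free[host] -= ram
    return free


def ha_failover(hosts: Hosts, vms: Vms, failed: str) -> Tuple[Hosts, Vms]:
    """Toy HA: restart the failed host's VMs on the survivor with the most free RAM."""
    survivors = {h: total for h, total in hosts.items() if h != failed}
    running = {vm: (h, ram) for vm, (h, ram) in vms.items() if h != failed}
    for vm, (host, ram) in vms.items():
        if host != failed:
            continue
        free = free_ram(survivors, running)
        if not free:
            break  # no surviving hosts at all
        target = max(free, key=free.get)
        if free[target] >= ram:
            running[vm] = (target, ram)
        # Otherwise the VM stays down; real HA admission control reserves capacity to avoid this.
    return survivors, running


def nova_visible_free_ram(hosts: Hosts, vms: Vms) -> int:
    """All Nova learns from vCenter: the cluster's aggregate free RAM."""
    return sum(free_ram(hosts, vms).values())


hosts = {"esxi-a": 16384, "esxi-b": 16384}
vms = {"vm1": ("esxi-a", 4096), "vm2": ("esxi-b", 4096)}
print(nova_visible_free_ram(hosts, vms))                # 24576
hosts_after, vms_after = ha_failover(hosts, vms, "esxi-a")
print(vms_after["vm1"])                                 # ('esxi-b', 4096)
print(nova_visible_free_ram(hosts_after, vms_after))    # 8192
```

Nova never learns that vm1 moved; it simply sees 8 GB free instead of 24 GB the next time it schedules.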

VM Migration

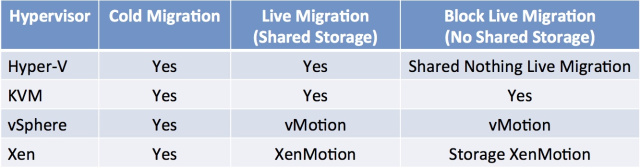

Another key feature of vSphere is vMotion, or what is generically called VM migration. Nova supports VM migration by leveraging the native migration features of the individual hypervisors under its management. The table above outlines which migration features OpenStack supports with each hypervisor.

One big difference between vSphere and the other hypervisors in the table is that cold migration and vMotion cannot be initiated through Nova; they must be initiated via the vSphere Client or vSphere CLI. You can read more about VM migration in the Cloud Administrator Guide.

As you can see, integrating OpenStack with ESXi is not a trivial pursuit, but it is one that VMware and other ecosystem partners have done a great job of making successful. At Platform9, we firmly believe that OpenStack support for hypervisors like vSphere is critical to customers’ success as they move from legacy to cloud-native infrastructure. We also believe there is more that could and should be done to make this integration better for customers; some of those improvements were mentioned in this post, and more in a previous post. We look forward to contributing ideas and code as we help our customers “upscale” their VMware infrastructure to the cloud.