In business, stagnation is harmful to the health of a company. Without change, a successful company slowly loses relevance while more daring competitors evolve to provide additional value to their customers. In software development, this is especially true.

Development organizations that don’t prioritize innovation soon find that their applications and services have grown technologically stale and are losing viability in the marketplace. That said, innovation in software development comes at a cost: risk to application stability.

Below, I will discuss how innovation and stability are competing priorities in software development. In addition, I will detail the role that modern deployment strategies play in reducing the risk that comes with frequent application changes – especially for those organizations leveraging containers and Kubernetes.

Availability vs. Innovation

It goes without saying (but I’ll say it anyway) that an application or service must be available in order to provide any value at all to the customer. This means the application must be up and running, and end users must be able to use its functionality without experiencing failures or significant latency. This kind of reliable, consistent performance is what keeps users coming back to the product, and it is the foundation for any SLAs offered to paying customers.

The fear of alienating end users or violating SLAs can, in some instances, produce a mindset that is resistant to change. The reasoning goes like this: if problematic changes are released into production, the application becomes unstable and less reliable. Those changes then cause downtime in the form of a rollback or remediation, and that downtime leads to frustrated end users and SLA violations, both of which can damage the brand’s reputation and the business’s bottom line.

We can’t change the fact that code changes threaten stability. We also can’t change the fact that innovation is necessary. So what can we do to help decrease the risk to system reliability posed by delivering frequent changes to an application?

One aspect to consider is the deployment strategy used to deliver these changes. For organizations that leverage Kubernetes to deploy and manage their container-based applications, there are several effective strategies to choose from.

Kubernetes Deployment Strategies for Minimizing Risk and Downtime

When analyzing a deployment strategy, there are two key considerations: 1) how long it would take to roll back the release if the new version proves unstable, and 2) how much downtime, if any, end users will experience during the deployment itself.

Consider the following deployment strategies, which Kubernetes makes simpler to implement than ever, and how each one helps minimize the risk to application reliability as well as downtime during deployment.

Blue-green deployments

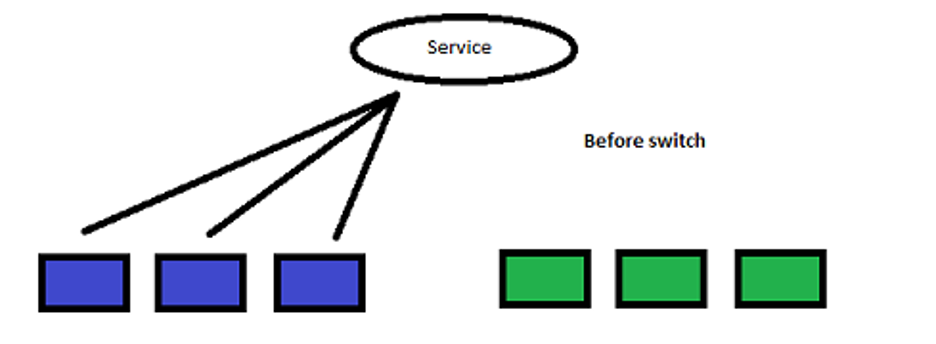

A blue-green deployment, a strategy worth considering for organizations leveraging Kubernetes, is one in which two versions of the application run side by side. The blue version represents the old (currently live) release, and the green version represents the new one.

Initially, all traffic is routed to the blue (old) version. After the parallel deployment of the green (new) version of the application, QA personnel can verify that the deployment has been successful and that the app is in working order.

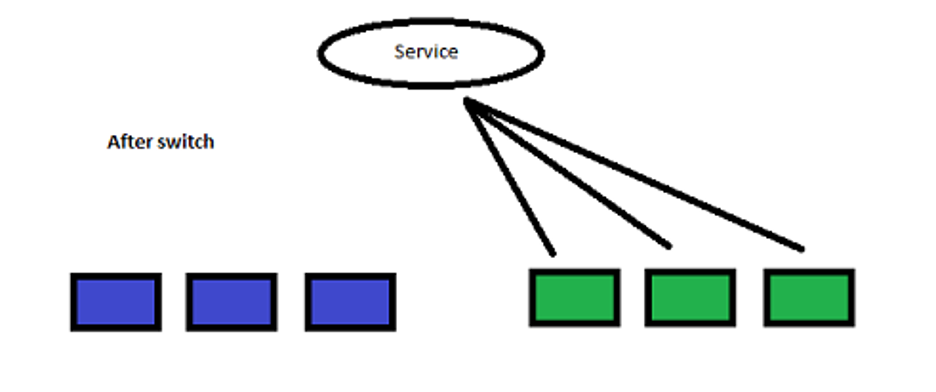

After this verification, the service is switched to the green version (in Kubernetes, typically by updating the label selector on the Service object), allowing end users to reach the pods serving the new release. Finally, once the organization is comfortable with the green version, the pods serving the blue version of the application are taken down completely.

This strategy addresses both of the considerations discussed above. First, end users experience zero downtime during the deployment itself: with instances of both the old and new versions running in parallel, users can be moved seamlessly from blue (old) to green (new). Second, if the new release proves unstable, rolling back is as simple as switching the service to send traffic back to the pods serving the previous, stable version of the application.
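The cut-over and the rollback are the same operation pointed in opposite directions. The sketch below models that in plain Python rather than against a live cluster, assuming hypothetical pod labels `app: myapp` and `version: blue|green`. It builds a merge-patch body that points the service's selector at one color and shows which pods the selector matches before and after. With the official `kubernetes` Python client, a body of this shape could be passed to `CoreV1Api.patch_namespaced_service`, but treat that wiring as an assumption to verify against your own setup.

```python
from typing import Dict, List

# Hypothetical pod inventory: each entry is a pod name plus its labels.
PODS: List[Dict[str, str]] = [
    {"name": "myapp-blue-1", "app": "myapp", "version": "blue"},
    {"name": "myapp-blue-2", "app": "myapp", "version": "blue"},
    {"name": "myapp-green-1", "app": "myapp", "version": "green"},
    {"name": "myapp-green-2", "app": "myapp", "version": "green"},
]


def selector_patch(app: str, color: str) -> Dict:
    """Merge-patch body that points a service at the pods of one color."""
    return {"spec": {"selector": {"app": app, "version": color}}}


def matching_pods(selector: Dict[str, str], pods: List[Dict[str, str]]) -> List[str]:
    """Names of pods whose labels contain every key/value in the selector,
    which is how a Kubernetes Service selector picks its endpoints."""
    return [
        pod["name"]
        for pod in pods
        if all(pod.get(key) == value for key, value in selector.items())
    ]


# The service starts out routing to blue (the old release).
service = {"spec": {"selector": {"app": "myapp", "version": "blue"}}}

# Cut over: merge the green selector into the service spec.
service["spec"]["selector"].update(selector_patch("myapp", "green")["spec"]["selector"])
print(matching_pods(service["spec"]["selector"], PODS))

# Rollback: the same operation, pointed back at blue.
service["spec"]["selector"].update(selector_patch("myapp", "blue")["spec"]["selector"])
print(matching_pods(service["spec"]["selector"], PODS))
```

Because the blue pods are still running after the cut-over, the rollback touches only the selector and does not have to wait for any pods to start.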

Canary deployments

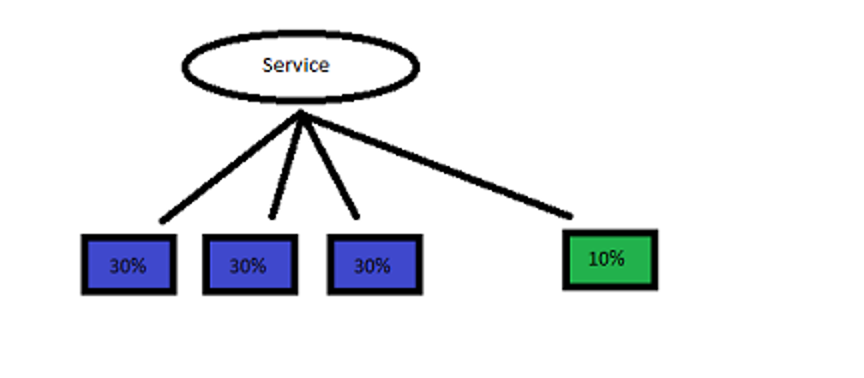

Another popular deployment strategy to consider with Kubernetes is the canary deployment. As with the blue-green strategy described above, pods serving the old version of the application remain up and running while pods serving the new version (the canaries) are deployed alongside them. Unlike blue-green, however, traffic is not switched all at once: at first, only a small subset of the users accessing the app is routed to the new version.

If the users in that subset encounter no issues, that is evidence the new version is stable. More pods serving the new version are then deployed, and the share of traffic sent to them is increased. Eventually, all traffic goes to the new version, and the remaining pods serving the old version are removed from production.
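Without a service mesh or an ingress controller that supports weighted routing, one common way to approximate this progressive shift in plain Kubernetes is to run a stable Deployment and a canary Deployment whose pods both carry the labels the service selects on; traffic is then spread across ready pods, so the canary's share is roughly its fraction of the total pod count. The sketch below assumes a hypothetical pool of 10 replicas and a hypothetical step schedule of 10%, 25%, 50%, and 100%, and computes how many replicas each Deployment would need at each step.

```python
from typing import List, Tuple


def replica_split(total: int, canary_percent: int) -> Tuple[int, int]:
    """Return (stable, canary) replica counts for a target canary share.

    The canary count is rounded up, so any non-zero percentage gets at
    least one canary pod. The resulting traffic share is only approximate:
    roughly canary / total when the service load-balances across pods.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    canary = (total * canary_percent + 99) // 100  # integer ceiling
    return total - canary, canary


def rollout_plan(total: int, steps: List[int]) -> List[Tuple[int, int, int]]:
    """(percent, stable, canary) for each step of a progressive rollout."""
    return [(pct, *replica_split(total, pct)) for pct in steps]


for pct, stable, canary in rollout_plan(10, [10, 25, 50, 100]):
    print(f"{pct:>3}% target -> stable={stable}, canary={canary}, "
          f"approx. share={canary / (stable + canary):.0%}")
```

With only 10 pods, the 25% step becomes 3 canary pods (about 30% of traffic). Splitting by replica count is coarse; finer-grained percentages need either more replicas or a routing layer that supports explicit traffic weights.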

Canary deployments are yet another strategy that allows for zero downtime while also limiting the impact of a problematic release. By initially directing only a small subset of traffic to the canary version, organizations can monitor for performance issues such as increased latency, requests that result in errors, or any other out-of-the-ordinary behavior. If the release proves unstable, the organization finds out quickly, and only a relatively small number of end users will have been exposed, which limits the damage.
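The monitoring step can be reduced to a decision made at each traffic step: keep waiting, roll back, or promote to the next step. The sketch below compares the canary's metrics against the stable version's. The thresholds (error rate no more than 1 percentage point above baseline, p95 latency no more than 20% above baseline, at least 100 requests observed) are hypothetical, and the metrics are assumed to come from whatever monitoring system is already in place; none of these names come from a particular tool.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    requests: int          # requests observed during the analysis window
    errors: int            # requests that resulted in an error (e.g. HTTP 5xx)
    p95_latency_ms: float  # 95th-percentile response time in milliseconds

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_verdict(
    baseline: Metrics,
    canary: Metrics,
    max_error_rate_increase: float = 0.01,  # hypothetical: +1 percentage point
    max_latency_ratio: float = 1.2,         # hypothetical: +20% p95 latency
    min_requests: int = 100,                # hypothetical: minimum sample size
) -> str:
    """Return 'wait', 'rollback', or 'promote' for the current canary step.

    'promote' means the canary looks healthy enough to move to the next
    traffic step; it does not by itself mean the rollout is finished.
    """
    if canary.requests < min_requests:
        return "wait"  # too little canary traffic to judge yet
    if canary.error_rate > baseline.error_rate + max_error_rate_increase:
        return "rollback"
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "rollback"
    return "promote"


stable = Metrics(requests=9000, errors=18, p95_latency_ms=120.0)
print(canary_verdict(stable, Metrics(requests=1000, errors=3, p95_latency_ms=125.0)))
print(canary_verdict(stable, Metrics(requests=1000, errors=40, p95_latency_ms=118.0)))
print(canary_verdict(stable, Metrics(requests=50, errors=0, p95_latency_ms=110.0)))
```

Comparing against the live stable version, rather than against fixed absolute limits, keeps the check meaningful when overall traffic patterns shift during the rollout.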

Encouraging Innovation with K8s

Kubernetes is an orchestration platform for deploying, scaling and managing containerized applications. By streamlining the process for deploying your applications with the strategies mentioned above, K8s enables teams to be forward-thinking and to continue innovating in an effort to bring value to the business.

When organizations have processes in place to limit the impact of problematic releases on end users, their worries about catastrophic bugs surfacing shortly after deployment are lessened, and they can focus more fully on building rich functionality that resonates with their customers.