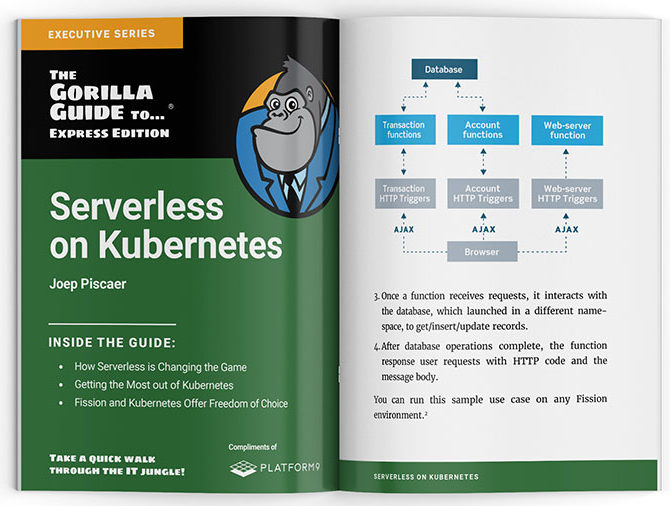

This is an excerpt from The Gorilla Guide to Serverless on Kubernetes, written by Joep Piscaer.

See the first chapter here. You can download the full guide here.

The biggest advantage of serverless computing is the clear separation between the developer and the operational aspects of putting code into production. This simplified model of “who does what” in the layer cake of the infrastructure underneath the code lets the operational folks standardize their layers (e.g., container orchestration and container images), while the developers are free to develop and run code without any hassle.

Because of the small and highly standardized surface area between development and operations, operational folks have greater control over what’s running in production. Thus, they can respond more quickly to updates and upgrades, security patches, or changing requirements.

The use of container technologies like Docker and Kubernetes has increased developer velocity and significantly decreased the complexity of building, deploying, and managing the supporting infrastructure compared to VM-based approaches. But despite these advances, developers still face a lot of friction from required steps that add no direct value to their workflow, like building a container for every new code release or managing auto-scaling, monitoring, and logging. Even with a perfect pipeline, these steps resist change: every new release, software version upgrade, or security patch requires additional work to update them.

Although the default operating model is one in which each group stays within their set boundaries, there are possibilities to cross those lines. A typical example is when a developer needs additional package dependencies, like libraries, in the execution environment. In a container-based microservices approach, this would have been the developer’s problem; in the serverless approach, it’s part of the solution, abstracted away from the developer. The containers that execute the functions are short-lived, automatically created and destroyed by the FaaS platform based on runtime need.

This leads to a shorter pipeline and fewer objects (like containers) that need to be changed with a new code release, making deployments simpler and quicker. Since there’s no need to completely rebuild the underlying containers, as most new code releases are loaded dynamically into existing containers, deployment time is significantly shortened.

In addition, compiling, packaging, and deployment are simple compared to container- and VM-based approaches, which usually force an admin to redeploy the entire container or VM. FaaS requires only that a developer upload a ZIP file of new code, and even that can be automated using source version control systems like Git.
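As a rough illustration of how small that deployable unit is, the sketch below packs a single function file into a ZIP archive in memory. The handler name, its `(event, context)` signature, and the `build_function_zip` helper are assumptions made for this example; real FaaS platforms each define their own entry-point conventions, and the upload step itself (through a platform's CLI or API) is not shown.

```python
import io
import zipfile

# Hypothetical function source. The handler name and signature are
# illustrative only and differ between FaaS platforms.
HANDLER_SOURCE = '''\
def handler(event, context):
    return {"statusCode": 200, "body": "hello"}
'''


def build_function_zip(files):
    """Pack a mapping of archive path -> source text into ZIP bytes."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for path, source in files.items():
            archive.writestr(path, source)
    return buffer.getvalue()


# The resulting bytes are what would be uploaded as a new code release.
package = build_function_zip({"handler.py": HANDLER_SOURCE})
print(f"package size: {len(package)} bytes")
```

Nothing here builds a container image or touches the runtime environment, which is the point the paragraph above makes: the release artifact is just the code.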

There’s no configuration management tooling, rolling restart scripts, or redeployment of containers with the new version of the code. Time isn’t the only thing that’s saved, either. Because of the fine-grained level of execution, FaaS services are metered and billed per millisecond of runtime or per number of requests (i.e., triggers). Idle functions aren’t billed.

In other words, you don’t pay for anything but code execution. Gone are the days of investing in data center space, hardware, and expensive software licenses, or long and complex projects to set up a cloud management or container orchestration platform.

A closer look at costs

The pricing model for serverless function execution follows the same structure across the major cloud providers: a fee per request plus a charge for execution time, which varies with the amount of memory you allocate to your function. This is a pricing overview across Amazon, Microsoft, and Google from October 2018:

- Requests: $0.20 – $0.40 per million requests

- Compute: $0.008 – $0.06 per hour at 1 GiB of RAM

- Data egress: $0.05 – $0.12 per GB

- API Gateway: $3.00 – $3.50 per million requests

These pricing models are a direct motivation to optimize a function for performance: because you pay for execution time, cost correlates directly with performance. Any increase in performance will not only make your customers happy, but also reduce the operational cost. This is a radically different pricing approach from the one we’ve seen with VMs and containers: you pay only for what you actually use. When a function is idle, you’re not paying at all, which removes the need to estimate resource usage beforehand; scaling from zero to peak is done dynamically and flexibly.
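To make the link between performance and cost concrete, here is a minimal sketch that estimates a monthly bill from the request and compute ranges listed above. The `FaasRates` class and `monthly_cost` function are hypothetical names for this example, not any provider's API. The sketch assumes the compute charge scales linearly with allocated memory, and it ignores free tiers, data transfer, and API gateway fees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FaasRates:
    """Illustrative list prices in USD (hypothetical container type)."""
    per_million_requests: float
    per_gib_hour: float  # one hour of execution at 1 GiB of RAM


# Low and high ends of the October 2018 ranges quoted above.
LOW = FaasRates(per_million_requests=0.20, per_gib_hour=0.008)
HIGH = FaasRates(per_million_requests=0.40, per_gib_hour=0.06)


def monthly_cost(rates, requests, avg_duration_ms, memory_gib):
    """Estimate request plus compute cost for one month.

    Assumes compute cost is linear in allocated memory (an assumption
    of this sketch) and that idle time is not billed.
    """
    request_cost = requests / 1_000_000 * rates.per_million_requests
    execution_hours = requests * avg_duration_ms / 1000 / 3600
    compute_cost = execution_hours * memory_gib * rates.per_gib_hour
    return request_cost + compute_cost


# Example workload: 10 million requests, 200 ms each, 0.5 GiB allocated.
for label, rates in (("low", LOW), ("high", HIGH)):
    cost = monthly_cost(rates, 10_000_000, 200, 0.5)
    print(f"{label}: ${cost:.2f}")
```

Under these assumptions the example workload lands between roughly $4 and $21 per month, a function that receives no requests costs nothing, and halving the average duration halves the compute portion of the bill.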

This also means that you’re not stuck with the amortization of investments or long-term contracts, but free to change consumption monthly or even daily. This allows teams to change direction or try something new on an extremely small scale, with similarly small operational costs associated with experimentation. This fosters a culture of trying new things, taking small steps, and learning from mistakes early, which are basic tenets of any agile organization.

Serverless is a great option.

As you can see, serverless is a great option for dynamic applications. The provider automatically scales functions horizontally based on the number of incoming requests, which is great for handling high traffic peaks.

In the next posts, we’ll dive deeper into the serverless landscape (on the public clouds and on-prem) and discuss the key challenges with serverless and use cases for real-world applications.

Can’t wait?

To learn more about Serverless, download the Gorilla Guide: Serverless on Kubernetes.