We just returned from the Gartner IT Infrastructure, Operations, and Cloud Strategies Conference (IOCS), held in Las Vegas on Dec 9-12, 2019. The event drew more than 3,500 attendees and more than 120 exhibitors (+50% vs. last year).

Infrastructure, Operations, and Cloud teams who came to Gartner IOCS are positioning themselves to innovate with new technologies like Kubernetes, containers, and edge computing to deliver superior customer experiences in a fast-moving digital economy. But they also need to keep the lights on and continue to manage and maintain their existing mix of legacy and cloud infrastructure. This is a huge challenge.

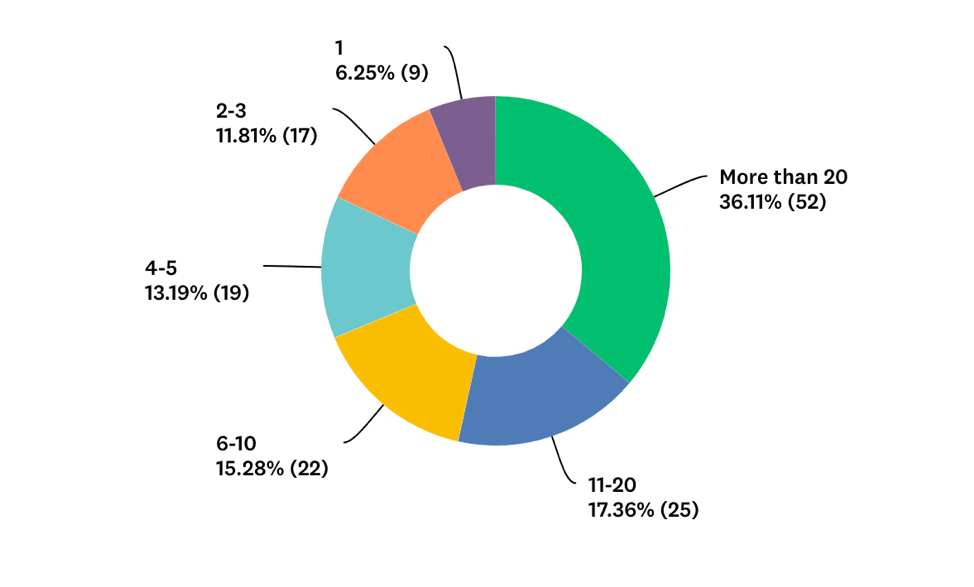

To gain insight into how attendees use Kubernetes, we spoke with more than 350 of them, and more than 250 completed a survey at our booth. Reflecting the nature of the conference, the respondents were predominantly architects, managers, directors, and VPs in the IT infrastructure, operations, and architecture teams of large companies.

We were surprised by how much the game has changed in terms of Kubernetes adoption in the enterprise in just 12 months. As a result, IT Operations teams are now challenged to deliver a reliable Kubernetes infrastructure to their Dev and DevOps teams.

Here are the six most important takeaways from the survey results:

1. IT Ops Teams Are Increasingly Being Asked to Run Kubernetes and Containers

Just over 50% of our survey respondents indicated that their organization is running Kubernetes today. This is a striking figure given that the respondents come predominantly from IT infrastructure and operations management, a group that has traditionally administered servers, storage, networks, databases, VDI, virtualization, backup, disaster recovery (DR), and so on.

For the past few years, software developers, product engineering, and DevOps teams have been driving adoption of Kubernetes for a number of new use cases such as SaaS software, AI/ML, CI/CD pipelines, IoT, and so on. This fast adoption of containers is cutting across the functional roles of typical IT teams. The future of IT infrastructure will be driven by cloud, edge computing, IoT, DevOps, and containerization, challenging the skills of traditional IT staff and demanding a new kind of dexterity and knowledge.

In our in-person conversations, many of the attendees mentioned that IT and operations teams are increasingly being asked to run Kubernetes environments and to support DevOps and development teams working on containerization initiatives.

2. Monitoring, Upgrades, and Security Patching Are the Biggest Operational Challenges

Kubernetes’ complexity and abstraction are necessary and exist for good reasons, but they also make it hard to troubleshoot when things don’t work as expected. This makes observability through comprehensive monitoring even more critical.

No wonder then that 45% of the survey respondents indicated that monitoring is their biggest challenge, followed by upgrades (33%) and security patching (33%).

IT Ops teams need observability tooling, including metrics, logging, and service mesh, and they need to get to the bottom of failures quickly to maintain uptime and meet SLAs. An observability strategy needs to be in place to keep track of all the dynamic components in a containerized microservices ecosystem. Such a strategy lets you see whether your system is operating as expected and alerts you when it isn’t. You can then drill down for troubleshooting and incident investigation, and view trends over time.

The rise of Prometheus (an open source monitoring tool), Fluentd (an open source logging solution), and Istio (an open source service mesh) is a direct response to these needs. However, IT Ops teams supporting Kubernetes now also need to keep up with the constant evolution of these fast-moving projects, several of which (such as Prometheus and Fluentd) have already graduated within the CNCF.

3. 24x7x365 Support and a 99.9% SLA Are Critical for Kubernetes Deployments

Infrastructure and Operations teams are experts at ensuring performance, uptime, availability, and efficiency in production. They have honed these skills, often over several decades, on traditional virtualization platforms and infrastructure environments.

However, what IT teams find unfamiliar and challenging about Kubernetes is the pace at which application changes are expected to take place in production. Development and DevOps teams are driving these changes as they push for faster software release cycles with CI/CD automation, and they expect continuous maintenance, reliability, and agility from the underlying infrastructure platform. This scares operations teams who are used to locking down and protecting production environments to ensure high availability.

IT Ops teams need to architect the entire platform and operations solution from the bare metal up (especially in on-premises data centers or edge environments) so they can confidently ensure workload availability while performing complex day-2 operations such as upgrades, security patching, and troubleshooting infrastructure failures at the network, storage, compute, operating system, and Kubernetes layers.
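To put the 99.9% figure in perspective, the short sketch below converts an availability target into a downtime budget. It assumes a 365-day year and treats a month as one-twelfth of that year; the function name is illustrative, and real SLAs differ in how they measure availability and whether planned maintenance counts against the budget.

```python
# Illustrative arithmetic only: convert an availability percentage into
# the maximum downtime that still meets that target over a given period.
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; assumes a non-leap year


def downtime_budget_minutes(sla_percent: float, period_hours: float) -> float:
    """Return the allowed downtime, in minutes, for an availability target."""
    unavailable_fraction = 1 - sla_percent / 100
    return unavailable_fraction * period_hours * 60


if __name__ == "__main__":
    for sla in (99.0, 99.9, 99.99):
        per_year = downtime_budget_minutes(sla, HOURS_PER_YEAR)
        # Assumed "average" month: one-twelfth of a 365-day year (730 hours)
        per_month = downtime_budget_minutes(sla, HOURS_PER_YEAR / 12)
        print(f"{sla}% -> {per_year / 60:.2f} h/year, {per_month:.1f} min/month")
```

At 99.9%, the budget works out to about 8.76 hours per year, or roughly 43.8 minutes per month. Under an SLA that counts all downtime, every upgrade, security patch, and infrastructure failure has to fit inside that window, which is why non-disruptive day-2 operations matter so much.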

4. Kubernetes Edge Deployments in Early Phase But Expected to Grow Rapidly

Edge computing is expected to account for a major share of enterprise computing. Gartner’s Top 10 Strategic Technology Trends for 2020 named “The Empowered Edge” and “Distributed Cloud” as two trends, both related to the management of edge locations. Two specific data points are worth noting:

“By 2023, there could be more than 20 times as many smart devices at the edge of the network as in conventional IT roles.”

“Distributed cloud allows data centers to be located anywhere. This solves both technical issues like latency and also regulatory challenges like data sovereignty. It also offers the benefits of a public cloud service alongside the benefits of a private, local cloud.”

Gartner also recently noted that around 10% of enterprise-generated data is created and processed outside a traditional centralized data center or cloud today, and that this share will reach 75% by 2025. Reflecting this shift, Gartner dedicated an entire conference track to edge computing topics. This underscores the rapid pace at which enterprises are looking to deploy edge solutions.

The variety of edge applications and the scale at which they are being deployed is mind-boggling, and our survey reflected this diversity. The top two applications being deployed at the edge are edge gateways/access control (36%) and Remote Office and Branch Office (32%).

The use cases we discussed with attendees included edge locations owned by the company (e.g. retail stores, cruise liners, oil and gas rigs, manufacturing facilities), and in the case of on-premises software companies, their end customers’ data centers. The edge deployments typically need to support heterogeneity of location, remote management and autonomy at scale; enable developers; and integrate well with public cloud and/or core data centers.

According to the survey data, 42.9% of these edge locations run between 1 and 10 servers, and 35.7% run between 26 and 100 servers. Locations at the larger end of that range could be characterized as “thick” edges, since they run a significant number of servers.

However, many challenges need to be addressed. Specifically, companies need to figure out consistent and scalable edge operations that can manage dozens or hundreds of pseudo-data centers with low or no touch, usually with no on-site staff and limited access.

5. Multi-Cloud Deployments Are The New Normal

A majority of respondents indicated that they run Kubernetes on BOTH on-premises and public cloud infrastructure, and in some cases also at the edge. This is further evidence that multi-cloud and hybrid cloud deployments are becoming the new normal.

As the number of mixed environments (private and public cloud as well as the edge) is projected to keep growing, avoiding lock-in and ensuring portability, interoperability, and consistent management of Kubernetes across all types of infrastructure will become even more critical for enterprises in the years ahead.

6. DIY Kubernetes Is Not Viable for Most Enterprises

The majority of enterprises looking to modernize their infrastructure with containers will not take the do-it-yourself (DIY) Kubernetes path, given the operational complexity, cost, and staffing challenges. Many people we talked to said that they do not have a deep bench of Kubernetes talent internally today, and that hiring and retaining talent to run Kubernetes operations is increasingly difficult. Only 12% picked the DIY option in our survey, while the rest picked a vendor-supported distribution, a managed service, or a public cloud option.

Summary

Kubernetes adoption is accelerating in the enterprise, but the complexity of Kubernetes makes it difficult to run and operate at scale, particularly in multi-cloud and hybrid environments that span on-premises data centers, edge locations, and public cloud infrastructure.

If you are looking for help scaling up Kubernetes without taking on the operational burden of a DIY approach, please feel free to contact us for a free Kubernetes Day 2 operations assessment.

About Platform9 Managed Kubernetes (PMK)

Platform9 Managed Kubernetes (PMK) enables enterprises to easily run Kubernetes-as-a-Service at scale, leveraging their existing environments, with no operational burden. The solution ensures fully automated Day-2 operations with a 99.9% SLA on any environment: in data centers, public clouds, or at the edge. This is delivered using a unique SaaS Management Plane that remotely monitors, optimizes, and heals your Kubernetes clusters and underlying infrastructure. With automatic security patches, upgrades, proactive monitoring, troubleshooting, auto-healing, and more, you can confidently run production-grade Kubernetes anywhere.

Learn more about our Managed Kubernetes solution, or check out the sandbox to try it yourself.

Survey Demographics: