As 2023 is proving to be a year where most ‘happenings of note’ are negative, it was refreshing and uplifting to see the successful transformation of LinuxCon into the Open Source Summit. The event was intimate and full of creative, insightful content, but there’s no hiding that everyone expected more attendees. That’s not a negative, and I don’t think it’s a sign of waning interest. The smaller groups spurred conversation and collaboration, and gave even the most introverted attendee an opportunity to engage.

Open Source Summit is a collection of micro-conferences. Of most interest to me was the AI & Big Data track, with speakers from IKEA, Intel, IBM Research, Red Hat and more. The event was an opportunity to learn, engage and network, and, to my astonishment, I walked away surprised.

Underclocking?

Wait, what? Surprised! Yes, surprised. Let me explain. In 2003, with my bank account flush with cash from working 20+ hours a week at KFC, I bought the parts for a PC, built it, slapped on a big-ass heatsink and powerful fans, and proceeded to overclock it.

Twenty years later, IBM Research presented a live demo, and the foundational APIs, for underclocking Nvidia V100 GPUs to reduce power consumption and heat, along with early benchmark results showing how underclocking affects machine learning model training and inference times.

Underclocking means intentionally lowering a GPU’s clock speed, and with it its throughput, to conserve power and therefore reduce heat. This is monumental. But the team didn’t stop there. They built the ability to adjust the GPU’s performance directly into the ML model’s application manifest (the Kubernetes glue: the instructions that tell the cluster how to run an application). This means that when a model is being served, its GPU can be intentionally underclocked for the most power-efficient execution without impacting the user experience! We are all impatient, but is 1 second slow? Is 1.5 seconds too long to wait for an LLM to respond?
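To make the latency question concrete, here is a minimal sketch of choosing a serving clock: given benchmark runs at several clock speeds, pick the lowest-power setting whose 95th-percentile latency still meets a target such as 1.5 seconds. The `ClockBenchmark` type, the `pick_clock_for_slo` function and every number below are hypothetical illustrations, not IBM Research’s API or measurements.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ClockBenchmark:
    clock_mhz: int        # GPU core clock used for the benchmark run
    p95_latency_s: float  # 95th-percentile response time observed at this clock
    avg_power_w: float    # average board power draw during the run


def pick_clock_for_slo(results: Sequence[ClockBenchmark],
                       latency_slo_s: float) -> Optional[ClockBenchmark]:
    """Return the lowest-power run that still meets the latency SLO, or None if none does."""
    eligible = [r for r in results if r.p95_latency_s <= latency_slo_s]
    if not eligible:
        return None
    return min(eligible, key=lambda r: r.avg_power_w)


# Hypothetical numbers for illustration only.
benchmarks = [
    ClockBenchmark(1530, 0.9, 250.0),
    ClockBenchmark(1200, 1.1, 190.0),
    ClockBenchmark(900, 1.4, 140.0),
    ClockBenchmark(600, 2.1, 110.0),
]

choice = pick_clock_for_slo(benchmarks, latency_slo_s=1.5)
print(choice.clock_mhz)  # 900: the 600 MHz run is cheaper but misses the 1.5 s target
```

Tightening the target to 1.0 second would push the choice back up to the full 1530 MHz clock, which is exactly the trade-off between patience and power the question above is asking about.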

The most astounding result the team showed was that at lower clock speeds, while running a live workload, the Nvidia V100 GPU used less power and ran cooler than it did in its “idle” state!

What’s the real-world implication of this?

Less power = less electricity = less carbon.

Lower heat = less cooling requirements = less electricity = less carbon.
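The chain above can be put into rough numbers. This sketch assumes energy is average power multiplied by runtime, models cooling and other facility overhead with a fixed PUE (power usage effectiveness) multiplier, and uses made-up figures for GPU power, runtime, PUE and grid carbon intensity. It also shows the one caveat worth checking: underclocking only saves energy if the drop in power outweighs any increase in runtime.

```python
def facility_energy_kwh(avg_gpu_power_w: float, runtime_h: float, pue: float) -> float:
    """Facility energy for a job: GPU energy (W x h) scaled by PUE to cover cooling and overhead."""
    return avg_gpu_power_w * runtime_h / 1000.0 * pue


def carbon_kg(energy_kwh: float, grid_kg_co2_per_kwh: float) -> float:
    """Emissions from that electricity at an assumed grid carbon intensity."""
    return energy_kwh * grid_kg_co2_per_kwh


# Hypothetical job: full clock at 250 W for 1 h vs. underclocked at 150 W taking 1.3 h.
PUE = 1.5   # assumed data-centre overhead factor (cooling treated as proportional to IT load)
GRID = 0.4  # assumed kg CO2 per kWh

full_clock = facility_energy_kwh(250, 1.0, PUE)
underclocked = facility_energy_kwh(150, 1.3, PUE)

print(f"full clock:   {full_clock:.4f} kWh, {carbon_kg(full_clock, GRID):.4f} kg CO2")
print(f"underclocked: {underclocked:.4f} kWh, {carbon_kg(underclocked, GRID):.4f} kg CO2")
```

A fixed PUE is a simplification: real cooling overhead does not scale perfectly with IT load, but it captures the direction of the “lower heat = less cooling” step.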

If you want to try it yourself, just follow the example here: https://github.com/wangchen615/OSSNA23Demo

In the future, these capabilities could enable dynamic power management that automatically tunes for user experience while always minimizing power consumption.
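One way such autotuning might work, sketched under assumptions: a feedback loop that lowers the clock one step while observed latency has comfortable headroom under the target, and raises it again when the target is missed. `ClockAutotuner`, its 20% headroom band and the clock list are hypothetical; actually applying the chosen clock to the GPU (for example through NVIDIA’s NVML management library) is deliberately left out.

```python
from typing import List, Sequence


class ClockAutotuner:
    """Hypothetical feedback controller that picks a GPU clock from observed latency.

    It lowers the clock one step while latency is comfortably under the SLO and
    raises it one step when the SLO is missed. Applying the clock to the GPU is out of scope.
    """

    def __init__(self, clocks_mhz: Sequence[int], latency_slo_s: float, headroom: float = 0.2):
        if not clocks_mhz:
            raise ValueError("need at least one supported clock")
        self.clocks: List[int] = sorted(clocks_mhz)
        self.slo = latency_slo_s
        self.headroom = headroom  # step down only when latency < slo * (1 - headroom)
        self.index = len(self.clocks) - 1  # start at the highest clock, the safe default

    @property
    def clock_mhz(self) -> int:
        return self.clocks[self.index]

    def observe(self, p95_latency_s: float) -> int:
        """Record one latency measurement and return the clock to use next."""
        if p95_latency_s > self.slo:
            # Missing the target: speed up, unless already at the top clock.
            self.index = min(self.index + 1, len(self.clocks) - 1)
        elif p95_latency_s < self.slo * (1 - self.headroom):
            # Plenty of headroom: slow down to save power, unless already at the bottom.
            self.index = max(self.index - 1, 0)
        # Otherwise latency sits inside the band, so hold the current clock.
        return self.clock_mhz


tuner = ClockAutotuner([900, 1200, 1530], latency_slo_s=1.5)
for latency in [0.5, 0.6, 1.6, 1.3]:
    print(tuner.observe(latency))  # prints 1200, 900, 1200, 1200 in turn
```

The gap between “step down” (below 1.2 s here) and “step up” (above 1.5 s) is a simple hysteresis band, which keeps the controller from flapping between two clocks when latency hovers near the target.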

What did I learn? Put simply, the cloud is not the only place you can run machine learning! We all know Musk bought 10,000 GPUs. But IKEA did too, and so did G-Research. And both are running on top of Kubernetes.

IKEA’s on-prem machine learning

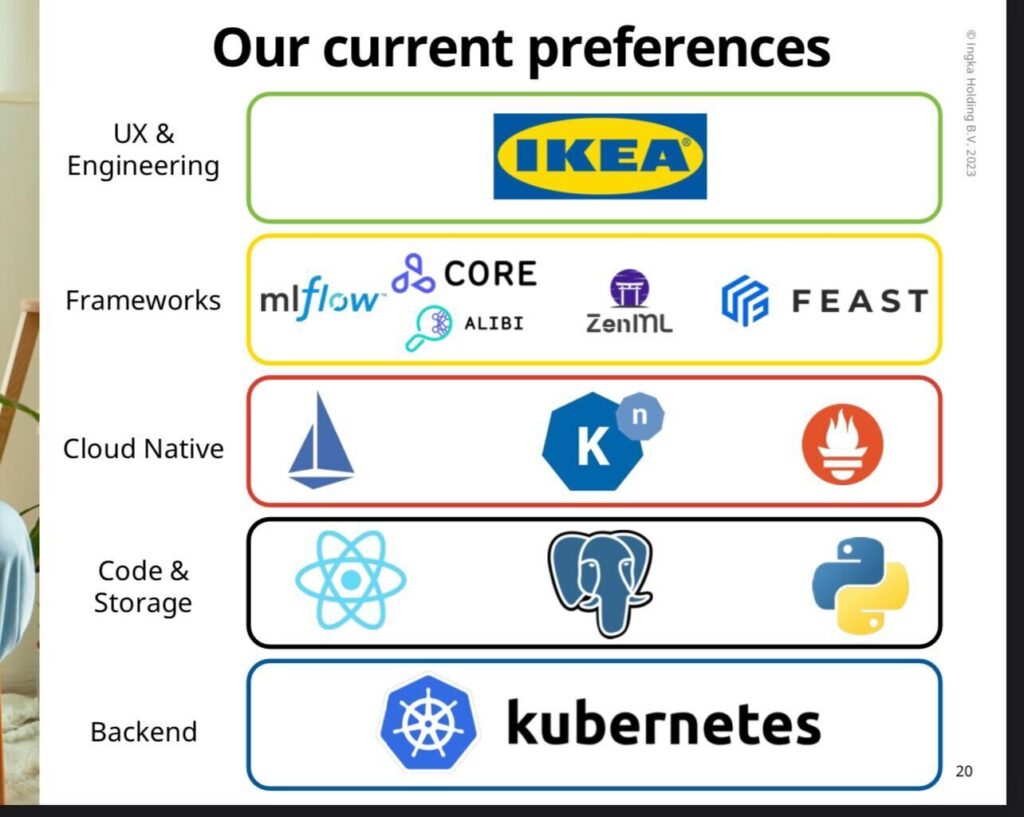

On Day 2, IKEA presented an engaging session in which Karan Honavar shared IKEA’s journey, their goals, and their production cloud-native ML stack, which delivers training on-premises and model inference in the cloud.

Many in the industry would question why anyone would run on-premises at all. However, if you do the math, the hardware investment pays for itself within months (around three, in general). It doesn’t even require capital investment: you can rent your servers (just ask Dell, HPE or Lenovo). Once you are up and running, you no longer need to compete for GPU availability in the public cloud. Beyond this, there are very real data privacy and data sovereignty requirements that lead businesses to question whether training in the cloud is compliant.
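The “do the math” step is a simple payback calculation. This sketch assumes the only costs are an upfront hardware spend plus a monthly on-prem running cost (power, space, staff), compared against a monthly cloud bill for equivalent capacity. The function name and every figure are hypothetical, chosen only to show how a roughly three-month payback could arise.

```python
import math


def breakeven_months(upfront_cost: float, monthly_cloud_cost: float,
                     monthly_onprem_cost: float) -> float:
    """Months until owning the hardware costs less in total than renting equivalent cloud capacity.

    Solves upfront + onprem * m = cloud * m for m.
    """
    monthly_saving = monthly_cloud_cost - monthly_onprem_cost
    if monthly_saving <= 0:
        return math.inf  # on-prem never pays back if it costs as much per month as the cloud
    return upfront_cost / monthly_saving


# Hypothetical figures: $300k of GPU servers and $10k/month to run them,
# versus $110k/month for comparable cloud GPU capacity.
print(breakeven_months(300_000, 110_000, 10_000))  # 3.0
```

With rented servers, the upfront cost can drop to near zero and the lease moves into the monthly on-prem cost, so the comparison becomes a straight month-by-month one.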

The IKEA stack is a who’s who of machine learning tools, spanning infrastructure (Kubernetes), serving (Knative), CD (Argo CD), pipelines (MLflow, ZenML), feature store (Feast) and model drift monitoring (logging, Prometheus). You can view the presentation here: https://ossna2023.sched.com/event/1K5Ci

Big hitters and new open source projects

How do you top these sessions? You open the firehose and bring in the heavy hitters: Intel talking about inferencing medical images at the edge, IBM diving deep into Transformers and Relational Observability, and Red Hat.

Red Hat kicked off Day 1 with “A detailed discussion on how they integrated Ray with Open Data Hub to improve the user experience for developing large machine learning models” (their words, not mine).

To break it down, the session introduced two open source projects, KubeRay and Project CodeFlare, which, combined, let data scientists set GPU and resource requests directly from their Jupyter notebooks! No Kubernetes knowledge required at all. You can grab the presentation here: https://ossna2023.sched.com/event/1K59i/accelerate-model-training-with-an-easy-to-use-high-performance-distributed-aiml-stack-for-the-cloud-michael-clifford-erik-erlandson-red-hat
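To show what “no Kubernetes knowledge required” hides, here is a rough sketch of the kind of object a notebook-side tool has to produce: a few plain parameters (workers, GPUs, CPUs, memory) turned into a KubeRay `RayCluster` manifest. This is not the CodeFlare SDK’s or KubeRay’s own API; `ray_cluster_manifest` and the default image tag are hypothetical, and the field names follow the `RayCluster` custom resource as I understand it (`ray.io/v1` in recent KubeRay releases, older ones used `v1alpha1`), so check them against the KubeRay documentation before use.

```python
import json
from typing import Any, Dict


def ray_cluster_manifest(name: str, namespace: str, workers: int, gpus_per_worker: int,
                         cpus_per_worker: int, memory_gib: int,
                         image: str = "rayproject/ray:2.5.0") -> Dict[str, Any]:
    """Hypothetical helper: build a RayCluster object from notebook-level resource settings."""

    def container(container_name: str, gpus: int) -> Dict[str, Any]:
        # Simplification: head and workers get the same CPU and memory.
        resources = {"cpu": str(cpus_per_worker), "memory": f"{memory_gib}Gi"}
        if gpus:
            # Extended resource exposed by the NVIDIA Kubernetes device plugin;
            # requests and limits must match for extended resources.
            resources["nvidia.com/gpu"] = str(gpus)
        return {
            "name": container_name,
            "image": image,
            "resources": {"requests": dict(resources), "limits": dict(resources)},
        }

    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayCluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "headGroupSpec": {
                "rayStartParams": {},
                "template": {"spec": {"containers": [container("ray-head", 0)]}},
            },
            "workerGroupSpecs": [{
                "groupName": "gpu-workers",
                "replicas": workers,
                "minReplicas": workers,
                "maxReplicas": workers,
                "rayStartParams": {},
                "template": {"spec": {"containers": [container("ray-worker", gpus_per_worker)]}},
            }],
        },
    }


manifest = ray_cluster_manifest("demo", "data-science", workers=2, gpus_per_worker=1,
                                cpus_per_worker=4, memory_gib=16)
print(json.dumps(manifest, indent=2))
```

The JSON output could be saved to a file and applied with `kubectl apply -f`, which is the step tools like CodeFlare take off the data scientist’s plate.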

To wrap up: it was a great event, and I’ve only scratched the surface here. I can’t wait to attend the next one and see the progress everyone has made.