Writing code and getting it live in production has never been easy, and Kubernetes – for all of its benefits – is introducing new challenges.

In traditional virtual environments, engineers have always had to handle build issues and feedback from QA, then address issues found in integration environments. Ultimately, they could spend days on just one pull request.

Containerized cloud native platforms were meant to be the new promised land. But all of a sudden “works on my laptop” has turned into “works on my cluster,” and businesses are missing their launch goals.

When did developers start getting their own clusters? When they were told to meet a deadline and their only choice was to stop waiting for operations.

How did we end up here? It’s thanks to a combination of complicated Role-Based Access Control (RBAC), the difficulty of managing multiple clusters consistently, and few or no options in EKS for developer self-service.

You see, the most common approach is to configure each EKS cluster so that developers can reach the clusters their applications deploy to, with RBAC configured to limit what they can do there. Once this is done, each developer needs a set of AWS tools installed on their workstation. Lastly, they need to learn kubectl.

There are alternatives to opening AWS to every developer and forcing them to learn kubectl commands. One alternative removes the need to give developers access to AWS and requires no additional tools on their workstations, while also removing the headache of managing Kubernetes RBAC.

If you are using EKS and want to provide your developers with access to your clusters using the AWS tooling, you can follow the checklist below. It will take you through the requirements and each step to set up EKS for secure user access that’s integrated with your enterprise identity.

Back to the basics: providing EKS cluster access

How do I provision access to EKS clusters for my engineers using native AWS tools?

There are two approaches: EKS using AWS IAM, and EKS using OIDC. Both depend on the same basic premise – Federated Identity and Access Management. For simplicity, we will focus on EKS using AWS Identity and not Kubernetes OIDC.

EKS is integrated into AWS Identity and Access Management, meaning that to provision access to a cluster and associated workloads you need to provide each developer access to AWS, and then specifically each EKS Cluster. AWS has documented the steps here: https://docs.aws.amazon.com/eks/latest/userguide/add-user-role.html

At a high level, each developer must have access to AWS and have either the AWS CLI or AWS IAM Authenticator installed on their workstation, and the cluster must be updated to recognize either the user’s identity or a Group the user belongs to. With these elements in place, engineers can generate a kubeconfig and use kubectl to interact with their workloads.
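To make the kubeconfig step concrete, here is a minimal Python sketch of the kind of file each engineer ends up with. It assumes the AWS CLI route, where kubectl calls `aws eks get-token` to obtain a credential; in practice `aws eks update-kubeconfig` writes an equivalent file for you. The function name, the user naming scheme, and the placeholder endpoint and certificate values are all illustrative assumptions, not part of any AWS tooling.

```python
import json


def build_eks_kubeconfig(cluster_name, endpoint, ca_data, region):
    """Return a kubeconfig dict whose user authenticates via `aws eks get-token`.

    The function and the "<cluster>-aws-user" naming are hypothetical; only the
    kubeconfig layout and the exec credential plugin fields follow Kubernetes.
    """
    user_name = f"{cluster_name}-aws-user"
    return {
        "apiVersion": "v1",
        "kind": "Config",
        "clusters": [{
            "name": cluster_name,
            "cluster": {
                "server": endpoint,
                "certificate-authority-data": ca_data,
            },
        }],
        "users": [{
            "name": user_name,
            "user": {
                # kubectl runs this command and uses the token it prints.
                "exec": {
                    "apiVersion": "client.authentication.k8s.io/v1beta1",
                    "command": "aws",
                    "args": ["--region", region, "eks", "get-token",
                             "--cluster-name", cluster_name],
                },
            },
        }],
        "contexts": [{
            "name": cluster_name,
            "context": {"cluster": cluster_name, "user": user_name},
        }],
        "current-context": cluster_name,
    }


if __name__ == "__main__":
    # Placeholder values; a real endpoint and CA bundle come from the cluster.
    config = build_eks_kubeconfig(
        "demo-cluster", "https://example.invalid", "PLACEHOLDER_CA", "us-west-2")
    # JSON is valid YAML, so kubectl can read this output as a kubeconfig.
    print(json.dumps(config, indent=2))
```

Note that the file contains no secret of its own: the engineer still needs working AWS credentials and the AWS CLI on their workstation for the `exec` step to succeed, which is exactly the dependency described above.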

Below is a checklist of tasks with links to specific guides to help you through the process.

EKS Cluster Access Checklist

1. Enable AWS SSO (best practice): Federate AWS Identity and Access Management with your corporate identity. SSO simplifies access, improves security, and makes onboarding/offboarding a single step. The AWS guide is here: https://aws.amazon.com/identity/federation/

2. Set up Kubernetes ClusterRoles and RoleBindings: This is the crucial step no matter where you run clusters or how a user is authenticated. Read more on K8s RBAC here: https://kubernetes.io/docs/reference/access-authn-authz/rbac/

3. Ensure Group object continuity (best practice): For secure access, the Groups set up in your enterprise identity provider must be mapped to AWS and also manually added to each Kubernetes ClusterRoleBinding and/or RoleBinding.

4. Update the EKS cluster’s ConfigMap: There is a great guide here: https://aws.github.io/aws-eks-best-practices/security/docs/iam/#use-tools-to-make-changes-to-the-aws-auth-configmap

   - There are a few key steps; the main one is to add the user or the role (best practice) to the authentication ConfigMap. WARNING: This can break access to the cluster. AWS advises using eksctl.

   - The ConfigMap is updated to match the Groups or Users specified in the RBAC policies from item 2. If you’re using Groups, item 3 must be completed first: the group needs to exist in your IDP, be mapped into AWS, and then be added to the ConfigMap.

5. Apply the ConfigMap: After updating the ConfigMap, it must be re-applied.

   - Wait for all nodes to be ‘ready’: As the new ConfigMap is applied, each node in the cluster is removed and added back.

6. Distribute command line binary: EKS uses either the AWS CLI or the AWS IAM Authenticator for Kubernetes for authentication, so each engineer must have one of these installed.

   - Install IAM Authenticator following the guide here: https://docs.aws.amazon.com/eks/latest/userguide/install-aws-iam-authenticator.html

7. Create and distribute the EKS kubeconfig: Use the AWS CLI or manually create a kubeconfig file. Each kubeconfig is unique to the user as identified by their account_id.

NOTE: Items 2, 3, 4, 5 and 7 must be completed for each cluster you create and need to provision access to. Users will need to manage and maintain their own kubeconfig files.
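The ConfigMap update in the checklist is the step most likely to go wrong, so here is a rough Python sketch of what the change amounts to. It assumes the standard `aws-auth` ConfigMap in the `kube-system` namespace and its `mapRoles` field; the helper names, the account ID, the role names, and the `dev-team` group are hypothetical. It is meant to illustrate the data being edited, not to replace eksctl.

```python
def render_map_roles(entries):
    """Render role mappings as the block-YAML text stored under data.mapRoles."""
    lines = []
    for entry in entries:
        lines.append(f"- rolearn: {entry['rolearn']}")
        lines.append(f"  username: {entry['username']}")
        lines.append("  groups:")
        lines.extend(f"    - {group}" for group in entry["groups"])
    return "\n".join(lines) + "\n"


def add_role_mapping(existing, rolearn, username, groups):
    """Return a new list with the mapping appended; existing entries are kept.

    Keeping the existing entries matters: dropping the node role mapping is
    one way an edit can break access to the cluster.
    """
    if any(entry["rolearn"] == rolearn for entry in existing):
        raise ValueError(f"{rolearn} is already mapped")
    new_entry = {"rolearn": rolearn, "username": username, "groups": list(groups)}
    return existing + [new_entry]


def aws_auth_configmap(entries):
    """Build the aws-auth ConfigMap object for the given role mappings."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "aws-auth", "namespace": "kube-system"},
        "data": {"mapRoles": render_map_roles(entries)},
    }


if __name__ == "__main__":
    # Hypothetical account ID and role names, for illustration only.
    node_mapping = {
        "rolearn": "arn:aws:iam::111122223333:role/example-node-role",
        "username": "system:node:{{EC2PrivateDNSName}}",
        "groups": ["system:bootstrappers", "system:nodes"],
    }
    entries = add_role_mapping(
        [node_mapping],
        "arn:aws:iam::111122223333:role/example-eks-developers",
        "eks-developer",
        ["dev-team"],  # must match the Group named in your RBAC bindings
    )
    print(aws_auth_configmap(entries)["data"]["mapRoles"])
```

The group listed in the mapping only grants access if a RoleBinding or ClusterRoleBinding on that same cluster names it, which is why items 2 through 4 have to stay in sync on every cluster.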

EKS access the fast way

Natively, EKS requires each cluster to be built and then configured for access through a series of manual steps. Manually configuring RBAC and user access is a requirement that Platform9 has always worked to remove and simplify for any cluster. When we added support for EKS, this was at the top of our list.

When Platform9 builds an EKS cluster, the API server’s authentication is automatically configured to interface with Platform9. This means that every cluster managed by Platform9 can be accessed directly without AWS access.

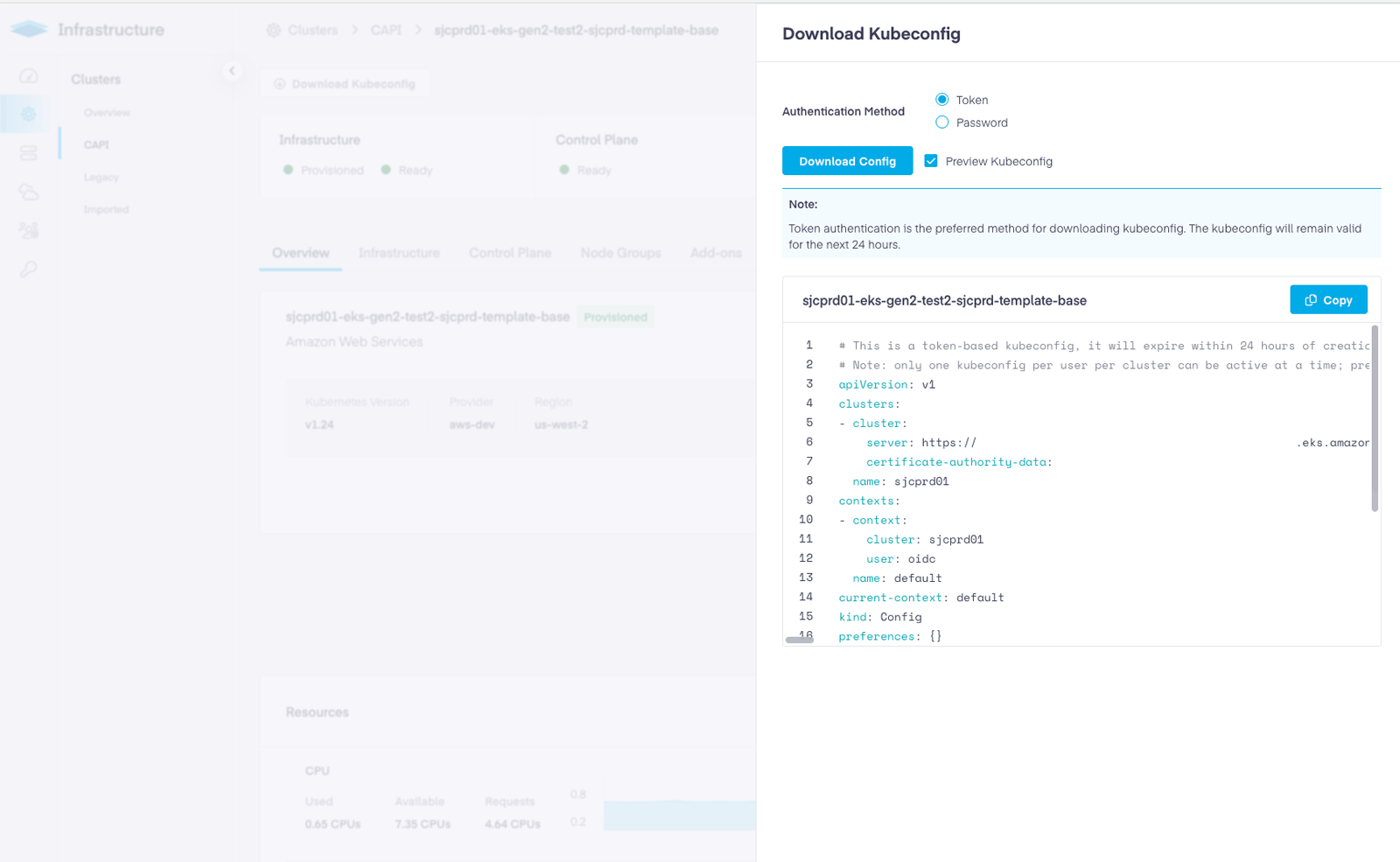

For a developer trying to access an EKS cluster, this means simply downloading a preconfigured kubeconfig and that’s it – done, no binaries to install, they’re good to go.

Our approach doesn’t route traffic through Platform9. Users still connect directly to the cluster, exactly as upstream Kubernetes was designed, creating a high-performance and secure environment.

The Kubernetes Authentication Chain is configured on the cluster to resolve the identity against Platform9. The authentication workflow is set up automatically during cluster creation and is completely transparent to the end user.

Access on the cluster is mapped to either a user’s username or their group membership, and can be quickly configured from the Platform9 UI. This removes the need to work directly with complex RBAC YAML files.

Beyond Kubeconfig – Platform9 K8s IDE

Not every user wants to use kubectl. Trying to manually correlate a ReplicaSet to its Pods and then onto the associated Persistent Volume Claim can be cumbersome, to say the least. Some users may be new to Kubernetes and unaware of what kubectl is, or how to use it. Or maybe a user doesn’t want to enable their VPN – they’re away from their keyboard and they just want to quickly check in on an issue. For these users, Platform9 provides a dedicated Kubernetes workload management app, the K8s IDE.

The IDE uses the same Identity and RBAC combination as the kubeconfig + kubectl method above. The web app generates a valid kubeconfig and, via the Platform9 Comms Tunnel, proxies every request to the cluster. This ensures that user access is based on the access specific to any one cluster and that the network traffic is encrypted.

The IDE provides a rich graphical interface into multiple clusters simultaneously, making it easy to quickly navigate and compare Pods running in a production cluster against staging or development. Data in the web app is real time; the app exposes container log files and application manifests, and automatically aggregates related objects. When you drill into a Deployment you can see the related Pods, Services, PVCs, and more. In providing an aggregated and centralized view we make it simple for new users and efficient for advanced users.

To get started, you can import an existing EKS cluster or create a new one using Platform9. Once the cluster is attached you need to ensure that Platform9 is connected to your enterprise identity via SAML, or use a local user within Platform9.

Platform9 EKS IAM Setup Using SAML

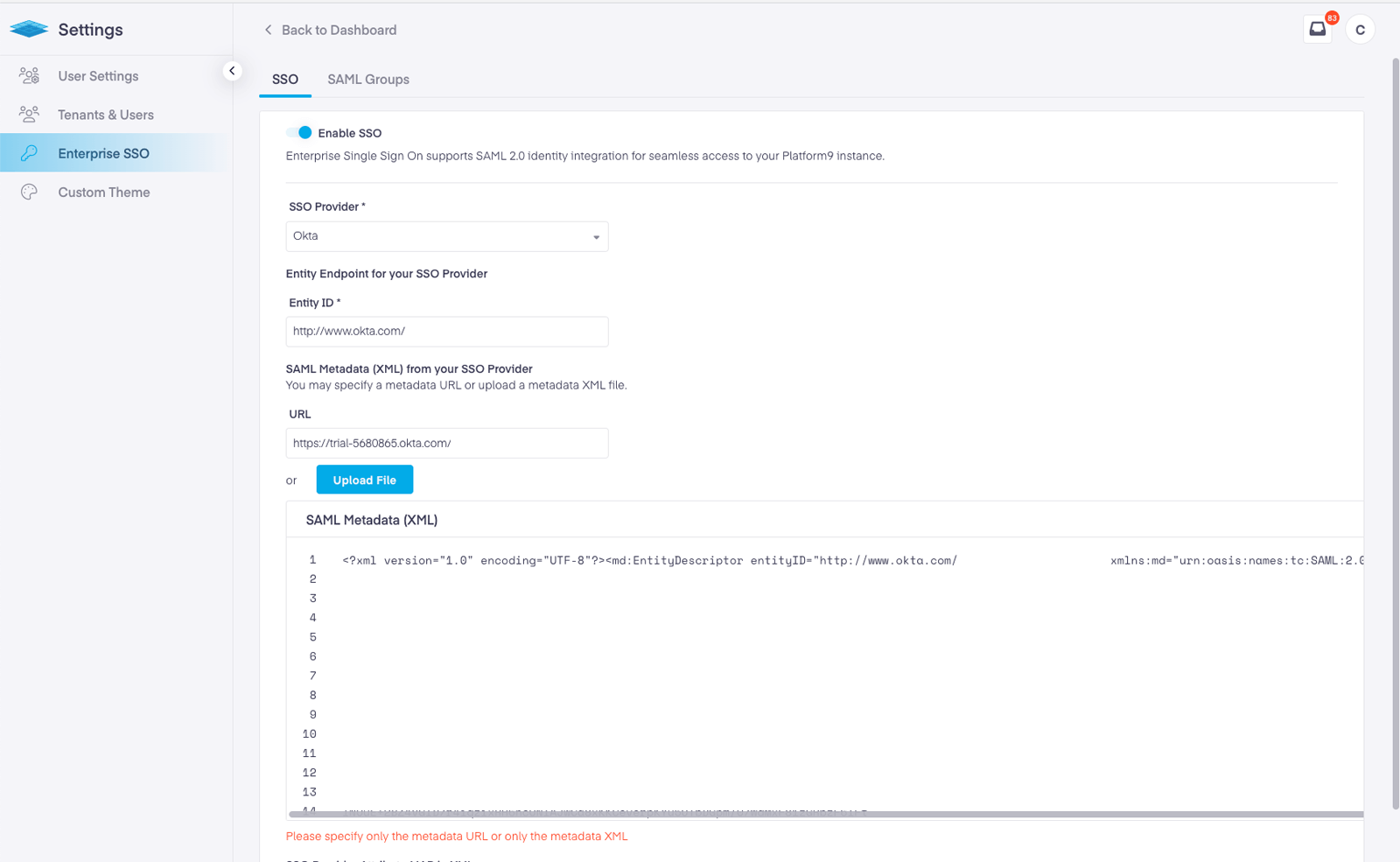

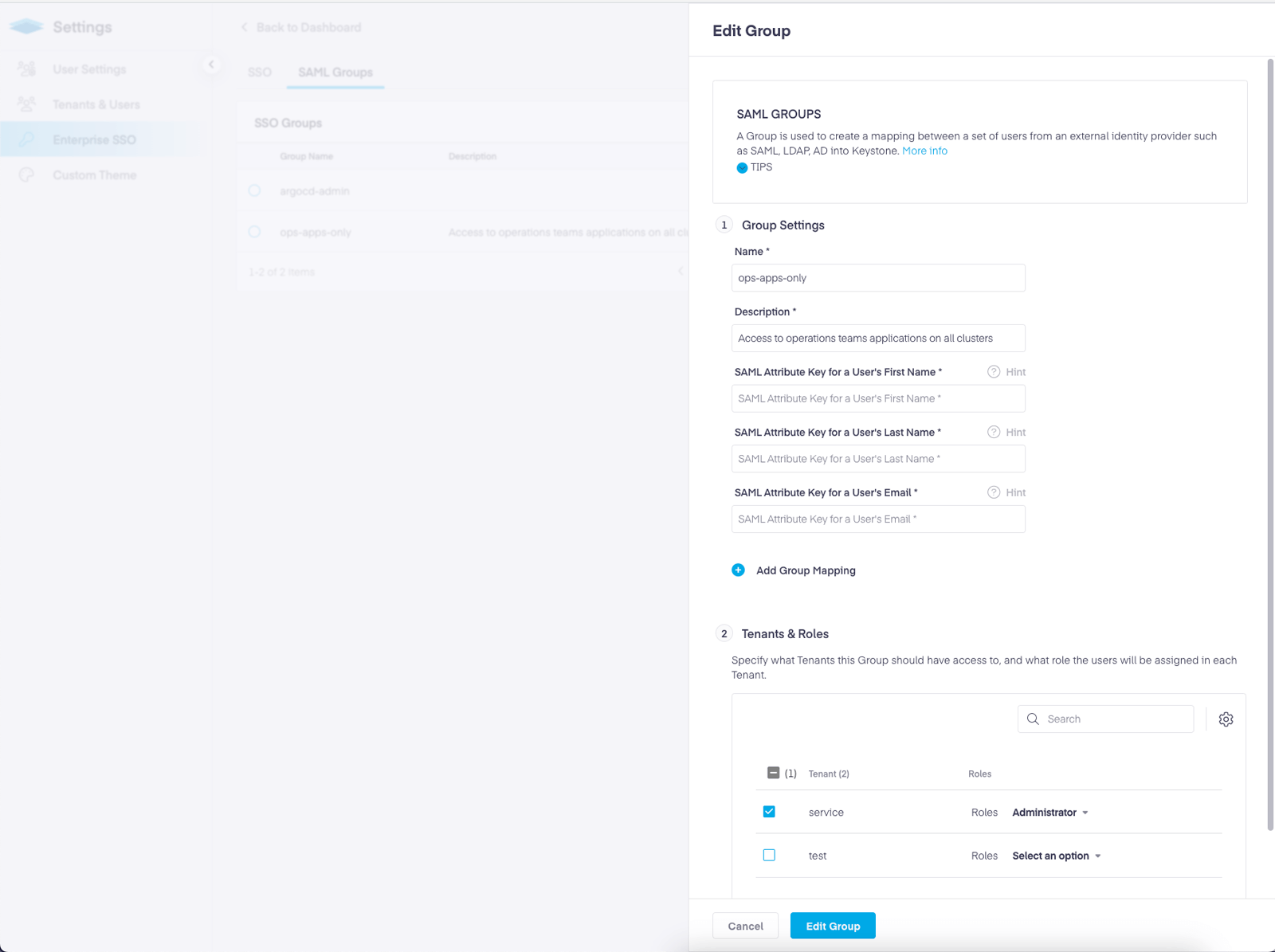

For DevOps and PlatformOps teams we have greatly simplified identity and access management. To configure SSO and map groups, admins simply need to configure SAML SSO and then optionally set up one or more groups, where the groups are federated from your enterprise IDP. Below are the steps.

Step 1 – Set up SAML

Step 2 – Create Group Mapping

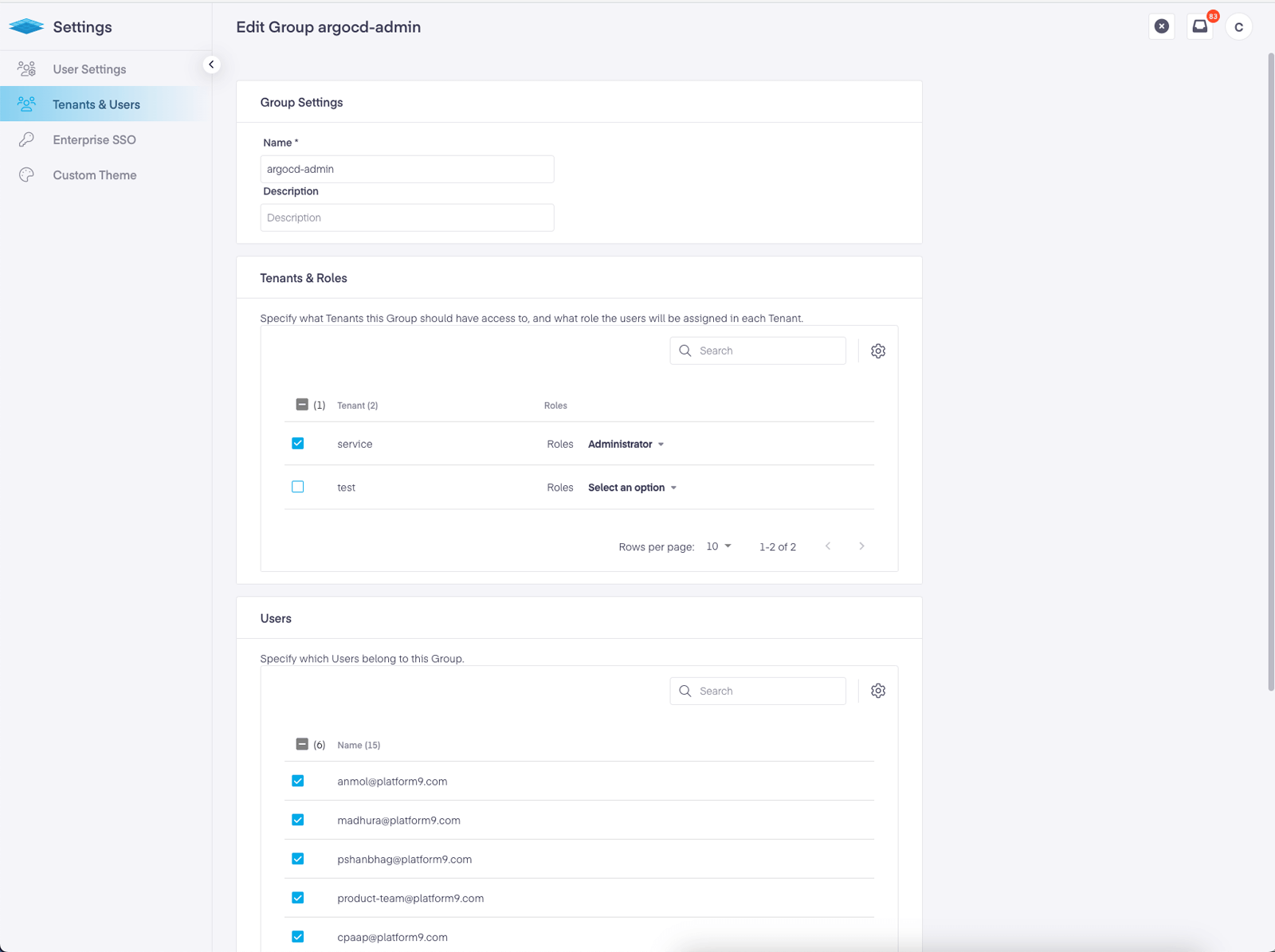

If you like you can also create local users and groups within Platform9.

To provision access to a cluster, a user or a group needs to be added to the cluster’s RoleBinding or ClusterRoleBinding. This can be done using the RBAC editor in the UI, through the Profile Engine’s RBAC policy controls, or automatically by using the Profile Engine to create a cluster, during which Platform9 will apply your preconfigured RBAC policy.

To leverage the RBAC editor, follow the steps below.

NOTE: This step can be completed once, then, using the Profile Engine, the policy can be applied as a profile to any cluster.

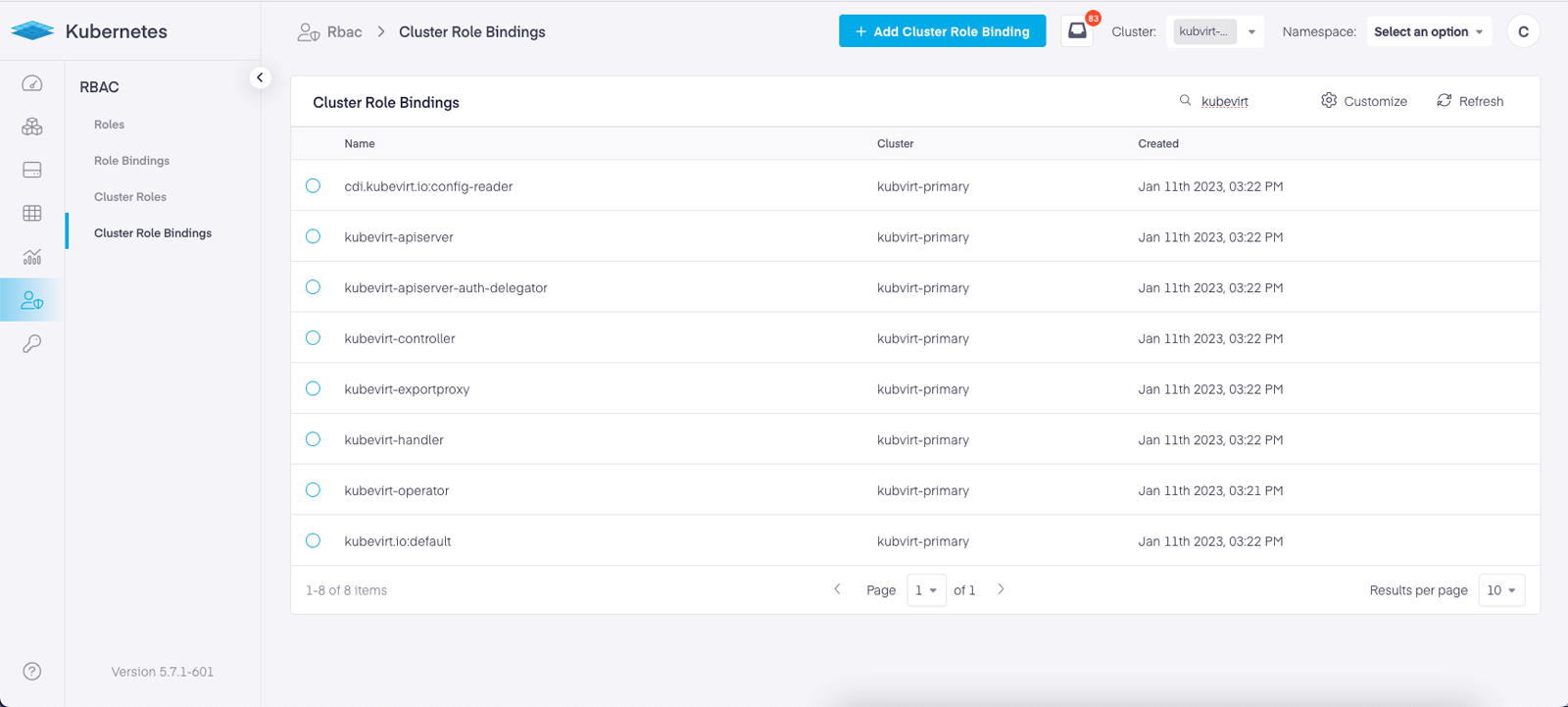

Step 3 – Navigate to Infrastructure, then select your cluster from the dropdown menu in the header (this can be changed at any time).

Then select RBAC -> ClusterRole Binding from the left-hand navigation.

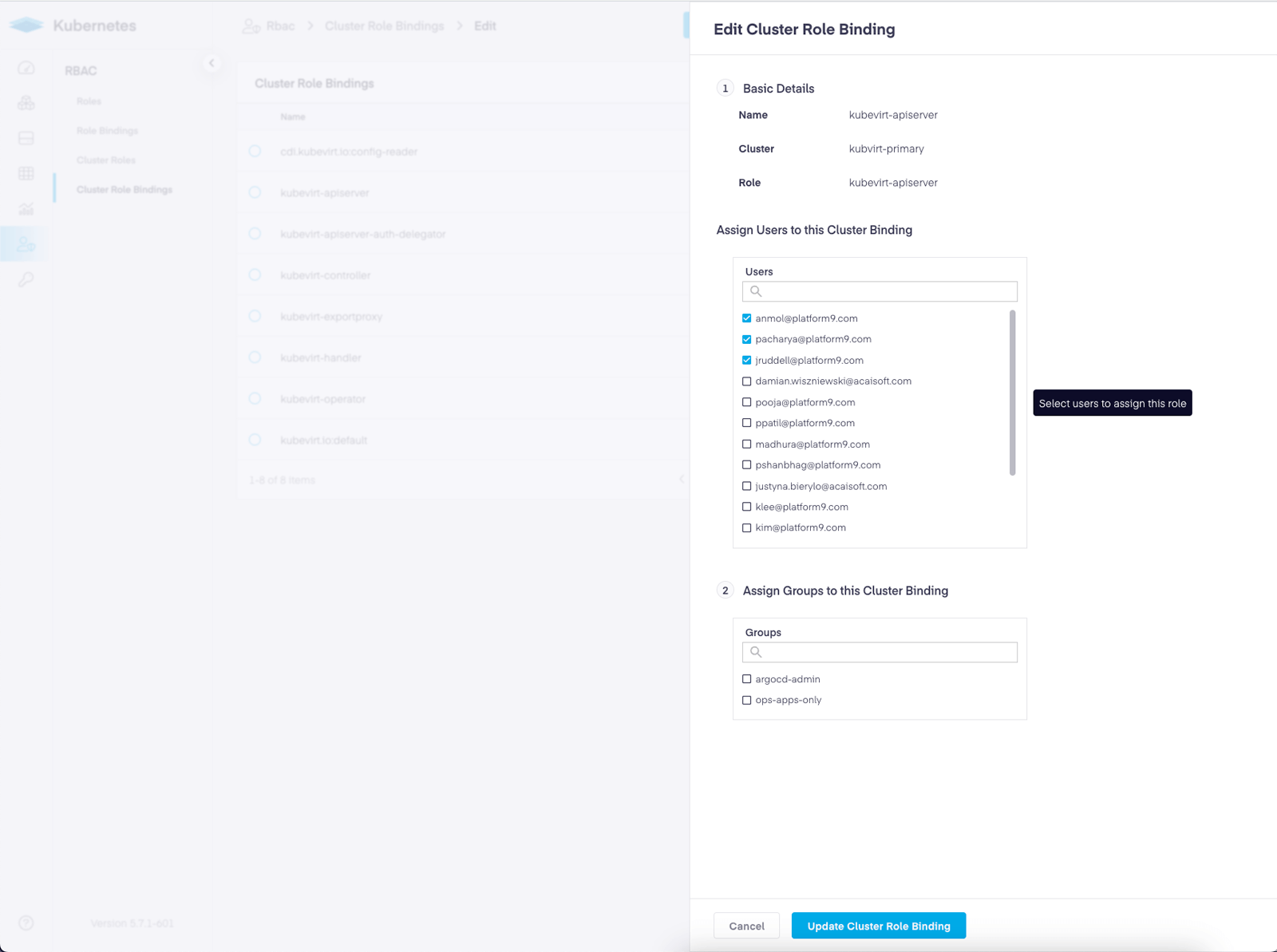

Select an existing policy to edit, or create a new one. The slide-out panel will allow you to choose Users or Groups. Add your desired groups and click ‘Save’.

That’s it. Now, any user who downloads the kubeconfig and connects to the cluster will have access based on the Policy and their enterprise group membership.
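For readers who want to see what sits underneath an edit like this, the sketch below builds the standard Kubernetes binding object that ties users or groups to a ClusterRole. This is plain Kubernetes RBAC, not a dump of what the Platform9 UI generates; the function name, the binding names, and the `dev-team` group are hypothetical, while `view` is one of Kubernetes’ built-in ClusterRoles.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def make_binding(name, cluster_role, users=(), groups=(), namespace=None):
    """Build a ClusterRoleBinding, or a RoleBinding when a namespace is given.

    Hypothetical helper: both kinds reference a ClusterRole, but a RoleBinding
    grants its permissions only inside the given namespace.
    """
    subjects = [
        {"kind": "User", "name": user, "apiGroup": RBAC_API_GROUP}
        for user in users
    ] + [
        {"kind": "Group", "name": group, "apiGroup": RBAC_API_GROUP}
        for group in groups
    ]
    if not subjects:
        raise ValueError("a binding needs at least one user or group")

    kind = "ClusterRoleBinding"
    metadata = {"name": name}
    if namespace is not None:
        kind = "RoleBinding"
        metadata["namespace"] = namespace

    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": kind,
        "metadata": metadata,
        "subjects": subjects,
        "roleRef": {
            "apiGroup": RBAC_API_GROUP,
            "kind": "ClusterRole",
            "name": cluster_role,
        },
    }


if __name__ == "__main__":
    # Read-only access across the cluster for a federated group.
    print(json.dumps(make_binding("dev-team-view", "view", groups=["dev-team"]), indent=2))
    # The same role, limited to one namespace.
    print(json.dumps(
        make_binding("dev-team-view", "view", groups=["dev-team"], namespace="staging"),
        indent=2))
```

The group name in the subject has to match the group name your identity provider asserts, character for character; a mismatch fails silently as “no access” rather than as an error.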

How is this achieved?

Platform9 uses 100% Kubernetes native and open-source configurations. First, your Platform9 instance is configured using SAML. This allows your enterprise’s IDP group membership to be federated.

Then, for each cluster that is built, whether EKS, AWS, Azure, or BareOS, the Kubernetes API server is configured to communicate via webhook with Platform9. Each user’s individual session is then checked against the SAML Group and User assertion and, once the user is authenticated, Kubernetes’ native RBAC policies are evaluated. Our goal is to maintain a native, unchanged authentication and authorization workflow that is simple to use and prevents lock-in.
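As a rough illustration of the webhook step, the sketch below follows the generic Kubernetes webhook token authentication contract: the API server sends a TokenReview containing the bearer token, and the webhook answers with whether the token is valid plus the username and groups to use for RBAC. This is not Platform9’s actual implementation; the `review_token` function and the in-memory `sessions` table standing in for the identity lookup are assumptions made for the example.

```python
def review_token(request, sessions):
    """Answer a TokenReview request from the Kubernetes API server.

    `sessions` is a hypothetical lookup table mapping a bearer token to the
    identity asserted for that session (username plus federated groups).
    """
    token = request.get("spec", {}).get("token", "")
    identity = sessions.get(token)

    if identity is None:
        status = {"authenticated": False}
    else:
        status = {
            "authenticated": True,
            "user": {
                "username": identity["username"],
                # These group names are what RBAC bindings are matched against.
                "groups": list(identity["groups"]),
            },
        }

    # The response echoes the API version the API server used in its request.
    return {
        "apiVersion": request["apiVersion"],
        "kind": "TokenReview",
        "status": status,
    }


if __name__ == "__main__":
    sessions = {
        "example-token": {"username": "alice@example.com", "groups": ["dev-team"]},
    }
    request = {
        "apiVersion": "authentication.k8s.io/v1",
        "kind": "TokenReview",
        "spec": {"token": "example-token"},
    }
    print(review_token(request, sessions))
```

Because the webhook only answers “who is this?”, authorization stays with the cluster: the returned username and groups are evaluated against the cluster’s own RoleBindings and ClusterRoleBindings, which is what keeps the workflow native.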

If you’re interested in learning more about how Platform9 supports and enhances cluster security while improving the developer experience, we’d love to hear from you. Contact us, or feel free to set up a free consultation with one of our experts.