Operators of 5G mobile broadband networks deal with large-scale, complex, dynamic, and highly distributed infrastructure requirements. They have to deploy and operate thousands of radio towers and networks while managing software applications across the access layer, aggregate layer, and core data centers. Furthermore, they have to satisfy stringent specifications for latency and network performance of their applications and infrastructure. And finally, operators need the flexibility to dynamically relocate services to optimize network performance, improve latency, and reduce costs of operations.

As a result, 5G architectures need to be services-based, with hundreds or thousands of network services in the form of VNFs (Virtual Network Functions) or CNFs (Containerized Network Functions) that are deployed in geographically distributed remote environments. Kubernetes addresses part of this challenge because it can manage CNFs. However, it has several limitations when it comes to managing 5G services across distributed locations with stringent latency and performance requirements. Let us look at the top five challenges and what needs to be done to optimize Kubernetes for 5G deployments.

Challenge #1: Inefficient and expensive siloed management of VNFs, CNFs, and 5G sites

It took the better part of two decades for telcos to transition from physical, big-iron network deployments to Virtual Network Functions (VNFs). This improved operational flexibility and dramatically accelerated deployment and management for the current 3G/4G environments.

But 5G rollouts are an order of magnitude larger in scale because they need more towers, and they need much higher levels of flexibility to deliver dynamic network provisioning and management. For example, providing on-demand bandwidth and variable pricing requires the agility and speed of Containerized Network Functions (CNFs).

By 2024, 5G is expected to handle 25 percent of all mobile traffic, which will, in turn, drive faster adoption and deployment of CNFs. However, a vast majority of current networks will continue to rely on VNFs. The fact is that VNFs and CNFs will have to coexist. This means telco operators need to maintain two siloed management stacks to run both 5G and older networks, adding to the operational burden and cost. Multiply this by the number of sites that need to be managed, and you end up with control plane proliferation and the inefficiency of siloed management.

One elegant solution is to run both VNFs and CNFs using Kubernetes as the infrastructure control fabric. Kubernetes can then function as the VIM (Virtualized Infrastructure Manager) layer in the MANO (Management and Orchestration) stack. Using KubeVirt, an open-source project that enables VMs to be managed by Kubernetes alongside containers, operators can standardize on the Kubernetes VIM layer and eliminate the operational silos. This also eliminates the need to port all of the applications to containers or to manage two entirely separate stacks. You can now get the best of both worlds.
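To make the "one control fabric" idea concrete, here is a minimal sketch that builds two manifests side by side: a KubeVirt `VirtualMachine` for a VM-based VNF and a regular `Deployment` for a CNF. The function names, the `nf-type` label, the workload names, and the `registry.example.com` image paths are assumptions made for illustration only; the resource kinds and field layout follow the public KubeVirt and Kubernetes APIs as we understand them, and a real VNF would need more settings (networks, storage, sizing).

```python
import json


def vnf_virtual_machine(name, disk_image, cores=2, memory="4Gi"):
    """Build a KubeVirt VirtualMachine manifest for a VM-based VNF (illustrative)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "labels": {"nf-type": "vnf"}},
        "spec": {
            "runStrategy": "Always",  # keep the VM running, restart it if it stops
            "template": {
                "metadata": {"labels": {"nf-type": "vnf"}},
                "spec": {
                    "domain": {
                        "cpu": {"cores": cores},
                        "resources": {"requests": {"memory": memory}},
                        "devices": {
                            "disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}]
                        },
                    },
                    # containerDisk pulls the VM disk image from a container registry
                    "volumes": [
                        {"name": "rootdisk", "containerDisk": {"image": disk_image}}
                    ],
                },
            },
        },
    }


def cnf_deployment(name, image, replicas=2):
    """Build a standard Kubernetes Deployment manifest for a CNF (illustrative)."""
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"nf-type": "cnf"}},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name, "nf-type": "cnf"}},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical workloads: both manifests go to the same Kubernetes API server.
manifests = [
    vnf_virtual_machine("legacy-epc", "registry.example.com/vnf/epc-disk:1.0"),
    cnf_deployment("upf", "registry.example.com/cnf/upf:1.0"),
]
for m in manifests:
    print(m["kind"], m["metadata"]["name"])
print(json.dumps(manifests[0]["spec"]["template"]["spec"]["volumes"]))
```

Both objects can be submitted to the same cluster with the same tooling, which is the point of using Kubernetes as the VIM layer.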

For a detailed discussion on KubeVirt, read this Platform9 white paper on running VMs on Kubernetes.

Challenge #2: Bare Metal Orchestration is Manual and Error-prone

A large-scale 5G network roll-out involves thousands of access layer sites, hundreds of aggregate sites, and possibly dozens of core data centers. All of these sites have bare-metal servers that need to be provisioned, configured, and managed throughout their lifecycle.

End-to-end bare-metal orchestration requires a number of steps, most of which are manual:

- Provisioning bare-metal servers that just expose an IPMI interface over a network

- Deploying OS images and running applications on them

- Updating those applications based on network demands

- Upgrading software on the servers to keep them up-to-date with security patches and bug fixes

- Guaranteeing the availability of those servers in case there is an outage

- Re-provisioning servers when there are performance glitches or other issues

The sheer number of manual steps involved, the complexity of the prerequisite knowledge required, the risks associated with server downtime, and the large number of 5G sites make it difficult to manage and operate bare metal servers efficiently. Flexibility and agility are impacted when bare metal servers need to be manually provisioned, upgraded, and scaled. 5G telco operators running large environments with combinations of bare metal, VNFs, and CNFs need a simpler, self-service, automated, remote operating model.
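One way to reason about automating the lifecycle steps listed above is to model each server as a small state machine, so that tooling can refuse invalid operations instead of relying on an operator's memory. The sketch below is purely illustrative: the state names, the allowed transitions, and the `Server` class are assumptions, not part of any particular product or of IPMI itself.

```python
from enum import Enum


class State(Enum):
    DISCOVERED = "discovered"    # reachable over its management (IPMI) interface
    PROVISIONED = "provisioned"  # firmware and boot settings applied
    OS_DEPLOYED = "os_deployed"  # OS image written to disk
    IN_SERVICE = "in_service"    # running applications
    UPGRADING = "upgrading"      # patches or new software being applied
    FAILED = "failed"            # outage or performance problem detected


# Hypothetical transition table: which next states are legal from each state.
TRANSITIONS = {
    State.DISCOVERED: {State.PROVISIONED},
    State.PROVISIONED: {State.OS_DEPLOYED, State.FAILED},
    State.OS_DEPLOYED: {State.IN_SERVICE, State.FAILED},
    State.IN_SERVICE: {State.UPGRADING, State.FAILED},
    State.UPGRADING: {State.IN_SERVICE, State.FAILED},
    State.FAILED: {State.PROVISIONED},  # recovery means re-provisioning
}


class Server:
    def __init__(self, name):
        self.name = name
        self.state = State.DISCOVERED
        self.history = [State.DISCOVERED]

    def advance(self, new_state):
        """Move to new_state, or raise ValueError if the step is not allowed."""
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(
                f"{self.name}: cannot go from {self.state.value} to {new_state.value}"
            )
        self.state = new_state
        self.history.append(new_state)


server = Server("access-site-0001-node-1")
for step in (State.PROVISIONED, State.OS_DEPLOYED, State.IN_SERVICE,
             State.FAILED, State.PROVISIONED):
    server.advance(step)
print([s.value for s in server.history])
```

With thousands of sites, the same table can drive every server, which is where automation starts to pay off over manual runbooks.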

Platform9 brings cloud agility to your bare metal infrastructure, providing a centralized pane of management for all of your distributed 5G locations. Using a unique SaaS delivery model, Platform9 automates and offloads all of your manual bare metal life-cycle management tasks. This enables 5G operators and developers to unleash the full performance of the physical hosts, no matter where they are located in a 5G network, as an elastic and flexible bare metal cloud, where they can rapidly deploy and redeploy CNFs or VNFs at a moment’s notice.

For more details, check out Platform9 Bare Metal product details.

Challenge #3: High-Performance Networking Options are difficult to configure and operate

The number of endpoints (mobile devices, IoT sensors, nodes, etc.) that 5G will interconnect is expected to exceed hundreds of billions in the next few years, and the current IPv4 standard simply does not have enough IP addresses to go around. IPv6 fundamentally solves this problem and is a must-have for 5G deployments. Infrastructure stacks driven by Kubernetes must handle IPv6 from the ground up and must support API-driven, automated IP address management (IPAM) out of the box.
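As a rough illustration of what API-driven IPAM can look like, the sketch below uses Python's standard `ipaddress` module to hand out one IPv6 /64 per site from a larger block. The `SiteIpam` class and the site names are hypothetical, and the prefix comes from the `2001:db8::/32` range reserved for documentation; a real IPAM service would also persist allocations and handle release and reuse.

```python
import ipaddress


class SiteIpam:
    """Toy IPAM: allocate one fixed-size IPv6 subnet per site from a supernet."""

    def __init__(self, supernet, site_prefix_len=64):
        self.supernet = ipaddress.ip_network(supernet)
        # subnets() yields child networks lazily, in ascending order
        self._free = self.supernet.subnets(new_prefix=site_prefix_len)
        self._by_site = {}

    def allocate(self, site_id):
        """Return the subnet for site_id, allocating the next free one if needed."""
        if site_id not in self._by_site:
            self._by_site[site_id] = next(self._free)
        return self._by_site[site_id]


# The IPv4 address space is 32 bits wide; IPv6 is 128 bits wide.
print("IPv4 addresses in total:", 2 ** 32)
print("/64 site subnets in a single /48:", 2 ** (64 - 48))

ipam = SiteIpam("2001:db8:abcd::/48")
print(ipam.allocate("tower-0001"))
print(ipam.allocate("tower-0002"))
print(ipam.allocate("tower-0001"))  # repeat calls return the same subnet
```

Because allocation is a function call rather than a spreadsheet entry, it can be invoked automatically whenever a new site is brought up.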

Another major requirement in 5G deployments is high-performance networking. Performance-driven VNFs and CNFs require near line-rate packet processing. Hypervisors and Docker bridge networks introduce overhead and performance bottlenecks. Depending on the use case, one or more of these options would be needed: SR-IOV, DPDK, PCI passthrough, MACVLAN, IPVLAN, etc.

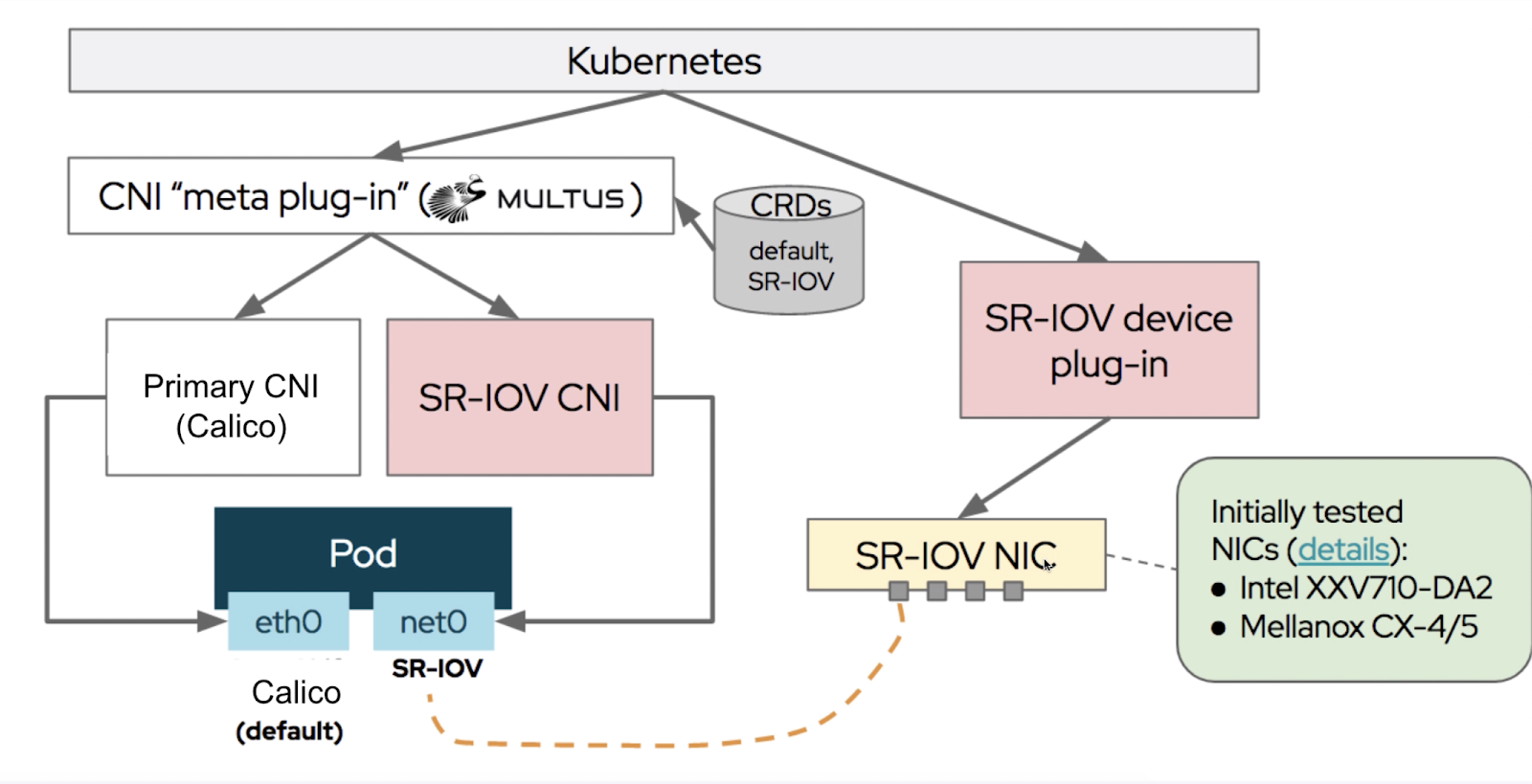

However, configuring a host and its physical network, “plumbing” all the necessary components, and configuring them within a Kubernetes cluster for a particular use case and network requirement can be very time-consuming and complicated. The diagram above illustrates the complexity of this network configuration: configuring two CNI plug-ins using Multus, deploying an SR-IOV plug-in, creating SR-IOV virtual functions, configuring the physical NICs, and connecting them to the appropriate eth interfaces on the pod.
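To give a feel for just the Kubernetes side of this plumbing, the sketch below builds a Multus `NetworkAttachmentDefinition` that hands a pod an SR-IOV virtual function as a second interface, plus a pod that requests it. The network name, the `intel.com/sriov_netdevice` resource name, the VLAN, the subnet, and the image are assumptions for illustration; the actual resource name depends on how the SR-IOV device plug-in is configured on each host, and the host-level steps (creating virtual functions, binding drivers) are not shown.

```python
import json


def sriov_network(name, resource_name, vlan, subnet):
    """Build a Multus NetworkAttachmentDefinition backed by the SR-IOV CNI."""
    cni_config = {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "sriov",
        "vlan": vlan,
        "ipam": {"type": "host-local", "subnet": subnet},
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {
            "name": name,
            # ties this network to the VF pool advertised by the device plug-in
            "annotations": {"k8s.v1.cni.cncf.io/resourceName": resource_name},
        },
        # Multus expects the CNI configuration as a JSON string
        "spec": {"config": json.dumps(cni_config)},
    }


def cnf_pod(name, image, network, resource_name):
    """Build a pod that keeps its default interface and adds one SR-IOV interface."""
    vf_request = {resource_name: "1"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            "annotations": {"k8s.v1.cni.cncf.io/networks": network},
        },
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "resources": {"requests": vf_request, "limits": vf_request},
            }]
        },
    }


# All names and values below are hypothetical.
resource = "intel.com/sriov_netdevice"
nad = sriov_network("sriov-data", resource, vlan=100, subnet="192.0.2.0/24")
pod = cnf_pod("upf-0", "registry.example.com/cnf/upf:1.0", "sriov-data", resource)
print(json.dumps(nad, indent=2))
print(json.dumps(pod["metadata"], indent=2))
```

Even in this reduced form, three pieces have to agree with each other (the resource name, the network name, and the pod annotation), which hints at why doing this by hand across many hosts is error-prone.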

The solution is to provide dynamic and automated ways to remotely configure these types of advanced networking settings based on use case and business requirements.

Watch this technical deep-dive video on how Platform9 simplifies host configuration and automates creation of SR-IOV virtual functions that are seamlessly integrated with Kubernetes.

Challenge #4: Standard Resource Scheduling Is Not Suitable for Latency Sensitive CNFs

Latency-critical CNFs need guaranteed access to CPU, memory, and network resources. Pod scheduling algorithms in Kubernetes are designed for efficient CPU resource utilization and multitasking. However, the negative consequence of this is non-deterministic performance, which makes standard scheduling unsuitable for latency-sensitive CNFs. A solution to this problem is to “isolate” or “pin” a CPU core or a set of CPU cores so that the scheduler can give pods exclusive access to those CPU resources, resulting in more deterministic behavior and the ability to meet latency requirements.
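In upstream Kubernetes, the kubelet's static CPU Manager policy grants exclusive cores only to containers in Guaranteed QoS pods that request a whole number of CPUs. The sketch below checks a container spec against that rule. It is a simplification: the helper names are hypothetical, memory quantities are compared as plain strings, and a real pod is Guaranteed only if every one of its containers passes the request-equals-limit check.

```python
def cpu_millicores(quantity):
    """Convert a Kubernetes CPU quantity such as '2', '0.5' or '1500m' to millicores."""
    q = str(quantity)
    if q.endswith("m"):
        return int(q[:-1])
    return round(float(q) * 1000)


def gets_exclusive_cpus(container):
    """True if the container would qualify for pinned cores under the static policy."""
    resources = container.get("resources", {})
    limits = resources.get("limits", {})
    requests = resources.get("requests", {})
    if "cpu" not in limits or "memory" not in limits:
        return False  # not Guaranteed QoS without both limits
    # Requests default to limits when omitted; otherwise they must match.
    if cpu_millicores(requests.get("cpu", limits["cpu"])) != cpu_millicores(limits["cpu"]):
        return False
    if requests.get("memory", limits["memory"]) != limits["memory"]:
        return False
    milli = cpu_millicores(limits["cpu"])
    return milli >= 1000 and milli % 1000 == 0  # whole cores only


# Hypothetical containers for a latency-sensitive CNF and a best-effort sidecar.
pinned = {
    "name": "upf-dataplane",
    "resources": {
        "requests": {"cpu": "4", "memory": "8Gi"},
        "limits": {"cpu": "4", "memory": "8Gi"},
    },
}
shared = {
    "name": "metrics-sidecar",
    "resources": {
        "requests": {"cpu": "250m", "memory": "128Mi"},
        "limits": {"cpu": "500m", "memory": "128Mi"},
    },
}
print(pinned["name"], gets_exclusive_cpus(pinned))
print(shared["name"], gets_exclusive_cpus(shared))
```

A check like this can run in a CI pipeline or an admission step so that a latency-critical CNF is never deployed with a spec that silently falls back to shared cores.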

CPU pinning, NUMA-aware scheduling, HugePages, Topology Manager, CPU Manager, and many other open-source solutions are starting to emerge. However, many of these are still early in their maturity and are not entirely ready for large-scale production use.

Platform9 provides these features as part of its SaaS delivery model, enabling many of these open-source, advanced resource management capabilities in 5G implementations. Telco providers and operators can offload the overhead of deployment, support, upgrades, monitoring, and general production-readiness to Platform9. The result will be a cloud-native, self-service, declarative model of operations that will be consistent across CNFs, VNFs, and the variety of performance and latency-sensitive requirements.

Challenge #5: Lack of consistent central management of 5G sites

It’s quite a challenge to deploy, manage, and upgrade hundreds or thousands of distributed 5G sites that need to be managed with low or no touch, usually with no staff and little access. Given the large distributed scale, traditional data center management processes won’t apply. The edge deployments should support heterogeneity of location, remote management, and autonomy at scale; enable developers; and integrate well with public cloud and/or core data centers. While Kubernetes is great for orchestrating microservices in a cluster, managing thousands of such clusters requires another layer of management and DevOps-style, API-driven automation.

This management plane is where DevOps engineers manage the entire operation. There, they store container images and inventory caches of remote locations. Synchronization ensures eventual consistency across regional and edge locations automatically, regardless of the number of locations.
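The synchronization idea can be sketched as a simple reconcile loop: compare the desired inventory held centrally with what each site actually has, then push what is missing and prune what is stale. Everything in this sketch (the function names, the image tags, the site names) is hypothetical, and a real system would also have to cope with unreachable sites and partial transfers.

```python
def plan_sync(desired, actual):
    """Compute what a site must receive and remove to match the desired inventory."""
    return {"push": sorted(desired - actual), "prune": sorted(actual - desired)}


def reconcile(desired, sites):
    """Apply one synchronization pass to every site and return the plans used."""
    plans = {}
    for site, inventory in sites.items():
        plan = plan_sync(desired, inventory)
        inventory.update(plan["push"])             # simulate pushing images
        inventory.difference_update(plan["prune"])  # simulate removing stale ones
        plans[site] = plan
    return plans


# Hypothetical central inventory and two remote sites that have drifted from it.
desired_images = {"upf:1.2", "smf:2.0", "amf:1.7"}
site_inventories = {
    "region-east": {"upf:1.1", "smf:2.0"},
    "tower-0042": set(),
}

for site, plan in reconcile(desired_images, site_inventories).items():
    print(site, plan)

# A second pass finds nothing left to do: the sites have converged.
print(reconcile(desired_images, site_inventories))
```

Because each pass only acts on the difference, the loop can be repeated on a schedule, and a site that was offline simply catches up the next time it is reachable.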

Yet another need is fleet management: grouping sites so that similar configurations can be managed centrally through a single policy known as a profile. This relieves operators of managing each data center individually. Instead, the staff just defines a small number of profiles and indicates exceptions to policies where needed for a particular site. Each 5G site, whether a radio tower, an access-layer site, or a core data center, runs its own worker nodes and containers.
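A minimal sketch of the profile-plus-exceptions model: each site names a profile, and any per-site overrides are merged on top of it to produce the effective configuration. The profile contents, site names, and field names are all invented for illustration and do not reflect any specific product's schema.

```python
import copy


def deep_merge(base, override):
    """Return a copy of base with override applied, merging nested dicts."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical profile shared by every access-layer site.
PROFILES = {
    "access-site": {
        "kubernetes_version": "1.27",
        "workers": 3,
        "networking": {"ipv6": True, "sriov": True},
    }
}

# Hypothetical fleet: most sites use the profile as-is, one declares exceptions.
SITES = {
    "tower-0001": {"profile": "access-site"},
    "tower-0002": {
        "profile": "access-site",
        "overrides": {"workers": 5, "networking": {"sriov": False}},
    },
}


def effective_config(site_id):
    """Resolve a site's configuration from its profile and its exceptions."""
    site = SITES[site_id]
    return deep_merge(PROFILES[site["profile"]], site.get("overrides", {}))


for site_id in SITES:
    print(site_id, effective_config(site_id))
```

Changing the profile updates every site that references it, while the overrides keep site-specific exceptions explicit and reviewable.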

Additionally, troubleshooting issues and keeping all the services up to date is an ongoing operational nightmare, especially when there are hundreds of these services deployed at each site.

Platform9 offers SaaS-based centralized management with the following capabilities:

- Single sign-on for distributed infrastructure locations

- Cluster profiles to ensure consistency of deployment across a large number of clusters and customers

- Centralized management of tooling, APIs, and app catalog to simplify application management at scale

- Cluster monitoring and fully-automated Day-2 operations such as upgrades, security patching, and troubleshooting

Watch this video walkthrough of Platform9 automation and ease of use across all layers of the cloud-native infrastructure stack: bare-metal, Kubernetes, advanced networking, central management, and application deployment.

For more information, check out our Kubernetes and 5G Telco page.