I’m delighted to announce Arlon, a new open source project that launched at DeveloperWeek Cloud 2022 last week in Austin. Arlon is a tool that helps manage the lifecycle of Kubernetes clusters, configurations, and applications in a more structured and scalable way. What does that mean? We’ll get right into it!

Experiencing DeveloperWeek Cloud 2022

But first, let me share my experience with the DeveloperWeek Cloud 2022 conference. It’s hard to believe, but this was my first in-person conference since the beginning of the pandemic. It was delightful to walk, talk, and mingle with real, breathing human beings sharing similar professional interests and passions, once again. This is one of the first developer-focused conferences I’ve attended, and I was pleasantly surprised by the breadth and depth of the talks and topics. Cloud native and container management were definitely hot topics, but not the only ones. I had the chance to attend several insightful presentations in areas I’ve been less exposed to, such as secure coding, authorization, and threat mitigation. The conference was also innovative in its two-week structure, with the first being in-person, and the second fully virtual. I believe DeveloperWeek Cloud will continue to grow, and I look forward to next year’s incarnation.

Arlon: An Open Source Project

So, back to Arlon. This tool is designed for members of Platform Engineering, DevOps, or IT teams that manage multiple Kubernetes clusters to be consumed by internal developers, in dev/test and/or production environments. It is most useful once the organization has reached a certain level of scale (dozens of clusters, hundreds of applications, developers, and customers). To understand how Arlon contributes to the field, it helps to understand recent trends and innovations in the domain of container orchestration, cluster lifecycle, and distributed application deployment.

Of course, it all starts with Kubernetes, the de-facto “operating system” for modeling and hosting distributed workloads. One of Kubernetes’ main innovations is its set of declarative APIs for expressing applications and configurations. It’s the “fire and forget” principle: describe the final state you desire using resource manifests, and controllers running within the system will automatically reconcile cloud resources to accomplish your desired state.
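To make the reconciliation idea concrete, here is a minimal toy sketch in Python. It is not Kubernetes code: the `Deployment` class and the `reconcile` function are hypothetical names invented for this illustration, and real controllers work against the Kubernetes API rather than in-memory dictionaries.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    """Toy stand-in for a workload: a name and a replica count."""
    name: str
    replicas: int


def reconcile(desired: dict, observed: dict) -> list:
    """Move `observed` toward `desired` and return the actions taken."""
    actions = []
    for name, want in desired.items():
        have = observed.get(name)
        if have is None:
            observed[name] = Deployment(name, want.replicas)
            actions.append(f"create {name} replicas={want.replicas}")
        elif have.replicas != want.replicas:
            have.replicas = want.replicas
            actions.append(f"scale {name} replicas={want.replicas}")
    # Anything running that is no longer declared gets removed.
    for name in list(observed):
        if name not in desired:
            del observed[name]
            actions.append(f"delete {name}")
    return actions


# The "manifest": what we want to exist.
desired = {"web": Deployment("web", 3)}
# What is currently running.
observed = {"web": Deployment("web", 1), "old-job": Deployment("old-job", 1)}

first_pass = reconcile(desired, observed)
second_pass = reconcile(desired, observed)  # already converged, nothing to do
print(first_pass)
print(second_pass)
```

The second pass returns no actions, which is the point: you only declare the end state, and the loop works out whether anything still needs to change.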

But thanks to its extensibility via constructs like custom resources and controllers, Kubernetes is also becoming a platform of choice for hosting declarative infrastructure APIs that let you provision infrastructure directly, such as compute, networking, storage, and Kubernetes clusters themselves.

Two prominent extension projects come to mind:

- Cluster API lets you create, upgrade, and tear down Kubernetes clusters.

- Crossplane exposes thousands of public cloud APIs as a set of consistent Kubernetes-style resources.

Coinciding with the rise of declarative APIs is the GitOps methodology for managing and deploying resource manifests. By decoupling manifest storage from manifest deployment, GitOps tools such as Flux and ArgoCD give platform and DevOps teams a mechanism to enforce a more predictable and governable deployment process.

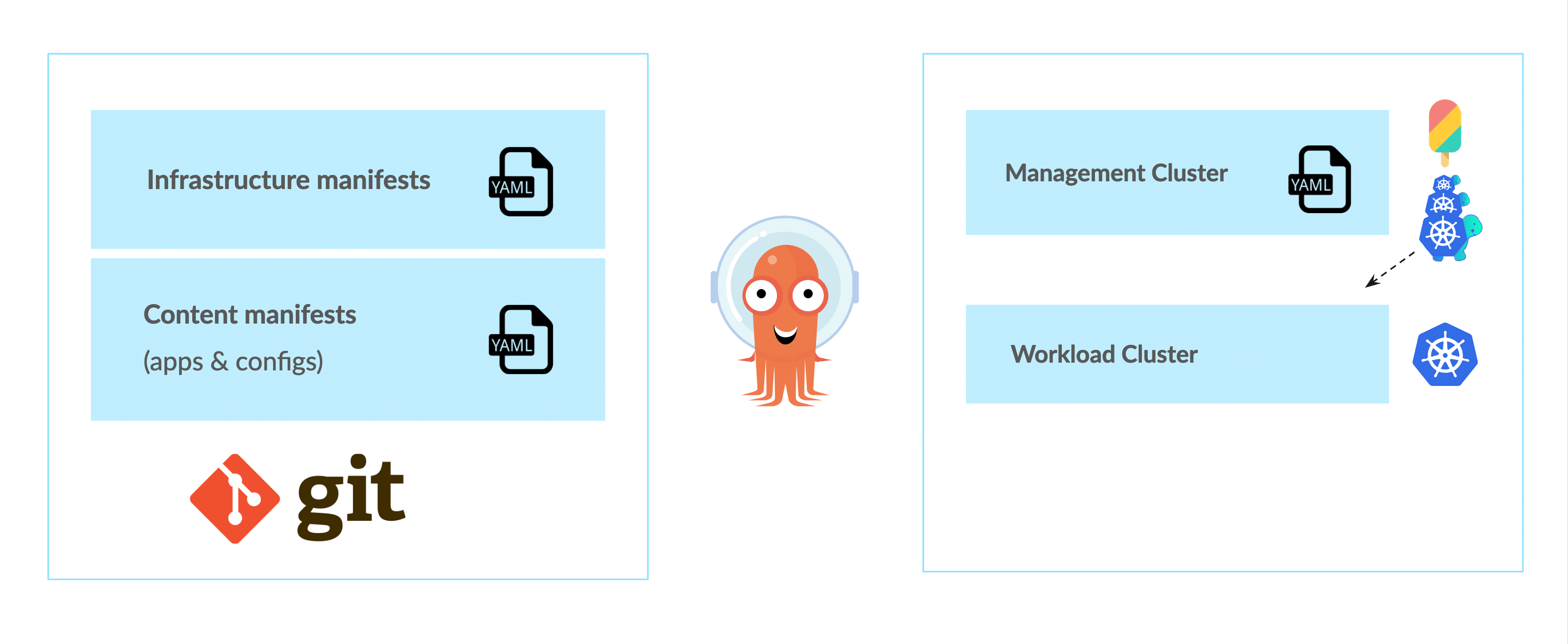

What happens when you combine declarative workload APIs, declarative infrastructure APIs, and GitOps? The answer is the potential for a powerful, unified architecture for managing both infrastructure (clusters + underlying resources) and content (applications and configurations that go inside of clusters). It uses a consistent configuration methodology (declarative manifests + GitOps) and toolset (Kubernetes with extensions + GitOps).

This diagram from my DeveloperWeek Cloud talk illustrates it. A dedicated management cluster hosts infrastructure API providers like ClusterAPI and/or Crossplane. A git repo hosts both infrastructure and content manifests. A GitOps tool such as ArgoCD applies manifests to target clusters. To create a new workload cluster and deploy workloads to it, an administrator first applies an infrastructure manifest to the management cluster using ArgoCD. In response, the ClusterAPI (or Crossplane) controller(s) provision a new workload cluster that complies with the specifications in the manifest.

Before the workload cluster can receive content manifests, we must first register it with ArgoCD so that it knows about the cluster as a potential target. Once this is done, applications and configurations can finally be deployed to the new cluster by applying the content manifests. In summary, this architecture promises to unify infrastructure and content deployment, thereby simplifying management and facilitating integration with technologies like CI/CD pipelines.

Challenges faced while implementing the architecture

- Disjoint deployment of infrastructure and content manifests, caused by the intermediate cluster registration step that usually must be done manually. It interrupts the overall flow, and violates the “fire and forget” principle, meaning that infrastructure and content manifests cannot be applied together in one shot.

- Scale problems when the numbers of clusters, configurations or applications exceed a certain threshold (e.g. hundreds). Imagine having to check in hundreds or thousands of manifests into git. What if many clusters are mostly identical? Do developers copy and paste manifests? What happens when an update to a cluster property or an application is necessary?

- Production-ready clusters: projects like Cluster API and Crossplane create bare-bones Kubernetes clusters, but in reality a cluster needs a minimal set of “middleware” components (often called add-ons) to make it useful and reliable. Examples include cluster autoscaler, logging stack, and monitoring stack. A team operating this architecture would typically need to research multiple open source projects solving those problems, then assemble, configure, test and deploy a large number of additional manifests. This is a daunting task.

Arlon is here to help address these challenges

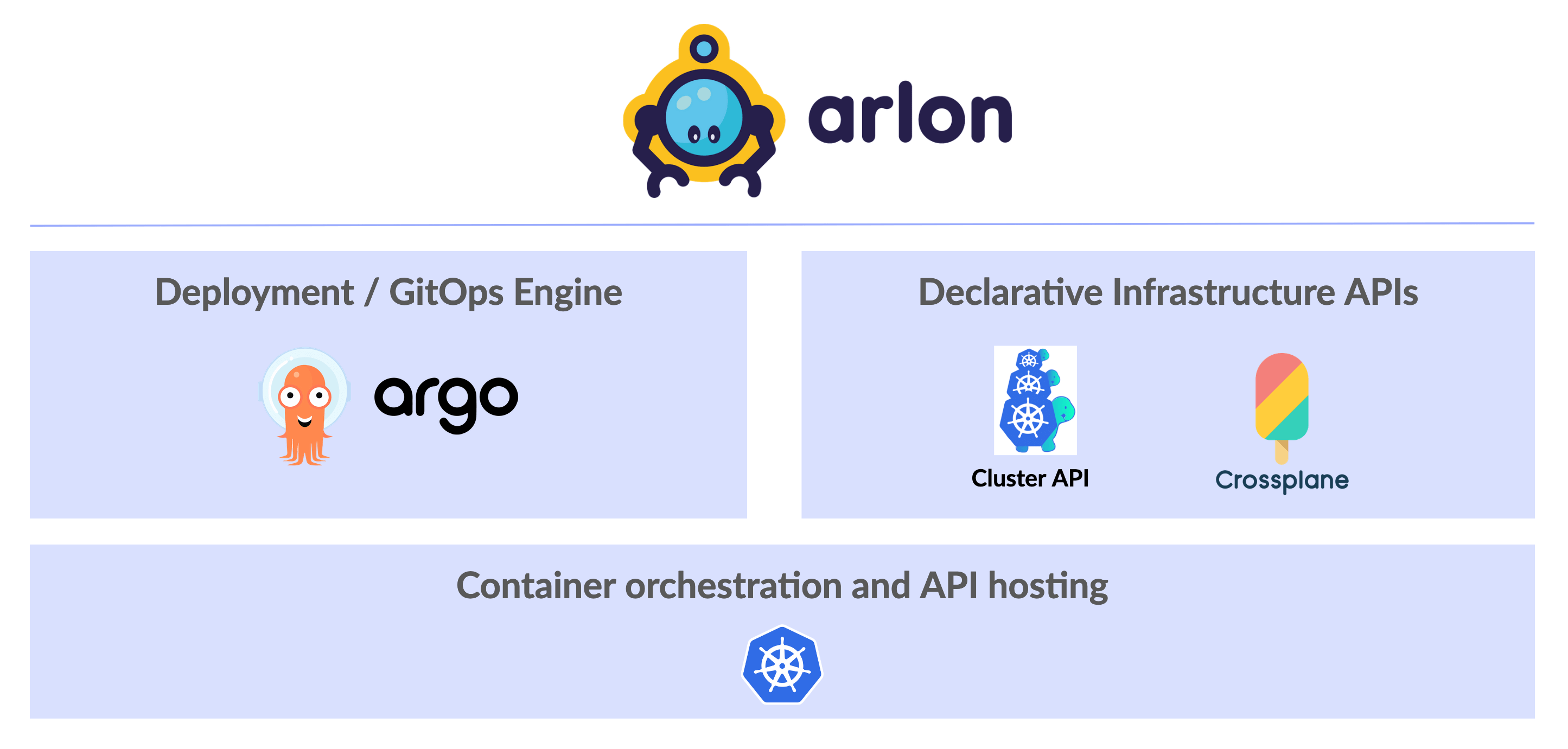

We created Arlon to help address those challenges, because we believe that the architecture described above is the right one going forward for cluster and application lifecycle management. Arlon is built on top of ArgoCD, Cluster API, Crossplane, and Kubernetes. We believe that those projects are best-in-class in their domains, and so we designed Arlon to rest on the shoulders of those giants.

Arlon provides noteworthy benefits

- Truly unifies infrastructure and content management by automating the end-to-end deployment of all types of manifests.

- Enables scale by:

  - Allowing you to organize manifests into concise, reusable and flexible groupings called BaseClusters (for infrastructure) and Profiles (for content).

  - Supporting structured, predictable change management via Linked Updates.

- Provides a library of “included but optional batteries” for making Kubernetes clusters more production ready.

To illustrate those benefits, let’s study this example of a typical invocation of the arlon CLI to create a new workload cluster to be named mycluster:

arlon cluster create mycluster --repo-url {repourl} --repo-path {repopath} --profile prof1 -oyaml

The --repo-url and --repo-path options specify the base cluster that defines the shape of the new cluster. By shape, I mean properties such as Kubernetes version, number of worker nodes, number of node groups, networking technology, etc. A base cluster is a ClusterAPI or Crossplane manifest that you (or someone in your team) define, test, certify and store in a git directory. The options tell arlon where to find that manifest in git. If you routinely deploy, configure (and tear down) hundreds of clusters, but they all fall into a handful of “shapes”, then the base cluster construct will significantly simplify your life.

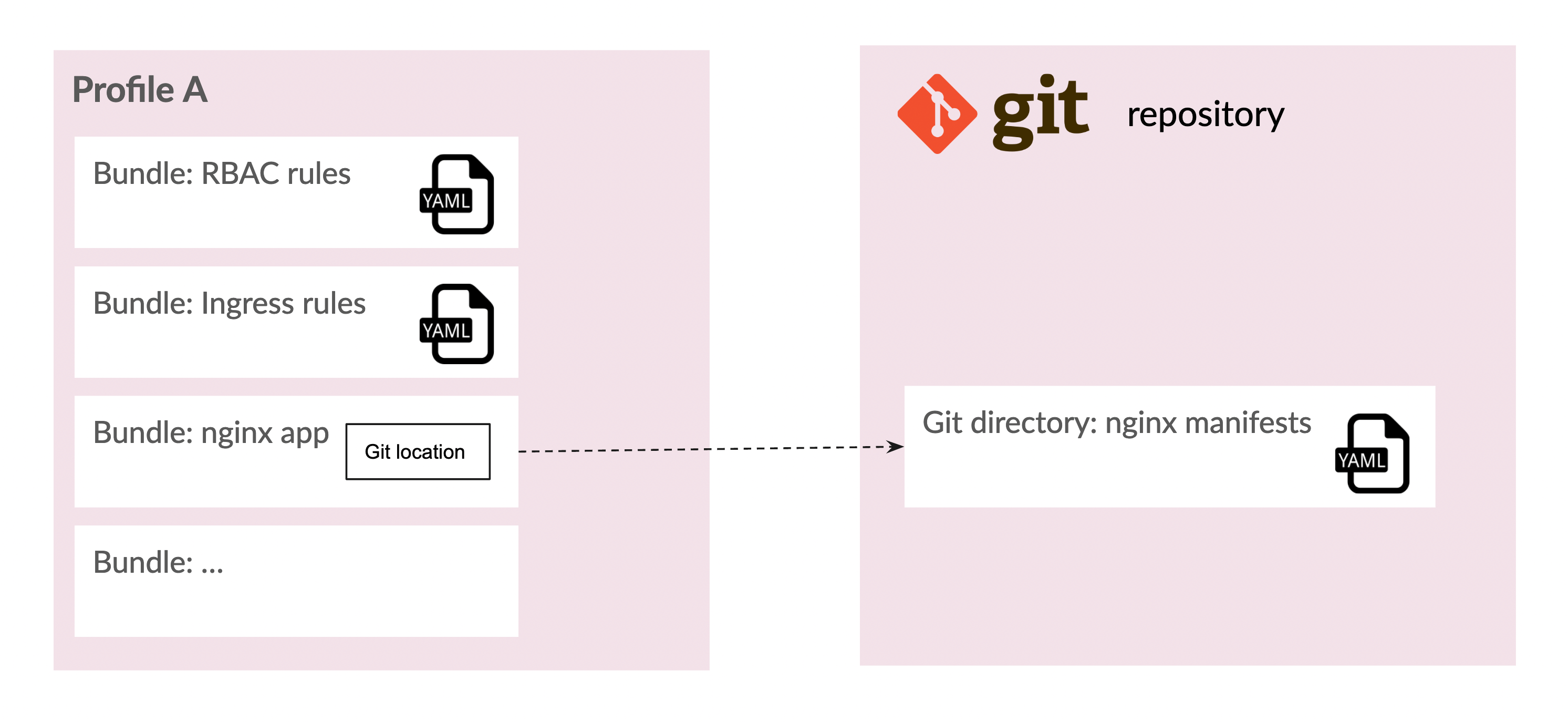

The profile construct, selected with the --profile option, leads to a similar simplification, but for content meant to be deployed inside of the new cluster. Profiles let you organize applications and configurations into reusable groupings that you can test and certify independently. A profile is a collection of bundles. A bundle is simply a unit of content that can either directly embed manifest YAML or contain a pointer to a manifest stored somewhere in git. The accompanying picture illustrates a profile with two bundles containing embedded YAML (RBAC rules and Ingress resources), and one containing a reference to a manifest stored in git for the nginx application.
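One way to picture the relationship between profiles and bundles is as a small data model. The Python sketch below is purely illustrative and is not Arlon’s actual schema: the class names, the `inline_yaml` and `git_path` fields, the "embedded"/"reference" labels, and the `apps/nginx` path are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Bundle:
    """A unit of content: either embedded YAML or a pointer into git."""
    name: str
    inline_yaml: Optional[str] = None  # manifest text embedded directly
    git_path: Optional[str] = None     # hypothetical path to a manifest in git

    def __post_init__(self) -> None:
        # A bundle carries exactly one of the two content forms.
        if (self.inline_yaml is None) == (self.git_path is None):
            raise ValueError(
                f"bundle {self.name!r} needs exactly one of inline YAML or a git path"
            )

    @property
    def kind(self) -> str:
        return "embedded" if self.inline_yaml is not None else "reference"


@dataclass
class Profile:
    """A named, reusable collection of bundles."""
    name: str
    bundles: List[Bundle] = field(default_factory=list)


RBAC_YAML = """\
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-reader
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get", "list"]
"""

# Mirrors the profile described above: two embedded bundles, one git reference.
prof1 = Profile(
    name="prof1",
    bundles=[
        Bundle("rbac-rules", inline_yaml=RBAC_YAML),
        Bundle("ingress", inline_yaml="# Ingress resources would go here\n"),
        Bundle("nginx", git_path="apps/nginx"),
    ],
)

for bundle in prof1.bundles:
    print(f"{bundle.name}: {bundle.kind}")
```

Because a profile is just a list of named bundles, the same bundle can in principle appear in several profiles, which is what makes the groupings reusable.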

The “arlon cluster create” command generates a large(-ish) manifest containing many YAML resources that together automate the deployment of the new cluster and its content. By default, this manifest is applied immediately to your management cluster. The -oyaml option lets you capture that output and save it instead, so you can apply it later. The bottom line is that this manifest is all you need to complete the overall deployment in one shot: it truly satisfies the “fire and forget” principle discussed earlier.

So how does Arlon automate the end-to-end deployment of infrastructure together with content?

The manifest that “arlon cluster create” generates is composed of many ArgoCD Application Resources (AARs). An AAR basically tells ArgoCD where in git to read manifests (the source), and what cluster to apply them to (the target). The AARs of the generated manifest fall into 3 groups:

- Infrastructure manifests, sourced from the Arlon “base cluster”. The target is the management cluster, so that the ClusterAPI or Crossplane controller(s) can detect and react to them.

- Content manifests, sourced from the Arlon profile. The target is the new workload cluster, which doesn’t exist yet.

- “Glue” manifests supplied by Arlon, including the ClusterRegistration resource.
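The first two groups can be pictured as ordinary ArgoCD Application resources that differ mainly in their destination. The Python sketch below builds two such resources as dictionaries. The field names follow the ArgoCD Application spec, but everything else is an assumption for illustration: the repository URL, the paths, the workload cluster endpoint, and the idea that ArgoCD runs inside the management cluster (so that `https://kubernetes.default.svc` refers to it). The post does not show what Arlon really generates, and the ClusterRegistration resource is left out because its schema is not described here.

```python
import json


def argocd_application(name, repo_url, repo_path, dest_server, revision="HEAD"):
    """Build a minimal ArgoCD Application resource as a plain dictionary."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            # The source: where in git to read manifests.
            "source": {
                "repoURL": repo_url,
                "path": repo_path,
                "targetRevision": revision,
            },
            # The target: which cluster to apply them to.
            "destination": {"server": dest_server},
        },
    }


REPO = "https://github.com/example/platform-config.git"  # hypothetical repo

# Group 1: infrastructure manifests, applied to the management cluster.
infra_app = argocd_application(
    name="mycluster-infra",
    repo_url=REPO,
    repo_path="baseclusters/small",            # hypothetical base cluster path
    dest_server="https://kubernetes.default.svc",
)

# Group 2: content manifests, applied to the not-yet-existing workload cluster.
content_app = argocd_application(
    name="mycluster-nginx",
    repo_url=REPO,
    repo_path="apps/nginx",                    # hypothetical bundle path
    dest_server="https://mycluster.example.com:6443",  # hypothetical endpoint
)

print(json.dumps([infra_app, content_app], indent=2))
```

The content application is perfectly valid as a resource; it simply cannot sync until ArgoCD knows about its destination, which is the gap the third group exists to close.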

The second group of manifests cannot be applied until ArgoCD knows about the new cluster: they will initially fail to apply, but ArgoCD will keep retrying.

How does the new cluster get registered? This is where the ClusterRegistration from the third group comes into play. It instructs the Arlon controller running in the management cluster (and inserted during Arlon installation) to wait for the workload cluster to become available, and then automatically register it with ArgoCD, thereby unblocking the manifests of the second group. This is how Arlon automates the end-to-end unification of infrastructure and content deployment behind the scenes.
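The interplay between ArgoCD’s retries and the registration step can be simulated in a few lines of Python. This is a toy model under stated assumptions, not Arlon’s controller code: `FakeArgoCD`, `registration_controller_step`, and the tick-based timeline are all invented for this sketch.

```python
class FakeArgoCD:
    """Toy model: ArgoCD can only sync to clusters it has been told about."""

    def __init__(self):
        self.registered = {"management"}

    def try_sync(self, target: str) -> bool:
        return target in self.registered


def registration_controller_step(argocd, cluster_ready: bool, cluster_name: str) -> bool:
    """Register the cluster once it is available; return True if it did so."""
    if cluster_ready and cluster_name not in argocd.registered:
        argocd.registered.add(cluster_name)
        return True
    return False


def simulate(ready_at_tick: int, max_ticks: int = 10) -> list:
    """Run the retry loop until content syncs, returning a timeline of events."""
    argocd = FakeArgoCD()
    timeline = []
    for tick in range(max_ticks):
        # The infrastructure provider finishes the cluster at `ready_at_tick`.
        cluster_ready = tick >= ready_at_tick
        if registration_controller_step(argocd, cluster_ready, "mycluster"):
            timeline.append((tick, "registered"))
        if argocd.try_sync("mycluster"):
            timeline.append((tick, "content synced"))
            break
        timeline.append((tick, "content sync failed, will retry"))
    return timeline


timeline = simulate(ready_at_tick=2)
for tick, event in timeline:
    print(tick, event)
```

Nobody intervenes between the failed attempts and the successful one. The content manifests were submitted up front, and they go through as soon as the registration happens.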

Linked Updates

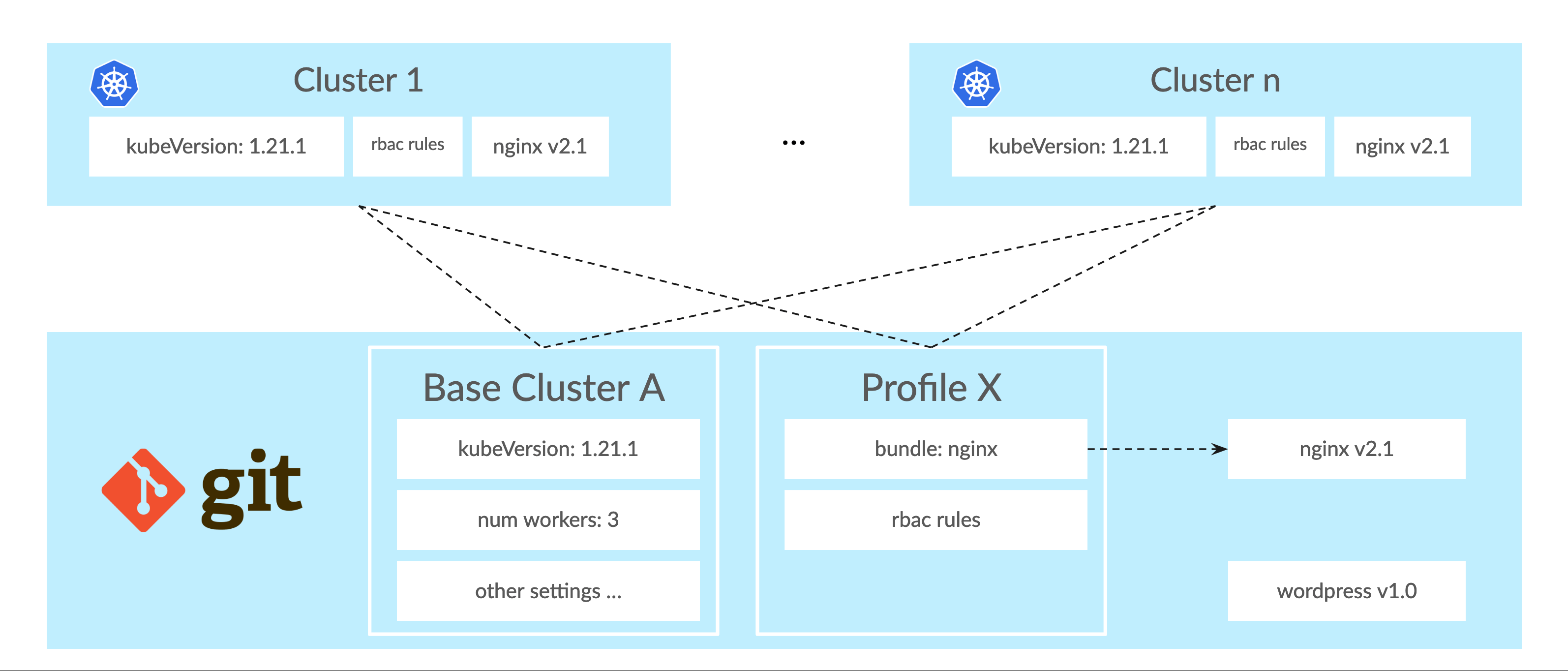

We’ve seen how base clusters and profiles help you manage manifests at scale. The Linked Updates feature helps you with change management when dealing with large numbers of clusters. Remember that an Arlon workload cluster is created from a base cluster and a profile. By default, base clusters and profiles are shared with, or linked to, all clusters created from them. The accompanying diagram illustrates n clusters created from base cluster A and profile X.

Base clusters and profiles live in git as well, which means that they can be modified in a version-controlled way. When you modify a base cluster, the change propagates to all workload clusters originally created from it. In the illustrated example, if you changed the Kubernetes version of base cluster A from 1.21.1 to 1.22.0, the ClusterAPI or Crossplane controller(s) would automatically initiate a Kubernetes upgrade of all n clusters to the new version.

You can also modify the bundle composition of a profile. If, for example, you add a bundle to X, and this bundle contains a reference to the wordpress application manifest in git, the application will automatically be deployed to all n clusters.

Finally, if you modify an application manifest already present or referenced in a profile, the change will propagate to the clusters. For example, if you changed the nginx manifest to use version v3.0 of the application, nginx will get upgraded in the n clusters.
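The essence of linking is that clusters hold references to a shared base cluster and profile instead of private copies. The Python sketch below models that idea with plain objects. It is only an analogy under stated assumptions: the class names and `desired_state` method are hypothetical, and in the real system the sharing happens through git and GitOps rather than in-memory references.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class BaseCluster:
    name: str
    kubernetes_version: str


@dataclass
class Profile:
    name: str
    bundles: List[str] = field(default_factory=list)


@dataclass
class WorkloadCluster:
    name: str
    base: BaseCluster  # shared reference, not a copy
    profile: Profile   # shared reference, not a copy

    def desired_state(self):
        """What this cluster should converge to, derived from its links."""
        return (self.base.kubernetes_version, tuple(self.profile.bundles))


base_a = BaseCluster("A", "1.21.1")
profile_x = Profile("X", ["rbac", "ingress", "nginx"])

# Three clusters standing in for the n clusters of the example.
clusters = [WorkloadCluster(f"cluster-{i}", base_a, profile_x) for i in range(1, 4)]

# One edit to the base cluster and one to the profile...
base_a.kubernetes_version = "1.22.0"
profile_x.bundles.append("wordpress")

# ...and every linked cluster now has a new desired state.
states = {c.name: c.desired_state() for c in clusters}
for name, state in states.items():
    print(name, state)
```

A copy-and-paste approach would have required one edit per cluster. With links, the number of edits stays constant no matter how many clusters exist.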

The Arlon Middleware Library

I previously mentioned the challenge of equipping your Kubernetes clusters with the necessary add-ons to make them more usable, reliable and production-ready. Given that Arlon is providing a unified platform for cluster and content lifecycle management, it made sense for the project to assist with this task by providing a library of optional but useful (and often critical) “middleware” content for your clusters.

This is the newest and least mature portion of the project, so we initially focused on components that were particularly essential and difficult to package and configure. They currently include:

- Cluster Autoscaler (CAS)

This is essential for horizontally scalable applications, and for minimizing costs. CAS is notoriously difficult to configure for clusters managed by ClusterAPI because it requires specialized configuration on the management cluster as well as the workload clusters. We are glad to announce that Arlon comes with out-of-the-box support for CAS for ClusterAPI clusters.

- Monitoring stack

This deploys resources that enable shipping of metrics to a central server utilizing Prometheus and Grafana.

The library will grow over time, hopefully via contributions from you!

Conclusion

With Arlon, we hope to start a new journey in the domain of infrastructure and application lifecycle management. We believe that standardizing the solution on the pillars of GitOps, declarative APIs and Kubernetes will lead to simpler management of large-scale deployments. We’d love to hear your feedback, and hope for your contributions in the future.

For more information on the project:

- The project repository: https://github.com/arlonproj/arlon

- The #arlon channel at https://slack.platform9.io/

- Arlon website

- The DeveloperWeek Cloud 2022 talk (link to be posted soon)