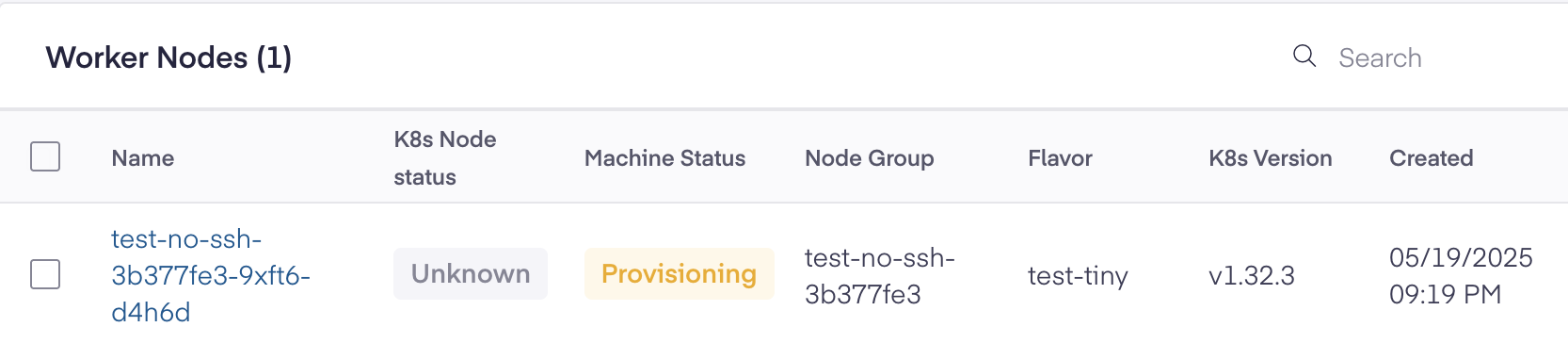

Kubernetes Worker VM Stuck in Provisioning State

Problem

- The Kubernetes cluster is stuck in the `ScalingUp` state and the machine status is stuck in the `Provisioning` state.

Environment

- Private Cloud Director Kubernetes - v2025.4 and Higher

- Component - Kubernetes

Cause

- Review the `machinedeployments` in the `<SHORTNAME_DEFAULT_SERVICE>` namespace. There are no Ready replicas.

      $ kubectl get machinedeployments -n <SHORTNAME_DEFAULT_SERVICE>
      NAME                   CLUSTER       REPLICAS   READY   UPDATED   UNAVAILABLE   PHASE       AGE   VERSION
      test-no-ssh-3b377fe3   test-no-ssh   1                  1         1             ScalingUp   22m   v1.32.3

- On further checking the `machines` objects, the VM is in the `Failed` state. The YAML output of the machine resource reports a missing key.

      $ kubectl get machines -n <SHORTNAME_DEFAULT_SERVICE>
      NAME                               CLUSTER       NODENAME   PROVIDERID   PHASE    AGE   VERSION
      test-no-ssh-3b377fe3-9xft6-d4h6d   test-no-ssh                           Failed   25m   v1.32.3

      Message:   error creating Openstack instance: Bad request with: [POST https://example1.platform9.com/nova/v2.1/<id>/servers], error message: {"badRequest": {"code": 400, "message": "Invalid key_name provided."}}
      Reason:    InstanceCreateFailed
      Severity:  Error
      Status:    False

Resolution
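
Before applying the fix below, it may help to confirm that the failed machine really reports the `Invalid key_name provided` error. The following is a minimal sketch, assuming `kubectl` access to the cluster that holds the machine objects. The function name `has_invalid_key_error` and the `NAMESPACE` and `MACHINE` variables are hypothetical placeholders introduced for illustration.

```shell
# Hypothetical helper: reads machine YAML (or describe output) on stdin and
# reports whether it contains the Nova "Invalid key_name provided" error.
has_invalid_key_error() {
  if grep -q 'Invalid key_name provided'; then
    echo "key_name error found"
  else
    echo "key_name error not found"
    return 1
  fi
}

# Example usage (placeholders; replace with real values):
#   NAMESPACE='<SHORTNAME_DEFAULT_SERVICE>'
#   MACHINE='test-no-ssh-3b377fe3-9xft6-d4h6d'
#   kubectl get machine "$MACHINE" -n "$NAMESPACE" -o yaml | has_invalid_key_error
```

The helper only searches text, so it can also be run against a saved copy of the machine YAML.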

- As a prerequisite, a public SSH key must be added in PCD Virtualization before creating a Kubernetes cluster. The key must be named `default`; no other name may be used. This key is bootstrapped into the worker nodes and is used to access them later.

- The key can be created in the UI under Networks and Security > SSH Keys.

- Each user needs to have an individual SSH key created.
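
As an alternative to the UI, the key could in principle be created from a command line. The sketch below assumes the OpenStack CLI is installed and already authenticated against the PCD environment as the user who will create the cluster; this article does not confirm that setup. The function name `ensure_default_keypair` and the public key path are hypothetical.

```shell
# Hypothetical helper: makes sure a keypair named "default" exists for the
# currently authenticated user. Assumes a working, authenticated OpenStack CLI.
ensure_default_keypair() {
  pubkey_file="$1"
  if openstack keypair show default >/dev/null 2>&1; then
    echo "keypair 'default' already exists"
  else
    # Upload the existing public key under the required name "default".
    openstack keypair create --public-key "$pubkey_file" default >/dev/null &&
      echo "keypair 'default' created"
  fi
}

# Example usage (the path is a placeholder):
#   ensure_default_keypair "$HOME/.ssh/id_ed25519.pub"
```

Because every user needs an individual key, this would be run once per user with that user's own credentials.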
