Security-Enhanced Linux (SELinux) is a Swiss Army knife for Linux security, and it is as relevant in commodity Kubernetes deployments as it was in yesterday’s era of artisanally crafted Linux server silos.

Whether or not you are using cloud native technology, some of the lessons we’ve learned around being transparent, disciplined in your security practices, and deliberate when creating an SELinux policy will be valuable to a broad audience of SaaS vendors and developers alike.

In this article, we’ll give you a quick example of how to reverse-engineer your SELinux security policies, along with a tutorial on how to use SELinux modules to define your application’s security portholes. This enables you to ship your Kubernetes-based application with a security policy that your clients can edit and run wherever they want, including in secured on-premises environments.

Why would you want to do that? The fact is that you have customers with unique compliance and security requirements, some of which may be government-mandated and legally enforced. These policies can be codified as SELinux rules, which give developers the flexibility to build different types of apps without manual auditing, while still serving as the evidence of due diligence that large organizations often require.

For those of you not experienced with building your own SELinux policies, we’ll show you how to use audit2allow to quickly get your application working on an SELinux system.

Glossary

- audit2allow: The original SELinux PLP tool for generating policies that unblock applications in Mandatory Access Control environments.

- audit2rbac: The analog of audit2allow for Kubernetes, available at https://github.com/liggitt/audit2rbac . (Quick note: Have you ever hit restrictions deploying Kubernetes apps in a secure environment? If so, check out audit2rbac.)

- MAC: Mandatory Access Control, where access to any resource is denied by default and must always be explicitly granted.

- RBAC: Role-Based Access Control, the MAC-style security model that Kubernetes implements.

- SELinux: Is … well… you probably already know, and if not, you should google it before reading further (also check out this link)

SELinux Rules and Kubernetes RBAC policies

The hard part about reverse engineering SELinux rules from a running application is not unique to SELinux at all, and is in fact also a problem in secured Kubernetes environments.

Since many of you reading this article may be wondering whether it’s applicable to cloud native deployments of apps which might not need SELinux considerations, the answer is yes!

You can use these same concepts to reverse-engineer Kubernetes application policies using the lovely https://github.com/liggitt/audit2rbac tool, which lets you follow the exact same procedure for your Kubernetes RBAC restrictions. RBAC, just like SELinux, uses a Mandatory Access Control policy, meaning that access is only allowed explicitly rather than by default. Thus the same families of tools that you use when fixing SELinux restrictions can be used for fixing Kubernetes API server restrictions.
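To make the shared idea concrete, here is a minimal shell sketch of what tools in this family do at their core: read a denial record and emit the rule that would have allowed it. It handles only the common single-line AVC record format, and the function name avc_to_allow is ours, purely for illustration. The real audit2allow does considerably more, such as checking rules against the current policy.

```shell
# Turn SELinux AVC denial records into candidate "allow" rules.
# Reads the files given as arguments, or stdin when none are given.
avc_to_allow() {
    awk '
    /avc:/ && /denied/ {
        src = ""; tgt = ""; cls = ""; perms = ""
        # The denied permissions sit between the first "{" and "}".
        lb = index($0, "{"); rb = index($0, "}")
        if (lb > 0 && rb > lb) {
            perms = substr($0, lb + 1, rb - lb - 1)
            gsub(/^ +| +$/, "", perms)
        }
        # The type is the third colon-separated field of each context.
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^scontext=/) { split($i, a, ":"); src = a[3] }
            if ($i ~ /^tcontext=/) { split($i, a, ":"); tgt = a[3] }
            if ($i ~ /^tclass=/)   { cls = substr($i, 8) }
        }
        if (src != "" && tgt != "" && cls != "" && perms != "")
            print "allow " src " " tgt ":" cls " { " perms " };"
    }' "$@" | sort -u
}
```

Given a record in which a useradd_t process was denied write on a usr_t directory, this prints allow useradd_t usr_t:dir { write }; which is the braced form of the rule audit2allow would generate.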

Anyway, once you go through this tutorial, you’ll also have plenty of intuition into the zen of how audit2rbac works. Huge thanks to Jordan Liggitt for this great tool!

What Does SELinux Mean For You

Now, without further ado, let’s dive into what SELinux provides:

My cloud is locked down – So why do I care about SELinux?

Securing the perimeter of a system isn’t the same as securing the system on the inside. Systems have a variety of users who can perform different actions, and there are trusted users on any computer system who can inadvertently compromise the data or security of a system, even if they are correctly logged in and authenticated. Thus part of securing a distributed system is protecting it from itself. There are plenty of articles explaining SELinux and why it’s important, but one example worth noting from recent history is the latest Kubernetes CVE, which is prevented by SELinux.

But I’m also running a SaaS – do I still need SELinux?

In cloud native environments you might think you can get away with less internal security since “Bob” and “Alice” aren’t SSH’ing into your machines every 5 minutes. However, imagine now that Bob and Alice are, instead, running hundreds or thousands of processes on a distributed cluster of 100s of nodes, as root – writing data to various temporary directories. That’s actually the default security story for Docker. So… you’re still really in need of an internal threat model and security solution, even if your applications are containerized and your users are decoupled from the scheduling of processes.

How does SELinux work in a Cloud Native world?

The same way as it always has: By labeling stuff in the kernel. This ensures its relevance in the shifting tides of hipster application environments, which continue changing deployment models without, oftentimes, embedding threat models as part of the application stack.

Thankfully, SELinux continues to watch over all activity at the operating system level. As mentioned earlier, SELinux protects you at the core: it labels processes and resources inside the kernel, and forces interactions to be authorized before, rather than after, they happen.

How SELinux protects your Kubernetes cluster from itself

SELinux prevents unforeseen security compromises (“unknown unknowns”) from causing problems that you never would have been able to imagine beforehand.

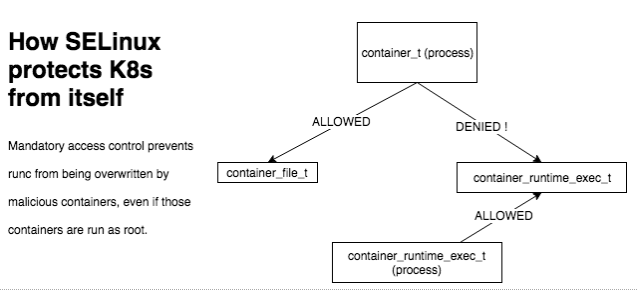

For example, it prevents the aforementioned Kubernetes CVE – which involves using RunC to maliciously gain root privileges on the host running the container, enabling unlimited access to the server as well as any other containers on that server.

The diagram shows how this security vulnerability works and how SELinux stops it.

In short: processes labeled container_t (which are what you run in your pods) aren’t able to write to files that are labeled container_runtime_exec_t. Since RunC is indeed labeled container_runtime_exec_t, it can’t be overwritten, even by a process running as root, as long as the underlying container runtime is running with the --selinux-enabled flag.

If you look closer with the SELinux management utilities, you can see that the container_file_t label is associated with the kubelet:

[root@vm1 vagrant]# semanage fcontext -l | grep kub | grep container_file

/var/lib/kubelet(/.*)? all files system_u:object_r:container_file_t:s0

[root@vm1 vagrant]# semanage fcontext -l | grep docker | grep container_file

/var/lib/docker/vfs(/.*)? all files system_u:object_r:container_file_t:s0
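If you want to script this kind of check, note that the type is the third colon-separated field of a context such as system_u:object_r:container_file_t:s0. A minimal sketch follows; the helper name selinux_type and the runc path in the comment are assumptions for illustration, not something taken from a particular distro.

```shell
# Print the SELinux type (third colon-separated field) of a context string.
selinux_type() {
    printf '%s\n' "$1" | cut -d: -f3
}

# Example on an SELinux host (GNU stat prints a file context with %C).
# The runc path is an assumption and varies by distro:
#   selinux_type "$(stat -c %C /usr/bin/runc)"
```

Comparing the result against container_runtime_exec_t is a quick way to confirm that the runtime binary carries the label the protection relies on.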

Delivering SaaS Applications On-prem with SELinux

A huge benefit of first-generation SaaS applications was the fact that installation and security were managed for you by a third party, allowing you to focus on your business. However, as we all know:

- SaaS services are an awesome gateway drug to your product.

- SaaS services often eventually hit a cost vs. scalability bottleneck, and at that point, an on-prem solution provides your customers with an escape hatch from paying you for the cloud infrastructure costs as well as for the SaaS licenses.

- Also, for some customers, SaaS is not an option, and they will require your application to be installed and run in their on-premises datacenter.

- Your clients’ datacenters probably run some kind of security software, and often it’s SELinux.

Thus, if you want to sell your software anywhere, and allow your large customers to install it on any infrastructure, including their on-premises data centers, you’ll probably need to know how to work in secure environments. That means your apps need to work with setenforce 1, which puts SELinux in enforcing mode: security policies are enforced, and anything that hasn’t been explicitly allowed is blocked.
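A preflight check in your installer can confirm which mode a host is actually in. This sketch reads the kernel’s selinuxfs flag at /sys/fs/selinux/enforce (1 means enforcing, 0 means permissive) rather than calling getenforce, so it does not depend on the SELinux utilities being installed. The function name is hypothetical, and treating a missing file as “disabled” is a simplifying assumption.

```shell
# Report the SELinux mode by reading the selinuxfs "enforce" flag.
# An alternate path can be passed as $1 (useful for testing).
selinux_mode() {
    enforce_file="${1:-/sys/fs/selinux/enforce}"
    if [ ! -r "$enforce_file" ]; then
        # No selinuxfs flag: SELinux is disabled or not present.
        echo "disabled"
        return 0
    fi
    case "$(cat "$enforce_file")" in
        1) echo "enforcing" ;;
        0) echo "permissive" ;;
        *) echo "unknown" ;;
    esac
}

# Typical use in an installer:
#   [ "$(selinux_mode)" = "enforcing" ] || echo "note: host is not enforcing" >&2
```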

Can you ship software that works on SELinux-enabled environments, even if the defaults break you?

YES! Telling some customers to disable SELinux is like a 16-year-old asking their parents to buy them beer. They understand why you want them to do it, but they might not listen, and they probably trust you a little less just for asking.

So stop asking (if you can), and embrace their security model – before they find another vendor that does…. How? Using SELinux modules.

Using SELinux modules you can ship modular SELinux subunits which define how to set up and deploy your specific application’s security portholes, without asking your clients to disable (or make permissive) SELinux. This will make your clients very happy because they likely don’t have an easy way to disable SELinux on their existing platforms.

Show me how!

Tutorial: How to use SELinux Modules to define your application’s security portholes

It’s super easy.

- The first thing you need to do is run your app on an Enforcing (or permissive) system. I prefer Enforcing because I can watch my app break, giving me insight into where its potential security issues are.

- We have an example of how to easily spin up a fresh enforcing CentOS box on your laptop right here: https://github.com/platform9/selinuxmodules (just use vagrant up). This example also installs a lot of SELinux utilities for you. Note that almost any modern Linux distro can run with setenforce 1, so you don’t have to use our example at all.

- While running your app, tail /var/log/audit/audit.log. This will give you detailed information about which actions are being blocked by the enforcing policy.

- Copy your AVC logs, and use them to make a policy file. This is the magic step! You can see https://jayunit100.blogspot.com/2019/07/iterative-hardening-of-kubernetes-and.html for details, but in short, just do this:

audit2allow -M myselinuxmodule < AVC

- At this point, you’ll have a .te file, which has your SELinux policy defined in it. This single file tells any SELinux system how to open up security for the specific action you need. For example, at Platform9, we recently found this module necessary for one of our scripts to run (this file is called “pf9.te”):

module pf9 1.0;

require {
    type usr_t;
    type useradd_t;
    class dir write;
    class file { getattr open read };
}

#============= useradd_t ==============
#!!!! This avc is allowed in the current policy
allow useradd_t usr_t:dir write;

- Now what? Now you can share this with your customer. Or better yet, you can give them a script which applies this policy for them:

checkmodule -M -m -o ./pf9.mod ./pf9.te
semodule_package -o pf9.pp -m pf9.mod
semodule -i pf9.pp

HINT: Make sure you name the module file the same as the name defined in the “module pf9 1.0;” line of the .te file. Otherwise, you’ll get an error like this: “checkmodule: Module name pf9 is different than the output base filename pf9.”

- Anyone should now be able to run these three commands on any SELinux system to compile your .te file, package it into a loadable SELinux policy module, and apply it right away! At that point, your application should be able to run just fine. Securely.
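The steps above can be wrapped in a small script to hand to customers. The sketch below uses the same checkmodule, semodule_package, and semodule commands, and first checks that the module name declared in the .te file matches the file name, since a mismatch makes checkmodule fail. The function names are hypothetical, and the script assumes it runs as root on a host with the SELinux policy tools installed.

```shell
# Read the name declared on the "module NAME VERSION;" line of a .te file.
te_module_name() {
    sed -n 's/^[[:space:]]*module[[:space:]]\{1,\}\([^[:space:];]\{1,\}\).*/\1/p' "$1" | head -n 1
}

# Compile, package, and load a policy module from a .te file.
# Output files (.mod and .pp) are written to the current directory.
install_te_module() {
    te_file="$1"
    base="$(basename "$te_file" .te)"
    name="$(te_module_name "$te_file")"
    # checkmodule rejects a module whose name differs from the file name,
    # so fail early with a clearer message.
    if [ "$name" != "$base" ]; then
        echo "module name '$name' does not match file name '$base.te'" >&2
        return 1
    fi
    checkmodule -M -m -o "$base.mod" "$te_file" &&
    semodule_package -o "$base.pp" -m "$base.mod" &&
    semodule -i "$base.pp"
}
```

A customer would then run install_te_module ./pf9.te and can confirm the result with semodule -l, which lists the loaded modules.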

Note that there are a lot of other intricacies to how to work around client SELinux systems when you ship your application to on-premises deployments.

For example, they may not want to apply a particular module you provide to them. Don’t worry: the fact that you at least provide SELinux modules as a starting point is, in and of itself, going to increase your trust capital with the security team at your client’s data center. So, don’t be afraid, and don’t forget: setenforce 1.

We encourage you to ship your own SELinux functionality as required by your customers by contributing to, borrowing, reusing, or flat-out forking and copy/pasting from our GitHub repository: https://github.com/platform9/selinuxmodules/ .