The cloud is evolving. As the “standard” cloud has become pervasive, we see specialized clouds emerge: ML clouds, Fin clouds, Telco clouds, and Edge clouds, to name a few. All share a few common goals: simplification of operation, ease of integration with a DevOps pipeline, and infrastructure flexibility. But differences in focus emerge, such as specialized networking or other hardware assistance for specific workloads, the ability to run VMs and containers side-by-side, and migration support.

Platform9 has hard-won experience building, operating, and supporting most of these types of clouds. For nearly a decade, we have supported customers with:

- virtualizing their workloads

- onboarding their applications

- supporting their re-design to cloud native

- running Kubernetes and VMs side-by-side

As Kubernetes clusters grew, we helped customers:

- scale to new levels

- upgrade and support increasing service complexity

- provide better observability

- improve their ability to manage their CI/CD

In the past two years, we saw a combination of use-cases pushed further to the edge. We had fun debates with customers, team members, and analysts as we all tried to define where the edge might be. The edge is the location close enough to the workload to satisfy the latency, data protection/security, cost, and manageability requirements.

You can think about scaling along two dimensions, both of which have unique challenges:

- scaling of the edge (# of locations)

- scaling of the compute (# of nodes in a location)

Traditional clouds were created to solve the compute scale problem. If you have a few locations with a very high scale of infrastructure, you can:

- achieve a high degree of flexibility in operations

- operate with little management plane overhead

- use the compute with high efficiency

- offer very flexible and cost-effective access

However, as you get closer to the user and the edge, the problem expands to a new dimension: management of the number of sites becomes more difficult. Also, the scaling of the number of locations AND the number of nodes becomes key to the solution.

Another concern in a hybrid cloud that includes edge nodes and clusters is the footprint size. The solution must reduce the overall footprint and remove the “waste” of compute used for management at the customer site.
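To make the footprint concern concrete, here is a minimal sketch of the arithmetic. It assumes, purely for illustration, that a locally hosted management plane takes a fixed number of nodes at every site; the function name and all numbers are hypothetical and are not Platform9 measurements.

```python
def management_footprint(sites: int, worker_nodes_per_site: int,
                         mgmt_nodes_per_site: int) -> tuple[int, float]:
    """Return (total management nodes, fraction of all nodes used for management).

    All inputs are illustrative assumptions, not measured values.
    """
    total_mgmt = sites * mgmt_nodes_per_site
    total_nodes = sites * (worker_nodes_per_site + mgmt_nodes_per_site)
    if total_nodes == 0:
        return 0, 0.0
    return total_mgmt, total_mgmt / total_nodes


# A few large sites: the management nodes are a rounding error.
print(management_footprint(sites=3, worker_nodes_per_site=1000, mgmt_nodes_per_site=3))

# Many small edge sites with on-site management: half of the fleet is overhead.
print(management_footprint(sites=1000, worker_nodes_per_site=3, mgmt_nodes_per_site=3))

# Same edge fleet with no management nodes at the site.
print(management_footprint(sites=1000, worker_nodes_per_site=3, mgmt_nodes_per_site=0))
```

Under these assumed numbers, the per-site overhead that is negligible in a large data center dominates once sites shrink to a handful of nodes and multiply into the thousands.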

Platform9 runs the management plane in the cloud and does not consume any local compute. But is that enough?

In the past few years, we considered creating a special distro to achieve a small footprint for the edge. Would such a specialized point solution serve the edge better? After looking at the needs of the edge, we concluded that the answer is NO. Conclusively. Allow me to explain.

In the recent past, some buzz was created around K3s. The initial setup of such clusters can be done faster than our full Kubernetes clusters, but the saving is measured in minutes only.

The performance impact on the vCPUs is marginal. Further, we discovered that the full-stack memory usage is higher (~200MB). However, adding monitoring (Prometheus, Grafana, Alertmanager, and integration with Customer Support) closes this gap. Disk space and memory footprints can be reduced if monitoring is NOT included. Given that Platform9 customers expect always-on assurance, we believe that the small sacrifice in footprint pays dividends in active monitoring.

For most of our customers, the Kubernetes cluster is not enough. Given use-cases in Telco, Development, Retail, and other Distributed Systems, we see customers asking for supporting capabilities and services:

- User authentication/authorization layer

- Keepalived for K8s API endpoint

- KubeVirt

- Metal3

- ArgoCD

- Monitoring

- Addons Management

- Platform9 Advanced Networking

- Qbert, ClusterAPI

- Upgrade UX

Platform9 is deploying distributed edge clouds. These scale from tens to thousands of locations, each hosting anywhere from a handful of nodes in small sites to several racks of gear. We have years of experience with these types of deployments. Customers express their delight in the ease of implementation, our onboarding expertise, our ability to help them with competent people, and an ever-expanding feature set. I invite you to read actual customer feedback posted publicly on the independent G2 website. Platform9 was also ranked the #1 Leader in the GigaOm Radar report for managed Kubernetes.

Industry Endorsements

Platform9 named the Leader & Outperformer in GigaOm Radar report 2022

Platform9 is the #1 user-ranked container management service, with a user rating of 4.8/5

Platform9 named a “Representative Vendor” in 2021 Gartner Market Guide for Container Management

Platform9 named a “Strong Performer” in 2022 Forrester Multi-Cloud Container Management Platforms Wave report

The production environments for these solutions require ingress controllers (in many cases), CSI out of the box, zero trust policies, and DR solutions. As the number of clusters grows, we see fleet management requirements addressed via CAPI and Arlon (a separate post on that will be available soon).

Platform9 invested in certifying solutions to achieve a high level of scale per site. Some of our customers are running at hundreds of locations with specific plans to reach close to 3000 locations shortly. Our designers and engineers have been working with them to achieve the required scale. Others have been running hundreds of regions with over 600 nodes per region. There is no limit to the number of regions, and these customers expect to keep scaling both the number of regions and the nodes per region. Platform9 is doing actual testing to certify scale in a region. Our platform is proven to scale to 7000 nodes. I want to emphasize that this number is a testing limit, and further scale certifications will follow.

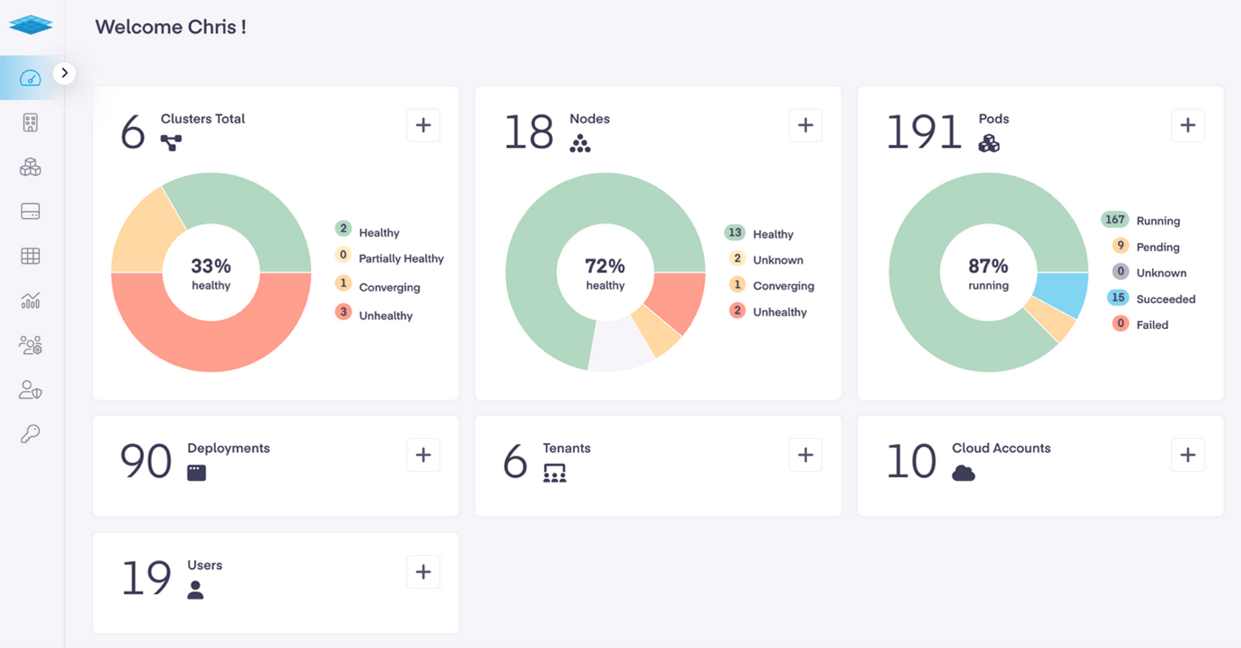

Platform9 runs a free-tier service. As such, customers, developers, and Kubernetes enthusiasts can download and run a cluster to get a sense of what the system is capable of. We see ~1,000 customers and clusters (with 10,000 nodes) continuously churning as more developers and enterprises test our systems. This operational experience of sustaining load and scaling in and out gives us insight into how growth- and enterprise-tier customers will experience their private instances.

This puts Platform9 in the unique position of running a multi-tenant, distributed Kubernetes environment that we host ourselves. It gives us first-hand experience testing an ever-growing live set of clusters for our customers.

Platform9 runs diverse live enterprise systems, ranging from a single small node to thousands of regions and nodes. We provide any scale for any workload in both OpenStack and Kubernetes. Our clusters span private and public clouds, forming true hybrid solutions and supporting our customers in their transformation to cloud native.
