The vast majority of Kubernetes clusters are used to host containers that process incoming requests, from microservices to full web applications. Having these incoming requests arrive at a central location, then get handed out via services in Kubernetes, gives you a single point at which to secure and control traffic entering the cluster. That central incoming point is an ingress controller.

The most common product used as an ingress controller for privately-hosted Kubernetes clusters is NGINX. NGINX has most of the features enterprises are looking for, and will work as an ingress controller for Kubernetes regardless of which cloud, virtualization platform, or Linux operating system Kubernetes is running on.

In this blog post we will go over how to set up an NGINX Ingress Controller using two different methods.

First Steps

The first step required to use NGINX as an Ingress controller on a Platform9 Managed Kubernetes cluster is to have a running Kubernetes cluster. If you do not currently have a Platform9 Managed Kubernetes account, you can create one HERE.

There are a couple of ways to set up a BareOS cluster. To take advantage of the LoadBalancer service, we recommend deploying with MetalLB configured.

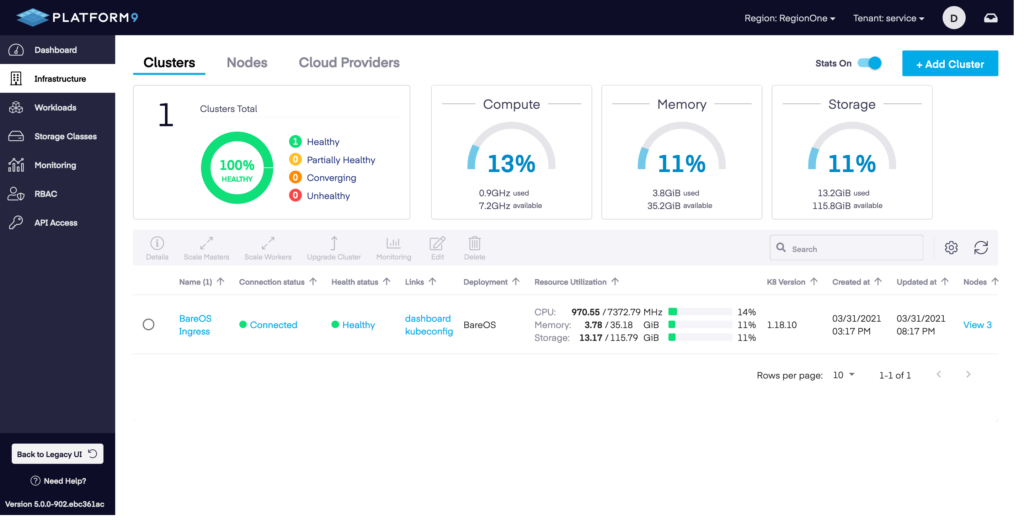

In this case the cluster we will be using is called BareOS Ingress and it is listed as healthy. It is a multi-node cluster (one master and three workers in the output below) running on Ubuntu 20.04.

    $ kubectl get nodes -o wide
    NAME            STATUS   ROLES    AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION     CONTAINER-RUNTIME
    192.168.86.71   Ready    master   10d   v1.20.5   192.168.86.71   <none>        Ubuntu 20.04.2 LTS   5.4.0-84-generic   docker://19.3.11
    192.168.86.72   Ready    worker   10d   v1.20.5   192.168.86.72   <none>        Ubuntu 20.04.2 LTS   5.4.0-84-generic   docker://19.3.11
    192.168.86.73   Ready    worker   10d   v1.20.5   192.168.86.73   <none>        Ubuntu 20.04.2 LTS   5.4.0-84-generic   docker://19.3.11
    192.168.86.74   Ready    worker   10d   v1.20.5   192.168.86.74   <none>        Ubuntu 20.04.2 LTS   5.4.0-84-generic   docker://19.3.11

    $ kubectl get namespaces
    NAME                   STATUS   AGE
    default                Active   10d
    kube-node-lease        Active   10d
    kube-public            Active   10d
    kube-system            Active   10d
    kubernetes-dashboard   Active   10d
    metallb-system         Active   10d
    pf9-addons             Active   10d
    pf9-monitoring         Active   10d
    pf9-olm                Active   10d
    pf9-operators          Active   10d
    platform9-system       Active   10d

Running kubectl get nodes and kubectl get namespaces confirms that authentication is working, the cluster nodes are ready, and there are no NGINX Ingress controllers configured.
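For repeated checks, the node status can be summarized rather than read by eye. The following is a minimal sketch and not part of the Platform9 tooling: `count_not_ready` is a hypothetical helper name, and it treats any STATUS other than exactly `Ready` (including `Ready,SchedulingDisabled`) as not ready.

```shell
# Count nodes whose STATUS column is not exactly "Ready".
# Reads `kubectl get nodes --no-headers` style output on stdin.
# (count_not_ready is a hypothetical helper name used for illustration.)
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Usage against a live cluster (requires kubectl and a valid kubeconfig):
#   kubectl get nodes --no-headers | count_not_ready
# Listing pods with the ingress-nginx label should return nothing
# before the controller has been installed:
#   kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx
```

A result of 0 from the first command means every node reported `Ready`.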

Mandatory Components for an NGINX Ingress Controller

An ingress controller, because it is a core component of Kubernetes, requires configuration of more moving parts of the cluster than just deploying a pod and a route.

In the case of NGINX, its recommended configuration has three ConfigMaps:

- Base Deployment

- TCP configuration

- UDP configuration

A service account is created within the cluster to run the controller, and that service account is assigned a couple of roles.

A cluster role is assigned to the service account, which allows it to get, list, and read the configuration of all services and events. This could be limited if you were to have multiple ingress controllers. But in most cases, that is overkill.

A namespace-specific role is assigned to the service account to read and update all the ConfigMaps and other items that are specific to the NGINX Ingress controller’s own configuration.
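Once a controller has been installed, the effect of these roles can be spot-checked with `kubectl auth can-i` and impersonation. This is a sketch under assumptions: the namespace and service account are both assumed to be named `ingress-nginx` (as in the community manifest), the helper names are hypothetical, the caller needs permission to impersonate service accounts, and the answers depend on the exact manifest version deployed.

```shell
# Build the user name Kubernetes uses for a service account.
# $1: namespace, $2: service account name
sa_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server what the controller's service account may do.
# The names below are assumptions; adjust them to match your install.
check_controller_rbac() {
  local subject
  subject=$(sa_subject ingress-nginx ingress-nginx)
  # Cluster-wide read access granted through the cluster role.
  kubectl auth can-i list services --all-namespaces --as="$subject"
  # Namespace-scoped access granted through the namespace-specific role.
  kubectl auth can-i list configmaps --namespace ingress-nginx --as="$subject"
}

# Usage (requires kubectl and a valid kubeconfig):
#   check_controller_rbac
```

Each `can-i` call prints `yes` or `no`, which makes it easy to see whether a tightened role still grants what the controller needs.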

The last piece is the actual pod deployment into its own namespace to make it easy to draw boundaries around it for security and resource quotas.

The deployment specifies which ConfigMaps will be referenced, the container image and command line that will be used, and any other specific information around how to run the actual NGINX Ingress controller.

The ingress-nginx project maintains a single file on GitHub, linked to from the Kubernetes documentation, that has all this configuration spelled out in YAML and ready to deploy.
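Before applying a manifest like this, it can help to see which kinds of objects it defines and compare them with the components described above. The following is a minimal sketch: `count_kinds` is a hypothetical helper, it only looks at top-level `kind:` lines, and the URL in the usage comment is the controller-v1.0.0 manifest used in the CLI install section of this post.

```shell
# Summarize how many objects of each kind a multi-document manifest defines.
# Reads YAML on stdin and only matches "kind:" at the start of a line.
# (count_kinds is a hypothetical helper name used for illustration.)
count_kinds() {
  awk '/^kind:/ { count[$2]++ } END { for (k in count) print k, count[k] }' | sort
}

# Usage (requires network access):
#   curl -fsSL https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.0.0/deploy/static/provider/cloud/deploy.yaml | count_kinds
```

The output is one line per kind, followed by the number of objects of that kind.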

Installing via Helm is the recommended method. We will explore a Helm installation as well as a manual installation via the CLI.

Install via Helm

Platform9 supports Helm 3, which is available to anyone who wants to deploy using that method. Helm deployments are often much easier to manage.

To install an NGINX Ingress controller using Helm, use the chart nginx-stable/nginx-ingress, which is available in the official repository.

helm repo add nginx-stable https://helm.nginx.com/stable

helm repo update

To install the chart with the release name ingress-nginx:

helm install ingress-nginx nginx-stable/nginx-ingress

If the Kubernetes cluster has RBAC enabled, then run:

helm install ingress-nginx nginx-stable/nginx-ingress --set rbac.create=true
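To keep the controller in its own namespace, as described earlier, the same chart can be installed with a target namespace. This is a sketch rather than a Platform9-specific procedure: the function name is hypothetical, the namespace `ingress-nginx` is an assumed default, and `--create-namespace` assumes Helm 3.2 or later.

```shell
# Install the chart into a dedicated namespace, then print the release status.
# $1: namespace to install into (defaults to ingress-nginx, an assumed name)
install_nginx_ingress_chart() {
  local namespace="${1:-ingress-nginx}"
  helm install ingress-nginx nginx-stable/nginx-ingress \
    --namespace "$namespace" \
    --create-namespace || return 1
  helm status ingress-nginx --namespace "$namespace"
}

# Usage (requires helm, the nginx-stable repo added as above, and a valid kubeconfig):
#   install_nginx_ingress_chart
```

Because the release lives in that namespace, later `helm upgrade` and `helm uninstall` commands need the same `--namespace` value.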

Install via CLI

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.0.0/deploy/static/provider/cloud/deploy.yaml

This generates the following output:

    namespace/ingress-nginx created
    serviceaccount/ingress-nginx created
    configmap/ingress-nginx-controller created
    clusterrole.rbac.authorization.k8s.io/ingress-nginx created
    clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx created
    role.rbac.authorization.k8s.io/ingress-nginx created
    rolebinding.rbac.authorization.k8s.io/ingress-nginx created
    service/ingress-nginx-controller-admission created
    service/ingress-nginx-controller created
    deployment.apps/ingress-nginx-controller created
    ingressclass.networking.k8s.io/nginx created
    validatingwebhookconfiguration.admissionregistration.k8s.io/ingress-nginx-admission created
    serviceaccount/ingress-nginx-admission created
    clusterrole.rbac.authorization.k8s.io/ingress-nginx-admission created
    clusterrolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
    role.rbac.authorization.k8s.io/ingress-nginx-admission created
    rolebinding.rbac.authorization.k8s.io/ingress-nginx-admission created
    job.batch/ingress-nginx-admission-create created
    job.batch/ingress-nginx-admission-patch created

Exposing the NGINX Ingress Controller

Once the base configuration is in place, the next step is to expose the NGINX Ingress Controller to the outside world to allow it to start receiving connections. This could be through a load balancer, such as those on AWS, GCP, or Azure, or on BareOS with MetalLB. The other option, when deploying on your own infrastructure without MetalLB or on a cloud provider with fewer capabilities, is to create a service with a NodePort to allow access to the Ingress Controller.

LoadBalancer

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.0.0/deploy/static/provider/cloud/deploy.yaml

NodePort

The bare-metal variant of the manifest, which is also located on GitHub, defines a NodePort service that exposes ports 80 and 443. It can be applied using a single command line, as done before.

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.0.0/deploy/static/provider/baremetal/deploy.yaml
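With a NodePort service, clients connect to a node IP on the port Kubernetes allocated rather than on 80 or 443 directly. The sketch below shows one way to find that port: `node_port_for` is a hypothetical helper that parses a PORT(S) value as printed by `kubectl get services`, and the service and namespace names in the usage comment are the ones created by the manifest above.

```shell
# Extract the node port mapped to a given service port.
# $1: PORT(S) value such as "80:32495/TCP,443:30703/TCP"
# $2: service port to look up, for example 443
# (node_port_for is a hypothetical helper name used for illustration.)
node_port_for() {
  printf '%s\n' "$1" | tr ',' '\n' | awk -F'[:/]' -v p="$2" '$1 == p { print $2 }'
}

# Usage with a value copied from `kubectl get services`:
#   node_port_for "80:32495/TCP,443:30703/TCP" 443
# The same value can be read straight from the API with a JSONPath query:
#   kubectl get service ingress-nginx-controller --namespace ingress-nginx \
#     -o jsonpath='{.spec.ports[?(@.port==80)].nodePort}'
```

The JSONPath form is more robust for scripts, since it does not depend on the column layout of the table output.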

Validate the NGINX Ingress Controller

The final step is to make sure the Ingress controller is running.

    $ kubectl get pods --all-namespaces -l app.kubernetes.io/name=ingress-nginx
    NAMESPACE       NAME                                        READY   STATUS      RESTARTS   AGE
    ingress-nginx   ingress-nginx-admission-create-wb4rm        0/1     Completed   0          17m
    ingress-nginx   ingress-nginx-admission-patch-dqsnv         0/1     Completed   2          17m
    ingress-nginx   ingress-nginx-controller-74fd5565fb-lw6nq   1/1     Running     0          17m

    $ kubectl get services ingress-nginx-controller --namespace=ingress-nginx
    NAME                       TYPE           CLUSTER-IP    EXTERNAL-IP   PORT(S)                      AGE
    ingress-nginx-controller   LoadBalancer   10.21.1.110   10.0.0.3      80:32495/TCP,443:30703/TCP   17m

Exposing Services using NGINX Ingress Controller

Now that an ingress controller is running in the cluster, you will need to create services that leverage it using host mapping, URI mapping, or both.

First we will create a deployment and a service that the ingress resource can point to.

Deployment:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello-world
      labels:
        app: hello-world
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: hello-world
      template:
        metadata:
          labels:
            app: hello-world
        spec:
          containers:
          - name: hello-world
            image: gcr.io/google-samples/node-hello:1.0
            ports:
            - containerPort: 8080

Service:

    apiVersion: v1
    kind: Service
    metadata:
      name: hello-world
    spec:
      ports:
      - port: 80
        targetPort: 8080
      selector:
        app: hello-world

The example hostnames will need to be updated to a host that you own or can modify DNS records for.

Sample of a host-based service mapping through an ingress controller using the type “Ingress”:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-world
      annotations:
    spec:
      ingressClassName: nginx
      rules:
      - host: host1.domain.ext
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-world
                port:
                  number: 80

Using a URI involves the same basic layout, but with more details specified in the “paths” section of the YAML file.

When TLS encryption is required, you will need to have certificates stored as secrets inside Kubernetes. This can be done manually or with an open source tool like cert-manager. The YAML file needs a little extra information to enable TLS (mapping from port 443 to port 80 is done in the ingress controller):

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: hello-world
      annotations:
        cert-manager.io/cluster-issuer: letsencrypt-prod
    spec:
      ingressClassName: nginx
      tls:
      - hosts:
        - host1.domain.ext
        - host2.domain.ext
        secretName: hello-kubernetes-tls
      rules:
      - host: host1.domain.ext
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-world
                port:
                  number: 80

Next Steps

With a fully functioning cluster and ingress controller, even a single-node one, you are ready to start building and testing applications just like you would in your production environment, with the same ability to test your configuration files and application traffic routing. On a single-node cluster you just have some capacity limitations that won’t happen on true multi-node clusters.
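As a starting point for testing traffic routing, a small smoke test can wait for the controller and then send a request through it with the expected Host header. This is a sketch under assumptions: `smoke_test_ingress` is a hypothetical helper name, the controller is assumed to run in the `ingress-nginx` namespace with the `app.kubernetes.io/component=controller` label used by the community manifest, and the host and address are the example values from this post.

```shell
# Wait for the controller pod, then request "/" through the ingress.
# $1: hostname configured in the Ingress rule, e.g. host1.domain.ext
# $2: address of the controller service, e.g. 10.0.0.3 for a LoadBalancer
#     or <node-ip>:<node-port> for a NodePort service
smoke_test_ingress() {
  local host="$1" address="$2"
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=120s || return 1
  # Send the Host header explicitly so DNS does not need to be set up yet.
  curl --silent --show-error --fail --header "Host: ${host}" "http://${address}/"
}

# Usage (requires kubectl, curl, and network access to the cluster):
#   smoke_test_ingress host1.domain.ext 10.0.0.3
```

Setting the Host header by hand exercises the host-based rule without touching DNS, which is convenient while the hostnames are still placeholders.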