Over the past 12 months, the team at Platform9 has been working hard to create a completely new way to build, manage, and scale Kubernetes clusters. We call it Profile Engine Stage 2.

Profile Engine Stage 2 is built on Arlon, a new open source project that integrates ArgoCD with Cluster API and that anyone can use. It extends our cloud native platform with simple, Git-based cluster lifecycle management and governance at scale, and is available now in our latest release, version 5.6.

Platform9 version 5.6 transforms how we manage Kubernetes clusters. Specifically, users in AWS can now choose either native Kubernetes clusters running Platform9 Managed Control Planes for a bespoke experience, or Amazon EKS for a more standard AWS experience. In addition to EKS lifecycle support, 5.6 introduces Node Pools to help control costs, Assume Role for enhanced security, OS upgrades to ensure compliance, and the industry’s most flexible upgrade experience.

In addition to transforming our AWS capabilities, we continued to simplify how developers and DevOps engineers interact with live workloads by creating a dedicated space for cluster management that lives outside of the developer experience.

Profile Engine Stage 2

Profile Engine was first introduced in 5.4. It brought the ability to snapshot a cluster’s RBAC configuration, customize the snapshot, and save the objects as profiles that are then rolled out to govern a fleet of clusters. The 5.4 release also included the ability to run a comparative ‘diff’ between a given cluster’s running state and a profile, removing the enormous challenge of working out why and how users, service accounts, or applications had access on one cluster and not another.
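To make the idea of a profile ‘diff’ concrete, here is a minimal sketch, not Platform9’s implementation. It assumes each RBAC grant can be flattened into a (subject, role, namespace) tuple; `Binding` and `diff_rbac` are hypothetical names. Two set differences then show what the profile expects but the cluster lacks, and what the cluster grants that the profile does not.

```python
from typing import NamedTuple


class Binding(NamedTuple):
    """One RBAC grant: a subject bound to a role, optionally in a namespace."""
    subject: str    # e.g. "User:alice" or "ServiceAccount:ci/deployer"
    role: str       # Role or ClusterRole name
    namespace: str  # "" for a cluster-wide (ClusterRoleBinding) grant


def diff_rbac(profile: set[Binding], live: set[Binding]) -> dict[str, set[Binding]]:
    """Compare a profile's desired bindings with a cluster's live bindings."""
    return {
        "missing": profile - live,     # defined in the profile, absent on the cluster
        "unexpected": live - profile,  # present on the cluster, not in the profile
    }


profile = {
    Binding("User:alice", "view", "payments"),
    Binding("ServiceAccount:ci/deployer", "edit", "payments"),
}
live = {
    Binding("User:alice", "admin", "payments"),
    Binding("ServiceAccount:ci/deployer", "edit", "payments"),
}

result = diff_rbac(profile, live)
```

Here the diff flags that alice holds `admin` on the cluster where the profile only grants `view`, which is exactly the kind of cross-cluster discrepancy that is hard to spot by hand.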

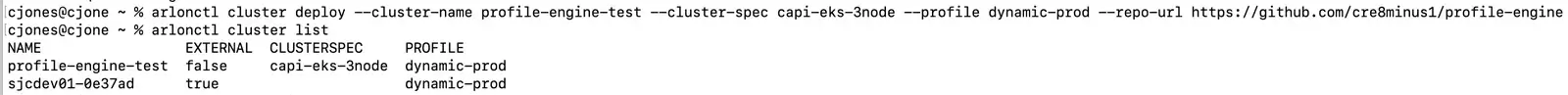

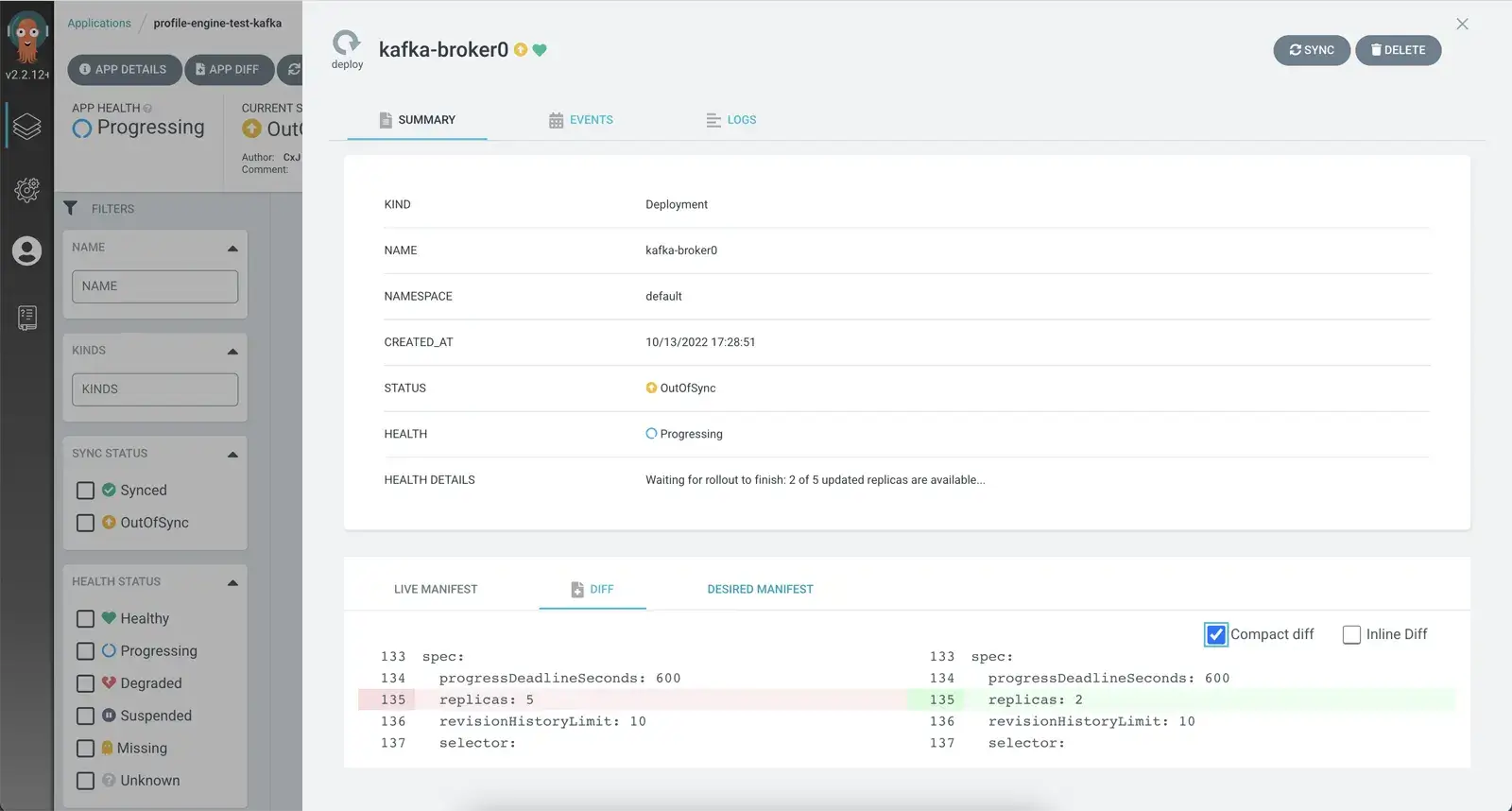

Stage 2 focuses on templating ‘Complete Clusters’, so that whole cloud native environments can be created in an instant. Gone is the need to use Terraform to first build a cluster, wait for it to come online, and then manually connect it to a continuous deployment tool. Only after that is done can you lay down your cluster policies and finally install your applications. Profile Engine Stage 2 wraps this entire workflow into a single step!

Using a single system to build complete clusters solves three issues at once. The first is scale: more clusters mean more individual, unrelated objects to manage. The second is resiliency, and the third is developer productivity.

Scale

Profile Engine Stage 2 addresses scale because all clusters, or any subset of them, can be managed from a single template, so a change can be rolled out across every matching cluster at once. By ensuring that ‘like’ clusters all operate from a single defined state, it removes the burden of managing clusters individually.
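The fan-out idea can be sketched in a few lines. This is an illustration under stated assumptions, not how Profile Engine selects clusters: it assumes clusters carry labels and a template targets them with an equality-based label selector, and `ClusterTemplate`, `matches`, and `desired_state` are hypothetical names. Every matched cluster derives its desired state from the one template, so editing the template changes them all.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterTemplate:
    """Hypothetical template: desired settings plus a label selector."""
    selector: dict[str, str]
    settings: dict[str, str]


@dataclass
class Cluster:
    name: str
    labels: dict[str, str] = field(default_factory=dict)


def matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """Equality-based selection; an empty selector matches every cluster."""
    return all(labels.get(key) == value for key, value in selector.items())


def desired_state(template: ClusterTemplate, fleet: list[Cluster]) -> dict[str, dict[str, str]]:
    """Derive each matching cluster's desired settings from the single template."""
    return {
        cluster.name: dict(template.settings)  # copy, so later edits don't alias
        for cluster in fleet
        if matches(template.selector, cluster.labels)
    }


fleet = [
    Cluster("prod-us", {"env": "prod"}),
    Cluster("prod-eu", {"env": "prod"}),
    Cluster("dev-1", {"env": "dev"}),
]
template = ClusterTemplate(selector={"env": "prod"}, settings={"k8sVersion": "1.22"})
before = desired_state(template, fleet)

# One edit to the template changes the desired state of every matched cluster.
template.settings["k8sVersion"] = "1.23"
after = desired_state(template, fleet)
```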

Resiliency

Resiliency is improved by targeting two specific factors: time to recover, and the number of change-related outages. Recovery is a key construct in the cloud native world, because environments should all be stateless and therefore reproducible. Ideally, recovery is defined before a cluster is created; in practice, this is not always the case.

Profile Engine Stage 2 helps by ensuring that the defined state can be created without manual overhead, which gets you started and then makes recovery a single command. If you’re running in a public cloud region that goes offline, you can quickly redeploy the same clusters elsewhere.

Developer Productivity

Lastly, productivity is boosted as engineering teams can be allotted specific controlled templates to build out their own environments, removing the need to manage by namespace or limit their access to clusters. Profile Engine Stage 2 untethers cluster lifecycle from governance. By templating the complete cluster, engineering teams can be allowed to create their own clusters and DevOps teams no longer need to worry about what may change as everything can be pre-defined and kept conformant in real-time.

What exactly is a complete cluster?

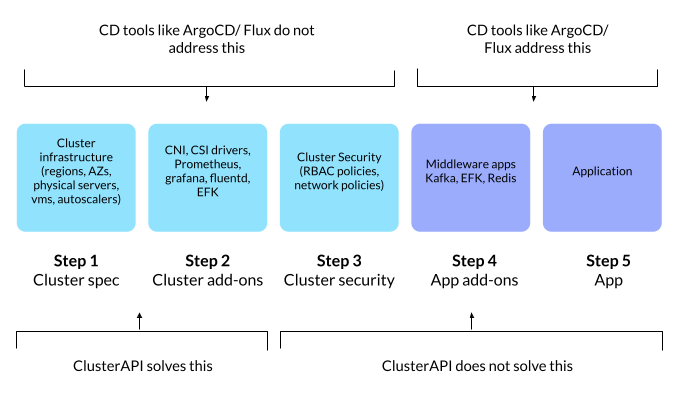

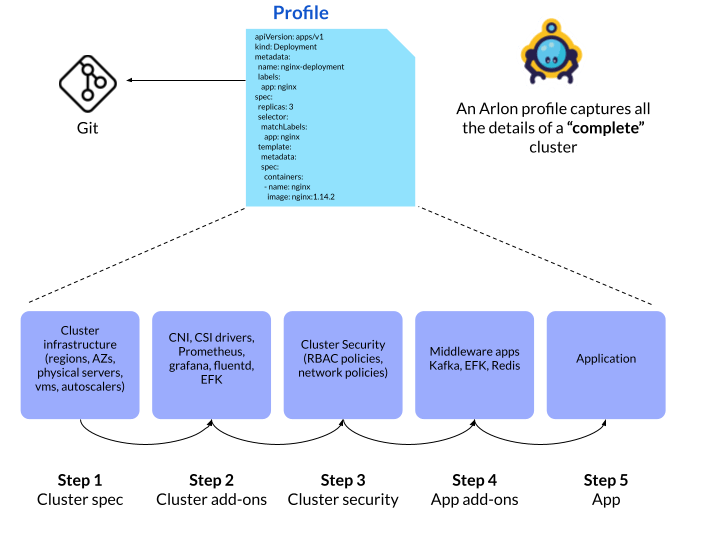

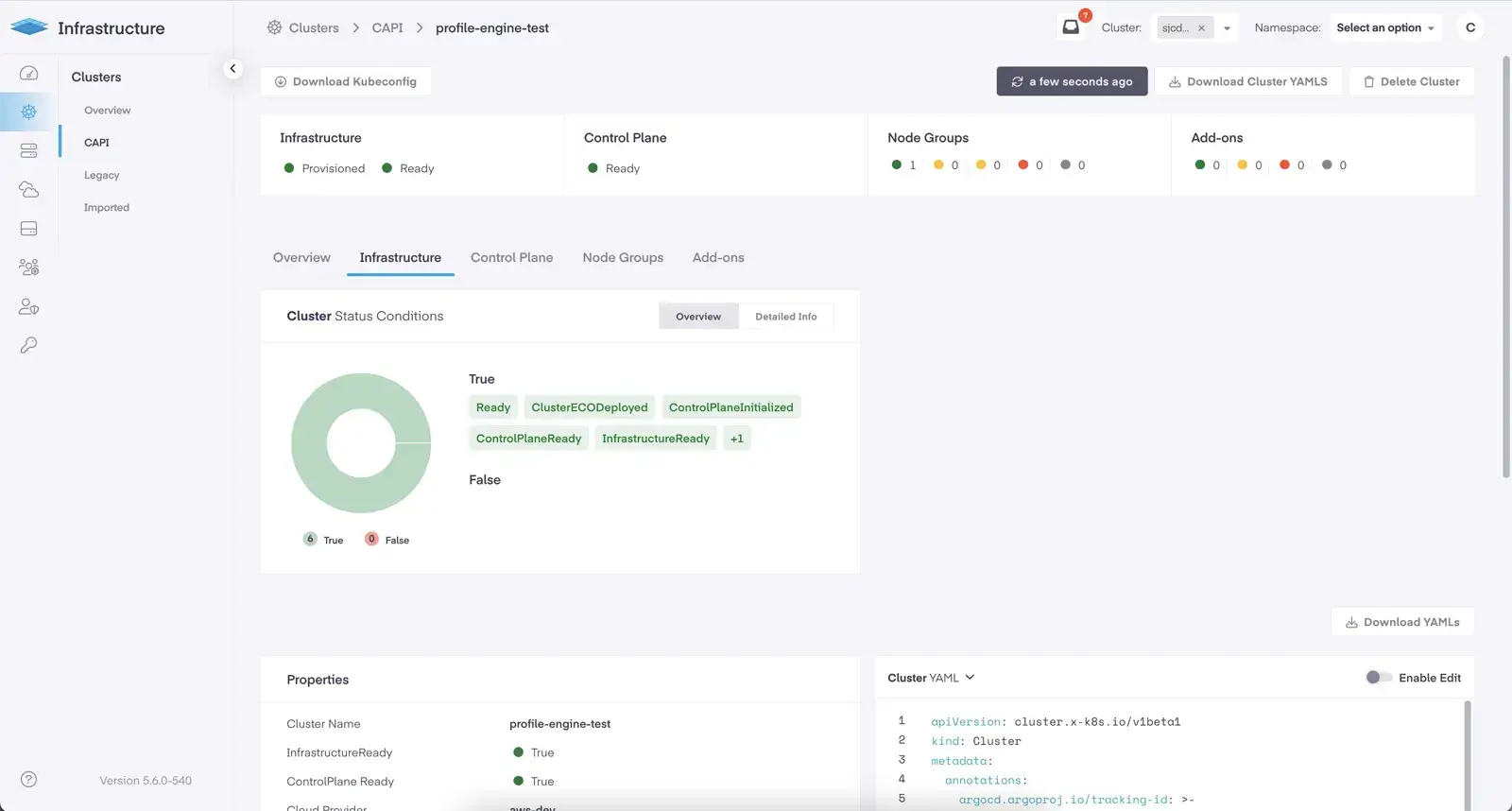

When we started talking to users about employing a Git-based workflow to build clusters, the first piece of feedback we heard was, “I wish you could install our applications too, not our business services, but the apps we add to clusters that make them usable.” This is exactly what a complete cluster is: your cluster, configured for your environment, with your applications. To build a complete cluster, Profile Engine Stage 2 implements two elements: a ClusterSpec and a Profile.

ClusterSpec: The cluster’s configuration which includes the cloud’s infrastructure, cloud region, K8s version, nodes/node pools, spot instances, & CNI. Each ClusterSpec captures how and where a cluster will run and is then paired with a Profile.

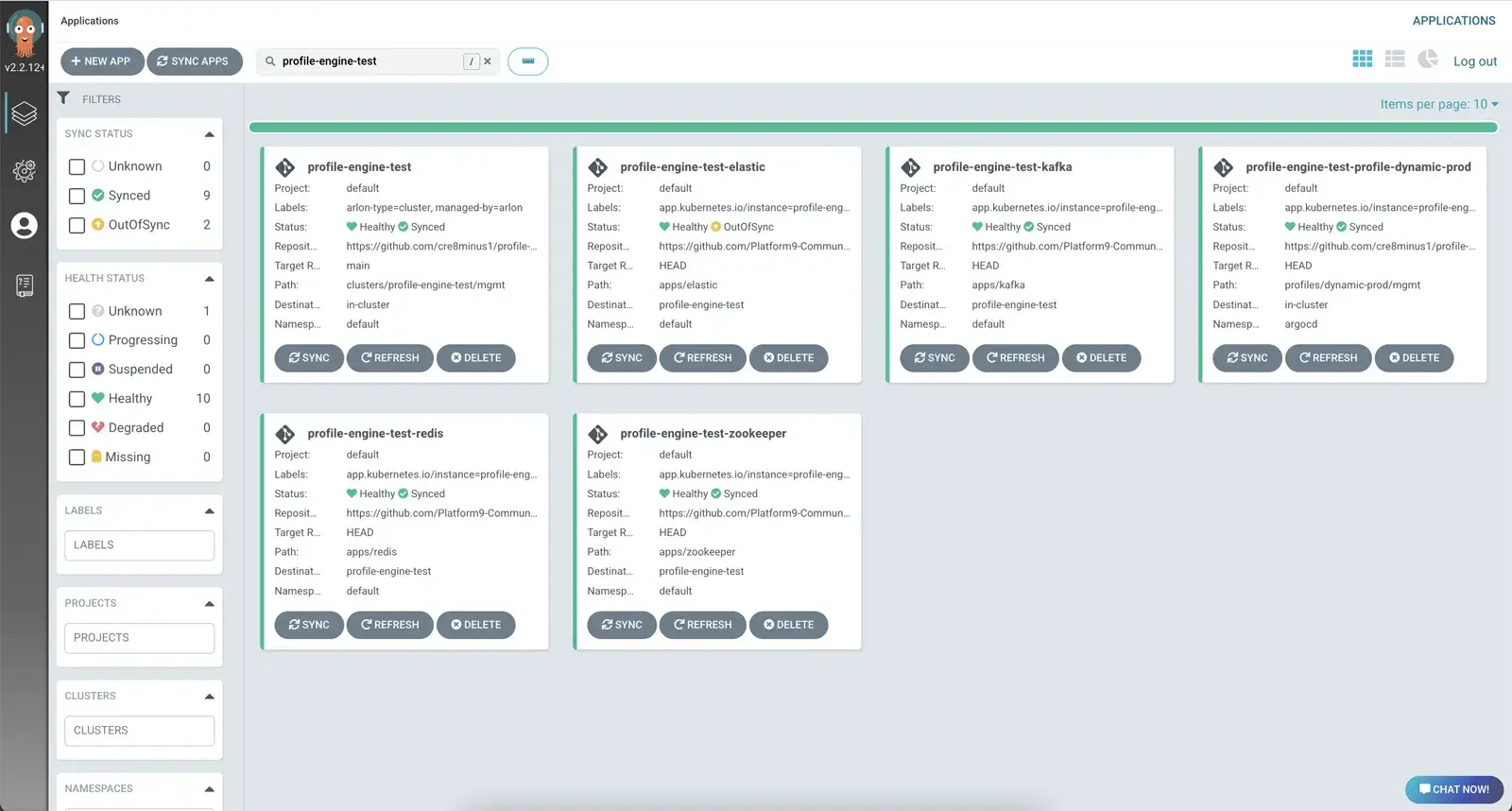

Profile: A profile is made up of any Kubernetes object. If it can be defined in YAML, it can be added to a profile. Profiles capture what needs to be added to a cluster once it’s running – things like RBAC policies and infrastructure applications such as Redis or Kafka are great examples. To capture each distinct object and separate unrelated items we leverage Bundles to ensure nothing overlaps.

Bundles: A bundle can be an application manifest, a Helm chart, or a YAML file containing RBAC. It’s a unit of objects that you want added to a cluster. A Bundle can be shared across profiles.

When combined, a ClusterSpec and Profile are used to create your complete cluster, meaning you can build identical clusters in an instant across clouds, regions, and even data centers.
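The relationship between the three elements can be sketched as plain data types. This is a hypothetical model for illustration only; the field names, the `kind` values, and the example Git URLs are assumptions and do not reflect Profile Engine’s or Arlon’s actual schema. It shows a Bundle shared by two Profiles, and one ClusterSpec reused across regions to produce matching complete clusters.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Bundle:
    """A unit of objects to add to a cluster (hypothetical fields)."""
    name: str
    kind: str    # illustrative: "manifest", "helm", or "rbac"
    source: str  # illustrative Git location of the objects


@dataclass(frozen=True)
class Profile:
    """What to add once the cluster is running."""
    name: str
    bundles: tuple[Bundle, ...]


@dataclass(frozen=True)
class ClusterSpec:
    """How and where the cluster runs."""
    cloud: str
    region: str
    k8s_version: str
    node_pools: tuple[str, ...]
    cni: str


@dataclass(frozen=True)
class CompleteCluster:
    """A ClusterSpec paired with a Profile."""
    name: str
    spec: ClusterSpec
    profile: Profile


rbac = Bundle("team-rbac", "rbac", "https://git.example.com/platform/rbac.git")
kafka = Bundle("kafka", "helm", "https://git.example.com/platform/kafka.git")
redis = Bundle("redis", "helm", "https://git.example.com/platform/redis.git")

# The same RBAC bundle is shared by two different profiles.
streaming = Profile("streaming", (rbac, kafka))
caching = Profile("caching", (rbac, redis))

base_spec = ClusterSpec("aws", "us-east-1", "1.23", ("general", "spot"), "calico")

# Reusing one spec and one profile yields identical clusters that differ only by region.
clusters = [
    CompleteCluster(f"streaming-{region}", replace(base_spec, region=region), streaming)
    for region in ("us-east-1", "us-west-2")
]
```

Making the types immutable (`frozen=True`) mirrors the idea that a template is a fixed, versioned definition: a variant is a new value derived with `replace`, not an in-place edit.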

Where are ClusterSpecs, Profiles, and Bundles stored? Git! Further, a Bundle can reference your own Git repositories, delivering a fully GitOps-compliant cluster without the hassle of manually creating the entire Git structure cluster by cluster.

How did we do this?

Profile Engine Stage 2 runs on Arlon, a new open source project from Platform9. Arlon was built to integrate Cluster API (CAPI) with ArgoCD, automatically registering clusters into ArgoCD and providing a continuous reconciliation service to enforce compliance. You can learn more about Arlon and how to run it yourself at arlon.io.
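For background on what “registering a cluster into ArgoCD” involves: ArgoCD supports declarative cluster registration through a Kubernetes Secret in the ArgoCD namespace carrying the label `argocd.argoproj.io/secret-type: cluster`. The sketch below builds such a Secret as a Python dict. It illustrates the kind of object an automated registration step can produce and is not taken from Arlon’s source; the cluster name, server URL, token, and CA data are placeholders.

```python
import json


def argocd_cluster_secret(name: str, server: str, bearer_token: str, ca_data: str,
                          namespace: str = "argocd") -> dict:
    """Build an ArgoCD declarative cluster Secret (placeholder credentials)."""
    config = {
        "bearerToken": bearer_token,
        "tlsClientConfig": {"insecure": False, "caData": ca_data},
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {
            "name": f"cluster-{name}",
            "namespace": namespace,
            # This label is what tells ArgoCD the Secret describes a cluster.
            "labels": {"argocd.argoproj.io/secret-type": "cluster"},
        },
        "type": "Opaque",
        "stringData": {"name": name, "server": server, "config": json.dumps(config)},
    }


secret = argocd_cluster_secret("prod-us", "https://prod-us.example.com:6443",
                               "<token>", "<base64-ca-bundle>")
```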

How does ArgoCD help?

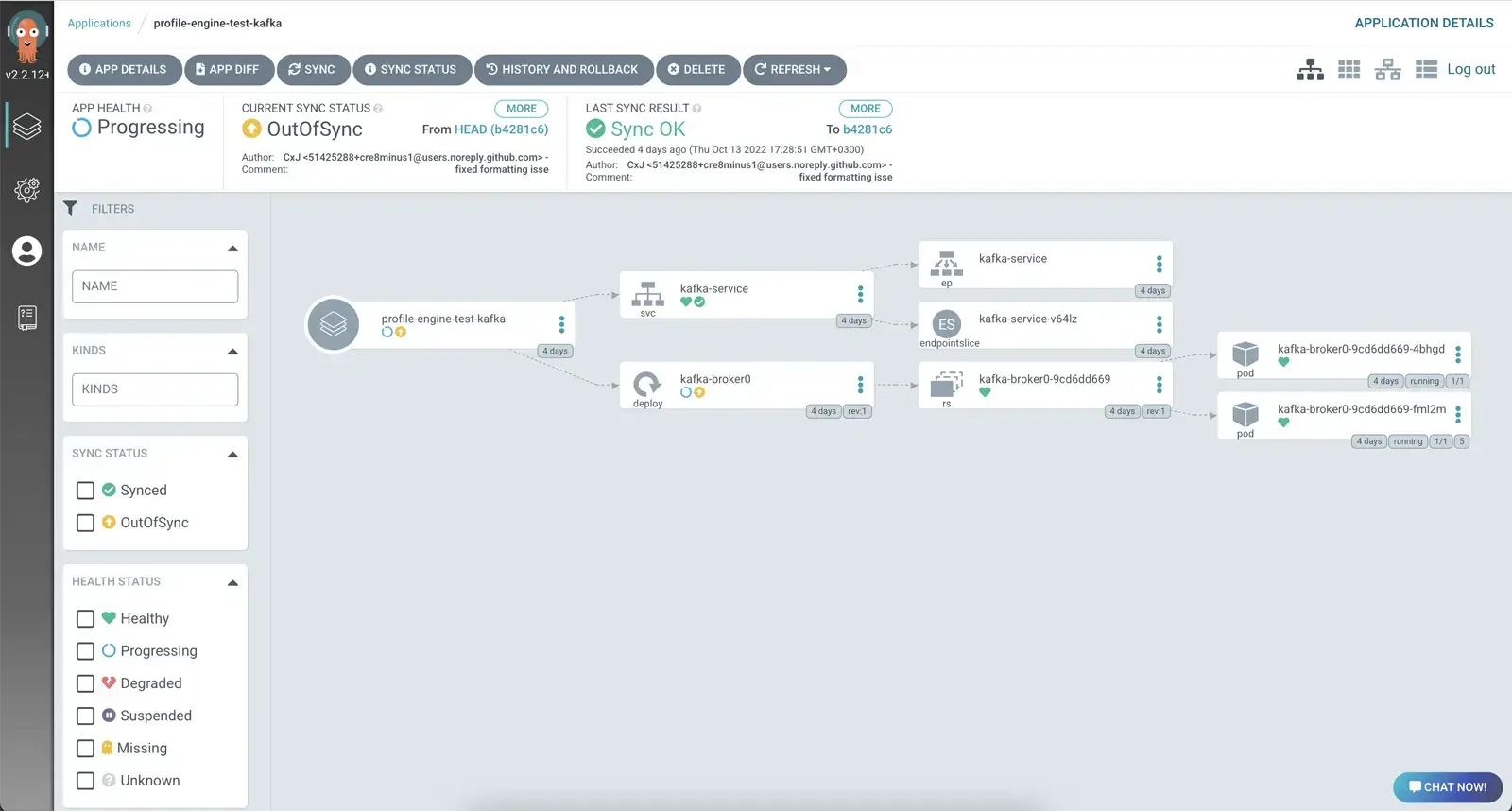

ArgoCD is a critical part of Platform9. It’s available as a standalone service and as part of Profile Engine, providing continuous deployment to any managed cluster.

Integrated into Profile Engine Stage 2, ArgoCD is the central service that creates the cluster and deploys policies and applications. ArgoCD continuously ‘watches’ the deployed resources at two layers: the cluster’s Custom Resources inside the Platform9 SaaS Management Plane, and every deployed resource in each managed cluster. This two-layer approach means that any change to the cluster itself is reverted to the state defined in Git, and so is any cluster-side change to a policy (a user changes their access to include ‘delete’) or to an application’s manifest (a resource limit is increased to 30G).
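The two-layer revert behaviour can be illustrated with a simplified self-healing loop. This is a conceptual sketch, not ArgoCD’s sync engine: resources are keyed by a hypothetical "Kind/name" string and compared whole, and live resources that don’t appear in Git are simply left alone here. The same `reconcile` function is applied once to the management-plane layer and once to the managed-cluster layer, using the examples from the paragraph above.

```python
from copy import deepcopy


def reconcile(desired: dict[str, dict], live: dict[str, dict]) -> tuple[dict[str, dict], list[str]]:
    """Reset drifted or missing resources to the Git-defined state.

    Returns the healed live state and the names of the resources that were reverted.
    Live resources absent from `desired` are ignored in this sketch.
    """
    healed = deepcopy(live)
    reverted = []
    for name, spec in desired.items():
        if live.get(name) != spec:
            healed[name] = deepcopy(spec)
            reverted.append(name)
    return healed, sorted(reverted)


# Layer 1: the cluster's Custom Resources in the management plane (hypothetical fields).
mgmt_desired = {"Cluster/prod-us": {"k8sVersion": "1.23", "workers": 3}}
mgmt_live = {"Cluster/prod-us": {"k8sVersion": "1.23", "workers": 5}}

# Layer 2: resources deployed inside the managed cluster.
cluster_desired = {
    "Role/dev": {"verbs": ["get", "list"]},
    "Deployment/api": {"memoryLimit": "4G"},
}
cluster_live = {
    "Role/dev": {"verbs": ["get", "list", "delete"]},  # user added 'delete'
    "Deployment/api": {"memoryLimit": "30G"},          # limit raised to 30G
}

mgmt_healed, mgmt_reverted = reconcile(mgmt_desired, mgmt_live)
cluster_healed, cluster_reverted = reconcile(cluster_desired, cluster_live)
```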

ArgoCD is the watchdog, and Arlon is what keeps ArgoCD on task ensuring that it knows what to manage and what should be running.

EKS Support and AWS Native Clusters

5.6 adds support for EKS and expands the capabilities of our native clusters in AWS, providing two options for building and operating clusters.

Why two? We believe it’s important to provide choice and flexibility across environments, including the ability to configure the Kubernetes API server. EKS doesn’t provide this, whereas native clusters do.

Native Clusters in AWS: Native clusters run upstream Kubernetes, expose the control plane nodes and etcd, and provide the most flexible experience possible no matter where you choose to deploy. If you want to customize your cluster and ensure your multi-cloud or hybrid deployments are identical, then native clusters are the right choice.

EKS Clusters: EKS clusters offer a more curated experience. AWS builds, defines, and provides their Kubernetes distribution as a service. If you’re only running in AWS this may be the right choice. On top of that, Platform9 provides Always-on Assurance(tm), greatly simplifies IAM, streamlines upgrades, offers a single entry point for clusters across regions, and delivers an integrated and simple way for your developers to work with their containerized applications as they run in real-time.

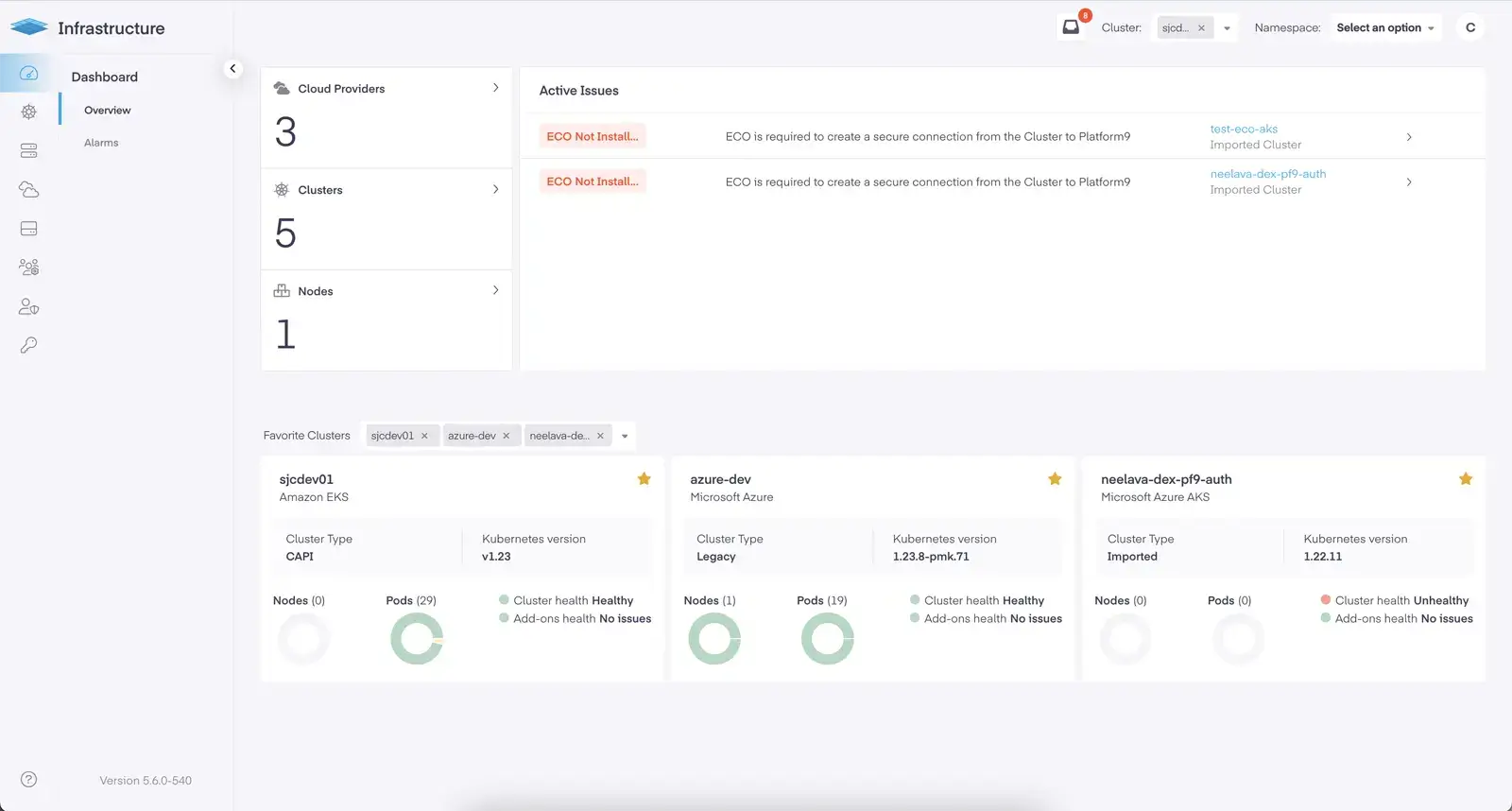

Enhancing the Developer Experience

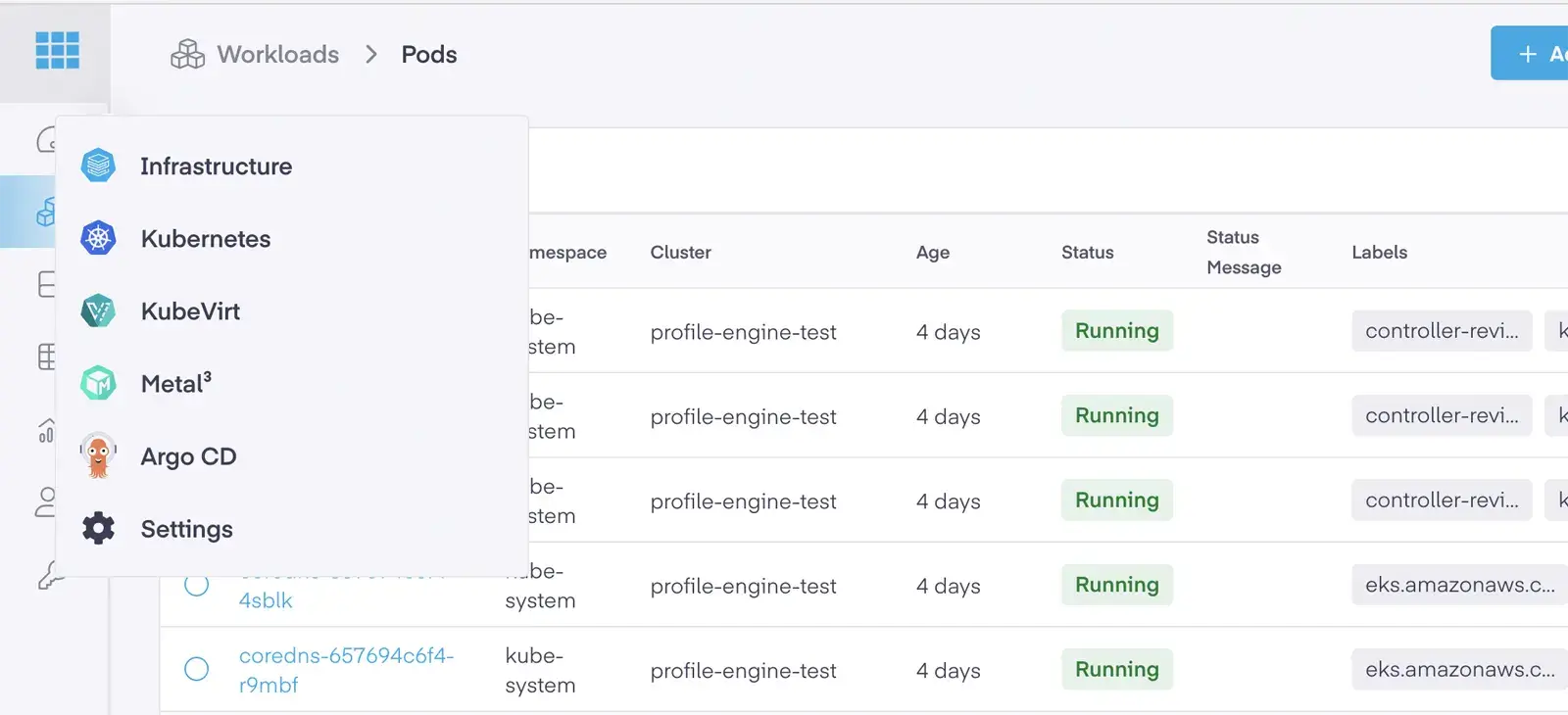

For the last three releases we have focused on making it easier to work with containerized applications on Kubernetes, and 5.6 continues this. The significant change we have introduced is to separate out all cluster management features into their own space – the “Infrastructure” App. By separating cluster management from application operations, we allow developers to work within a dedicated experience where they’re not hindered by features that are unrelated. The new Infrastructure application can only be accessed by platform administrators, further separating duties.

Within the Platform9 Web App users will now see the following applications:

- Infrastructure: For cluster administration, storage management, RBAC management, Profile Engine, & Monitoring.

- Kubernetes: Used to interact with one or more clusters simultaneously.

- KubeVirt: A dedicated app for working with virtual machines in clusters that have KubeVirt deployed.

- Metal3: A dedicated app for working with bare metal machines in clusters that have Metal3 deployed.

- ArgoCD: The launch space to access ArgoCD.

Kubernetes 1.22 and 1.23

As the community pushes forward, we work to test, adapt, and integrate each new Kubernetes release with Platform9, and importantly, this includes our managed add-ons. Within the 5.6 release we have included support for K8s 1.22 and 1.23, along with upgrades to Grafana, Prometheus, CoreDNS, MetalLB, Calico, Kubernetes Dashboard, and Metrics Server. When upgrading, Platform9 will seamlessly upgrade each of these managed add-ons along with the cluster’s Kubernetes version without losing customizations.
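One common way to upgrade an add-on without losing customizations is to keep user overrides separate from the add-on’s defaults and re-apply them on top of the new version’s defaults. The sketch below shows that layering with a recursive merge; it is an assumption about the general technique, not a description of Platform9’s upgrade mechanism, and the Prometheus values and image tag are hypothetical.

```python
def deep_merge(defaults: dict, overrides: dict) -> dict:
    """Layer user overrides on top of defaults; nested dicts merge recursively."""
    merged = dict(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical default values shipped with the new add-on version.
new_defaults = {
    "image": "prometheus:v2",
    "retention": "7d",
    "resources": {"cpu": "500m", "memory": "2Gi"},
}
# Customizations the user made on the previous version.
user_overrides = {"retention": "30d", "resources": {"cpu": "1"}}

upgraded = deep_merge(new_defaults, user_overrides)
```

The upgraded values pick up the new image and the new default memory, while the user’s retention and CPU settings survive the upgrade.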

KubeVirt

KubeVirt continues to prove itself as one of Kubernetes’ greatest strengths, allowing users who are transforming their applications to quickly consolidate onto a single infrastructure, reduce their hypervisor footprint, and leverage the benefits of cloud native.

In version 5.6, KubeVirt has been upgraded to the 0.55.0 release, and we have expanded our supported feature set to include Live Migration, along with new features in the Platform9 web app. KubeVirt users can now invoke a live migration via the UI, access each VM’s console, and use a new workflow for creating VMs.
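Outside the UI, KubeVirt exposes live migration through a `VirtualMachineInstanceMigration` resource whose `spec.vmiName` names the running VirtualMachineInstance to move. The sketch below builds that manifest as a Python dict; the VM and namespace names are placeholders, and we don’t claim this is the exact request the Platform9 UI sends.

```python
def migration_manifest(vmi_name: str, namespace: str = "default") -> dict:
    """Build a KubeVirt live-migration request for a running VMI."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachineInstanceMigration",
        "metadata": {"name": f"migrate-{vmi_name}", "namespace": namespace},
        "spec": {"vmiName": vmi_name},
    }


manifest = migration_manifest("fedora-vm", namespace="vms")
```

Serialized to YAML or JSON, the object can be applied with `kubectl apply -f`; `virtctl migrate <vm-name>` requests the same migration from the command line.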

Our Future is Open Source

To deliver 5.6, we made significant contributions to the open source community and continued our progress toward completely open sourcing Platform9. The most significant piece of our open source efforts is Arlon, which can be used by anyone and is in no way reliant on Platform9. Adjacent to Arlon is Nodelet, the Platform9 cluster bootstrap provider, which is working its way toward becoming an alternative bootstrap provider for users of Cluster API. Alongside these efforts is our continued work on releasing our web apps as open source desktop applications that will work with upstream Cluster API, KubeVirt, and Kubernetes clusters!

In addition to releasing new open source projects, we are excited to announce that we are adopting Cluster API as our core cluster management interface, bringing all of the power of Cluster API to our SaaS platform. Cluster API brings real and impactful change that allows for declarative, Git-based management of clusters. Furthermore, Cluster API is fast becoming the standard for multi-cloud management of Kubernetes, and adopting it allows all Platform9 users to benefit from each Cluster API release.

The current list of supported clouds can be found here: https://cluster-api.sigs.k8s.io/reference/providers.html. It includes over 20 unique providers, all built by the community and by public cloud vendors.

100% of the Platform9 cloud native platform can be installed and operated in any environment. This is how we deliver on our commitment to open source.

To learn more about Platform9 5.6 head over to our release notes.