The Platform9 Managed Kubernetes (PMK) version 5.7 release is now available with support for Kubernetes 1.24. The 5.7 release promotes the new Cluster API (CAPI) based AWS and EKS cluster lifecycle management features to Limited Availability. It also brings new functionality such as UI-driven upgrades for Cluster API based clusters, Assume Role support for EKS clusters, EKS add-on upgrades, and support for static pods.

All clusters must be upgraded to at least Kubernetes 1.22 prior to upgrading from PMK 5.6 to PMK 5.7.
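This prerequisite can be checked before starting the upgrade. The following is a minimal sketch, assuming you have already collected each cluster's Kubernetes version as a string. The function names and the sample inventory are hypothetical and are not part of any PMK API.

```python
MIN_VERSION = (1, 22)  # minimum Kubernetes version stated in these release notes


def parse_version(version: str) -> tuple:
    """Return (major, minor) from strings such as 'v1.22.9' or '1.23'."""
    major, minor = version.lstrip("v").split(".")[:2]
    return int(major), int(minor)


def clusters_blocking_upgrade(versions: dict) -> list:
    """Return the sorted names of clusters that are below the minimum version."""
    return sorted(
        name for name, version in versions.items()
        if parse_version(version) < MIN_VERSION
    )


if __name__ == "__main__":
    # Hypothetical inventory; replace with your own cluster names and versions.
    inventory = {"prod": "1.22.9", "staging": "v1.21.3", "dev": "1.23.8"}
    print(clusters_blocking_upgrade(inventory))
```

Any cluster name returned by `clusters_blocking_upgrade` would need a Kubernetes upgrade before the move from PMK 5.6 to PMK 5.7.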

Kubernetes 1.22 reached End of Life on 2022-10-28. New clusters should be built on 1.24.

December 31, 2022 - No new EC2 features or new EC2 instance types will be added to launch configurations after this date. AWS Launch Templates are the recommended alternative.

Existing PMK clusters and AWS accounts will not be impacted by this change.

March 31, 2023 - New accounts created after this date will not be able to create new launch configurations via the console. API and CLI access will remain available to support customers with automation use cases.

Existing PMK Qbert based clusters should not be impacted and will continue to be supported. New cluster creation will continue to be supported.

December 31, 2023 - New AWS accounts created after this date will not be able to create new launch configurations.

PMK Cluster API based AWS clusters support AWS Launch Templates by default and are the recommended option.

The Platform9 5.7 release promotes AWS and EKS cluster lifecycle management using Cluster API to Limited Availability. With this release, you can create, manage, update, and upgrade Kubernetes clusters, either using PMK's Kubernetes distribution on top of Amazon EC2 or by consuming AWS-native Elastic Kubernetes Service (EKS) clusters.

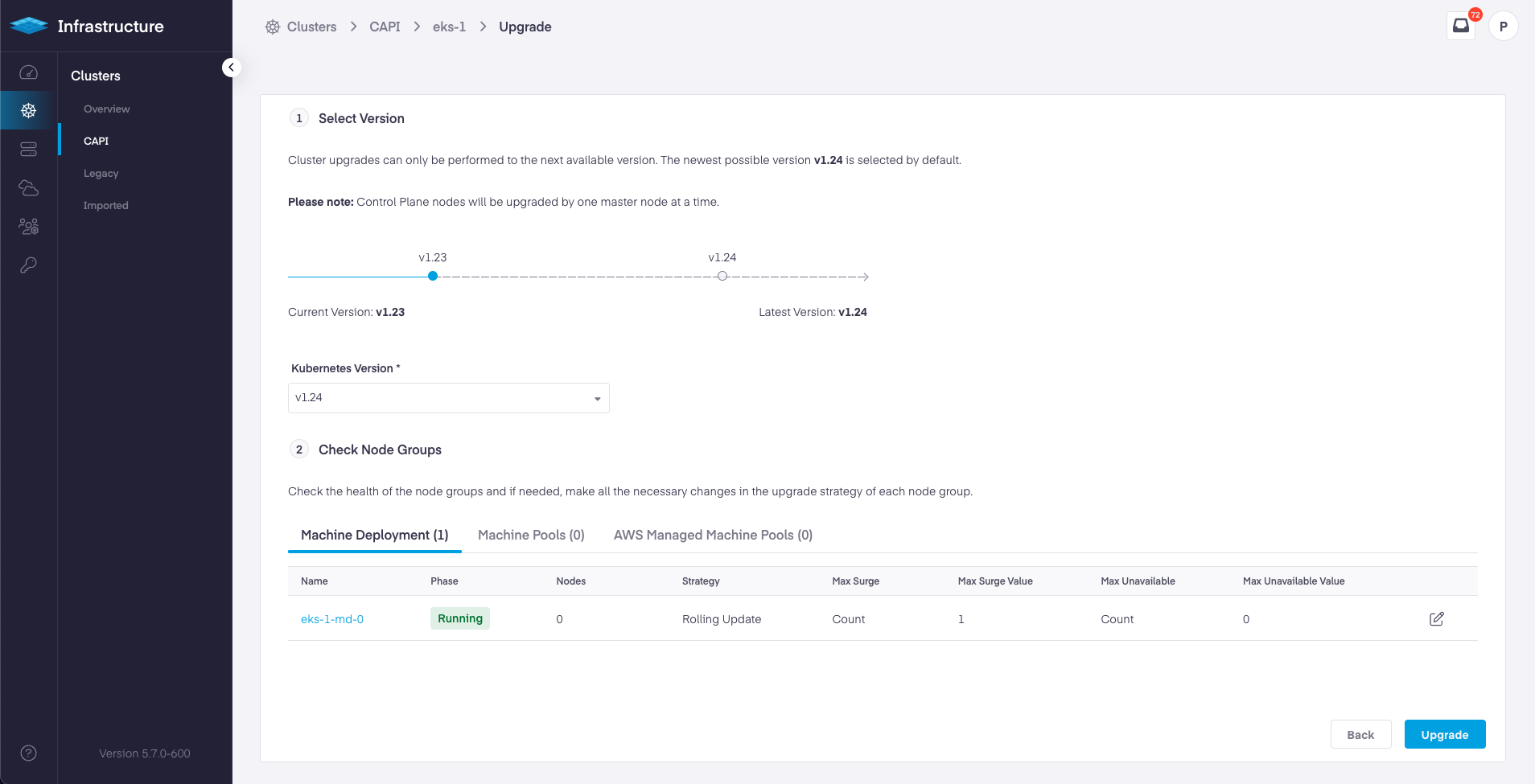

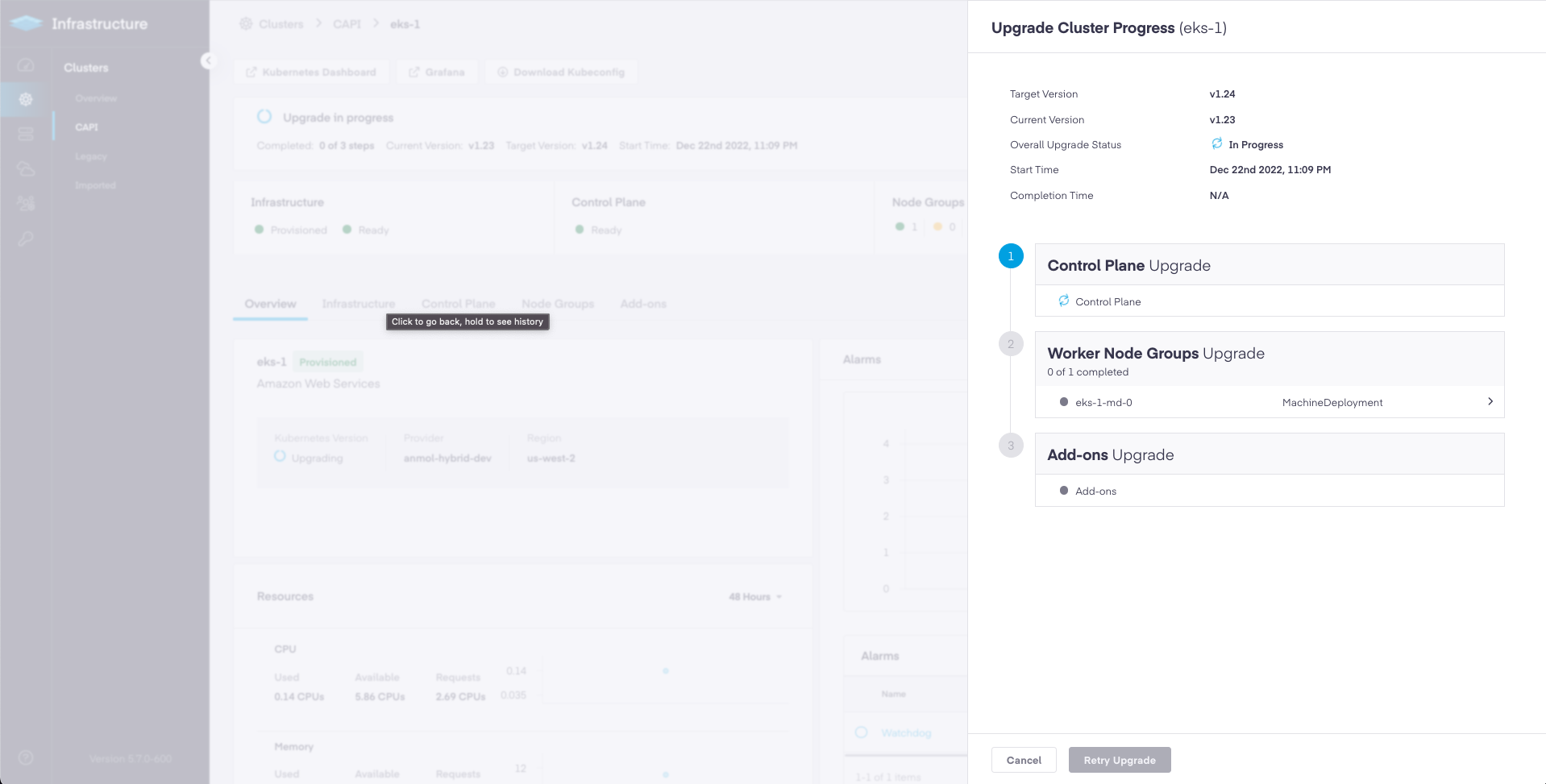

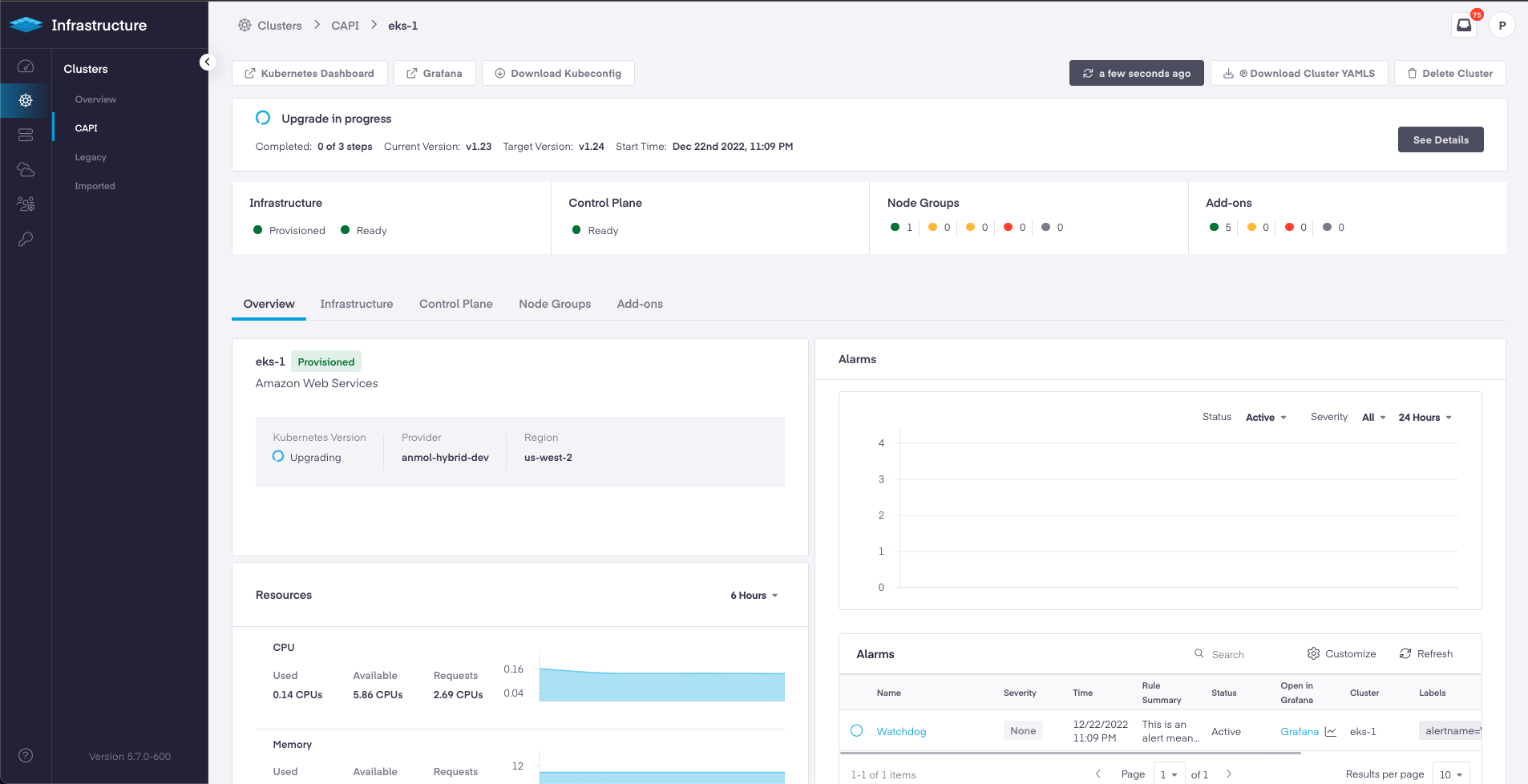

With the 5.7 release, PMK adds support for upgrading AWS clusters and EKS clusters from the PMK UI.

The following are some important changes introduced with Kubernetes 1.24 support.

Dockershim Removed from Kubelet

After its deprecation in v1.20, the dockershim component has been removed from the kubelet in Kubernetes v1.24. From v1.24 onwards, PMK will support containerd as the default container runtime.
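Before moving a cluster to v1.24, it can help to confirm which runtime each node reports. The following is a minimal sketch, assuming the input is the parsed output of `kubectl get nodes -o json`. The helper name and sample data are illustrative. The runtime is read from the standard `status.nodeInfo.containerRuntimeVersion` field of the Node object.

```python
import json


def nodes_not_on_containerd(node_list: dict) -> dict:
    """Map node name -> reported runtime for nodes not running containerd."""
    result = {}
    for node in node_list.get("items", []):
        name = node["metadata"]["name"]
        runtime = node["status"]["nodeInfo"]["containerRuntimeVersion"]
        if not runtime.startswith("containerd://"):
            result[name] = runtime
    return result


if __name__ == "__main__":
    # Illustrative sample in the shape returned by `kubectl get nodes -o json`.
    sample = json.loads("""
    {"items": [
      {"metadata": {"name": "node-a"},
       "status": {"nodeInfo": {"containerRuntimeVersion": "containerd://1.6.8"}}},
      {"metadata": {"name": "node-b"},
       "status": {"nodeInfo": {"containerRuntimeVersion": "docker://20.10.7"}}}
    ]}
    """)
    print(nodes_not_on_containerd(sample))
```

An empty result suggests every node already reports containerd.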

Dynamic Kubelet Configuration Removed from the Kubelet

With the Kubernetes 1.24 release, support for Dynamic Kubelet Configuration has been dropped. To address the need for custom kubelet configuration, PMK provides alternative functionality using the Sunpike host. Please reach out to the Platform9 support team for the cluster configuration.

Static Pod support for Master and Worker nodes

Static pods are pods that are observed directly by the kubelet and not by the API server. Until PMK 5.6, this functionality was only available on master nodes, because only the kubelet on master nodes could be configured with the “staticPodPath” option. With PMK 5.7, the feature is available on both master and worker nodes. This functionality has been back-ported to Kubernetes 1.23 as well. Please reach out to the Platform9 support team for the cluster configuration.

The 1.19 pf9ctl release is now available and can be installed by running the following command.

Added

Added Static Pod support for Master and Worker nodes.

Added

Added support for Rolling Update for master nodes in CAPI multi-master clusters.

Added

Added Catapult Alerts for CAPI clusters.

Added

Added Profile Engine support for CAPI based AWS clusters.

Added

Added Assume Role support for EKS clusters.

Added

Added support for EKS add-on upgrades with Cluster upgrades.

Enhanced

Added Advanced Remote Support for CAPI cluster nodes.

Enhanced

Added time series metrics on Cluster Dashboards for CAPI clusters.

Fixed

Fixed the issue of allowing hosts to be downgraded, which is not a supported action.

Fixed

Fixed the issue of CAPI Cluster status not reflecting properly in the UI.

Fixed

Fixed an issue with Calico pod restarts on clusters with the containerd runtime. The issue caused problems with scaling up in a few multi-master environments.

Fixed

Fixed an issue with certificate generation from a Sunpike CA that is close to expiry, which impacted ArgoCD and Arlon.

Fixed

Fixed an issue with CAPI based AWS clusters failing to be provisioned (control plane not ready after 2 hours of creation) due to AWS Cloud-init issues.

Fixed

Fixed issue with add-on operator failing when proxy certs were in use.

Fixed

Fixed the issue of having orphaned nodes even after deleting the machine pool in CAPI based clusters.

Fixed

Fixed the issue of nodes getting disconnected during batch API based cluster upgrades.

Fixed

Fixed the issue where etcd backup count value was not honoured and more backups were created on the masters.

Fixed

Fixed the issue with etcd backups when both interval and snapshot based backups are enabled.

Fixed

Fixed a bug which allowed minor upgrades when a patch upgrade is already in progress, for Qbert based clusters.

Fixed

Fixed a UI bug which allowed password based kubeconfig download when MFA is enabled.

Fixed

Fixed a bug in the UI which led to SSO XAML Metadata contents having '</' and '/>' replaced with up and down arrows.

The following packaged components have been upgraded in the latest v1.24 Kubernetes version:

| Component | Version |
|---|---|
| CALICO | 3.24.2 |
| CORE-DNS | 1.8.6 |
| METRICS SERVER | 0.5.2 |
| METAL LB | 0.12.1 |
| KUBERNETES DASHBOARD | 2.4.0 |
| CLUSTER AUTO-SCALER AWS | 1.24.0 |
| CLUSTER AUTO-SCALER AZURE | 1.13.8 |
| CLUSTER AUTO-SCALER CAPI | 1.24.0 |
| FLANNEL | 0.14.0 |
| ETCD | 3.5.5 |
| CNI PLUGINS | 0.9.0 |
| KUBEVIRT | 0.58.0 |
| KUBEVIRT CDI | 1.54.0 |
| KUBEVIRT ADDON | 0.58.0 |
| LUIGI | 0.4.1 |
| MONITORING | 0.57.1 |
| PROFILE AGENT | 2.0.1 |
| METAL3 | 1.1.1 |

Please refer to the Managed Kubernetes Support Matrix for v5.7 to view all currently deployed or supported upstream component versions.

Known Issue

For CAPI based clusters, labels containing special characters such as - cannot be associated with the cluster. A known bug leads to only one part of the label being used when applying it to the cluster.

Known Issue

For CAPI based clusters, downloading multiple kubeconfigs for a given user will invalidate the previous kubeconfigs of that user. Only the most recently downloaded kubeconfig will be valid for that user account. Users are advised to use caution when sharing user accounts for such clusters.

Known Issue

Users should not make changes from the AWS Console to EKS clusters created using PMK. Platform9 manages the lifecycle of the EKS control plane and EKS nodes. Making any changes from the AWS Console might result in undesired effects or render the cluster non-functional.

Known Issue

The Kubernetes dashboard is not accessible for CAPI based clusters by uploading the kubeconfig, because of an upstream issue where the dashboard does not support OIDC-based kubeconfigs.

As a workaround, authenticate with the ID Token.

- Download and open the kubeconfig of a cluster.

- Copy the value of the id_token field.

- In the dashboard, select "token" authentication and paste the value in the form.

Note: the dashboard does not support token refresh, so you lose access after the token expires (10-20 min).

To refresh the ID token, run any kubectl command with the kubeconfig. kubectl replaces the ID token in the kubeconfig with a valid one if it has expired. Then follow the steps above again.
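Copying the token by hand can be error-prone, so the lookup step can be scripted. The following is a minimal sketch, assuming the kubeconfig stores the token on a single line keyed as `id_token` or `id-token`. The exact key name and its position in the file are assumptions, so both spellings are accepted, and the helper name is hypothetical.

```python
import re
from typing import Optional

# Matches lines such as "id_token: eyJ..." or "id-token: eyJ...".
# Accepting both spellings is an assumption about the kubeconfig layout.
_TOKEN_LINE = re.compile(r"^\s*id[_-]token:\s*(\S+)\s*$")


def extract_id_token(kubeconfig_text: str) -> Optional[str]:
    """Return the first ID token found in the kubeconfig text, or None."""
    for line in kubeconfig_text.splitlines():
        match = _TOKEN_LINE.match(line)
        if match:
            # Drop surrounding quotes if the value was quoted in the file.
            return match.group(1).strip("\"'")
    return None


if __name__ == "__main__":
    # Illustrative kubeconfig fragment with a placeholder token value.
    sample = (
        "users:\n"
        "- name: demo-user\n"
        "  user:\n"
        "    auth-provider:\n"
        "      config:\n"
        "        id-token: placeholder.token.value\n"
    )
    print(extract_id_token(sample))
```

The returned value is what would be pasted into the dashboard's token form. Treat it as a credential and avoid writing it to logs.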

Known Issue

EKS clusters created with MachinePool / MachineDeployment type worker node groups with a desired node count can sometimes get stuck in the “provisioned” state for more than an hour after the control plane is ready.

Current workarounds:

- Machine Deployment type Node Group: Scale down the affected node group to 0 and then scale it back up to the desired count.

- Machine Pool type Node Group: Delete the affected node group and add a new node group to the cluster.

- Create an EKS cluster with MachinePool / MachineDeployment with a replica count of 0. Once the cluster is healthy, change the replica count to the desired value.

Known Issue

In some instances, a CAPI cluster deleted during the provisioning phase can get stuck in the deleting phase. Please contact Platform9 support for resolution options.

Known Issue

Creating CAPI clusters in EKS with Private API Server Endpoint access is not supported. Support will be added in the next release.

Known Issue

External clusters with names longer than 63 characters will not be discoverable for the Import operation. This is due to a limitation in the Kubernetes specification.

Known Issue

A cluster that is renamed in PMK will not reflect the new name in ArgoCD. However, the cluster is still available under the older name in ArgoCD.

Known Issue

Calico IPAM is only supported when using Calico CNI.

Known Issue

EKS, AKS, or GKE Cluster Import “401 Unauthorized” Notification and Empty Dashboards.

If an AWS Cloud Provider is configured to import clusters without the correct identity being added to the target cluster, Platform9 will be unable to access the cluster.

Note that if you used a Cloud Provider to register an EKS, AKS, or GKE cluster that was created with IAM user credentials that no longer have access to the cluster, Platform9 will fail with a 401 unauthorized error until that IAM user is given access to the cluster.

View the EKS documentation here to ensure the correct access has been provisioned for each imported cluster. https://aws.amazon.com/premiumsupport/knowledge-center/amazon-eks-cluster-access/

Known Issue

Platform9 monitoring won't work on ARM-based nodes on EKS, AKS, or GKE.

Known Issue

After a cluster upgrade failure, further upgrade attempts are blocked in the UI because nodes are in a converging/not converged state.

Known Issue

Hostpath-csi-driver installs to the default namespace only.

Known Issue

Kubelet authorization mode is set to AlwaysAllow instead of Webhook.

Known Issue

The UI throws an error when using SSO with Azure AD and passwordless logins.

Known Issue

A PMK cloud provider created directly in Sunpike cannot be used to create Qbert clusters. Qbert cloud providers can be used to create both Qbert and Sunpike clusters, but cloud providers created directly in Sunpike cannot be used to create Qbert clusters. Please use the appropriate one based on your needs.

Known Issue

The Arlon command 'arlonctl clusterspec update' supports updating the 'node count' or 'kube version' for a clusterspec. However, updating the kube version will not trigger an application sync in ArgoCD to update the clusters, because ArgoCD is configured to ignore differences in the 'kube version' field between the desired manifest and the live manifest of an app. The workaround is to manually sync the app from the ArgoCD UI.

ArgoCD 5.7 Release Notes

Platform9 Managed KubeVirt 5.7 Release Notes

Platform9 Edge Cloud LTS2 Patch#1 Release Notes

Added

Added support for gp3 type EBS volumes with IOPS and throughput configuration on AWS Qbert clusters. With this change, all new AWS clusters use gp3 as the default volume type, with default Throughput: 125 and Iops: 3000. For custom throughput/IOPS, users can use the API with the payload options ebsVolumeThroughput/ebsVolumeIops. Follow the documentation here: https://platform9.com/docs/kubernetes/migrate-aws-qbert-gp2-volumes-to-gp3
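As an illustration of the two payload options, the sketch below builds only the volume-related fragment of a request body. The field names `ebsVolumeThroughput` and `ebsVolumeIops` and the defaults come from the note above. The helper name and the custom values are hypothetical, and the endpoint and the remaining request fields are not shown here and should be taken from the linked documentation.

```python
import json


def gp3_volume_options(throughput: int = 125, iops: int = 3000) -> dict:
    """Build the EBS volume fields named in the release notes.

    Defaults mirror the stated gp3 defaults (Throughput 125, IOPS 3000).
    """
    if throughput <= 0 or iops <= 0:
        raise ValueError("throughput and iops must be positive")
    return {"ebsVolumeThroughput": throughput, "ebsVolumeIops": iops}


if __name__ == "__main__":
    # Hypothetical custom values; merge the result into the cluster request body.
    print(json.dumps(gp3_volume_options(throughput=250, iops=6000), indent=2))
```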

Added

Added changes in response to traffic redirection from the older k8s.gcr.io to registry.k8s.io. https://kubernetes.io/blog/2023/03/10/image-registry-redirect/
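If your own manifests still reference the old registry, the image names can be rewritten in the same spirit. This is a minimal sketch of a prefix rewrite, not the change Platform9 made internally; the helper name and sample images are illustrative.

```python
OLD_REGISTRY = "k8s.gcr.io/"
NEW_REGISTRY = "registry.k8s.io/"


def rewrite_image(image: str) -> str:
    """Point an image reference at registry.k8s.io if it uses k8s.gcr.io."""
    if image.startswith(OLD_REGISTRY):
        return NEW_REGISTRY + image[len(OLD_REGISTRY):]
    # Images hosted elsewhere are returned unchanged.
    return image


if __name__ == "__main__":
    for ref in ("k8s.gcr.io/pause:3.6", "docker.io/library/nginx:1.25"):
        print(ref, "->", rewrite_image(ref))
```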

Upgraded

Calico version to 3.23.5 for k8s v1.23

Upgraded

The Kubernetes Dashboard to version 2.4 for v1.23 and v1.24 k8s clusters.

Fixed

The regression of node decommission operation not initiating node drain for non-kubevirt workloads.

Fixed

A bug in the UI which prevented OVS interface IP addresses from being shown in the Networks lists in the VM Instance view.

Fixed

An issue preventing the reuse of the PVC attached to Prometheus after an add-on upgrade.

Fixed

A bug in the UI which prevented Self-service users with access to a particular namespace from viewing resources in that namespace.

Fixed

A bug in pf9ctl which caused the check to fail when the package python3-policycoreutils was already installed on the nodes.

Fixed

An issue due to which certificate generation fails if the CA's remaining validity is shorter than the TTL requested for the certificate in Vault. A DU upgrade to PMK v5.7.2 and a cluster upgrade to the latest PMK build version are required for this to take effect.
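The failure condition described here can be expressed as a simple date comparison. The sketch below only illustrates that condition and is not how Vault or PMK implements the check; the function name and the dates are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def ttl_exceeds_ca_validity(
    ca_not_after: datetime, ttl: timedelta, now: Optional[datetime] = None
) -> bool:
    """Return True if a certificate issued now with `ttl` would outlive the CA."""
    now = now or datetime.now(timezone.utc)
    return now + ttl > ca_not_after


if __name__ == "__main__":
    # Hypothetical dates: the CA expires in about seven months.
    issue_time = datetime(2023, 6, 1, tzinfo=timezone.utc)
    ca_expiry = datetime(2024, 1, 1, tzinfo=timezone.utc)
    print(ttl_exceeds_ca_validity(ca_expiry, timedelta(days=365), issue_time))
    print(ttl_exceeds_ca_validity(ca_expiry, timedelta(days=30), issue_time))
```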

Fixed

A bug which caused the older static pod path to be used after upgrading the cluster from 1.22.9 to 1.23.8, leaving the kubelet restarting continuously.

Fixed

A bug which prevented Sunpike CA rotation if the CA had an expiry of more than a year.

Fixed

A bug in pf9ctl which caused an unintended node to be decommissioned because the wrong node UUID was chosen based on the IP obtained from hostname -I.

Fixed

An issue that caused some pods to be stuck in a Terminating state on RHEL nodes, due to the missing sysctl parameter /proc/sys/fs/may_detach_mounts set to 1.

Fixed

An issue that caused ETCD backup failure.

Fixed

An issue which caused the Calico Typha pods on clusters to be OOM killed due to resource constraints. The current limits and the Typha autoscaler ladder have been updated.

Fixed

A bug which prevented login to qbert/resmgr database via mysqld-exporter pod.

Fixed

A bug in the UI which prevented kube-apiserver flags from being added to the apiserver configuration during cluster creation from the UI.

Fixed

An issue that caused the calicoctl binary to be non-executable.