Detach Node From Cluster

Nodes that are attached to an on-premises (BareOS) Kubernetes cluster created using PMK can be detached when they are no longer required to be part of the cluster.

When you detach a node, the containers running on the node are destroyed. Pods managed by a controller, such as a Replication Controller or Deployment, are automatically recreated by Kubernetes on another node in the cluster if sufficient resources are available.
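Before detaching a node, it can help to list the pods currently scheduled on it and see which of them have an owning controller. The sketch below assumes kubectl is installed and configured with the cluster's kubeconfig; the function name pods_on_node is hypothetical. It uses kubectl's spec.nodeName field selector for pods and a custom-columns output that shows the kind of each pod's first owner reference.

```shell
# List pods in all namespaces that are scheduled on NODE, with the kind of their owner.
# Assumes kubectl is configured for the cluster; pods_on_node is a hypothetical helper.
pods_on_node() {
    if [ -z "$1" ]; then
        echo "usage: pods_on_node NODE_NAME" >&2
        return 2
    fi
    # spec.nodeName is a supported field selector for pods.
    kubectl get pods --all-namespaces \
        --field-selector "spec.nodeName=$1" \
        -o "custom-columns=NAMESPACE:.metadata.namespace,NAME:.metadata.name,OWNER:.metadata.ownerReferences[0].kind"
}
```

Usage: $ pods_on_node <node-name>

Pods whose OWNER column shows <none> are standalone pods; Kubernetes does not recreate them on another node when this node is detached.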

You can detach nodes from the Infrastructure > Clusters tab.

Detach Nodes from Clusters Tab

Follow the steps below to detach a node from a cluster.

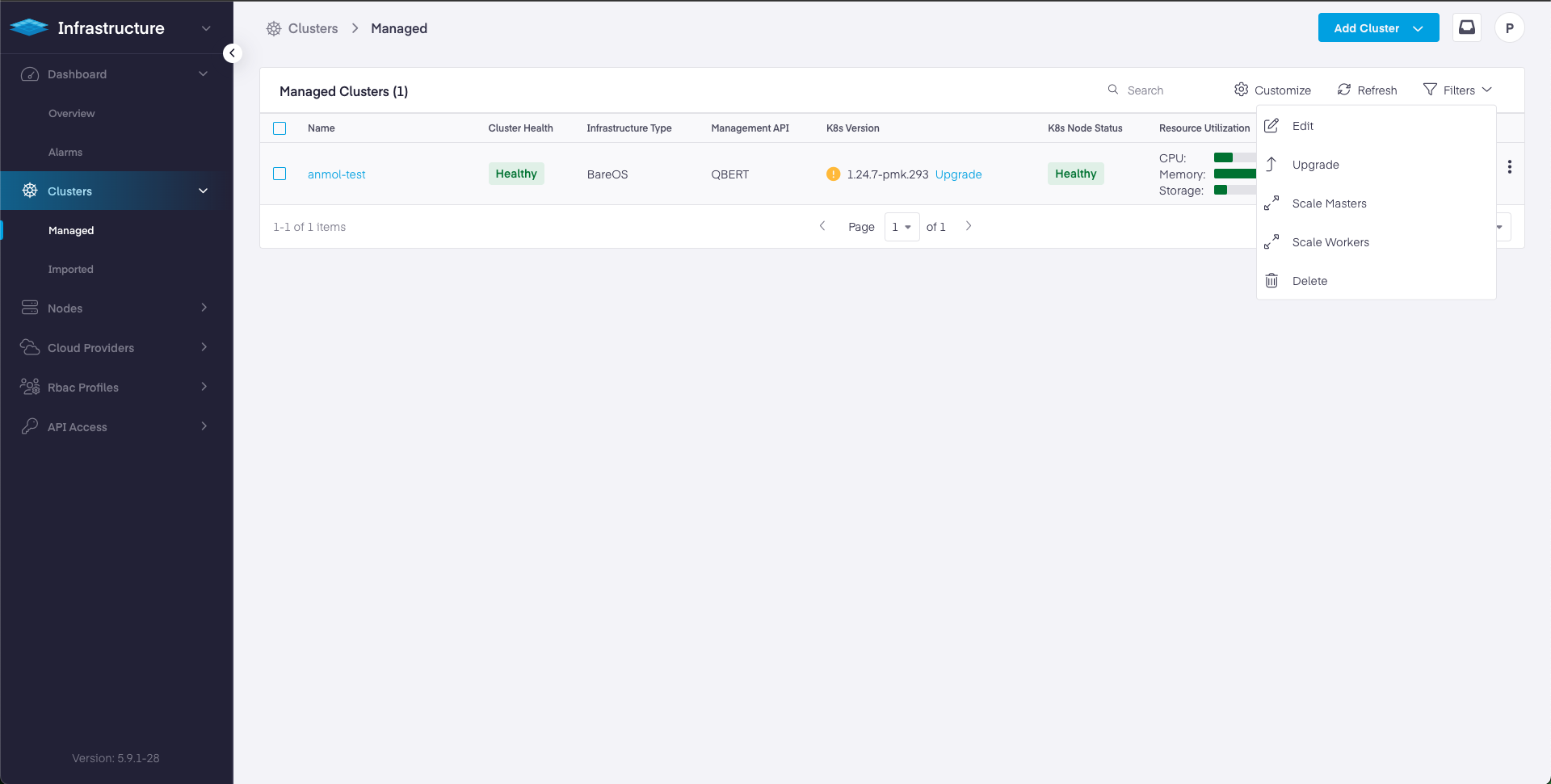

- Navigate to Infrastructure > Clusters > Managed.

- Select the cluster from which you wish to detach a node.

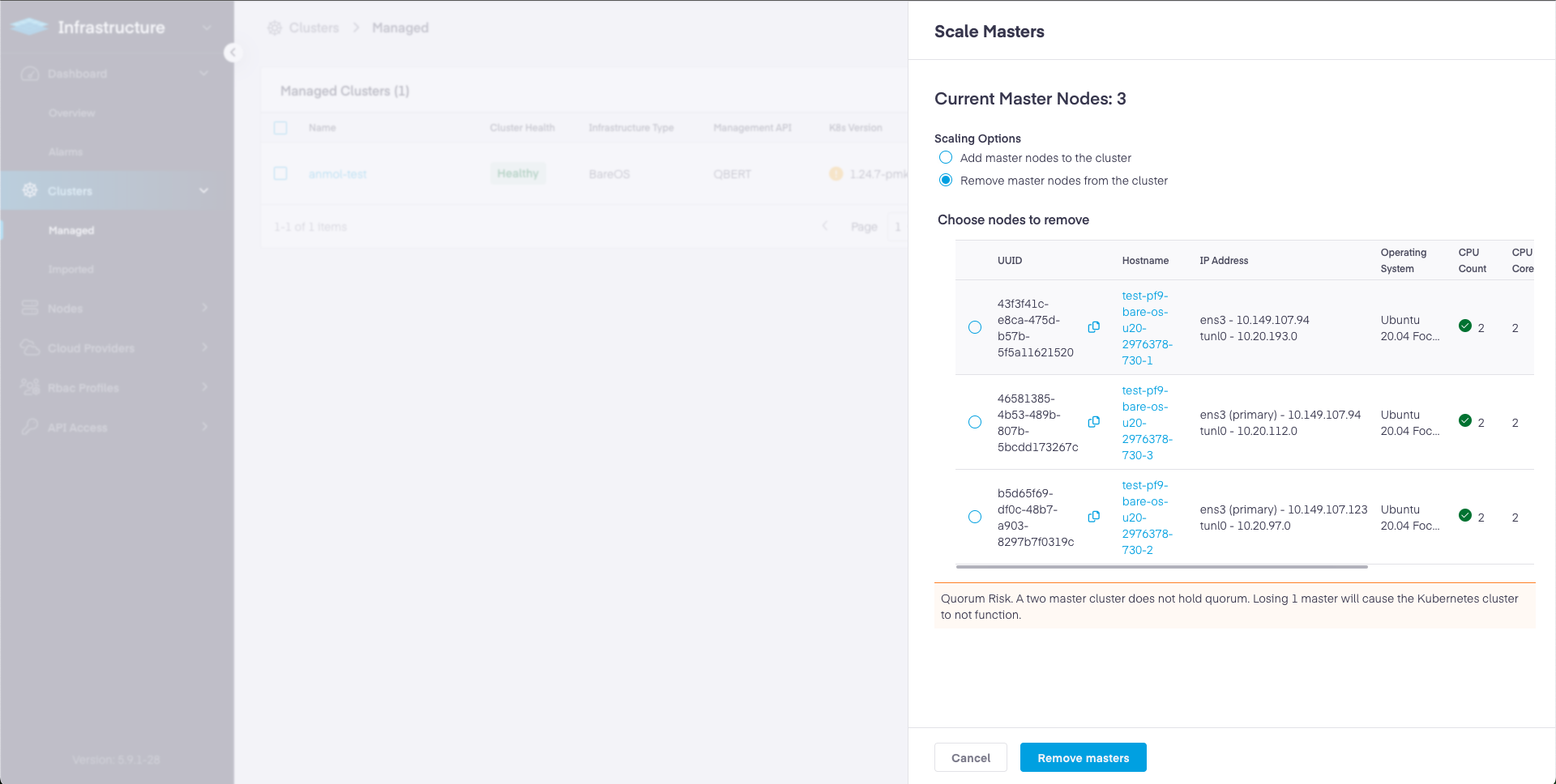

- Select Scale Masters or Scale Workers.

- Select the node or nodes to detach from the cluster.

- Click Remove. The selected nodes are detached and are no longer part of the Kubernetes cluster. If a removed node is a master node, this step also removes it as an etcd member.

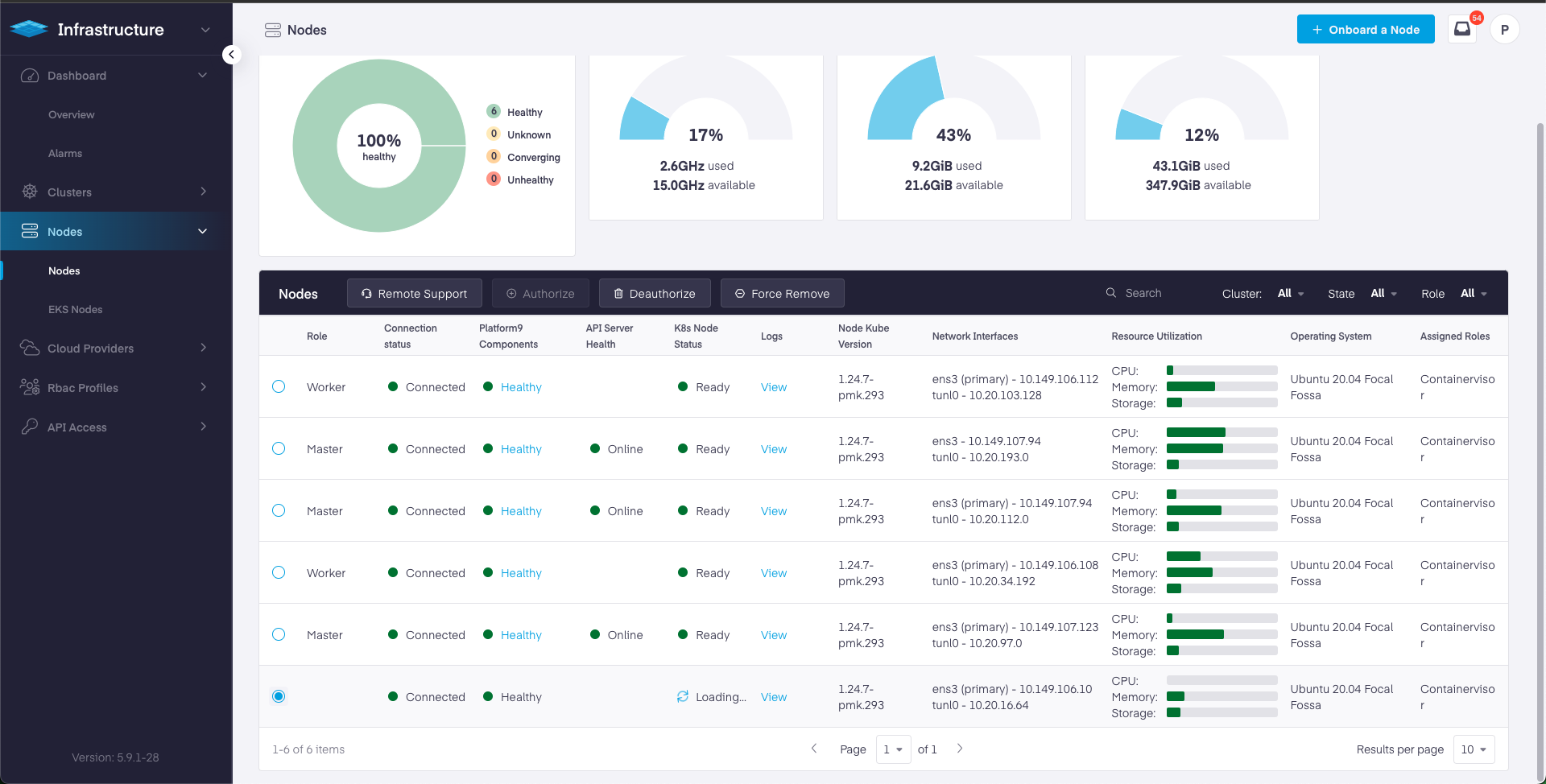

- Next, deauthorize the node. Go to Infrastructure > Nodes; the detached node no longer has a role associated with it, as shown. Select the node, click Deauthorize Node, and confirm.

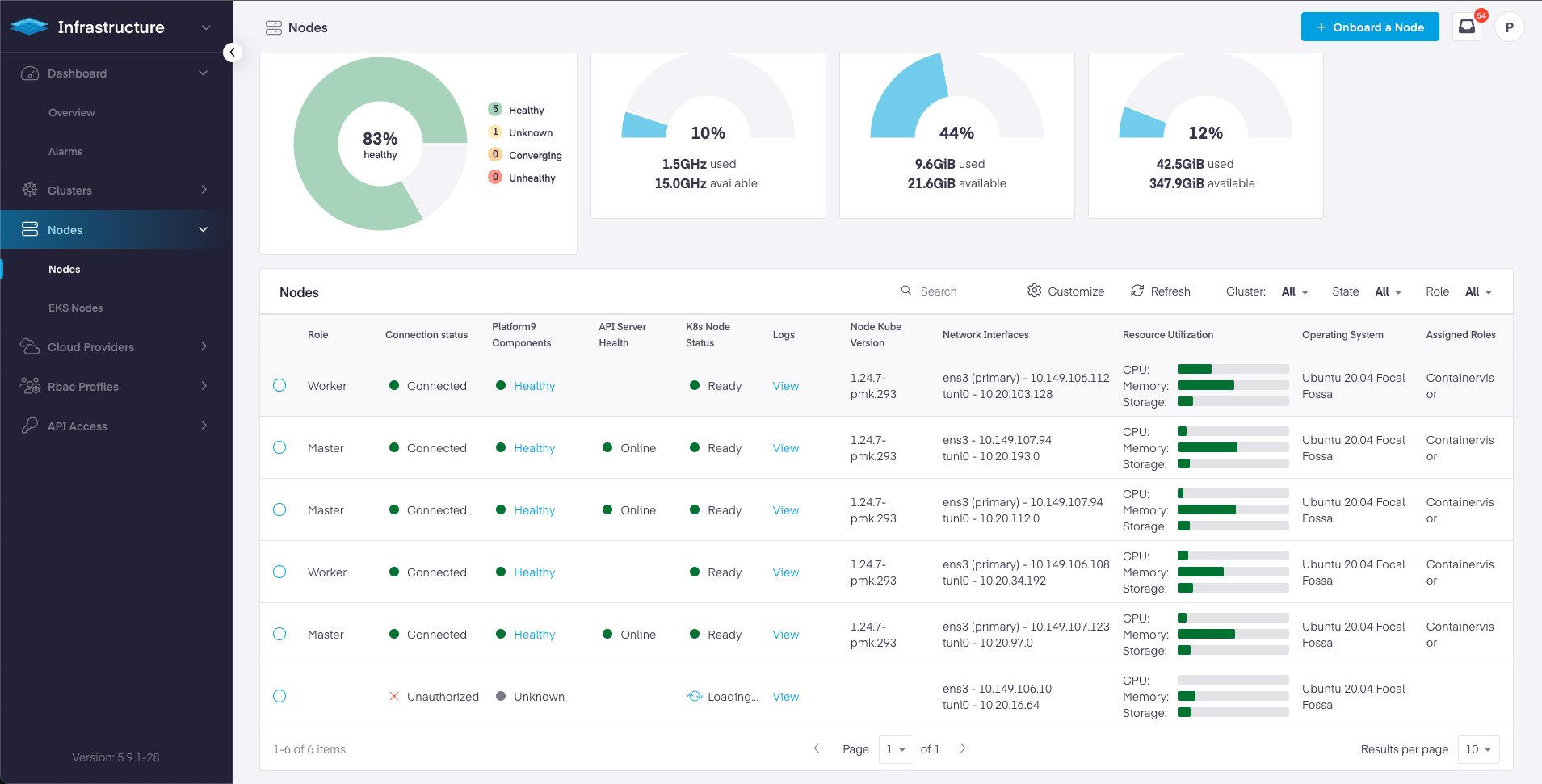

- Wait for the node to be deauthorized. Once it is deauthorized, its connection status changes to unauthorized.

- The deauthorized node continues to appear in the UI until the Platform9 packages are removed from the node. It also cannot be used with another cluster in this Management Plane until it is authorized again.
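To confirm from the Kubernetes side that the Remove step took effect, one option is to check that the node is no longer listed by kubectl get nodes. This is a minimal sketch that assumes kubectl is configured for the cluster and that a detached node's Node object is deleted from the cluster; node_registered is a hypothetical helper name.

```shell
# Succeed (exit status 0) if NODE is still registered in the Kubernetes cluster.
# Assumes kubectl is configured for the cluster; node_registered is a hypothetical helper.
node_registered() {
    # "kubectl get nodes -o name" prints one "node/<name>" line per node;
    # -x and -F require an exact, literal match of the whole line.
    kubectl get nodes -o name | grep -qxF "node/$1"
}
```

Usage: $ node_registered <node-name> && echo "still in cluster" || echo "not in cluster"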

Node Cleanup to Re-use with Another Management Plane

To clean up the nodes completely, so that they stop appearing in the current Management Plane UI and can be reused with another Platform9 Management Plane, run the pf9ctl decommission command on each node. Once decommissioning succeeds, onboard the node to register it with the new Management Plane.

- Before decommissioning a node, make sure that it has been detached from the cluster and deauthorized as described in the previous section.

Managing Node Loss

If a node has lost connectivity to the Management Plane because of a hardware failure, networking issue, or similar problem, is deemed unfit, and is no longer required to be part of the Kubernetes cluster or Management Plane, use the Force Remove action to remove it from the Management Plane. Go to Infrastructure > Nodes and select the node's radio button.

Using Force Remove

The Force Remove action deletes the node's entry from the Platform9 Management Plane, and the node stops appearing in the Management Plane UI. Use Force Remove when a node has been lost due to a hardware failure, networking issue, or similar problem and is no longer required with the existing Management Plane.

Note that if the node comes back up and its Platform9 host agent (pf9-hostagent) can connect to the Management Plane again, the node reappears in the Management Plane UI. In that case, clean up the node and then force remove it from the Management Plane.

Note: If the lost node was a master node, it must also be removed from the etcd member list of the Kubernetes cluster it belonged to. Run the following steps on one of the remaining master nodes:

- Run etcdctl member list to see the current members:

$ sudo /opt/pf9/pf9-kube/bin/etcdctl --cacert="/etc/pf9/kube.d/certs/etcdctl/etcd/ca.crt" --cert="/etc/pf9/kube.d/certs/etcdctl/etcd/request.crt" --key="/etc/pf9/kube.d/certs/etcdctl/etcd/request.key" member list
511694188e7264c2, started, a1e79a43-6559-4a34-9c0d-23935ec82096, https://192.168.10.233:2380, https://192.168.10.233:2379, false
630d956b52d2cefe, started, 8426dd3c-1e77-4b5e-a4d3-0ad31b206d2a, https://192.168.10.125:2380, https://192.168.10.125:2379, false
941ffa2c658ad414, started, 5cc7b74b-88c0-45ab-9525-cb9b17812ae6, https://192.168.10.181:2380, https://192.168.10.181:2379, false

- The 1st column shows the member ID of each node and the 3rd column shows the member name. Find the node that needs to be removed and pass its member ID to etcdctl member remove. For example, to remove the member at 192.168.10.233:

$ sudo /opt/pf9/pf9-kube/bin/etcdctl --cacert="/etc/pf9/kube.d/certs/etcdctl/etcd/ca.crt" --cert="/etc/pf9/kube.d/certs/etcdctl/etcd/request.crt" --key="/etc/pf9/kube.d/certs/etcdctl/etcd/request.key" member remove 511694188e7264c2

Manual Node Cleanup

Manual node cleanup may be required if pf9ctl decommission fails.
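Before running the manual cleanup commands in this section, it can be useful to see which of the directories they delete are still present on the node. The sketch below checks only the fixed directories from those commands (not globs such as /tmp/* or /var/log/messages-*) and, on the live filesystem, whether dpkg still reports pf9-hostagent as installed. The function name pf9_leftovers and its optional ROOT argument (a path prefix, for example a mounted disk) are hypothetical.

```shell
# Print the Platform9-related paths removed by the manual cleanup commands that still exist.
# Optional $1 is a hypothetical ROOT prefix; it defaults to the live filesystem ("").
pf9_leftovers() {
    root="${1:-}"
    for path in /opt/pf9 /etc/pf9 /var/opt/pf9 /var/log/pf9 /var/spool/mail/pf9 \
                /opt/cni /etc/cni /var/lib/docker \
                /run/containerd /var/lib/containerd /var/lib/nerdctl; do
        if [ -e "$root$path" ]; then
            printf '%s\n' "$path"
        fi
    done
    # The package check only applies to the live system (no ROOT prefix).
    if [ -z "$root" ] && command -v dpkg-query >/dev/null 2>&1 &&
        dpkg-query -W -f='${Status}' pf9-hostagent 2>/dev/null | grep -q 'install ok installed'; then
        printf '%s\n' "package: pf9-hostagent"
    fi
}
```

Usage: $ pf9_leftovers

Empty output means none of the checked paths remain and the package is not installed.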

Worker Node

- To completely remove Platform9 components, such as the host agent, from the node, run the following commands as root on the node:

$ apt remove -y pf9-hostagent && rm -rf /opt/pf9 && rm -rf /etc/pf9 && rm -rf /var/opt/pf9 && rm -rf /var/log/pf9 && rm -rf /tmp/* && rm -rf /var/spool/mail/pf9 && rm -rf /opt/cni/bin/* && rm -rf /etc/cni && rm -rf /var/log/messages-* && rm -rf /var/lib/docker && apt-get clean && rm -rf /opt/cni
$ rm -rf /run/containerd/ && rm -rf /var/lib/containerd/ && rm -rf /var/lib/nerdctl

Master Node

In the event of a master loss, clean up the node that was used as a master node with the following steps:

- Make sure that the master node is detached from the cluster and deauthorized, following the previous section.

- If not already done, remove the master node from the etcd cluster by running the following command on the master node, replacing <member-ID> with the node's member ID (the 1st column of the etcdctl member list output):

$ sudo /opt/pf9/pf9-kube/bin/etcdctl --cert /etc/pf9/kube.d/certs/etcdctl/etcd/request.crt --cacert /etc/pf9/kube.d/certs/etcdctl/etcd/ca.crt --key /etc/pf9/kube.d/certs/etcdctl/etcd/request.key member remove <member-ID>

- To completely remove Platform9 components, such as the host agent, from the node, run the following commands as root on the node:

$ apt remove -y pf9-hostagent && rm -rf /opt/pf9 && rm -rf /etc/pf9 && rm -rf /var/opt/pf9 && rm -rf /var/log/pf9 && rm -rf /tmp/* && rm -rf /var/spool/mail/pf9 && rm -rf /opt/cni/bin/* && rm -rf /etc/cni && rm -rf /var/log/messages-* && rm -rf /var/lib/docker && apt-get clean && rm -rf /opt/cni
$ rm -rf /run/containerd/ && rm -rf /var/lib/containerd/ && rm -rf /var/lib/nerdctl
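The etcdctl member remove commands in this document take the member ID from the 1st column of the etcdctl member list output. The sketch below looks that ID up from the list output, either by member name (the 3rd column, which in the sample output in the etcd section is a UUID) or by the host address in the peer URL (the 4th column). It assumes the default comma-separated output format of etcdctl member list; etcd_member_id is a hypothetical helper name.

```shell
# Read "etcdctl member list" output on stdin and print the member ID (1st column)
# of the member whose name (3rd column) equals KEY or whose peer URL (4th column)
# points at host KEY. etcd_member_id is a hypothetical helper.
etcd_member_id() {
    awk -F', ' -v key="$1" '$3 == key || index($4, "//" key ":") { print $1 }'
}
```

Usage: $ sudo /opt/pf9/pf9-kube/bin/etcdctl --cacert="/etc/pf9/kube.d/certs/etcdctl/etcd/ca.crt" --cert="/etc/pf9/kube.d/certs/etcdctl/etcd/request.crt" --key="/etc/pf9/kube.d/certs/etcdctl/etcd/request.key" member list | etcd_member_id 192.168.10.233

Matching on "//" and ":" around the address avoids matching a prefix such as 192.168.10.12 against 192.168.10.125.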