PMK Release 5.6 Release Notes

The Platform9 Managed Kubernetes (PMK) version 5.6 release is now available with support for Kubernetes 1.22 and 1.23. The 5.6 release aims to improve the user experience with our brand new Cluster API (CAPI) based lifecycle management for AWS and EKS clusters (in beta). This release also adds our brand new open-source project Arlon (also in beta), which bridges the gap between workload and infrastructure management with a single unified architecture. This release continues Platform9's commitment to open source by contributing new products and features with deep Kubernetes integration and by building Platform9 on open-source technologies.

All clusters running Kubernetes 1.20 must be upgraded to Kubernetes 1.21 (PMK 5.5) before upgrading to Kubernetes 1.22 (PMK 5.6).
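The rule above follows from upgrading one Kubernetes minor version at a time. As a minimal sketch of that reasoning, the hypothetical helper `upgrade_hops` (not part of pf9ctl or PMK) lists every minor version a cluster passes through, assuming versions are given as `major.minor`:

```shell
# upgrade_hops is a hypothetical helper, not a Platform9 tool.
# It prints each minor version on the way from the current version to the
# target, assuming upgrades proceed one minor version at a time.
upgrade_hops() {
  current="$1"; target="$2"
  major="${current%%.*}"   # e.g. "1" from "1.20"
  minor="${current#*.}"    # e.g. "20" from "1.20"
  last="${target#*.}"      # e.g. "22" from "1.22"
  if [ "${target%%.*}" != "$major" ] || [ "$minor" -gt "$last" ]; then
    echo "unsupported path: $current -> $target" >&2
    return 1
  fi
  hops="$major.$minor"
  while [ "$minor" -lt "$last" ]; do
    minor=$((minor + 1))
    hops="$hops -> $major.$minor"
  done
  echo "$hops"
}

upgrade_hops 1.20 1.22   # prints: 1.20 -> 1.21 -> 1.22
```

A cluster on 1.20 therefore needs the intermediate 1.21 (PMK 5.5) step before it can reach 1.22 (PMK 5.6).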

Kubernetes 1.20 has reached End of Life as of 2022-02-28. New clusters should be built on 1.23.

Kubernetes 1.21 has reached End of Life as of 2022-06-28. New clusters should be built on 1.23.

PMK 5.6.0 Release Highlights (Released 2022-09-23)

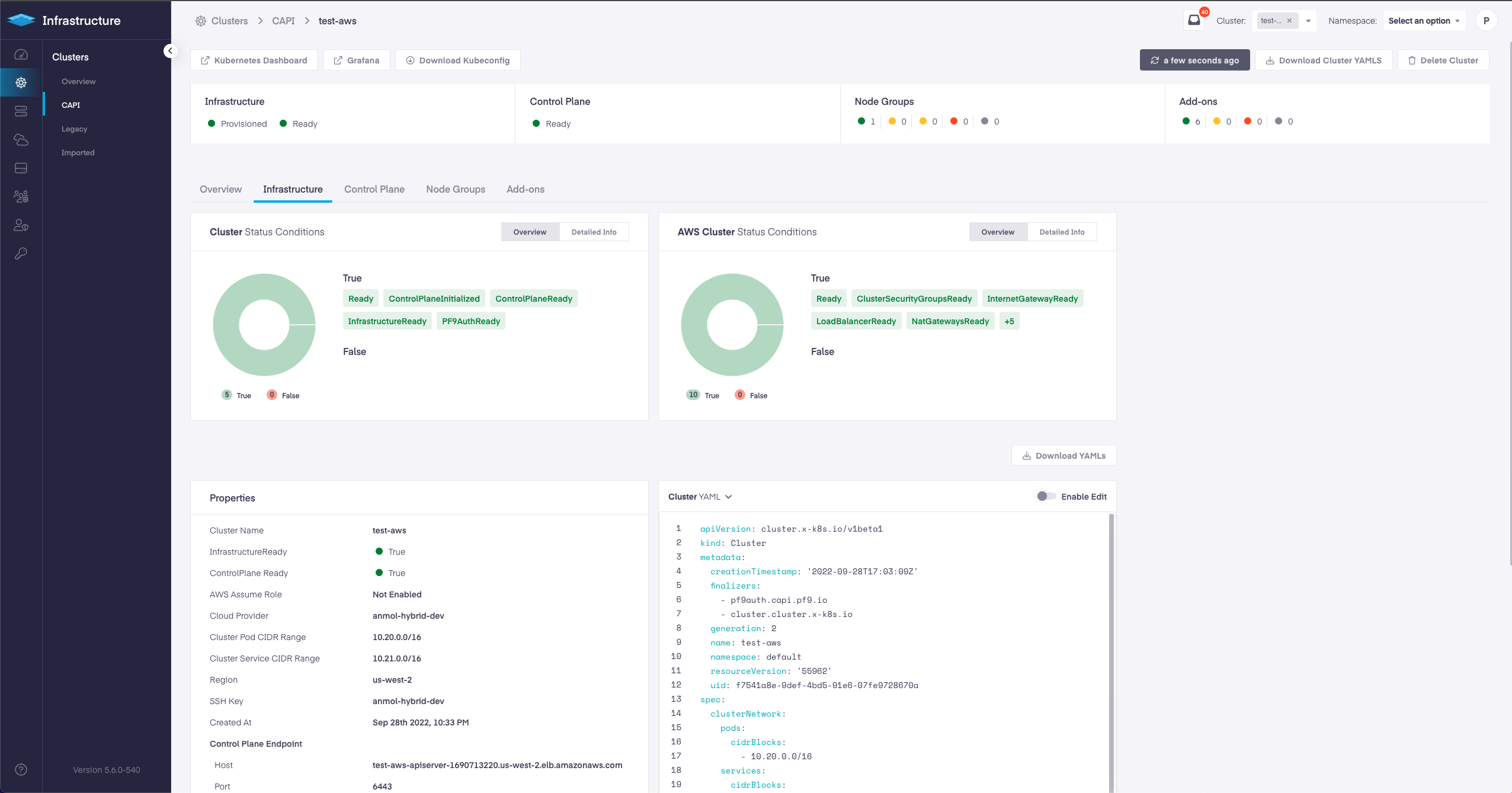

AWS and EKS Cluster Lifecycle management based on Kubernetes Cluster API (In Beta)

We believe open source is the present and the future, and as part of that commitment the Platform9 5.6 release brings AWS and EKS cluster lifecycle management using Cluster API. You get a better way to create native Kubernetes clusters on AWS EC2. You can also create, manage, update, and upgrade Kubernetes clusters in PMK using Amazon Elastic Kubernetes Service (EKS).

The AWS and EKS Kubernetes cluster creation and management using Cluster API is a beta feature in PMK 5.6. We are actively working on making this feature GA over our next few releases.

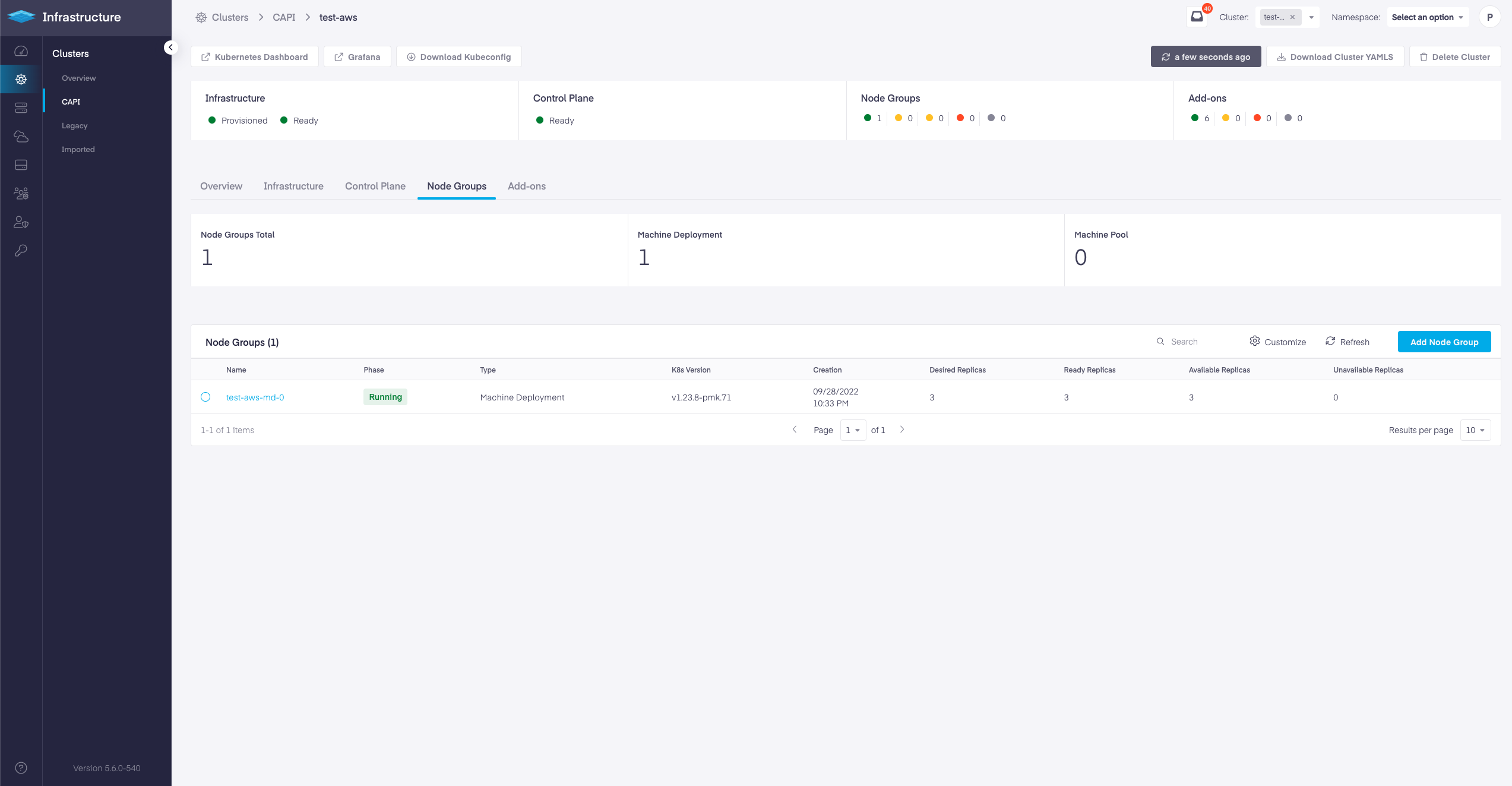

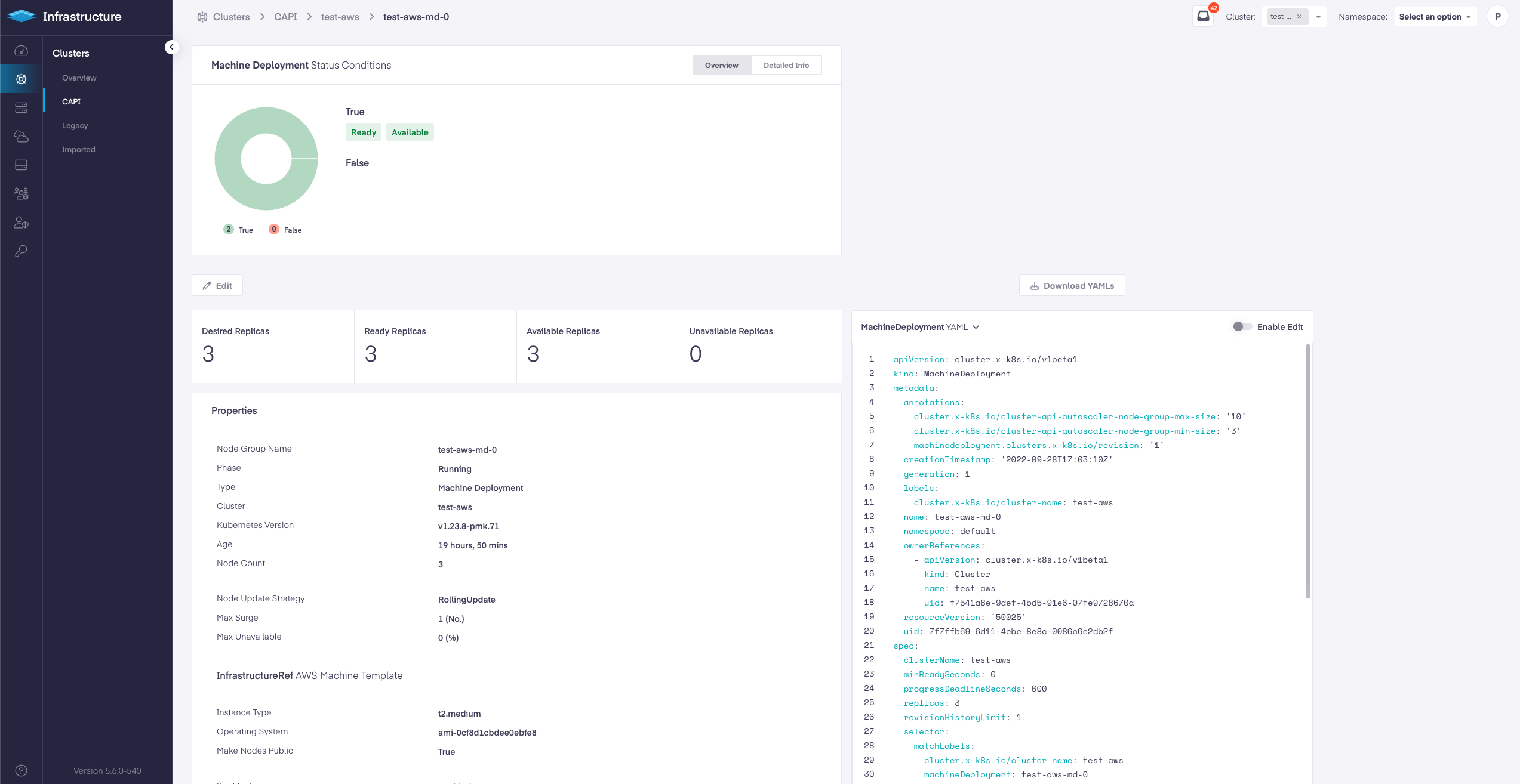

With the 5.6 release, we have added Worker Node Groups for CAPI-based AWS and EKS clusters. Some of the features enabled with Node Groups are:

- Create multiple node groups.

- Edit node groups.

- Enable Auto-scaling.

- Configure to use Spot Instances.

- Select Availability Zones.

- Add bulk labels and taints.

- Configure node group update strategy.

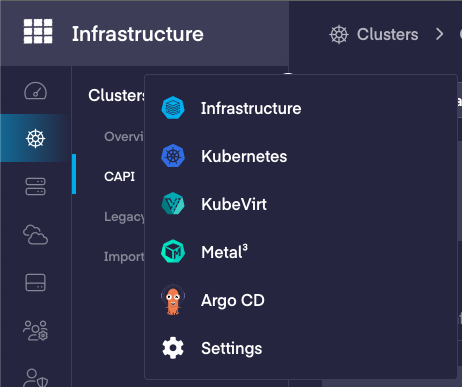

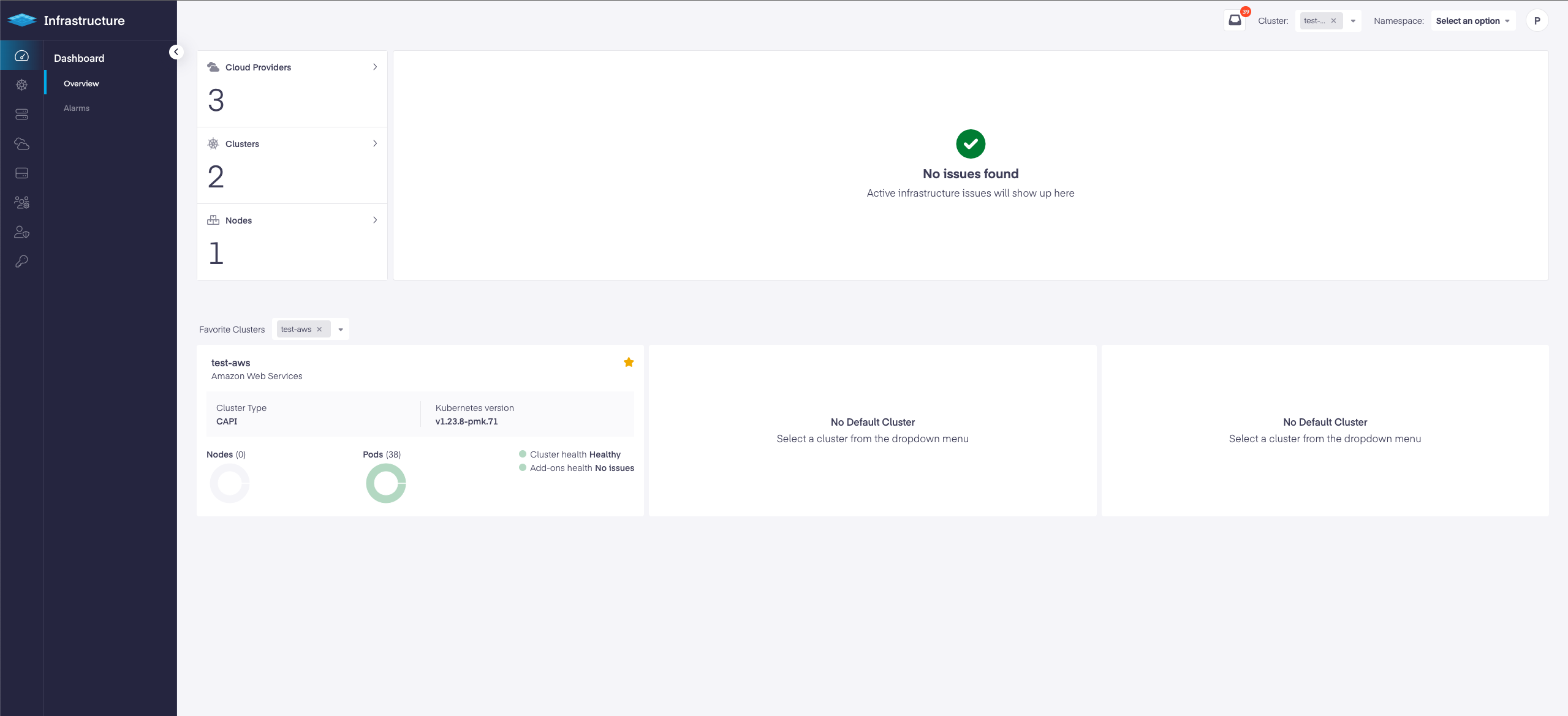

Infrastructure space in App Switcher

The new Infrastructure space in the App Switcher aims to simplify infrastructure management for our users. The new dedicated space helps PMK Administrators create and manage different types of clusters and associated resources such as Cloud Providers, Nodes, and RBAC Profiles.

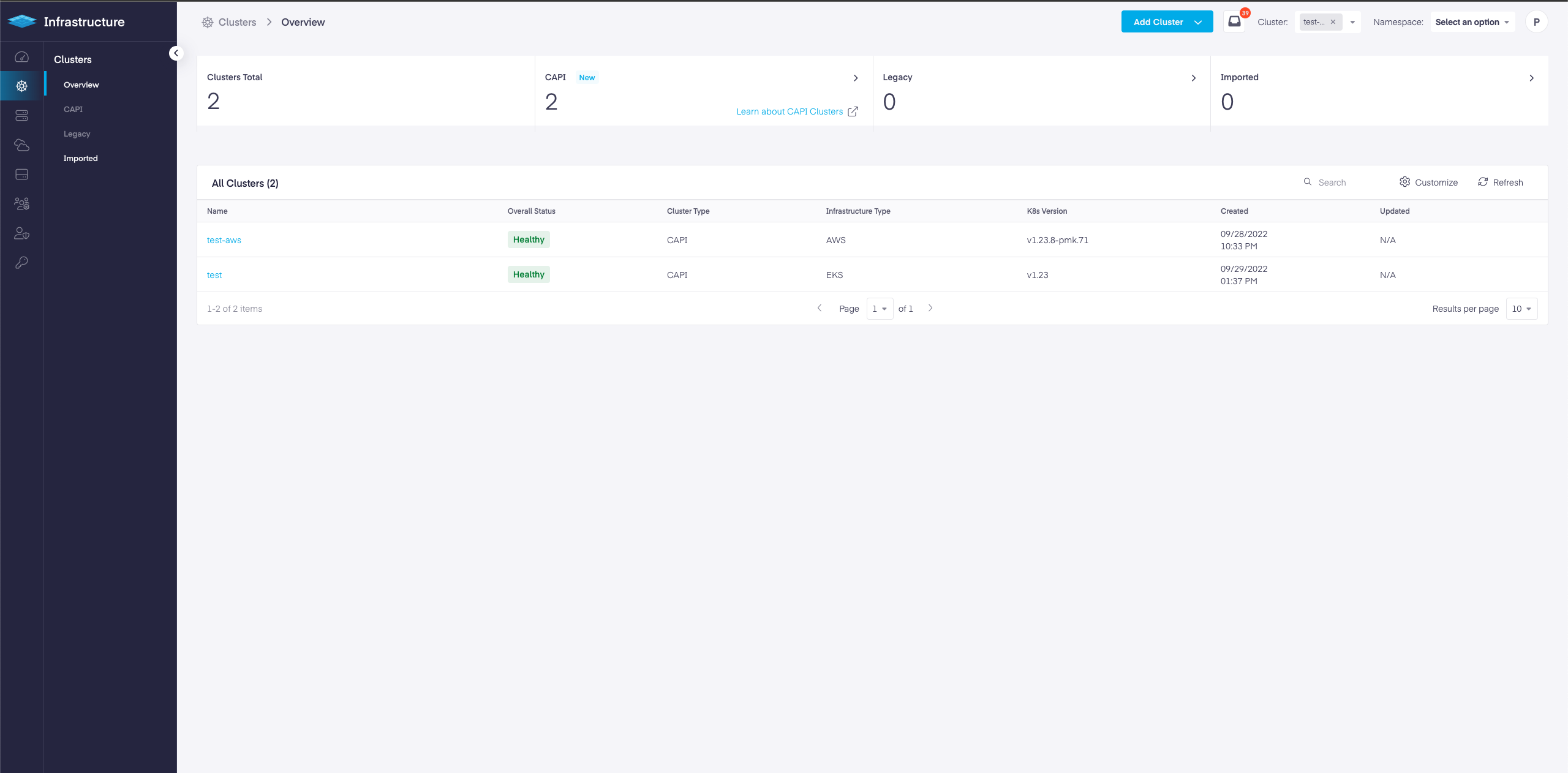

Dedicated Cluster pages for different types of clusters

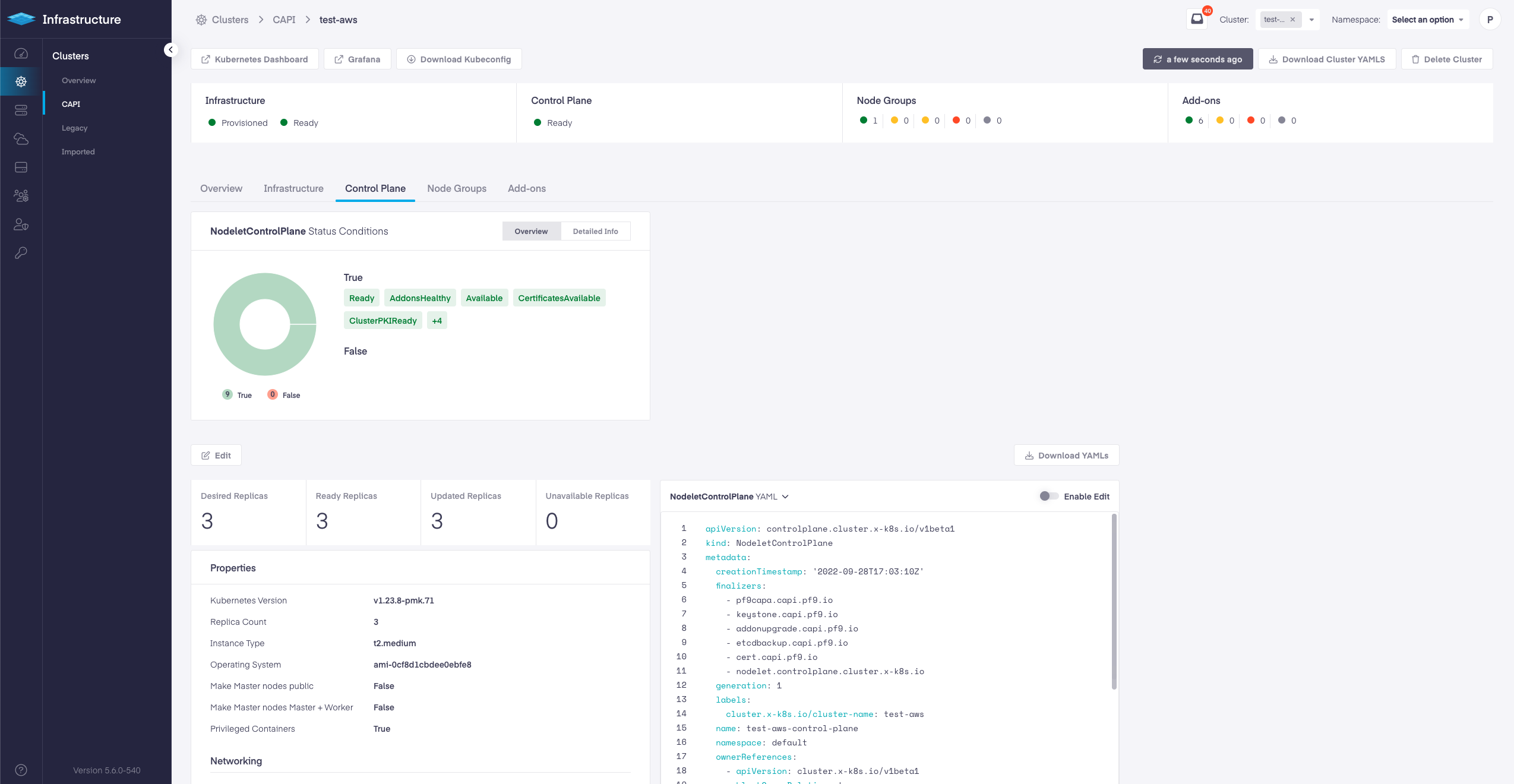

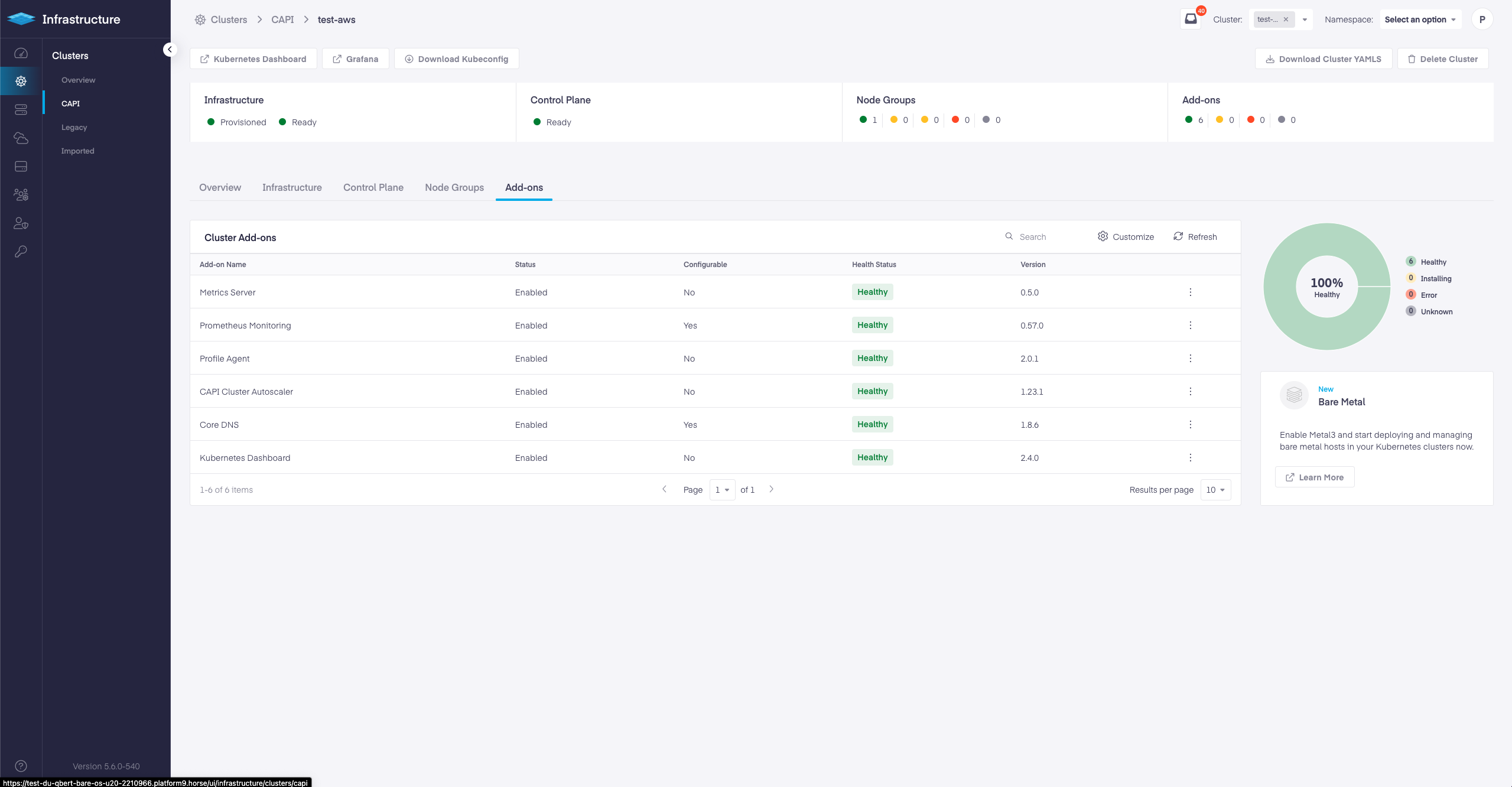

New CAPI Cluster Dashboards

RHEL 8.5 and 8.6 support for BareOS clusters

PMK has added support for Red Hat Enterprise Linux (RHEL) 8.5 and 8.6 for BareOS clusters. This is supported only for Kubernetes 1.22 and 1.23 clusters.

Platform9 CLI

The 1.18 pf9ctl release is now available and can be installed by running the following command.

bash <(curl -sL https://pmkft-assets.s3-us-west-1.amazonaws.com/pf9ctl_setup)

Profile Engine with Arlon (In Beta)

Starting with the PMK 5.6 release, Platform9 has expanded its Profile Engine capabilities by adding support for open source Arlon. Arlon is an open source, declarative, policy-driven framework for scalable management of Kubernetes cluster upgrades and security updates using the principles of GitOps and Infrastructure as Code (IaC). Arlon is built using open source ArgoCD and Cluster API.

The Arlon integration into PMK is still a beta feature in PMK release 5.6. We are actively working on making this feature GA in upcoming PMK releases.

Arlon integration with PMK in 5.6 does two things:

- Arlon 0.3.0 is deployed as a fully managed add-on along with PMK.

- Platform9 has released ArlonCTL, a new command line utility to create Arlon profiles, and create EKS clusters, using the managed Arlon add-on that ships with PMK.

For more information on Arlon and its integration with PMK, read Profile Engine with Arlon.

Enhancements & Updates

- CAPI - Clusters provisioned and managed by Platform9 Cluster API Integration.

- Legacy - Clusters provisioned and managed by Platform9 Qbert API.

- Imported - Clusters provisioned externally from managed services such as EKS, AKS, and GKE and imported into the Platform9 SaaS Management Plane.

Note that the Docker runtime will be removed in upcoming versions, and users are strongly advised to move to the containerd runtime.

Bug Fixes

Package Updates

The following packaged components have been upgraded in the latest Kubernetes version, v1.23.8:

| Component | Version |
|---|---|
| CALICO | 3.23.5 |
| CORE-DNS | 1.8.6 |
| METRICS SERVER | 0.5.0 |
| METAL LB | 0.12.1 |
| KUBERNETES DASHBOARD | 0.12.1 |
| CLUSTER AUTO-SCALER AWS | 1.23.1 |
| CLUSTER AUTO-SCALER AZURE | 1.13.8 |
| CLUSTER AUTO-SCALER CAPI | 1.23.1 |
| FLANNEL | 0.14.0 |
| ETCD | 3.4.14 |
| CNI PLUGINS | 0.9.0 |
| KUBEVIRT | 0.55.0 |
| KUBEVIRT CDI | 1.51.0 |
| KUBEVIRT ADDON | 0.55.0 |
| LUIGI | 0.4.0 |
| MONITORING | 0.57.0 |
| PROFILE AGENT | 2.0.1 |
| METAL3 | 1.1.1 |

Please refer to the Managed Kubernetes Support Matrix for v5.6 to view all currently deployed or supported upstream component versions.

Known Issues

CAPI AWS & EKS clusters

Reusing the name of a deleted CAPI cluster for a new cluster leads to errors. Use unique cluster names, and avoid names that were used for clusters deleted in the past. If name reuse cannot be avoided, contact Platform9 support for possible resolution options.

Run kubectl delete node <node_name> to remove such orphaned node records from Kubernetes.

- cannot be associated to the cluster. A known bug leads to only one part of the label being used when applying it to the cluster.

As a workaround, authenticate with the ID Token.

- Download and open the kubeconfig of a cluster.

- Copy the value of the id_token field.

- In the dashboard, select "token" authentication and paste the value in the form.

Note: The dashboard does not support refreshing the token, so you lose access after the token expires (10-20 min).

To refresh the ID token, run any kubectl command with that kubeconfig. kubectl replaces the ID token in the kubeconfig with a valid one if it has expired. Then follow the steps above again.
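The copy step in the workaround above can be scripted. The following is a minimal sketch, assuming the token is stored on a single YAML line whose key is `id_token` (or `id-token`, as some kubeconfig layouts spell it); `extract_id_token` and the example path are hypothetical names used only for illustration:

```shell
# extract_id_token is a hypothetical helper, not part of kubectl or pf9ctl.
# It prints the first id_token / id-token value found in the given
# kubeconfig file, with any surrounding quotes removed.
extract_id_token() {
  sed -n 's/^[[:space:]]*id[-_]token:[[:space:]]*//p' "$1" \
    | head -n 1 \
    | tr -d "\"'"
}

# Example (the path is an assumption):
#   extract_id_token "$HOME/Downloads/my-cluster.yaml"
```

Paste the printed value into the dashboard's token form. Because the token is short-lived, run a kubectl command first if the kubeconfig has not been used recently, so that the file holds a fresh token.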

Current workarounds:

- Machine Deployment type Node Group: Scale down the affected node group to 0 and then scale it back up to desired count.

- Machine Pool type Node Group: Delete the affected node group and add a new node group to the cluster.

- Create an EKS cluster with a MachinePool / MachineDeployment replica count of 0. Once the cluster is healthy, change the replica count to the desired value.

Other known Issues

If an AWS Cloud Provider is configured to import clusters without the correct identity being added to the target cluster, Platform9 will be unable to access the cluster.

Note that if you used a Cloud Provider to register an EKS, AKS, or GKE cluster that was created with IAM user credentials that no longer have access to that cluster, Platform9 will fail with a 401 unauthorized error until the IAM user is given access to the cluster.

View the EKS documentation here to ensure the correct access has been provisioned for each imported cluster: https://aws.amazon.com/premiumsupport/knowledge-center/amazon-eks-cluster-access/

Current workaround is to delete /var/lib/docker manually.

ArgoCD as a Service

Metal³

Platform9 Managed Bare Metal With Metal³ Release Notes

KubeVirt

Platform9 Managed KubeVirt 5.6 Release Notes

PMK 5.6.8 Patch Update (Released 2023-06-01)

The upgrade path from PMK v5.6.8 to PMK v5.7.1 is not yet available and will be delivered in the upcoming PMK v5.7.2 patch. Users looking to upgrade to PMK v5.7.1 immediately should upgrade the DU directly from PMK v5.6.4 to PMK v5.7.1.

gp3 with default Throughput: 125 and Iops: 3000. For custom throughput/IOPS, users can use the API with payload options ebsVolumeThroughput/ebsVolumeIops. Follow the documentation here: https://platform9.com/docs/kubernetes/migrate-aws-qbert-gp2-volumes-to-gp3
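As an illustration of the two payload options named above, the sketch below prints a JSON fragment containing only those fields. The helper name `gp3_payload_fragment`, the flat JSON shape, and the use of bare numbers are assumptions; the endpoint and the rest of the request body are not shown here and should be taken from the linked documentation.

```shell
# gp3_payload_fragment is a hypothetical helper, not a Platform9 tool.
# It prints only the two options named in the release note; the flat JSON
# layout and numeric value types are assumptions.
gp3_payload_fragment() {
  throughput="${1:-125}"   # default throughput from the release note
  iops="${2:-3000}"        # default IOPS from the release note
  case "$throughput$iops" in
    *[!0-9]*)
      echo "throughput and IOPS must be whole numbers" >&2
      return 1
      ;;
  esac
  printf '{"ebsVolumeThroughput": %s, "ebsVolumeIops": %s}\n' "$throughput" "$iops"
}

gp3_payload_fragment 250 4000
# prints: {"ebsVolumeThroughput": 250, "ebsVolumeIops": 4000}
```

Calling the helper with no arguments prints the defaults stated above (125 and 3000).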

hostname -I.