Cluster Internals

This article describes several of the services that make up a significant portion of the Platform9 components. In addition to the pf9-hostagent, pf9-comms, and pf9-nodelet services, Platform9 installs other services that together comprise a Kubernetes cluster. The Host Internals page describes these services in more detail.

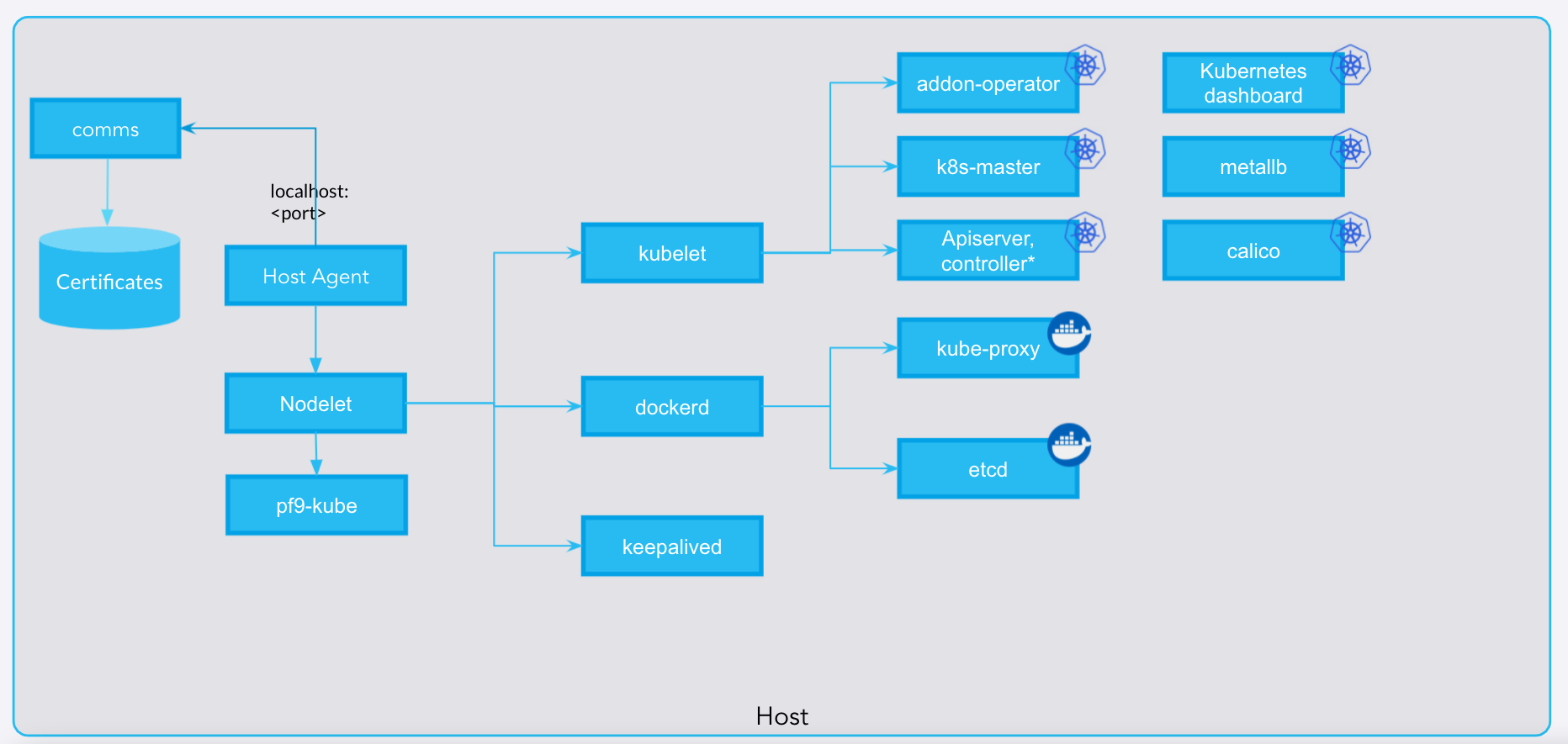

For more details on nodelet, see the Nodelet page in the PMK documentation. The diagram above gives a high-level view of the services, containers, and processes involved in creating the cluster; note the hierarchy among the components.

- pf9-hostagent: The service at the top of the hierarchy, responsible for starting nodeletd.

- nodeletd: nodeletd behaves slightly differently on master and worker nodes; the diagram shows a master configuration. On a master, nodeletd starts three primary services:

  - kubelet
  - dockerd
  - keepalived

- dockerd: The Docker daemon, which creates and runs containers.

- keepalived: Used primarily on bare metal systems to keep an IP address highly available across the different Kubernetes API servers.

- kubelet: This Kubernetes component runs as a systemd unit. It starts various containers, such as k8s-master, apiserver, controller, and scheduler. It also starts the addon-operator, which in turn launches other critical system add-ons.

- kube-proxy, etcd: Both of these components run as Docker containers.
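The parent/child relationships listed above can be pictured as a tree. The following is a minimal, hypothetical Python model for illustration only: it encodes the hierarchy as described in this section for a master node and walks it parent-before-child. It does not reflect how Platform9 actually launches or supervises these processes. The `Component` class, the `startup_order` function, and the `system-addons` placeholder are invented for this example, and kube-proxy and etcd are placed under dockerd only because the text says they run as Docker containers.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Component:
    """One node in a simplified, hypothetical model of the master-node hierarchy."""
    name: str
    children: list[Component] = field(default_factory=list)


def startup_order(root: Component) -> list[str]:
    """Return names in depth-first order, so every parent appears before its children."""
    order = [root.name]
    for child in root.children:
        order.extend(startup_order(child))
    return order


# Master-node hierarchy as described in this section (illustrative only).
master = Component("pf9-hostagent", [
    Component("nodeletd", [
        Component("kubelet", [
            Component("k8s-master"),
            Component("apiserver"),
            Component("controller"),
            Component("scheduler"),
            Component("addon-operator", [
                # Placeholder for the unnamed system add-ons the operator launches.
                Component("system-addons"),
            ]),
        ]),
        # Placed here because they run as Docker containers; which component
        # requests them is not specified in this article.
        Component("dockerd", [
            Component("kube-proxy"),
            Component("etcd"),
        ]),
        Component("keepalived"),
    ]),
])

if __name__ == "__main__":
    for name in startup_order(master):
        print(name)
```

A depth-first walk is used because it guarantees each component is listed only after the component that starts it, which mirrors the top-down hierarchy in the diagram.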

Master - Worker Connections

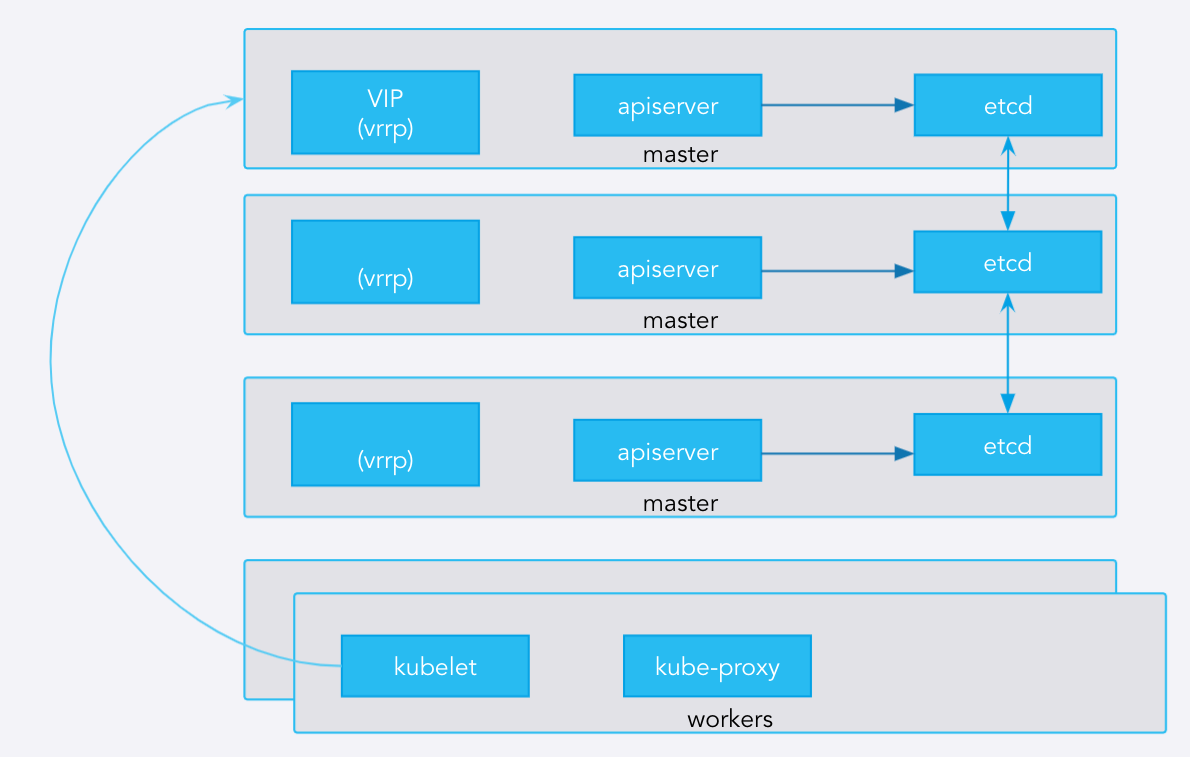

The diagram above illustrates the network connections between masters and workers.

- etcd: The etcd instances on the masters form a cluster and communicate with one another in a mesh network.

- apiserver: The apiserver on each master talks to the etcd process running locally on that same master (localhost).

- VIP: keepalived creates and maintains a virtual IP (VIP) using VRRP. Workers use the VIP to communicate with the masters. If the master currently holding the VIP fails, the VIP moves to another master node.

- kubelet: The kubelet on each worker node connects to the VIP and, through it, reaches the API server on whichever master currently holds the VIP.
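The VIP failover behavior described above can be illustrated with a toy simulation. This is a hypothetical sketch, not keepalived's implementation: it assumes each master has a VRRP priority and that preemption is enabled, so the VIP always sits on the healthy master with the highest priority. It ignores advertisement timers and breaks priority ties by node name for determinism (real VRRP breaks ties by primary IP address). The `Master` class, the `vip_owner` and `worker_target` functions, and the node names and priorities are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Master:
    """A master node in a toy VRRP model (hypothetical names and priorities)."""
    name: str
    priority: int  # VRRP priority: the higher value wins the election
    healthy: bool = True


def vip_owner(masters: list[Master]) -> Master | None:
    """Return the healthy master with the highest priority, or None if none is healthy.
    Ties are broken by name here (a simplification; VRRP uses the IP address)."""
    candidates = [m for m in masters if m.healthy]
    if not candidates:
        return None
    return max(candidates, key=lambda m: (m.priority, m.name))


def worker_target(masters: list[Master]) -> str:
    """A worker's kubelet always dials the VIP; report which master answers right now."""
    owner = vip_owner(masters)
    return owner.name if owner else "unreachable"


if __name__ == "__main__":
    masters = [Master("master-1", 150), Master("master-2", 100), Master("master-3", 50)]
    print(worker_target(masters))  # master-1 holds the VIP
    masters[0].healthy = False     # simulate master-1 failing
    print(worker_target(masters))  # the VIP has moved to master-2
```

The worker never changes the address it connects to; only the master answering at the VIP changes, which is why workers can be configured with the single VIP rather than a list of masters.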
