MongoDB with Kubernetes operator: 200 Million Inserts Per Hour Performance

by Peter Fray (Platform9) and Alessandro Catale (Comfone)

There are plenty of articles describing how to set up MongoDB with a Kubernetes operator. However, is Kubernetes a viable solution for running high-performance MongoDB use cases? In this blog, we describe a real-world application performance test showing that 200 million MongoDB document inserts per hour on Kubernetes is possible. In addition, we discuss how Kubernetes performs in such high-volume and high-performance environments.

The Comfone Use Case: International Mobile Roaming Services

Comfone’s business is to provide international roaming services to ensure that a mobile phone remains connected to a mobile network while the subscriber is abroad, or outside of the geographical coverage area of the home country network.

The mobile phone connects to a visited network (i.e. the network of a mobile operator in the visited country) which allows the subscriber to automatically make and receive voice calls, send and receive SMS and data, or access other services while traveling abroad.

For a subscriber to use a visited network while abroad, both the home and visited networks need to exchange various types of information. To do this, multiple interconnections need to exist between the home network and the visited network.

Comfone enables all the necessary interconnections between the two mobile network operators to ensure the necessary information can be exchanged. There are over 900 mobile network operators worldwide and they all need to be connected to offer roaming services to their subscribers.

The Application: Signal Statistics Generator (SSG)

The SSG gathers subscriber roaming data from locations around the world on a mobile network called SIGTRAN, aggregates it into a MongoDB database in a central location, and then performs analytics. The gathered data records, called TDRs (transaction data records), come from various roaming-related protocols.

The data is accessible to customers through Comfone’s business portal, Pulse. The gathered records are conveniently displayed in Comfone’s in-house apps such as Hawkeye, enabling customers to filter per country, send welcome SMS messages, and much more!

The MongoDB, Kubernetes and Compute Environment

An enormous volume of roaming-related data (packet-level TCP dumps) is generated and aggregated at high velocity, resulting in more than 200,000,000 MongoDB document inserts per hour. This requires highly redundant, sharded MongoDB databases to handle the volume and speed of transactions. The previous environment we set up included 16 MongoDB shards running across 52 very large virtual machines, each with 1 TB of storage. Each shard consists of 3 members (Primary – Secondary – Secondary).
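To put the hourly figure into per-second terms, here is a quick back-of-the-envelope calculation. It is only a sketch: the per-shard number assumes writes are spread evenly across all 16 shards, which the article does not state.

```python
# Back-of-the-envelope rates for the figures quoted above.
INSERTS_PER_HOUR = 200_000_000  # from the article
SHARDS = 16                     # from the article
SECONDS_PER_HOUR = 3600

inserts_per_second = INSERTS_PER_HOUR / SECONDS_PER_HOUR

# Assumption (not from the article): writes are balanced evenly across shards.
inserts_per_second_per_shard = inserts_per_second / SHARDS

print(f"{inserts_per_second:,.0f} inserts/s cluster-wide")
print(f"{inserts_per_second_per_shard:,.0f} inserts/s per shard if evenly balanced")
```

That works out to roughly 55,600 inserts per second across the cluster, or about 3,500 per shard primary under the even-balance assumption.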

Then we switched to a MongoDB on Kubernetes compute environment using Platform9. For this test we used MongoDB 4.4.4 Community Edition and Kubernetes 1.18.10. We set up a 12-node Kubernetes cluster with 3 masters and Calico as the CNI. This let us keep the whole configuration of the cluster in YAML files, which is a huge advantage from an operational perspective.

Setting up a new cluster of this size (16 shards) in a traditional VM setup would mean staging at least 52 virtual machines for a minimal setup with one router (48 shard members, 3 config servers and one router). This would take several days with our old setup, as every VM must be staged and configured via Ansible playbooks. With the configuration all handy in YAML files, setting up a new cluster is as easy as copying the existing YAML files into a new folder in the Git project, changing some variables, and deploying! This enables Comfone to set up new MongoDB Kubernetes clusters in less than an hour; the only step besides changing the YAML files is creating the volumes in our storage system.
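The count of 52 virtual machines follows directly from the topology described above. A minimal sketch of the arithmetic, using only the numbers from this article:

```python
# VM count for the traditional (non-Kubernetes) setup described above.
SHARDS = 16             # number of shards
MEMBERS_PER_SHARD = 3   # Primary, Secondary, Secondary
CONFIG_SERVERS = 3      # config database members
ROUTERS = 1             # minimal setup with a single router

shard_members = SHARDS * MEMBERS_PER_SHARD
total_vms = shard_members + CONFIG_SERVERS + ROUTERS

print(f"{shard_members} shard members + {CONFIG_SERVERS} config servers "
      f"+ {ROUTERS} router = {total_vms} VMs")
```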

Tasks like adding more memory also become easier, as our setup offers multiple ways to manage resource consumption. At the container level, there is the option to limit the memory and CPU usage per container. Adding more memory to the cluster can be achieved by adding a worker node or by adding memory to the existing workers.
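As an illustration of container-level limits, the sketch below builds a plain Kubernetes container spec fragment with `resources.requests` and `resources.limits` and prints it as JSON. The container name, image tag, and the CPU and memory values are placeholders chosen for the example, not the values we used, and an operator-managed deployment would normally set these through the operator's own resource definition rather than a raw container spec.

```python
import json


def container_with_limits(name, image, cpu, memory):
    """Return a container spec fragment whose requests equal its limits."""
    resources = {"cpu": cpu, "memory": memory}
    return {
        "name": name,
        "image": image,
        "resources": {
            # Setting requests equal to limits gives the scheduler a fixed,
            # predictable footprint for each database container.
            "requests": dict(resources),
            "limits": dict(resources),
        },
    }


# Placeholder values for illustration only.
container = container_with_limits("mongod", "mongo:4.4.4", cpu="4", memory="16Gi")
print(json.dumps(container, indent=2))
```

Choosing requests equal to limits is one possible design; lower requests with higher limits would allow more containers per worker at the cost of less predictable performance.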

Kubernetes also handles the distribution of the containers automatically. For our MongoDB deployment, however, we used Kubernetes taints on the workers to “bind” a container to a specific worker, because Kubernetes doesn’t know how MongoDB works and balances solely based on resource consumption. This was our way of closing this gap and optimizing Kubernetes resource scheduling for MongoDB.
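The idea can be sketched as follows. A taint on a worker keeps away every pod that does not carry a matching toleration; to actually pin a pod to that worker, the toleration is typically combined with a node selector or node affinity. The taint key `mongodb-shard`, the shard name, and the worker name below are hypothetical, and the snippet shows one common pattern rather than our exact configuration.

```python
import json


def taint_for(shard):
    """Taint entry for the worker reserved for this shard member (hypothetical key)."""
    return {"key": "mongodb-shard", "value": shard, "effect": "NoSchedule"}


def pod_placement(shard, node_name):
    """Pod spec fragment: tolerate the shard's taint and select the worker by hostname."""
    taint = taint_for(shard)
    return {
        # kubernetes.io/hostname is a well-known label set on every node.
        "nodeSelector": {"kubernetes.io/hostname": node_name},
        "tolerations": [
            {
                "key": taint["key"],
                "operator": "Equal",
                "value": taint["value"],
                "effect": taint["effect"],
            }
        ],
    }


# Hypothetical shard and worker names for illustration.
placement = pod_placement("shard-07", "worker-3")
print(json.dumps(placement, indent=2))
```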

MongoDB with Kubernetes: High-performance testing and monitoring

We performed an “insert test” on our MongoDB with Kubernetes environment and reached 200 million documents inserted into the database per hour, running for weeks without any manual intervention from the operations teams.
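For readers who want to try something similar, here is a minimal sketch of how an insert-rate measurement could be structured. It is not our test harness: the document layout in `make_tdr` is a placeholder (the real TDR schema is not shown here), and the example writes to an in-memory list so that it runs without a database.

```python
import time


def make_tdr(i):
    """Build a placeholder document; the real TDR schema is not given in this article."""
    return {"seq": i, "protocol": "example", "ts": time.time()}


def run_insert_test(insert_batch, total_docs, batch_size=1000):
    """Send total_docs documents in batches; return (docs_sent, docs_per_hour)."""
    sent = 0
    start = time.perf_counter()
    while sent < total_docs:
        n = min(batch_size, total_docs - sent)
        insert_batch([make_tdr(sent + k) for k in range(n)])
        sent += n
    elapsed = time.perf_counter() - start
    rate_per_hour = sent / elapsed * 3600 if elapsed > 0 else float("inf")
    return sent, rate_per_hour


# In-memory stand-in so the sketch runs without a database.
sink = []
sent, per_hour = run_insert_test(sink.extend, total_docs=10_000, batch_size=500)
print(f"sent {sent} docs, about {per_hour:,.0f} docs/hour against the in-memory sink")
```

Against a real cluster, `insert_batch` could be a small wrapper around PyMongo's `Collection.insert_many`, for example `lambda batch: collection.insert_many(batch, ordered=False)`, with several such writers running in parallel.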

During the MongoDB performance testing described above, Platform9’s Managed Kubernetes offering was used for the Kubernetes layer. Comfone provided 12 VMs for the Kubernetes test: 3 were Kubernetes master nodes and the remaining 9 were worker nodes. Each VM provided 64 GB of RAM and 24 vCPUs, which turned out to be heavily over-provisioned. Calico was used for the CNI, and NFS was the underlying storage for CSI.

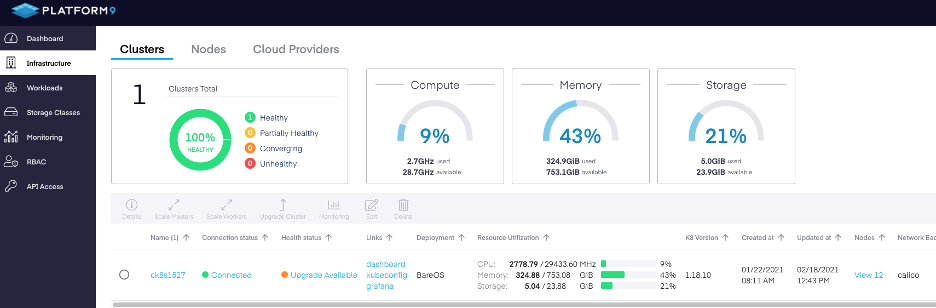

Platform9’s built-in cluster monitoring kept an eye on all the resources.

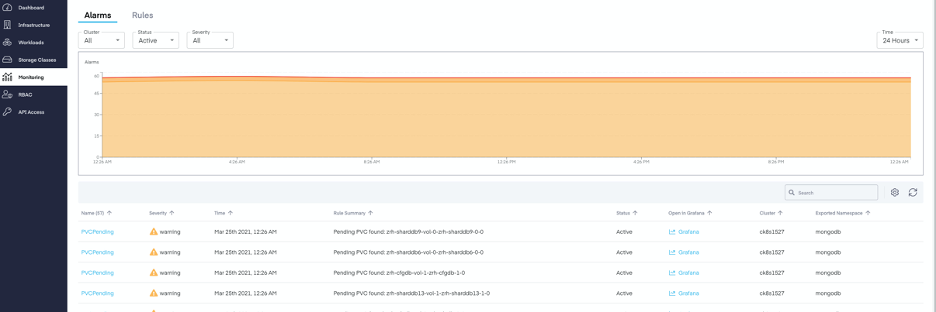

Platform9’s UI displayed useful information about current alerts in the cluster.

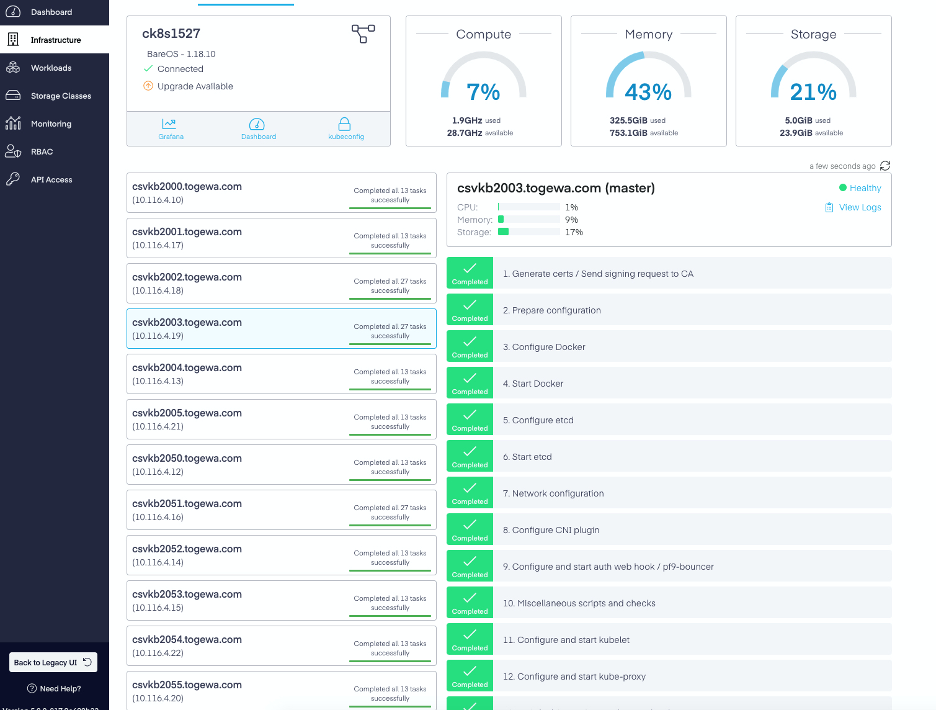

Below you can see the health of a node and the Kubernetes deployment, clearly showing the CPU and memory utilization for this node.

Conclusion

We successfully demonstrated that running MongoDB on Kubernetes is a viable solution for high-performance, real-world use cases, reaching 200 million documents inserted into the database per hour. We are planning to run a read test next with even higher performance requirements.